NVIDIA has made a significant leap in AI computing by introducing the NVIDIA HGX H200. Based on the NVIDIA Hopper architecture, this new platform features the NVIDIA H200 Tensor Core GPU, designed for generative AI and high-performance computing (HPC) workloads that process massive volumes of data, backed by advanced memory capabilities.

The H200 stands out as the first GPU to use HBM3e high-bandwidth memory, providing the faster, larger memory that generative AI and large language models (LLMs) depend on, while also advancing scientific computing for HPC workloads. It offers 141GB of memory with 4.8 terabytes per second of bandwidth: nearly double the capacity and 2.4 times the bandwidth of the NVIDIA A100.
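The capacity and bandwidth comparison is simple ratio arithmetic. The sketch below reproduces it, assuming the A100 baseline is the 80GB SXM model with its published bandwidth of about 2,039 GB/s; that baseline is an assumption, since the comparison above does not name a specific A100 variant.

```python
# Rough check of the H200-vs-A100 memory comparison.
# Assumption: the A100 baseline is the 80GB SXM part (~2,039 GB/s bandwidth).
# The H200 figures come from this article.

H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

A100_MEMORY_GB = 80          # assumed baseline: A100 80GB
A100_BANDWIDTH_TBPS = 2.039  # assumed baseline: A100 80GB SXM


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"capacity:  {capacity_ratio:.2f}x")   # 1.76x, i.e. "nearly double"
print(f"bandwidth: {bandwidth_ratio:.2f}x")  # 2.35x, about 2.4x at one decimal
```

Against the A100 80GB PCIe variant (slightly lower bandwidth) the bandwidth ratio would come out a little higher, so the exact multiple depends on which baseline is chosen.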

Systems powered by H200 from top server manufacturers and cloud service providers are expected to start shipping in the second quarter of 2024. Ian Buck, NVIDIA’s Vice President of Hyperscale and HPC, emphasizes that the H200 will significantly enhance the processing of vast data amounts at high speeds, which is essential for generative AI and HPC applications.

The Hopper architecture already delivers a notable performance improvement over previous generations, and ongoing software updates, such as the recent release of the open-source NVIDIA TensorRT-LLM library, continue to extend it. Compared with the H100, the H200 is expected to nearly double inference speed on Llama 2, a 70-billion-parameter LLM, with further gains anticipated from future software updates.
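Memory bandwidth matters for LLM inference because, when generating one token at a time at small batch sizes, the GPU must stream the model's weights from memory for every token. The sketch below estimates that bandwidth ceiling under heavily simplified assumptions: batch size 1, a hypothetical 70B-parameter model stored in FP8 (1 byte per parameter), and an H100 SXM baseline of 3.35 TB/s. Bandwidth alone yields roughly a 1.4x higher ceiling; NVIDIA's nearly-2x claim reflects its own benchmark configuration, which this toy model does not attempt to reproduce.

```python
# Back-of-the-envelope ceiling for memory-bandwidth-bound LLM decoding.
# Simplifying assumptions (not NVIDIA's methodology):
#   * batch size 1, so each generated token reads every weight from HBM once
#   * a hypothetical 70B-parameter model stored at 1 byte per parameter (FP8)
#   * KV-cache traffic, compute limits, and kernel overheads are ignored

PARAMS = 70e9          # hypothetical 70B-parameter model
BYTES_PER_PARAM = 1.0  # FP8 weights (assumption)

BANDWIDTH_BYTES_PER_S = {
    "H100 SXM": 3.35e12,  # published H100 SXM bandwidth (assumed baseline)
    "H200 SXM": 4.8e12,   # from the H200 spec table
}


def max_tokens_per_second(bandwidth: float, params: float, bytes_per_param: float) -> float:
    """Upper bound on tokens/s when each token must read every weight once."""
    weight_bytes = params * bytes_per_param
    return bandwidth / weight_bytes


ceilings = {
    gpu: max_tokens_per_second(bw, PARAMS, BYTES_PER_PARAM)
    for gpu, bw in BANDWIDTH_BYTES_PER_S.items()
}

for gpu, tps in ceilings.items():
    print(f"{gpu}: <= {tps:.1f} tokens/s")

print(f"ratio: {ceilings['H200 SXM'] / ceilings['H100 SXM']:.2f}x")
```

Real serving systems batch many requests so that one pass over the weights produces many tokens, which is why larger memory capacity (room for bigger batches and KV caches) can matter as much as raw bandwidth.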

H200 Specifications

| NVIDIA H200 Tensor Core GPU | |

|---|---|

| Form Factor | H200 SXM |

| FP64 | 34 TFLOPS |

| FP64 Tensor Core | 67 TFLOPS |

| FP32 | 67 TFLOPS |

| TF32 Tensor Core | 989 TFLOPS |

| BFLOAT16 Tensor Core | 1,979 TFLOPS |

| FP16 Tensor Core | 1,979 TFLOPS |

| FP8 Tensor Core | 3,958 TFLOPS |

| INT8 Tensor Core | 3,958 TFLOPS |

| GPU Memory | 141GB |

| GPU Memory Bandwidth | 4.8TB/s |

| Decoders | 7 NVDEC 7 JPEG |

| Max Thermal Design Power (TDP) | Up to 700W (configurable) |

| Multi-Instance GPUs | Up to 7 MIGs @16.5GB each |

| Interconnect | NVIDIA NVLink: 900GB/s PCIe Gen5: 128GB/s |

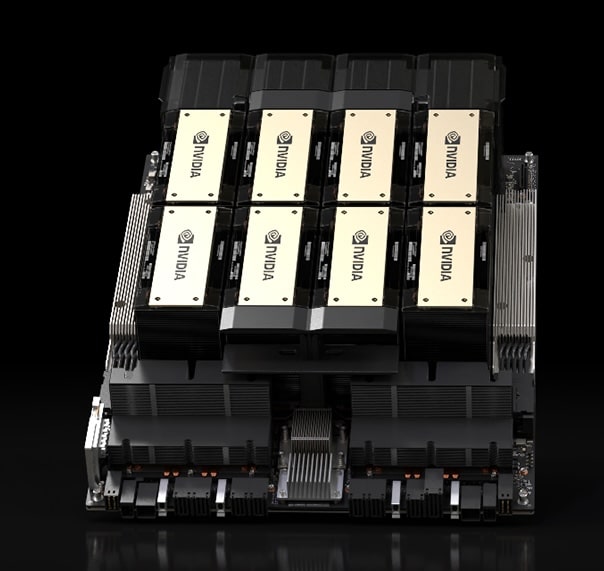

| Server Options | NVIDIA HGX H200 partner and NVIDIA-Certified Systems with 4 or 8 GPUs |
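The spec table also shows why bandwidth matters alongside raw FLOPS. In the roofline model, dividing peak compute by memory bandwidth gives the "ridge point": the arithmetic intensity (FLOPs per byte moved from memory) a kernel needs before it can use the full compute rate. This is a standard back-of-the-envelope tool rather than something NVIDIA's announcement computes; the peak numbers are copied from the table, and the helper names are illustrative.

```python
# Roofline "ridge point": the arithmetic intensity (FLOPs per byte of HBM traffic)
# at which a kernel stops being memory-bound and becomes compute-bound.
# Peak figures come from the H200 spec table. The Tensor Core values there are
# with-sparsity peaks, so dense ridge points would be roughly half as high.

BANDWIDTH_BYTES_PER_S = 4.8e12  # GPU Memory Bandwidth: 4.8TB/s

PEAK_FLOPS = {
    "FP64": 34e12,
    "FP16 Tensor Core": 1979e12,
    "FP8 Tensor Core": 3958e12,
}


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """FLOPs per byte needed to saturate compute at the given memory bandwidth."""
    return peak_flops / bandwidth


def is_memory_bound(intensity: float, peak_flops: float, bandwidth: float) -> bool:
    """True if a kernel with this FLOPs/byte intensity is limited by bandwidth."""
    return intensity < ridge_point(peak_flops, bandwidth)


for name, peak in PEAK_FLOPS.items():
    print(f"{name:>16}: ridge at {ridge_point(peak, BANDWIDTH_BYTES_PER_S):.1f} FLOPs/byte")

# An FP16 matrix-vector product does about 2 FLOPs per 2-byte weight (~1 FLOP/byte),
# far below the FP16 ridge point, so memory bandwidth limits it.
print(is_memory_bound(1.0, PEAK_FLOPS["FP16 Tensor Core"], BANDWIDTH_BYTES_PER_S))  # True
```

Low-batch LLM decoding is dominated by matrix-vector work of this kind, which is one reason the H200's bandwidth increase translates into faster inference.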

Adaptable Form Factors

NVIDIA H200 will be available in several form factors, including NVIDIA HGX H200 server boards in four- and eight-way configurations that are compatible with existing HGX H100 systems. It is also available in the NVIDIA GH200 Grace Hopper Superchip with HBM3e. We recently posted a piece on the NVIDIA GH200 Grace Hopper Superchip. These options let H200 be deployed across different data center types, including on-premises, cloud, hybrid-cloud, and edge environments.

Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure are among the first cloud service providers set to deploy H200-based instances, starting in 2024.

The HGX H200 uses NVIDIA NVLink and NVSwitch high-speed interconnects to deliver top performance across workloads, including training and inference for models with more than 175 billion parameters. An eight-way HGX H200 configuration provides about 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory, making it well suited to generative AI and HPC applications. Combined with NVIDIA Grace CPUs over the NVLink-C2C interconnect, the H200 forms the GH200 Grace Hopper Superchip with HBM3e, a module designed for large-scale HPC and AI applications.
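The eight-way board figures follow from the per-GPU specs by simple multiplication. The sketch below assumes aggregates are plain sums across GPUs (ignoring interconnect effects) and uses the with-sparsity FP8 peak from the spec table. It gives about 1.13TB of HBM3e and roughly 31.7 petaflops of FP8, close to the 32-petaflop figure NVIDIA quotes.

```python
# Aggregate figures for an eight-way HGX H200 board, derived from per-GPU specs.
# Assumption: aggregates are simple sums across GPUs (no interconnect effects).

GPUS_PER_BOARD = 8
MEMORY_GB_PER_GPU = 141
FP8_TFLOPS_PER_GPU = 3958  # with-sparsity peak from the spec table


def board_totals(gpus: int, memory_gb: float, fp8_tflops: float) -> tuple[float, float]:
    """Return (total HBM in TB, total FP8 compute in PFLOPS) for one board."""
    total_memory_tb = gpus * memory_gb / 1000
    total_fp8_pflops = gpus * fp8_tflops / 1000
    return total_memory_tb, total_fp8_pflops


memory_tb, fp8_pflops = board_totals(GPUS_PER_BOARD, MEMORY_GB_PER_GPU, FP8_TFLOPS_PER_GPU)
print(f"HBM3e: {memory_tb:.3f} TB")       # 1.128 TB, quoted as 1.1TB
print(f"FP8:   {fp8_pflops:.1f} PFLOPS")  # 31.7 PFLOPS
```

Passing `gpus=4` gives the corresponding totals for the four-way board.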

NVIDIA’s full-stack software support, including the NVIDIA AI Enterprise suite, enables developers and enterprises to build and accelerate applications ranging from AI to HPC. The NVIDIA H200 will be available from global system manufacturers and cloud service providers starting in the second quarter of 2024, marking a new era in AI and HPC capabilities.
