Brian recently hosted a podcast with Jason Adrian, the co-chair of the OCP storage project and the hardware lead at Azure, where they discussed the future of storage solutions. The main topic of discussion was the versatile E1.S form factor. Based on that conversation, if you aren’t excited about the promise of this technology, you probably should be.

What is E1.S?

The E1.S form factor (loosely known as a “ruler”) came out a few years ago, mainly to give hyperscalers some flexibility regarding performance and density inside a tiny form factor (slightly larger than an M.2 drive). Now, it’s looking to make a broader impact on the compute and storage markets.

At the start, the E1.L (a much larger “ruler” form factor) was being used. While it offered tens of terabytes of capacity, it didn’t hold up well in the performance category. M.2 drives were being leveraged for performance workloads, but they also have limitations, mostly when it comes to thermals and serviceability. The early days of ruler SSDs also suffered from too many physical sizes.

While E1.L will have a place for capacity storage, it will mostly be relegated to very large-scale deployments that need the density that the long ruler offers. For most others, including the enterprise, E1.S will be the right blend of capacity and performance. Generally speaking, E1.S is in the lead as the next SSD form factor that will replace U.2 in the data center. There are other contenders, but none has advanced with as much widespread support as E1.S.

The Different Sizes of E1.S

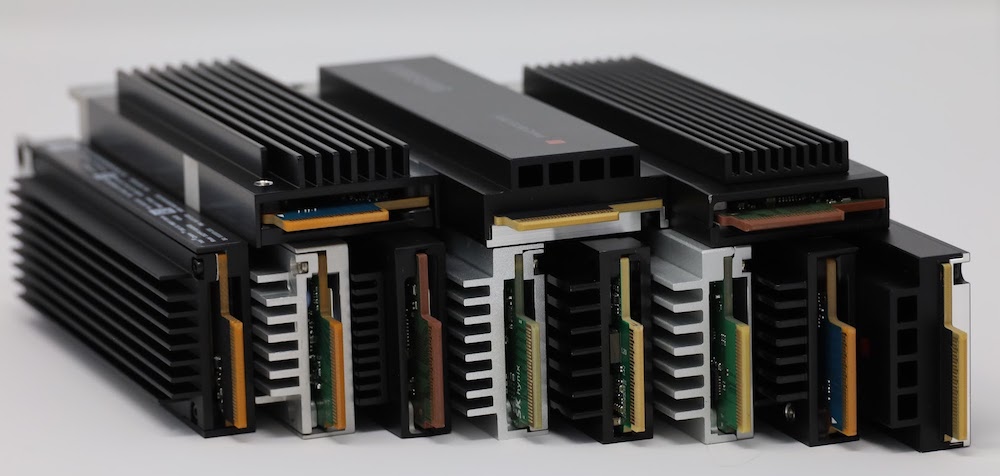

Back in 2019, there were many different sizes of the E1.S form factor. They were either too large (creating real-estate problems for cloud servers) or unable to reach top performance due to the massive heatsink requirements. The E1.S is also meant to last more than one generation.

What ultimately came through was a plan by many hyperscalers to standardize the short ruler on a 15mm size (the same z-height as U.2), so that the OEMs could easily pick up and benefit as well. This standardization also makes it much easier for the SSD vendors. Instead of creating and supporting several short ruler solutions, they can now lock in on just one form factor, outside of specific clouds that may order enough volume to warrant additional sizes.

The size benefits of E1.S are critical. Currently, you can really only fit 10 U.2 drives in a 1U server chassis without going to midplanes or other heroic efforts. With the E1.S, server designers can fit 24 front-mounted drives in a 1U system, all of which are hot-swappable with light IDs, enterprise features that both OEMs and hyperscalers want. Ultimately, E1.S allows for a much denser 1U server that has an amazing performance profile.
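To put those numbers in perspective, here is a minimal Python sketch of the density arithmetic. The per-1U drive counts (10 for U.2, 24 for E1.S) come from the paragraph above; the number of usable rack units is a hypothetical value chosen only for illustration, and the function names are our own.

```python
# Drive counts per 1U chassis, as cited in the article.
U2_DRIVES_PER_1U = 10
E1S_DRIVES_PER_1U = 24


def drives_per_rack(drives_per_1u: int, usable_rack_units: int) -> int:
    """Total front-mounted drives if every usable rack unit holds one 1U server."""
    return drives_per_1u * usable_rack_units


def density_gain(new_per_1u: int, old_per_1u: int) -> float:
    """Ratio of drive slots per 1U between two form factors."""
    return new_per_1u / old_per_1u


# ASSUMPTION: 40 usable rack units is an illustrative figure, not from the article.
usable_units = 40
print("U.2 drives per rack: ", drives_per_rack(U2_DRIVES_PER_1U, usable_units))
print("E1.S drives per rack:", drives_per_rack(E1S_DRIVES_PER_1U, usable_units))
print("Slots per 1U gain:   ", density_gain(E1S_DRIVES_PER_1U, U2_DRIVES_PER_1U))
```

Under that assumed rack, the same space goes from 400 drive slots to 960, a 2.4x increase in slots per 1U. Real deployments will vary with power, cooling, and networking constraints.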

Though the E1.S specification features five different size options, they all use the same PCB length and connector. This means compatibility is a non-issue. The only difference between the versions is the heatsink size, which determines the level of performance each can sustain. For example, for organizations that just want a high-capacity boot drive or an SSD for general use, the E1.S features two non-cased versions that can be embedded on the motherboard. The only downside is that they are not easily hot-pluggable and cannot be used for high-performance workloads unless you attach a heatsink.

The other three specifications (9.5mm, 15mm, and 25mm) are meant for the more demanding and high-performance use cases. The 9.5mm, which is meant for a front-serviceable server or storage system, is the highest-density solution of the three, though temperatures will start to rise fairly high as workload intensity increases.

To avoid potential throttling, the 15mm or 25mm variants are the best solution in these cases. And of course, 15mm takes the lead from a design standpoint because that’s a z-height server vendors (and customers) are comfortable with.

Why Hasn’t E1.S/L Been Adopted Yet?

The E1 form factor isn’t a new technology. Even though it has been around for the past few years (Supermicro released a few systems with support for the form factor), it still hasn’t been widely adopted. Why is this?

For E1.L, this mainly comes down to need. There aren’t many people who are looking to buy twenty or so 16-32TB SSDs and put them into a single system. While this is certainly needed in the hyperscale space, the vast majority of organizations in the enterprise sector have no use for something of this scale, so there isn’t enough need to create demand. E1.L is a hyperscale-centric solution. We saw a similar lack of adoption even with U.2 SSDs: the 30TB SKUs simply weren’t selling into the enterprise.

Today, the OEM space has a range of different system sizes: 1U, 2U, 4U, blades, and more. So, there will have to be a lot of incentive to move to a brand-new form factor. For example, slot incompatibility means enterprises will need adapters to move drives between systems, which is not an effective way to run a server, from either a cost or a management standpoint. Dell, for instance, tried making 1.8″ SSDs a thing, which never took off. However, as cloud organizations start bringing in more volume of E1.S form factor drives (which will consist of 16TB models and higher in the near future), OEMs will likely have more motivation to start broadly adopting the new drive tech.

Dell, HPE, and others are starting to contribute to this adoption, which helps to unify, rather than fragment, the market. Don’t be surprised to see system configurations that really make sense to enterprises, which might help them look past just U.2.

Thankfully, the front-facing backplane is the only primary component that needs to be developed, as much of the back end will stay the same, albeit a bit more power might be needed. There’s also a big design opportunity for major OEMs that are waiting for a PCIe Gen5 refresh before moving to E1.S. Future server designs can eliminate much of the need for cabling: SSD connections can go directly to the board, so server design can be greatly simplified.

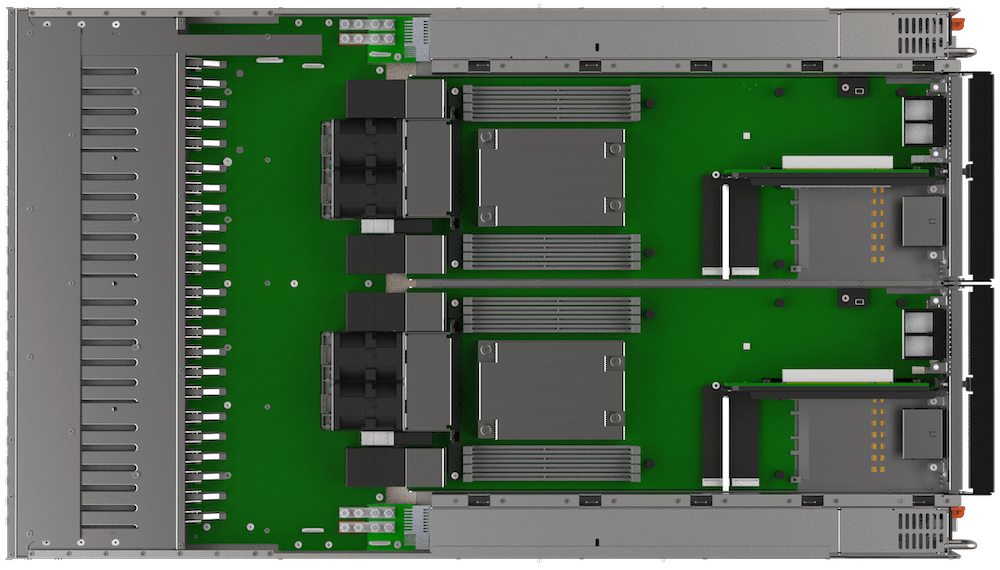

There’s no need to wait, though; more dynamic system builders like Viking are doing this already. The accompanying image shows a rendering of their NVMe E1.S server with the lid off. The SSDs in this case connect to a drive plane that presents to the twin servers in the back. This design is highly elegant: there’s not a single cable in the system.

E1.S vs M.2

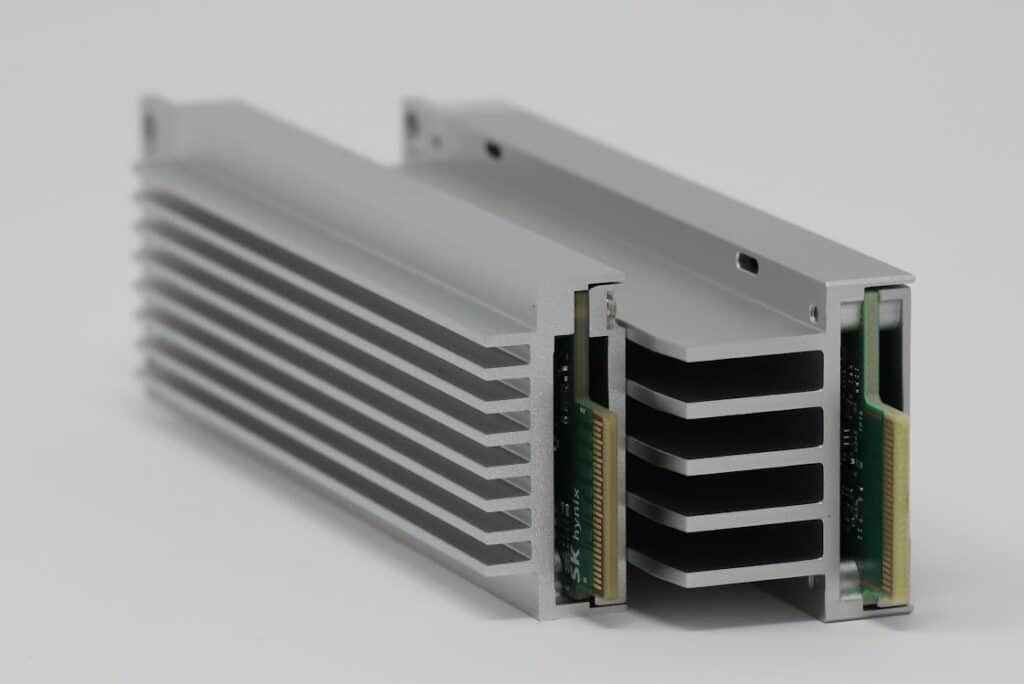

There are three important differences between the two form factors: thermal protection capabilities, capacity, and ease of use. While M.2 can be used in hyperscale data centers, it certainly is not the most effective type of configuration. Adapters, heatsinks, and thermal interface materials are all required to keep temperatures low enough for the drives to reach the desired performance.

With Gen3 M.2 SSDs, you likely need a carrier card to keep the drives cool, though some workloads are manageable without one. But with Gen4 and the eventual emergence of Gen5, drive wattage increases significantly (from 8W all the way to 15-20W), which means you can’t just vertically stack M.2 drives close together. You most definitely need to attach some type of heatsink to each drive, making server real estate an issue.
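As a rough illustration of why that wattage jump matters, the sketch below multiplies the per-drive figures quoted above (8W, 15W, 20W) by a drive count to get the total heat the chassis has to remove. The drive count of 24 is an assumption, borrowed from the 1U E1.S figure earlier in the article purely to give a sense of scale; the function name is hypothetical.

```python
def total_drive_power(drive_count: int, watts_per_drive: float) -> float:
    """Aggregate SSD power draw, which is roughly the heat the chassis must dissipate."""
    return drive_count * watts_per_drive


# Per-drive wattages quoted in the article: 8W rising to 15-20W with newer PCIe generations.
WATTS_PER_DRIVE = {"8W class": 8, "15W class": 15, "20W class": 20}

# ASSUMPTION: 24 drives is an illustrative count, not a claim about any specific server.
drive_count = 24

for label, watts in WATTS_PER_DRIVE.items():
    print(f"{label}: {total_drive_power(drive_count, watts)} W across {drive_count} drives")
```

Going from 8W to 20W per drive takes the same set of drives from 192W to 480W of storage power alone, which is why per-drive cooling stops being optional.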

E1.S, on the other hand, has a built-in heatsink and thermal interface material, so no extra intervention is needed to maintain the desired performance. This means it does not have to be throttled for performance to keep temperatures down, which is extremely important as organizations want to take full advantage of potential performance. The technology is now available to completely leverage Gen4 (CPUs, networking ports), and the E1.S 15mm form factor is able to handle it.

There’s also a huge difference in drive management. Replacing a carrier card in your server (or an M.2 drive embedded on the board itself) is often a long and annoying process. There’s generally no online serviceability, meaning downtime cannot be avoided.

First, you have to power down the system, and completely remove it from the server rack. You then have to pull out the card from the back, remove the heatsink from the M.2 drive, assemble the new SSD (including thermal interface material and the heat sink), add it back in the slot, and turn on the server. You are essentially doing an entire system installation to simply swap out a single M.2 drive. For the hyperscale space, they want all maintenance to be done efficiently and online. Enterprises can certainly benefit from this as well.

The E1.S form factor makes it simple: because the drives are mounted at the front of the system, replacing one is an ordinary drive swap. You can even have a front-facing boot or caching drive (which was previously tucked away inside the system) to keep virtually everything hot-serviceable.

From a cost perspective, companies can save a lot of time and money. The bigger your server environment is, the more you will likely benefit from E1.S due to the sheer density improvements.

One of the more nuanced features of the E1.S is the green/amber LEDs built into the SSD, something that was missing from some other form factors. It also features a mounting location for a latching mechanism, though the latches themselves will need to be added by the customer. Interestingly enough, Samsung proposed an open-source option for a tool-less latch to OCP Storage last year.

Ultimately, E1.S will allow for more interesting server builds. For example, we have a Supermicro system in the StorageReview lab that consists of half-blade modules that house only three drive slots: a SATA boot drive and twin NVMe SSDs. This is fine for the type of workloads it is meant for; however, with E1.S, this tiny server blade could easily fit six to eight drives compared to the current three-drive build. This has the potential to dramatically change what you can do from a storage standpoint.

So Then, is M.2 Dead?

One of the bigger topics discussed was whether or not the M.2 connector will be going away from new systems. Jason believes that, as far as OEM and enterprise are concerned, it certainly will. And it may happen sooner than you might think: broad-based adoption may only be 18 months away.

During Brian and Jason’s discussion, PCIe Gen5 was mentioned as the inflection point with both E1 and E3 form factors for OEM and data centers. It will also be interesting to see if this will happen in the client space and workstation/high-end desktop market as well, though it’s a bit more complicated for these markets, so it will likely take a bit longer to fully adopt the new form factor.

Conclusion

It has been almost two years since OCP ratified the 15mm form factor, and there are already eight different vendors building drives to this spec. This means you will see more high-density servers and storage systems that will set new bars in IOPS density with a huge focus on serviceability. More specifically, Jason says the 15mm variant of the E1.S form factor will allow for some incredible performance numbers with its 25W+ capabilities when using the upcoming PCIe Gen5 SSDs. Simply put, we’re only one more server generation away from this new technology. Now is the time to start getting serious about planning to implement E1.S in your data center.
