Supermicro has announced the latest additions to its GPU-optimized solutions based on the AMD Instinct MI350 series. The company expects the updates to deliver higher performance, greater scalability, and better power efficiency. Supermicro designed the new system for organizations that need the high-end performance of AMD Instinct MI355X GPUs but must operate in an air-cooled environment.

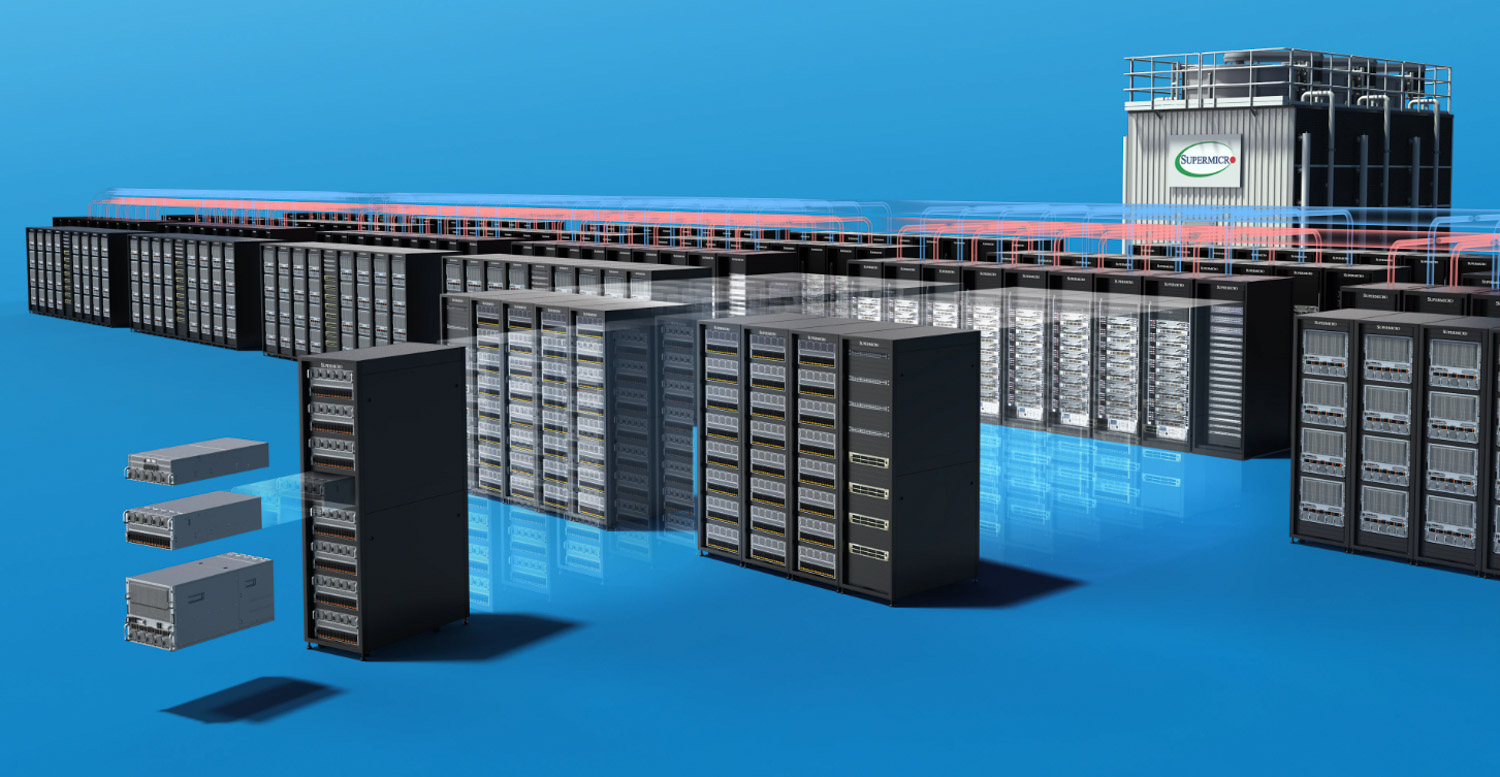

Vik Malyala, SVP of Technology and AI at Supermicro, highlighted the company’s extensive experience delivering high-performance AI and HPC solutions. He emphasized that Supermicro’s Data Center Building Block Solutions (DCBBS) enable rapid integration of AMD solutions, deploying advanced technology alongside proven data center infrastructure. The new air-cooled AMD Instinct MI355X GPU systems further expand the company’s AI product portfolio, giving customers more options for building next-generation data centers.

Expanded Liquid-Cooled and Air-Cooled Servers

Supermicro is expanding its high-performance lineup with new 10U air- and liquid-cooled servers built around the industry-standard OCP Accelerator Module (OAM). These GPU servers provide 288GB of HBM3e memory and 8TB/s of memory bandwidth per GPU, and raise GPU TDP from 1000W to 1400W, delivering up to double the performance of the air-cooled 8U MI350X system. Adding the 10U option to the MI355X-powered GPU server lineup enables higher performance per rack in both air- and liquid-cooled configurations.

AMD announced its collaboration with Supermicro to introduce air-cooled AMD Instinct MI355X GPU systems, designed to boost AI performance within existing data center infrastructure. Travis Karr, corporate vice president of business development for AMD’s Data Center GPU Business, said the partnership aims to lead in performance and efficiency while delivering advanced AI and high-performance computing solutions worldwide.

These GPU solutions are designed to deliver maximum performance for AI and inference at scale for cloud service providers and enterprises. The expanded portfolio of Supermicro accelerated AI servers with AMD Instinct MI350 series GPUs showcases next-generation data center solutions built on Supermicro’s DCBBS architecture and AMD’s latest 4th Gen CDNA architecture, once again bringing advanced AI solutions to market first.

The Supermicro 10U server with AMD Instinct MI355X GPUs is currently shipping.

New AI Factory Cluster Solutions

Supermicro announced new turnkey solutions to streamline deployment and speed up AI factory setup. Based on NVIDIA Enterprise Reference Architectures and Blackwell GPUs, these fully integrated rack-scale clusters include NVIDIA software and Spectrum-X Ethernet networking, and are validated and delivered as complete solutions. Supermicro’s DCBBS further simplifies building new data centers or retrofitting existing ones as AI factories, reducing both lead times and the amount of vendor coordination required.

Supermicro CEO Charles Liang highlighted the company’s leadership in rapidly delivering new GPU technologies, specifically AI infrastructure based on NVIDIA GB300 NVL72 and HGX B300. He emphasized that these advances are key to democratizing AI across industries, with the AI factory serving as the foundation for transforming companies into AI enterprises. Liang noted that, through its collaboration with NVIDIA and its Data Center Building Block Solutions, Supermicro helps customers accelerate and streamline AI deployment, achieving industry-leading time-to-online.

Supermicro offers AI factory solutions using NVIDIA-certified systems with Blackwell GPUs, optimized for performance and efficiency in environments constrained by power, cooling, and space. These solutions feature Spectrum-X networking and are built on NVIDIA Enterprise Reference Architectures for RTX PRO 6000 Blackwell Server Edition GPUs and HGX B200.

AI Factory Clusters

Preconfigured as scalable units, Supermicro AI factory cluster solutions are available in small, medium, and large configurations, ranging from 4 nodes with 32 GPUs to 32 nodes with 256 GPUs.

The clusters are tested up to L12 (cluster level) at Supermicro’s global sites and ship with NVIDIA AI Enterprise, Omniverse, Run:ai, Spectrum-X Ethernet, and full cabling, providing a plug-and-play solution ready to generate tokens from day one. Supermicro also offers storage solutions for every stage of the AI data pipeline, supporting the NVIDIA AI Data Platform and AI workflows within the AI factory.

Supermicro is currently taking orders for AI factory cluster solutions in 4-, 8-, and 32-node configurations with NVIDIA RTX PRO 6000 Blackwell Server Edition or NVIDIA HGX B200 GPUs, optimized for AI and HPC workloads at any scale.

Universal AI, HPC, and visual computing clusters feature NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs and are built on Supermicro’s 4U and 5U PCIe GPU systems. With 8 GPUs per node in a 2-8-5-200 (CPU-GPU-NIC-Bandwidth) configuration, these clusters support AI inference, enterprise HPC, and graphics and rendering workloads, allowing enterprises to run multiple applications on shared infrastructure.
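To make the per-node notation concrete, here is a minimal Python sketch that parses a CPU-GPU-NIC-Bandwidth string such as 2-8-5-200 and scales it to the 4-, 8-, and 32-node sizes mentioned above. The helper names (`NodeConfig`, `parse_node_config`, `cluster_totals`) are hypothetical, not part of any Supermicro or NVIDIA tool. The sketch assumes every node in a cluster uses the same configuration, counts only node-level components (no switches), and does not interpret the bandwidth field, since its units are not stated in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    """Hypothetical container for one node's CPU-GPU-NIC-Bandwidth fields."""
    cpus: int
    gpus: int
    nics: int
    bandwidth: int  # the "Bandwidth" field; units not given in the article


def parse_node_config(code: str) -> NodeConfig:
    """Parse a dash-separated string such as '2-8-5-200' into four integer fields."""
    parts = code.split("-")
    if len(parts) != 4:
        raise ValueError(f"expected 4 dash-separated fields, got {code!r}")
    cpus, gpus, nics, bandwidth = (int(p) for p in parts)
    return NodeConfig(cpus, gpus, nics, bandwidth)


def cluster_totals(cfg: NodeConfig, nodes: int) -> dict:
    """Scale per-node component counts linearly (assumes identical nodes)."""
    return {
        "nodes": nodes,
        "cpus": cfg.cpus * nodes,
        "gpus": cfg.gpus * nodes,
        "nics": cfg.nics * nodes,
    }


if __name__ == "__main__":
    cfg = parse_node_config("2-8-5-200")
    for nodes in (4, 8, 32):
        print(cluster_totals(cfg, nodes))
```

At 8 GPUs per node, the 4-node and 32-node sizes work out to 32 and 256 GPUs, matching the range quoted for the small and large configurations.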

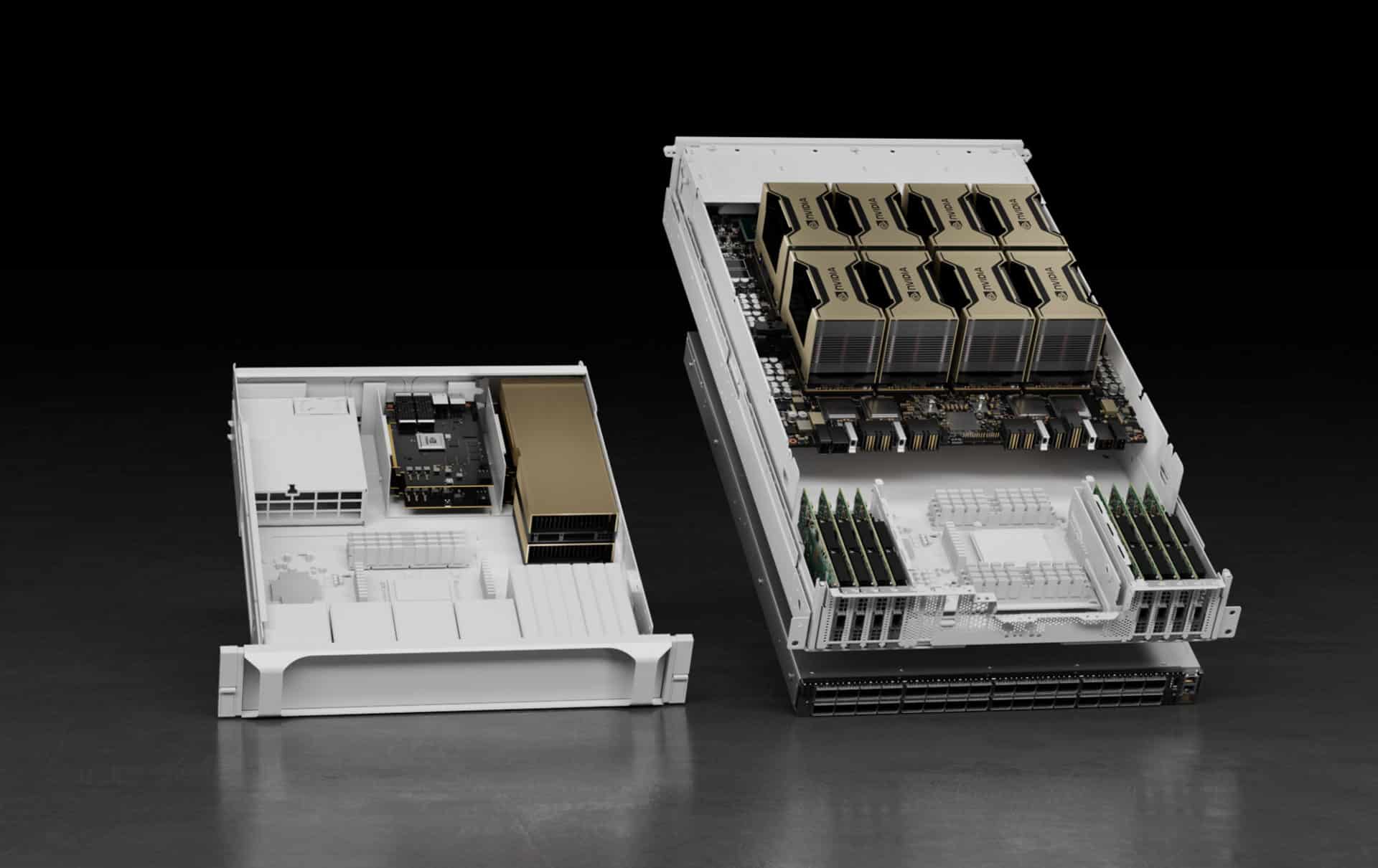

High-performance AI and HPC clusters with NVIDIA HGX GPUs are based on Supermicro’s 10U modular GPU platforms. Each node includes an 8-GPU NVIDIA HGX B200 system and uses NVIDIA NVLink for high-bandwidth GPU-to-GPU communication. These clusters are optimized for AI model training and fine-tuning, as well as HPC workloads.

For more information on Supermicro AI Factory cluster solutions, visit the Supermicro AI Factory website.