VAST Data has partnered with Microsoft to offer the VAST AI Operating System (AI OS) natively on Microsoft Azure. This integration aims to create a high-performance, scalable AI infrastructure in the cloud, focusing on agentic AI and production model workflows.

The VAST AI OS will run directly on Azure’s infrastructure, allowing businesses to utilize familiar Azure tools, governance, security, and billing. The goal is to achieve unified management, consistent performance, and Azure-level reliability while extending VAST’s on-premises features into Azure’s GPU-accelerated environment.

VAST Services as a Native Azure Offering

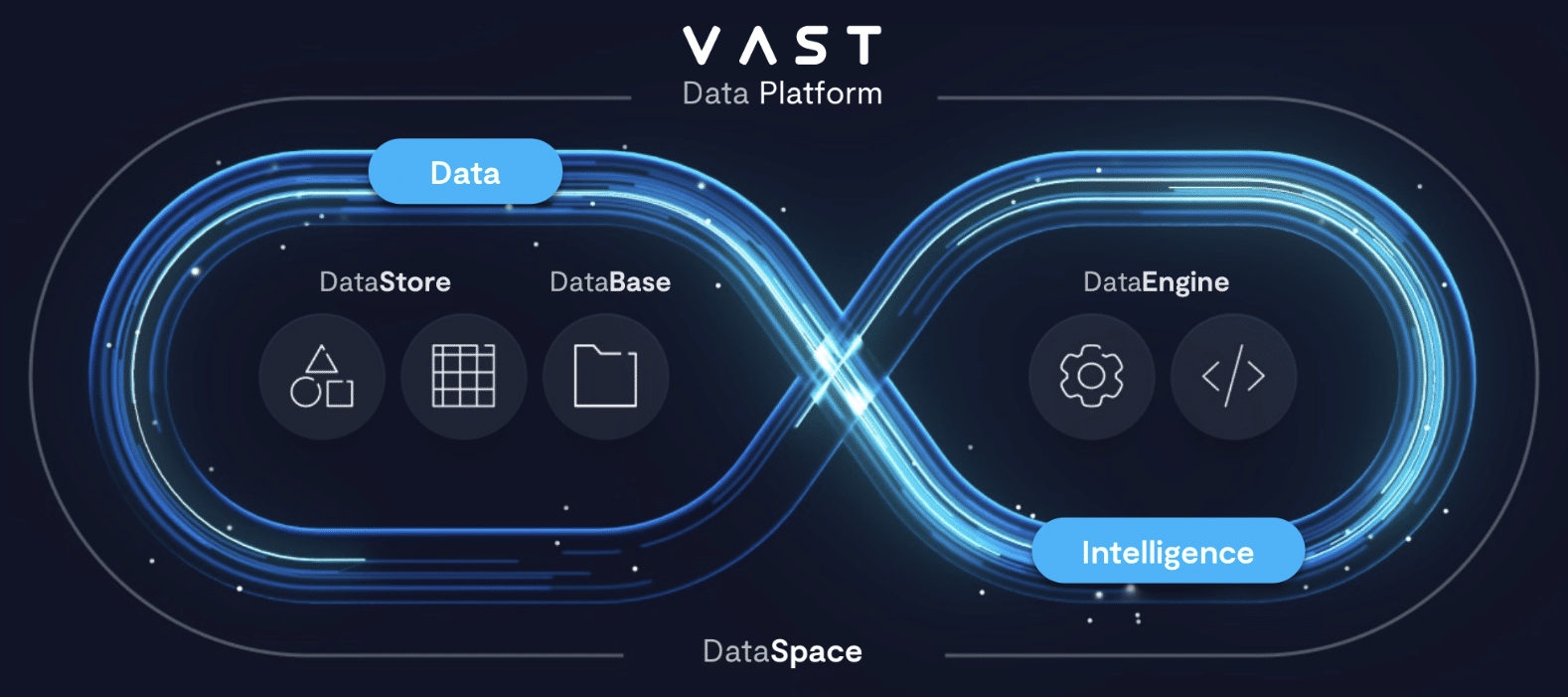

Azure customers will have access to VAST’s comprehensive data services stack, which includes unified storage, data cataloging, and database features. The platform is designed to support complex AI processes across on-premises, hybrid, and multi-cloud environments without requiring application redesign.

By positioning VAST as a cloud-native Azure service, businesses can consolidate AI data services onto a single logical platform. This approach aims to minimize operational challenges and deliver consistent performance, data management, and security, regardless of where the data resides.

Jeff Denworth, VAST Data co-founder, described the partnership as a way to create AI infrastructure that combines performance, scalability, and ease of use for agentic AI. He emphasized that the Azure collaboration enables customers to unify data and AI processes with VAST’s familiar tools, now enhanced by Azure’s global presence and flexibility.

Agentic AI with InsightEngine and AgentEngine

The integration is optimized for agentic AI, in which autonomous agents continuously operate on live data. VAST AI OS on Azure will offer two key services:

- VAST InsightEngine: This provides efficient, high-performance computing and database services tailored for AI data tasks, including vector search, retrieval-augmented generation (RAG), and data preparation. By running compute close to data with improved I/O and indexing, it reduces latency and boosts throughput.

- VAST AgentEngine: This manages autonomous agents on real-time data streams across hybrid and multi-cloud environments, enabling continuous AI processing across globally distributed datasets without manual data staging or pipeline rewriting.

Together, these services establish VAST AI OS on Azure as a control and execution layer for agentic AI workflows across on-premises and multi-cloud environments.
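To make the vector search mentioned above concrete, here is a minimal sketch of nearest-neighbor lookup by cosine similarity in plain Python. It does not use or represent any InsightEngine API; the `top_k` function, the toy `index`, and the three-dimensional embeddings are all hypothetical stand-ins chosen for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the ids of the k vectors most similar to the query (brute force)."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}
results = top_k([1.0, 0.05, 0.0], index, k=2)
print(results)
```

In a RAG workflow, the returned document ids would be used to fetch passages that are then supplied to a model as context. Production systems typically replace the brute-force scan with an approximate index.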

Performance at Scale for Model Builders

VAST AI OS is designed to keep GPU and CPU clusters busy during training and inference. On Azure, it will use the Laos VM Series and Azure Boost Accelerated Networking.

Key performance features include:

- High-throughput data services to fully utilize GPU and CPU capabilities

- Intelligent caching for frequently accessed datasets to reduce latency

- Metadata-optimized input/output paths to handle small-file and metadata-heavy AI tasks

The goal is to deliver reliable performance from early tests through multi-region production rollouts, providing model builders with a consistent data layer as they grow.
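The announcement does not say how the caching works. As a generic illustration of why caching frequently accessed data cuts repeated slow reads, the sketch below implements a small least-recently-used (LRU) cache. The `LRUCache` class, the `slow_read` loader, and the shard names are hypothetical and are not part of any VAST or Azure interface.

```python
from collections import OrderedDict

class LRUCache:
    """Keeps the most recently used items, evicting the oldest when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        if key in self._items:
            # Cache hit: mark the entry as most recently used.
            self._items.move_to_end(key)
            self.hits += 1
            return self._items[key]
        # Cache miss: fetch from the slow source and remember the result.
        self.misses += 1
        value = loader(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry
        return value

def slow_read(name):
    """Hypothetical stand-in for a read from remote storage."""
    return f"contents of {name}"

cache = LRUCache(capacity=2)
for name in ["shard-0", "shard-1", "shard-0", "shard-2", "shard-1"]:
    cache.get(name, slow_read)
print(cache.hits, cache.misses)
```

With a capacity of two, the second read of `shard-0` is served from memory, while `shard-1` is evicted before it is requested again. This shows how cache size and access pattern together determine the hit rate.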

Hybrid AI with Exabyte-Scale DataSpace

For clients with hybrid or on-premises VAST systems, the exabyte-scale VAST DataSpace offers a unified global namespace across sites and clouds. This single logical view removes data silos and eliminates the need for manual data copying.

Businesses can expand GPU-accelerated tasks from on-premises to Azure without typical data migration or reconfiguration. AI processes can be extended by targeting the same namespace, with VAST managing data placement and movement in the background.

Unified Data Access and AI-Native Database

The Azure-integrated platform includes:

- VAST DataStore: This supports file (NFS, SMB), object (S3), and block protocols from one platform. This setup allows legacy, analytics, and AI tasks to share the same data without needing separate storage stacks.

- VAST DataBase: This combines fast transactional performance, warehouse-class query speed, and data lake economics, enabling mixed workloads to run on a single platform rather than separate OLTP, OLAP, and lake systems.

Elastic, Cost-Efficient Architecture

VAST’s Disaggregated, Shared-Everything (DASE) architecture allows compute and storage resources to scale independently within Azure. Customers can adjust resources as models, datasets, and demand change, rather than over-provisioning tightly coupled systems.

Built-in Similarity Reduction also reduces storage requirements by removing duplicate data patterns, especially for large models, embeddings, and dataset versions. This results in a more cost-effective foundation for extensive AI infrastructure.
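The article does not describe the mechanism behind Similarity Reduction. The sketch below shows a deliberately simpler technique, exact-match chunk deduplication, to illustrate how storing repeated data patterns once lowers the physical footprint of near-identical dataset versions. The function names, the 4-byte chunk size, and the sample byte strings are hypothetical and should not be read as VAST's algorithm.

```python
import hashlib

def dedup_store(blobs, chunk_size=4):
    """Split blobs into fixed-size chunks and store each unique chunk once."""
    store = {}    # digest -> chunk bytes, stored a single time
    recipes = []  # per blob: ordered list of digests needed to rebuild it
    for blob in blobs:
        recipe = []
        for i in range(0, len(blob), chunk_size):
            chunk = blob[i:i + chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            store.setdefault(digest, chunk)
            recipe.append(digest)
        recipes.append(recipe)
    return store, recipes

def restore(store, recipe):
    """Rebuild a blob from its list of chunk digests."""
    return b"".join(store[d] for d in recipe)

# Two hypothetical dataset versions that differ only in their last chunk.
v1 = b"AAAABBBBCCCC"
v2 = b"AAAABBBBDDDD"
store, recipes = dedup_store([v1, v2])
logical = len(v1) + len(v2)                      # bytes written by clients
physical = sum(len(c) for c in store.values())   # bytes actually stored
print(logical, physical)
```

Here the two versions occupy 24 logical bytes but only 16 physical bytes, because the shared chunks are stored once. Similarity-based approaches aim to go further by also compressing chunks that are alike but not identical.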

Alignment with Microsoft’s AI Roadmap

Aung Oo, Vice President of Azure Storage at Microsoft, stated that VAST AI OS on Azure provides a high-performance, scalable platform built on the Laos VM Series with Azure Boost, extending on-premises AI pipelines into Azure’s GPU-accelerated infrastructure. He noted that model builders already use VAST for its scalability, performance, and AI-native features, and said the partnership should simplify operations, lower costs, and speed up time-to-insight.

As Microsoft develops its AI infrastructure and custom silicon projects, VAST will work with the Azure team to meet future platform needs. This partnership positions VAST as a key data and AI platform, aiming to support future AI systems with an operating system focused on scalability, performance, and ease of use.
