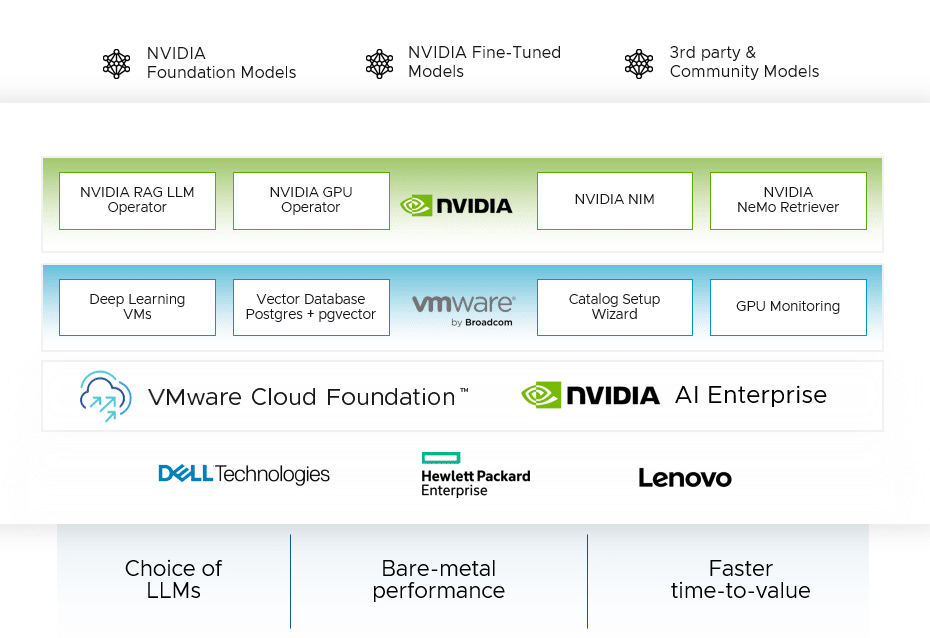

During the 2023 VMware Explore event, VMware announced VMware Private AI and VMware Private AI Foundation in collaboration with NVIDIA. At NVIDIA GTC this week, VMware took it a step further by announcing the initial availability of VMware Private AI Foundation with NVIDIA. The result of this collaboration is a platform built on VMware Cloud Foundation that incorporates NVIDIA’s AI inference microservices. The platform also includes contributions from companies such as Hugging Face.

VMware Private AI Foundation with NVIDIA is designed to support Retrieval Augmented Generation (RAG) workflows, LLM customization, and inference workloads in the data center. It addresses privacy, cost, and compliance concerns through automation tools, deep learning VM images, a vector database, and GPU monitoring capabilities. Note that full functionality requires separately purchased NVIDIA AI Enterprise licenses.

VMware Private AI Foundation With NVIDIA Advantages

Privacy, Security, and Compliance: The platform’s architecture is designed to keep data private, secure, and compliant. It is built on VMware’s cloud infrastructure, which includes security features such as Secure Boot and VM encryption.

Enhanced Performance: Drawing on Broadcom’s and NVIDIA’s innovations, the platform is engineered to maximize performance for generative AI models, featuring tools for efficient GPU utilization and high-bandwidth GPU interconnects such as NVIDIA NVLink and NVSwitch.

Deployment Simplification and Cost Optimization: VMware Private AI Foundation with NVIDIA aims to streamline deployment and reduce the cost of running generative AI models through a comprehensive management suite and resource-sharing mechanisms.

Architecture

At its core, VMware Cloud Foundation provides comprehensive cloud infrastructure, while NVIDIA AI Enterprise provides a broad, cloud-native AI software platform. Together, they enable organizations to deploy AI models securely and privately.

VMware Private AI Foundation with NVIDIA enables on-premises deployments that give enterprises the controls to address many regulatory compliance challenges quickly, without significantly re-architecting their existing environment.

Setting up a deep learning VM manually can be time-consuming and complex, and it can lead to inconsistencies across development environments that make performance harder to optimize. To address this, VMware Private AI Foundation with NVIDIA offers pre-configured deep learning VMs with the necessary software frameworks (for example, from the NVIDIA NGC catalog), libraries, and drivers. This saves users from installing and configuring each component individually.
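
One benefit of a standard image is that every VM starts from the same software set. As a rough illustration of the drift a pre-configured image is meant to prevent, the sketch below checks whether a list of expected Python modules can be imported in the current environment. The module list is hypothetical and does not reflect the actual contents of VMware’s deep learning VM images.

```python
import importlib.util

# Hypothetical manifest of modules a team expects in every deep learning VM.
# These names are examples only, not the contents of VMware's images.
REQUIRED_MODULES = ["json", "math", "torch", "transformers"]


def missing_modules(required):
    """Return the top-level modules in `required` that cannot be found here."""
    return [name for name in required if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Environment drift, missing:", ", ".join(missing))
    else:
        print("All required modules present.")
```

Running the same check on every VM gives a quick signal when one environment has diverged from the others.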

VMware enables vector databases by using pgvector, an extension for PostgreSQL. This capability is managed through native infrastructure automation and VMware Data Services Manager in VMware Cloud Foundation. Data Services Manager simplifies the deployment and management of open-source and commercial databases from a single pane of glass. Vector databases are essential to RAG workflows: they let an application quickly retrieve data relevant to a query and pass it to the LLM as context, improving its output in real time without retraining the model.
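
To make the retrieval step concrete, here is a minimal in-memory sketch of what a vector database does in a RAG workflow: rank stored documents by cosine distance to a query embedding, then prepend the closest matches to the prompt. The documents and 3-dimensional embeddings are invented for illustration, and the functions `retrieve` and `build_prompt` are hypothetical. In PostgreSQL with pgvector, the ranking would typically be done in SQL with its cosine distance operator `<=>` (for example, `ORDER BY embedding <=> :query LIMIT k`) rather than in application code.

```python
import math

# Toy "vector store": (document text, embedding) pairs.
# Embeddings are made up; real ones come from an embedding model
# and have hundreds of dimensions.
DOCS = [
    ("VM encryption protects data at rest.", [0.9, 0.1, 0.0]),
    ("pgvector adds a vector column type to PostgreSQL.", [0.1, 0.9, 0.2]),
    ("NVLink connects GPUs with high bandwidth.", [0.0, 0.2, 0.9]),
]


def cosine_distance(a, b):
    """1 - cosine similarity (the metric pgvector's <=> operator computes)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def retrieve(query_embedding, k=2):
    """Return the texts of the k documents closest to the query embedding."""
    ranked = sorted(DOCS, key=lambda doc: cosine_distance(query_embedding, doc[1]))
    return [text for text, _ in ranked[:k]]


def build_prompt(question, query_embedding, k=2):
    """Augment the question with retrieved context; the model is not retrained."""
    context = "\n".join(f"- {text}" for text in retrieve(query_embedding, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # This query embedding is, by construction, closest to the pgvector document.
    print(build_prompt("How do I store vectors in Postgres?", [0.2, 0.8, 0.1], k=1))
```

Because new knowledge only has to be embedded and inserted into the store, the LLM’s answers can reflect fresh data immediately, which is the property the paragraph above describes.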

VMware Cloud Foundation has introduced a Catalog Setup Wizard in the self-service portal to streamline infrastructure provisioning for AI projects. This reduces manual work and speeds up access to AI/ML infrastructure.

VMware Private AI Foundation with NVIDIA also introduces GPU monitoring in VMware Cloud Foundation. It shows GPU resource utilization across clusters and hosts alongside the existing host memory and capacity consoles, and provides insight into GPU performance metrics that helps with optimization and cost management.
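
As a rough illustration of the kind of roll-up such monitoring performs, the sketch below averages per-GPU utilization samples by host and by cluster and flags GPUs below a threshold as candidates for consolidation or sharing. The sample data, the 20% threshold, and the functions `rollup` and `underutilized` are hypothetical; this is not how VMware Cloud Foundation collects or computes its GPU metrics.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-GPU samples: (cluster, host, gpu_id, utilization_percent).
# These numbers are invented for illustration only.
SAMPLES = [
    ("cluster-a", "esx-01", 0, 92.0),
    ("cluster-a", "esx-01", 1, 88.0),
    ("cluster-a", "esx-02", 0, 12.0),
    ("cluster-b", "esx-03", 0, 45.0),
    ("cluster-b", "esx-03", 1, 55.0),
]


def rollup(samples):
    """Average GPU utilization per (cluster, host) and per cluster."""
    by_host = defaultdict(list)
    by_cluster = defaultdict(list)
    for cluster, host, _gpu, util in samples:
        by_host[(cluster, host)].append(util)
        by_cluster[cluster].append(util)
    host_avg = {key: mean(vals) for key, vals in by_host.items()}
    cluster_avg = {key: mean(vals) for key, vals in by_cluster.items()}
    return host_avg, cluster_avg


def underutilized(samples, threshold=20.0):
    """List (cluster, host, gpu_id) for GPUs below the utilization threshold."""
    return [(c, h, g) for c, h, g, u in samples if u < threshold]


if __name__ == "__main__":
    hosts, clusters = rollup(SAMPLES)
    print(clusters)
    print(underutilized(SAMPLES))
```

A cluster-level average can hide an idle host, which is why the sketch reports both levels: cluster-a looks busy on average, yet one of its GPUs is nearly idle.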

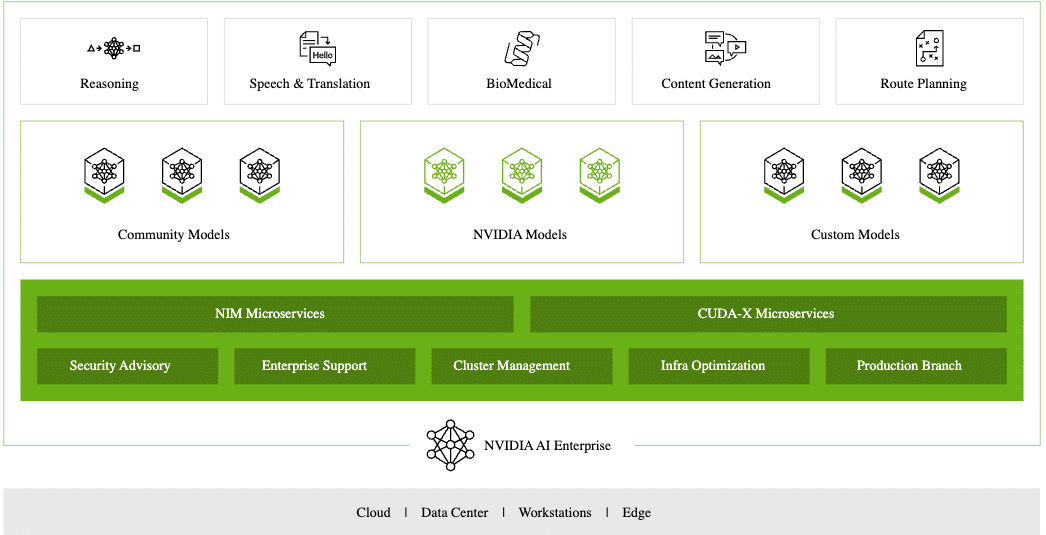

NVIDIA AI Enterprise Capabilities

NVIDIA AI Enterprise capabilities include:

- NVIDIA NIM: A set of microservices that accelerates generative AI deployment across a wide range of AI models, built on foundational NVIDIA software for efficient inference.

- NVIDIA NeMo Retriever: This component of the NVIDIA NeMo platform offers microservices for connecting custom models to business data, designed to deliver high-accuracy responses while preserving data privacy.

- NVIDIA RAG LLM Operator: Streamlines the deployment of RAG applications into production environments using NVIDIA’s AI workflow examples.

- NVIDIA GPU Operator: Automates the management of the software needed to use GPUs in Kubernetes, such as drivers and the device plugin, improving performance and operational efficiency.

- Server OEM Support: The platform is backed by major server OEMs such as Dell, HPE, and Lenovo, ensuring broad hardware compatibility and support.
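
To show what the GPU Operator makes possible from a workload’s point of view, the sketch below builds a Kubernetes Pod manifest that requests a GPU. Once the GPU Operator has installed the NVIDIA device plugin on a node, GPUs are advertised to the scheduler as the extended resource `nvidia.com/gpu`, and a container requests them under `resources.limits`. The helper `gpu_pod_manifest` and the image name are hypothetical placeholders; the JSON output could be saved to a file and applied with `kubectl apply -f`.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Build a Kubernetes Pod manifest (as a dict) that requests NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are an extended resource, requested in whole units
                    # under limits; the device plugin advertises them.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; replace with a real inference container.
    manifest = gpu_pod_manifest("llm-inference", "registry.example.com/llm-inference:latest")
    print(json.dumps(manifest, indent=2))
```

The scheduler places the Pod only on a node with a free GPU, so application teams declare what they need while the operator handles the node-level software.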

VMware Private AI Foundation with NVIDIA is designed to help enterprises deploy and manage AI models. It brings together an ecosystem that addresses the security, performance, and sustainability of AI projects. By combining deep learning VMs, vector databases, and a set of NVIDIA tools, the platform streamlines the deployment and management of AI models while meeting enterprise requirements for compliance and performance. Backing from leading server OEMs rounds out this cooperative approach to AI.