At the SC25 event in St. Louis, Brian caught up with his long-time friend Mark Klarzynski, founder and acting CTO at PEAK:AIO. If you have been following StorageReview, you may already know our coverage of PEAK:AIO, which has ranged from regular news updates to stories on medical AI and animal conservation.

As Mark explains, he has “spent a lifetime in storage” and has known Brian “for a very long, long time.” That history shows in a candid, easygoing conversation about hardware and software and how both have evolved.

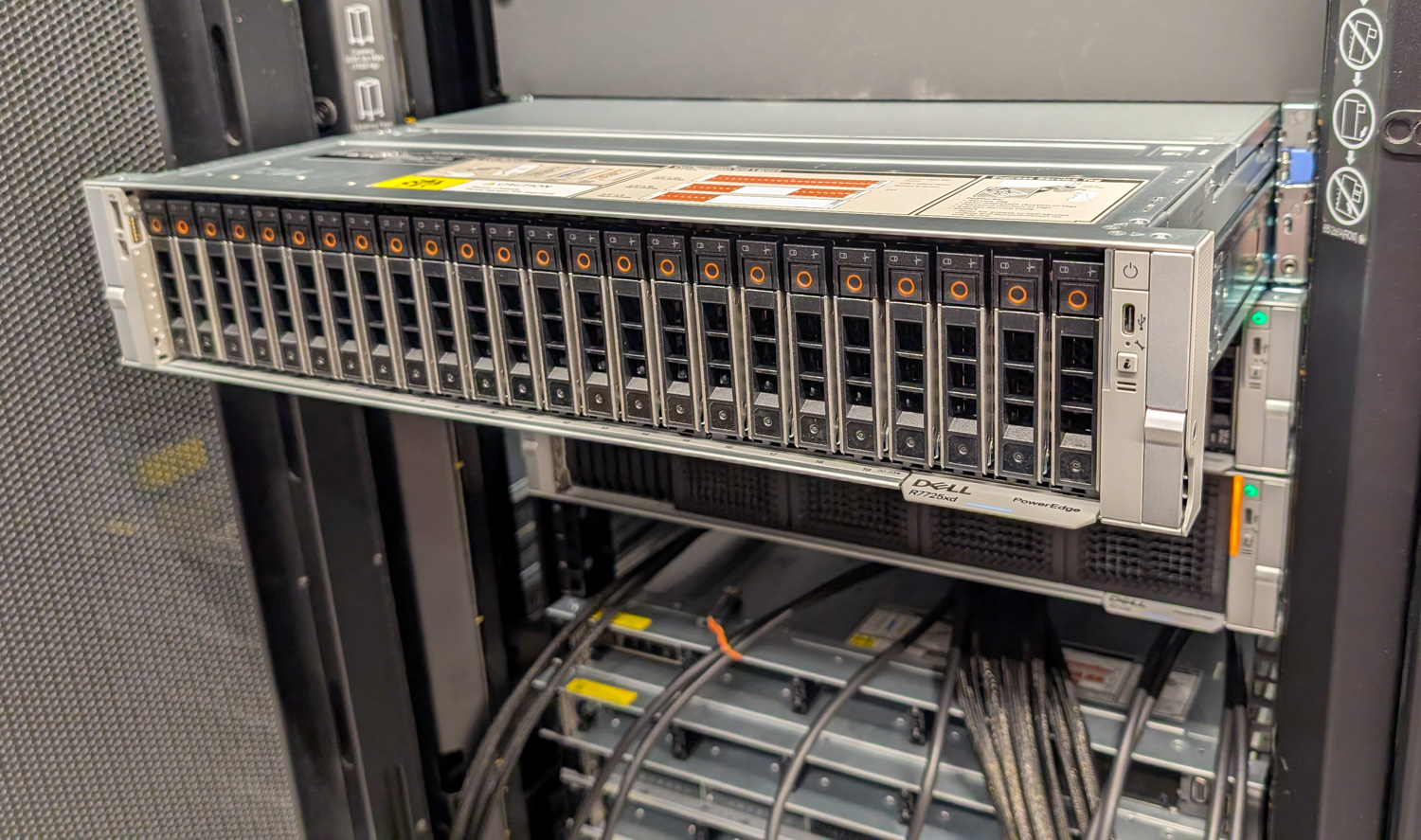

In this podcast, Brian and Mark talk about software development, Dell PowerEdge, and GPU servers. Mark is excited about advances in hardware design, especially the Dell PowerEdge R7725xd, powered by AMD EPYC CPUs.

This short conversation covers a lot of territory and is worth hearing in one sitting. If you can’t spare 20 minutes, though, we’ve broken it into five-minute sections so you can jump around.

0:00 – 5:00

- PEAK:AIO software unlocks the Dell PowerEdge R7725xd’s extreme performance.

- Modern hardware (CPUs, NVMe, networks) is finally good enough that software can “get out of the way.”

- PEAK:AIO removed decades of legacy storage features to get closer to bare metal.

- The surprise: the Dell system delivered so much more performance than expected that they initially thought the tests were wrong.

- Philosophy: Making things simple and stable is more complicated than just making them fast.

5:00 – 10:00

- PEAK:AIO chose to remain independent rather than become a feature within a prominent vendor’s product line.

- They work directly with research teams (e.g., on brain cancer models), which Mark finds more rewarding than simply speeding up databases.

- Early focus: compressing what previously required 4–10 storage nodes into a single powerful server.

- Traditional parallel file systems were designed for 10GbE networks and HDDs; today’s workloads require NVMe and massive PCIe bandwidth.

- With Los Alamos National Laboratory, they’re building an open, modern NFS fabric with no proprietary lock-in.

10:00 – 15:00

- Hardware “wish list”: more PCIe lanes and PCIe Gen 6 to unlock even more bandwidth.

- Vision: GPU servers that act as model box, inference box, and storage node in one, while still belonging to a larger shared namespace.

- Start small on a GPU server; when storage runs out, add an R7725xd and let PEAK:AIO automatically expand and rebalance.

- Customers want to scale storage only when AI projects prove value, not buy a giant array up front.

- Economics matter: for hospitals and similarly budget-constrained organizations, a $1M storage array behind a $300K GPU server is impossible to justify.

15:00 – 20:00

- Strategy: Use commodity servers plus PEAK:AIO software to replace expensive, specialized storage arrays.

- OEMs like Dell handle fundamental hardware issues; PEAK:AIO focuses on software, stability, and performance.

- Built-in monitoring and predictive intelligence; the platform has run in the field for years without causing downtime.

- Joint project results: ~70M IOPS in a single node and hundreds of GB/s of throughput, record numbers for PEAK:AIO.

- Next step: put models inside the storage node (with spare CPU/GPU capacity) so inference happens in-box, not over the network.
