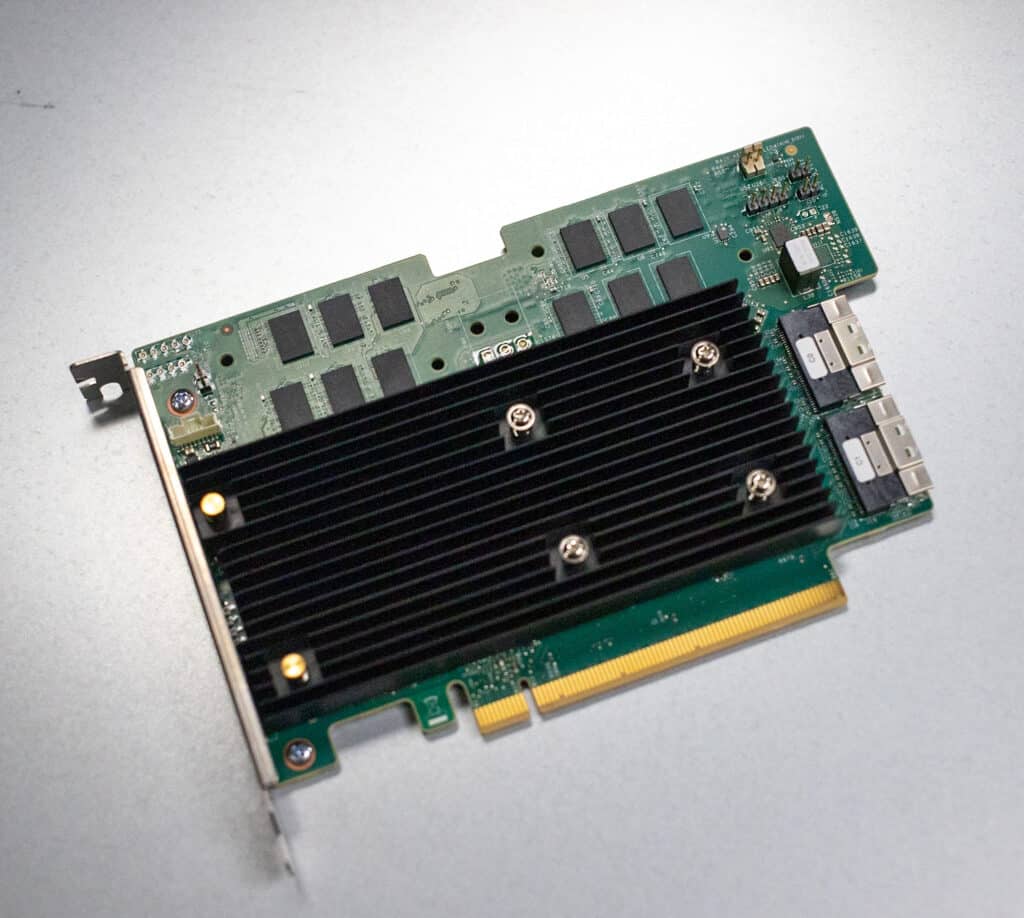

The MegaRAID 9600 series is a third-generation storage adapter that supports SATA, SAS, and NVMe drives, designed to deliver the best possible performance and data availability for storage servers. Compared to the previous generation, the 9600 series offers a 2x increase in bandwidth, over 4x increase in IOPS, 25x reduction in write latency, and 60x increase in performance during rebuilds. As usual, the 9600 family of cards includes a number of configurations. In this review, we’re looking at the Broadcom MegaRAID 9670W-16i, which supports 16 internal ports.

The MegaRAID 9670W-16i is based on the SAS4116W RAID-on-Chip (RoC), which is a key factor in performance improvements across the board. Users can connect up to 240 SAS/SATA devices or 32 NVMe devices per controller with the x16 PCIe Gen 4.0 interface.

The 9600 series also features hardware secure boot and SPDM attestation support, along with balanced protection and performance for RAID 0, 1, 5, 6, 10, 50, and 60, as well as JBOD. CacheVault flash cache protection is an option for those who want more protection.

Why Hardware RAID for NVMe?

Hardware RAID has been the go-to option for offering resilient storage since before today’s IT admins’ beards were grey. But as storage has become faster, specifically with NVMe SSDs, RAID cards have struggled to keep up with the times. So when Broadcom pitched us on a review of the MegaRAID 9670W-16i, we were a bit dubious. The truth is that there is a performance cost for a RAID card, and we’ve avoided them for many years as a result. That said, the value of what hardware RAID offers is undeniable.

For environments that don’t offer software-RAID options, which include VMware ESXi, customers can’t easily aggregate storage or protect storage with RAID. Although vSAN can be easily implemented at the cluster level, it cannot be used for a standalone ESXi node at the edge. Here customers may want the benefits of pulling together multiple SSDs in RAID for a larger datastore or some data resiliency.

Even in Windows, which offers Storage Spaces for individual servers, certain software RAID types, such as RAID5/6, take a significant performance hit. In the past, hardware RAID has been an effective solution for bridging that performance gap for SAS and SATA devices. The MegaRAID 9670W aims to do the same for NVMe devices.

Broadcom MegaRAID 9670W-16i Test Bed

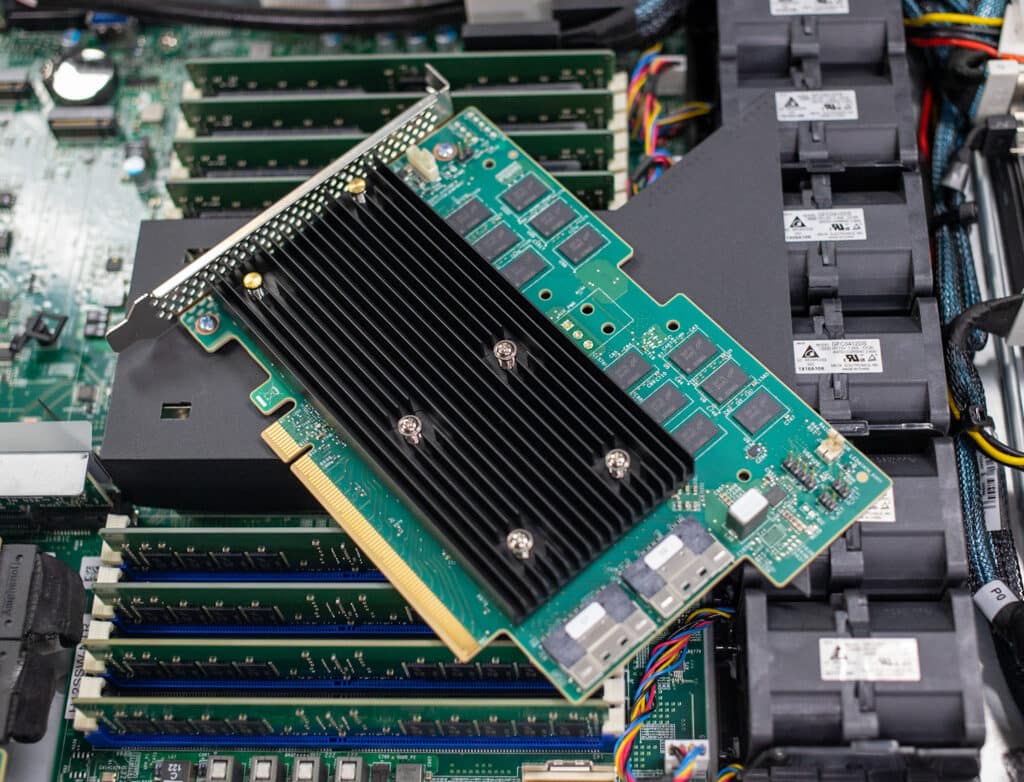

For this review, we worked with Micron, Supermicro, and Broadcom to assemble a test bed. The server is a Supermicro AS-1114S-WN10RT 1U system with an AMD Milan 7643 CPU and 128GB of DDR4. Inside that system sits the 9670W-16i, cabled to twin 8-bay NVMe JBODs. Each JBOD holds 8 x Micron 7450 SSDs formatted to 6.4TB capacity.

To measure the performance of the drives through the MegaRAID 9670W-16i adapter, we split the benchmarks into three configurations: JBOD, which measures each drive outside of RAID (but still through the card), followed by RAID10 and RAID5. A script walked each configuration through preconditioning the flash, running the tests it was conditioned for, and moving on to the next preconditioning/workload mix. In total, the process took around 16 hours.

- Total test run time of around 16 hours, in this order:
  - Sequential preconditioning (~2:15)
  - Sequential tests on 16x JBOD, 2x 8DR10, 2x 8DR5 (~2 hours)
  - Random preconditioning – 2 parts (~4:30)
  - Random optimal tests on 16x JBOD, 2x 8DR10, 2x 8DR5 (~3 hours)
  - Random rebuild tests on 1x 16DR10, 1x 16DR5 (~2:30)
  - Random write latency for optimal and rebuild for 1x 16DR5 (~1:40)
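As a quick sanity check on the schedule, the listed phase durations can be added up. This is a minimal sketch that simply reads the approximate times from the list as hours:minutes; the dictionary keys are shorthand labels, not names from the test scripts.

```python
# Approximate phase durations from the list, as "H:MM"
# (whole-hour entries such as "~2 hours" are written as "2:00").
phases = {
    "sequential preconditioning": "2:15",
    "sequential tests": "2:00",
    "random preconditioning": "4:30",
    "random optimal tests": "3:00",
    "random rebuild tests": "2:30",
    "random write latency": "1:40",
}


def to_minutes(hmm: str) -> int:
    """Convert an 'H:MM' string to a whole number of minutes."""
    hours, minutes = hmm.split(":")
    return int(hours) * 60 + int(minutes)


total_minutes = sum(to_minutes(d) for d in phases.values())
print(f"{total_minutes // 60}h {total_minutes % 60:02d}m")  # 15h 55m
```

The phases sum to just under 16 hours, in line with the quoted total.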

The first section of performance metrics focuses on the bandwidth through the card in JBOD, RAID10, and RAID5 modes. With the MegaRAID 9670W-16i offering a x16 PCIe Gen4 slot width, its peak performance will be right around 28GB/s in one direction, and that is where the Gen4 slot tops out. By comparison, a U.2 Gen4 SSD connects through a x4 connection and can peak out around 7GB/s, and this is where most enterprise drives can top out for read workloads.
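For context on where those ceilings come from, here is a rough sketch of the theoretical one-direction bandwidth of a PCIe Gen4 link. It assumes the standard PCIe 4.0 figures of 16 GT/s per lane and 128b/130b encoding, which are not stated in the review itself; the function name is ours.

```python
GEN4_GT_PER_S = 16.0    # assumed: PCIe 4.0 raw signalling rate per lane (GT/s)
ENCODING = 128 / 130    # assumed: 128b/130b line encoding efficiency


def pcie_gen4_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return GEN4_GT_PER_S * ENCODING * lanes / 8  # 8 bits per byte


x16 = pcie_gen4_gb_per_s(16)  # the RAID card's slot
x4 = pcie_gen4_gb_per_s(4)    # a single U.2 SSD

print(f"x16: {x16:.1f} GB/s, x4: {x4:.1f} GB/s")  # x16: 31.5 GB/s, x4: 7.9 GB/s
```

The roughly 28GB/s and 7GB/s figures seen in practice sit a little below these numbers, which is consistent with packet and protocol overhead on top of the line encoding.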

With that said, the MegaRAID 9670W completely saturates the slot it’s connected to. When looking at the read performance, the JBOD configuration comes in at 28.3GB/s, with RAID10 and RAID5 both a hair below it at 28.1GB/s. When we switch our focus to write performance, the JBOD baseline is 26.7GB/s, while the RAID10 configuration came in at 10.1GB/s and RAID5 at 13.2GB/s. When we look at a 50:50 split of simultaneous read and write traffic, the JBOD configuration measured 41.6GB/s, RAID10 19.6GB/s, and RAID5 25.8GB/s.

| Workload | JBOD (MB/s) | RAID 10 – Optimal (MB/s) | RAID 5 – Optimal (MB/s) |
|---|---|---|---|
| Maximum Sequential Reads | 28,314 | 28,061 | 28,061 |
| Maximum Sequential Writes | 26,673 | 10,137 | 13,218 |
| Maximum 50:50 Seq Reads:Writes | 41,607 | 19,639 | 25,833 |
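To put the RAID overhead in perspective, the table can be restated as a percentage of the JBOD baseline. The sketch below only re-uses the measured MB/s figures from the table; the helper name `pct_of_jbod` is ours.

```python
# Sequential bandwidth results from the table, in MB/s.
results_mb_s = {
    "seq reads": {"JBOD": 28314, "RAID 10": 28061, "RAID 5": 28061},
    "seq writes": {"JBOD": 26673, "RAID 10": 10137, "RAID 5": 13218},
    "50:50 reads:writes": {"JBOD": 41607, "RAID 10": 19639, "RAID 5": 25833},
}


def pct_of_jbod(workload: str, mode: str) -> float:
    """Bandwidth of a RAID mode as a percentage of the JBOD baseline."""
    row = results_mb_s[workload]
    return 100 * row[mode] / row["JBOD"]


for workload in results_mb_s:
    for mode in ("RAID 10", "RAID 5"):
        print(f"{workload:20s} {mode:8s} {pct_of_jbod(workload, mode):5.1f}% of JBOD")
```

Reads land at about 99% of JBOD in both RAID modes, while sequential writes come in at roughly 38% (RAID10) and 50% (RAID5) of the baseline.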

When switching our focus to small-block random transfers, we see the MegaRAID 9670W held up quite well in read performance, with RAID10 and RAID5 both essentially matching the JBOD baseline figure of 7M IOPS. This speed dropped to about half (roughly 3.3M IOPS) during a rebuild operation after one SSD failed in the RAID group. Looking at random write performance, the JBOD baseline measured 6.3M IOPS against 2.2M from RAID10 and 1M from RAID5. Those figures didn’t see a considerable drop when an SSD was failed from the group and the RAID card was forced to rebuild. In that situation, RAID10 didn’t change, although RAID5 dropped from 1M to 788K IOPS.

In the 4K OLTP workload with a mixture of reads and writes, the JBOD baseline measured 7.8M IOPS against RAID10 with 5.6M IOPS and RAID5 with 2.8M IOPS. During a rebuild, RAID10 dropped from 5.6M to 2.4M IOPS, and RAID5 dropped from 2.8M to 1.8M IOPS.

| Workload | JBOD | RAID 10 – Optimal | RAID 5 – Optimal | RAID 10 – Rebuilding | RAID 5 – Rebuilding |
|---|---|---|---|---|---|
| 4KB Random Reads (IOPS) | 7,017,041 | 7,006,027 | 6,991,181 | 3,312,304 | 3,250,371 |
| 4KB Random Writes (IOPS) | 6,263,549 | 2,167,101 | 1,001,826 | 2,182,173 | 788,085 |
| 4KB OLTP (IOPS) | 7,780,295 | 5,614,088 | 2,765,867 | 2,376,036 | 1,786,743 |
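The rebuild impact is easier to compare across workloads as the share of optimal performance that is retained while rebuilding. This is a small sketch over the IOPS figures from the table; the `retained_pct` helper is ours.

```python
# (optimal, rebuilding) IOPS pairs from the table, per workload and RAID level.
iops = {
    "4KB random reads": {"RAID 10": (7_006_027, 3_312_304), "RAID 5": (6_991_181, 3_250_371)},
    "4KB random writes": {"RAID 10": (2_167_101, 2_182_173), "RAID 5": (1_001_826, 788_085)},
    "4KB OLTP": {"RAID 10": (5_614_088, 2_376_036), "RAID 5": (2_765_867, 1_786_743)},
}


def retained_pct(workload: str, level: str) -> float:
    """Rebuilding IOPS as a percentage of optimal IOPS."""
    optimal, rebuilding = iops[workload][level]
    return 100 * rebuilding / optimal


for workload, levels in iops.items():
    for level in levels:
        print(f"{workload:18s} {level:8s} retains {retained_pct(workload, level):5.1f}%")
```

By this measure, random reads keep a little under half of their optimal IOPS in both RAID levels, RAID10 random writes are effectively unchanged, and RAID5 random writes keep about 79%.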

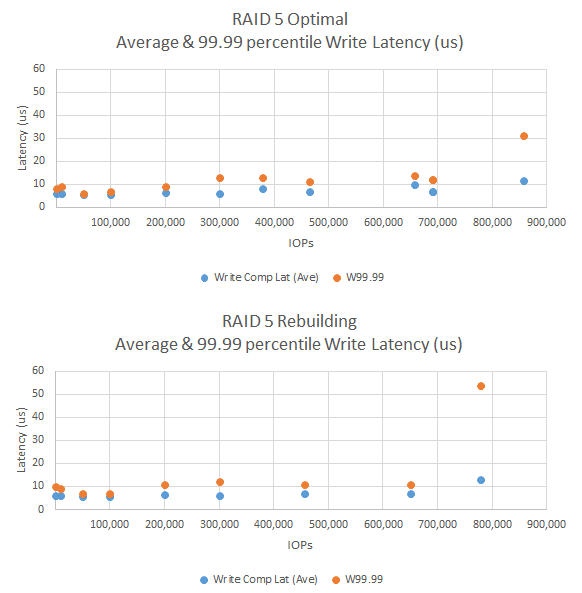

Another important aspect of RAID performance is how well the storage behaves between optimal conditions and rebuild performance if a drive fails. If performance or latency were to take a massive hit, application responsiveness could become a problem. To that end, we focused on RAID5 4K random write latency in optimal and rebuilding modes. Across the spectrum, latency remained quite similar, which is exactly what you want to see in a production environment storage system.

We evaluated not only the point-in-time performance of each mode, including the performance of the RAID card during a rebuild operation, but also the total time a rebuild took. In RAID10, dropping a 6.4TB SSD out of the RAID group and adding it back in took 60.7 minutes, a rebuild speed of 10.4 Min/TB. The RAID5 group took 82.3 minutes, a speed of 14.1 Min/TB.
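The per-terabyte rebuild rates can be reproduced from the rebuild times and the drive capacity. One caveat: dividing by 6.4 decimal terabytes gives lower numbers than quoted, while dividing by the same capacity expressed in binary terabytes (TiB) matches. That unit choice is our inference from the arithmetic, not something the test notes state.

```python
DRIVE_BYTES = 6.4e12                      # 6.4TB drive, decimal terabytes
REBUILD_MINUTES = {"RAID10": 60.7, "RAID5": 82.3}

capacity_tb = DRIVE_BYTES / 10**12        # decimal TB
capacity_tib = DRIVE_BYTES / 2**40        # binary TiB (assumed unit, see note above)

rates = {
    level: {
        "min_per_tb": minutes / capacity_tb,
        "min_per_tib": minutes / capacity_tib,
    }
    for level, minutes in REBUILD_MINUTES.items()
}

for level, r in rates.items():
    print(f"{level}: {r['min_per_tb']:.1f} min/TB, {r['min_per_tib']:.1f} min/TiB")
```

Under the TiB assumption this gives 10.4 and 14.1 minutes per unit for RAID10 and RAID5, the same values as the quoted rates.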

Final Thoughts

To be honest, we came into this review with heads slightly cocked and one eyebrow raised. We haven’t heard a RAID card pitch for NVMe SSDs in a while, outside of the emerging class of solutions designed around GPUs. So we did have to ask the fundamental question: can hardware RAID even be a thing for NVMe SSDs?

The answer is clearly yes. The performance of PCIe Gen4 allows the MegaRAID 9670W-16i RAID card to keep up with modern SSDs across a variety of workloads. Yes, some areas like bandwidth will be limited with fewer PCIe lanes, but again most production environments don’t sit at those levels.

In peak bandwidth, we saw the MegaRAID 9670W-16i take things right up to the x16 PCIe Gen4 limit of 28GB/s in read and offer up to 13GB/s of write bandwidth in RAID5. On the throughput side, random 4K read performance topped out at 7M IOPS, with write performance ranging from 1M IOPS in RAID5 to 2.2M IOPS in RAID10. For deployments looking to consolidate flash into larger volumes or get around systems that don’t support software RAID, the MegaRAID 9670W has a lot to offer.

If you love storage adapters, then you’re about to get more of this kind of coverage. We’re already exploring the latest-gen servers, like the Dell PowerEdge R760, which offers a twin RAID card configuration based on the same silicon as this card. In the R760 case, Dell attaches 8 NVMe SSDs to each card, giving us a more robust enterprise solution than the one we tested here for validation. So there’s much more to come now that it looks like RAID cards are back on the menu for servers with NVMe SSDs.

Broadcom 9670W-16i Product Page
