The EchoStreams OSS1A is a 1U rack system engineered as a flexible appliance server platform, and it is most notable for its depth of only 21 inches. The OSS1A is a dual-socket design built on the Intel C602A chipset. It supports Intel Xeon E5-2600 v2 CPUs and up to 384GB of RAM, providing the horsepower necessary to handle a variety of duties in space-constrained and field deployments.

Our review of the OSS1A is based not only on its performance in our suite of enterprise benchmarks, but also on our own experience using the OSS1A as part of the StorageReview On-site Testing Toolkit. We selected the OSS1A for on-site benchmarking because of its small footprint and because it has the power to perform many of the functions of our laboratory's benchmarking environment without becoming a bottleneck.

The EchoStreams OSS1A is offered in several configurations with different internal storage options, which can be used in addition to an optional internal SSD bay: two front-accessible 15mm 2.5-inch bays; two internal 15mm 2.5-inch bays; four front-accessible 7mm 2.5-inch bays; or two internal 2.5-inch bays with an LCD display panel. A Haswell version is also in the works. It will offer a 500W power supply to handle the highest-TDP (160W) processors. The Haswell version will also support either two FHHL Gen3 x16 cards, or one FHHL card plus one I/O module (supporting dual 10Gb, 40Gb, or InfiniBand), along with a pair of SFF-8644 ports for SAS expansion from the onboard SAS3008 controller. These features would make the platform well suited for use as storage head nodes (two for HA) connected to the EchoStreams eDrawer4060J (a 4U, 60-bay JBOD with dual 12G expanders) running NexentaStor or another software-defined storage stack. In that configuration, the four front 7mm bays can hold SSDs used as cache.

Our review unit uses the form factor with two front-accessible 2.5-inch bays and is equipped with two Intel Xeon E5-2697 v2 processors (2.7GHz, 30MB cache, 12 cores). We have also configured the server with 16GB (2x 8GB) of 1333MHz DDR3 registered DIMMs (RDIMMs) and a 100GB Micron RealSSD P400e as the boot drive. Our EchoStreams OSS1A is generally configured with either two Emulex LightPulse LPe16202 Gen 5 Fibre Channel (8GFC, 16GFC, or 10GbE FCoE) PCIe 3.0 dual-port CFAs or two Mellanox ConnectX-3 dual-port 10GbE PCIe 3.0 NICs. We swap these components when the server is used to drive benchmarks, depending on the equipment under test and the workload required.

EchoStreams OSS1A Specifications

- Supported CPU: Dual Socket 2011 Intel Xeon E5-2600 v2 (Ivy Bridge) processors

- Chipset: Intel C602A

- Supported RAM: Up to 384GB DDR3 800/1066/1333/1600 RDIMM/ECC UDIMM/Non-ECC UDIMM/LR-DIMM

- I/O Interface:

  - 2 x Rear USB

  - 1 x Front USB

  - 1 x VGA Port

  - 4 x GbE Ethernet

  - 1 x MGMT LAN

- Expansion Slots: 1x PCIe Gen3 x16 FHHL slot and 1x PCIe Gen3 x8 low-profile slot

- Storage:

  - 2 x 2.5″ hot-swap bays, or

  - 4 x 2.5″ 7mm hot-swap bays, or

  - 2 x internal 2.5″ bays

- Server Management: IPMI with iKVM

- Cooling: 3x 97mm blowers

- Front Panel Display:

  - 1 x Power On/Off switch & LED

  - 1 x Locate switch & LED

  - 1 x Reset switch

  - 1 x System warning LED

  - 4 x LAN LED

- Power: 400W high efficiency 1+1 redundant power supplies

- Gross Weight: 35lbs

- Dimensions:

  - System: 21″ x 17″ x 1.74″

  - Packaging: 36″ x 24.5″ x 10.5″ (L x W x H)

- Environmental:

  - Operating Temperature: 0°C to 35°C

  - Non-Operating Temperature: -20°C to 70°C

  - Humidity: 5% to 95% non-condensing

- Compliance: CE, FCC Class A, RoHS 6/6 compliant

- Compatible OS: Red Hat Enterprise Linux 6 (64-bit), SUSE Linux Enterprise Server 11.2 (64-bit), VMware ESX 4.1/ESXi 4.1, Windows Server 2008 R2, Windows Server 2012, CentOS 5.6, Ubuntu 11.10

Build and Design

Our OSS1A review unit incorporates two front-accessible 2.5-inch drive bays recessed into a removable aluminum bezel. The front also provides access to one USB port along with a locator switch and LED, power switch and LED, a reset switch, a system status LED, and four LEDs corresponding to the integrated Ethernet ports on the rear of the system.

Elevated fan exhausts sit behind the front bezel, immediately in front of the dual CPUs and DDR3 RAM. The EchoStreams OSS1A incorporates dual 400W power supplies in a 1+1 hot-swap arrangement on the right side of the system. We have configured this server with a 100GB Micron RealSSD P400e boot drive.

The rear panel incorporates four GbE ports plus a dedicated management LAN port, two USB ports, a VGA port, and access to the expansion cards.

Testing Background and Storage Media

We publish an inventory of our lab environment, an overview of the lab’s networking capabilities, and other details about our testing protocols so that administrators and those responsible for equipment acquisition can fairly gauge the conditions under which we have achieved the published results. To maintain our independence, none of our reviews are paid for or managed by the manufacturer of equipment we are testing.

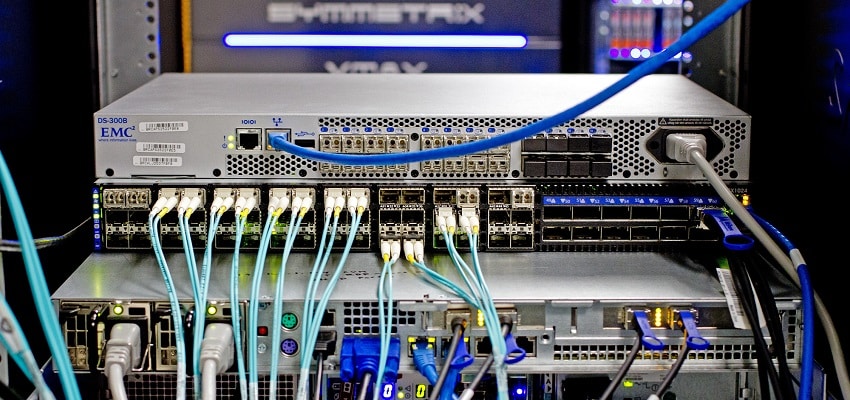

In our standard review process, the vendor ships us the platform being reviewed, and we install it in the StorageReview Enterprise Test Lab to conduct performance benchmarks. However, some platforms present size, complexity, or other logistical obstacles to this approach. That is when the EchoStreams OSS1A comes into play.

Our recent review of the EMC VNX5200 is an example of a situation that required us to travel to EMC's lab with the OSS1A to conduct iSCSI and FC performance testing. EMC supplied an 8Gb FC switch, while we brought one of our Mellanox SX1024 10/40Gb switches for Ethernet connectivity. The performance section below is based on benchmarks conducted with the OSS1A driving the workloads and the VNX5200 serving as the storage array.

EchoStreams OSS1A-1U configuration:

- 2x Intel Xeon E5-2697 v2 (2.7GHz, 30MB Cache, 12 cores)

- Intel C602A Chipset

- Memory – 16GB (2x 8GB) 1333MHz DDR3 Registered RDIMMs

- Windows Server 2012 R2 Standard

- Boot SSD: 100GB Micron RealSSD P400e

- 2x Mellanox ConnectX-3 dual-port 10GbE NIC

- 2x Emulex LightPulse LPe16002 Gen 5 Fibre Channel (8GFC, 16GFC) PCIe 3.0 Dual-Port HBA

Enterprise Synthetic Workload Analysis

Prior to initiating each of the fio synthetic benchmarks, our lab preconditions the storage into steady state under a heavy load of 16 threads with an outstanding queue of 16 per thread. The storage is then tested in set intervals with multiple thread/queue depth profiles to show performance under light and heavy usage.
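For readers who want to approximate a similar load, the preconditioning step can be expressed as a fio invocation. The sketch below is an assumption, not StorageReview's actual job definition. Only the 16 threads and the 16 outstanding I/Os per thread come from the text. The target device (`\\.\PhysicalDrive1`), runtime, access pattern, and block size are hypothetical placeholders. It selects fio's `windowsaio` engine because the test host ran Windows Server 2012 R2.

```python
def precondition_args(target: str, runtime_s: int = 3600,
                      rw: str = "randwrite", bs: str = "4k") -> list[str]:
    """Build a fio argument list for a 16-thread, QD16 preconditioning pass.

    Only numjobs=16 and iodepth=16 come from the review; target, runtime,
    rw and bs are hypothetical placeholders.
    """
    return [
        "fio",
        "--name=precondition",
        f"--filename={target}",
        "--ioengine=windowsaio",  # asynchronous I/O engine for Windows hosts
        "--direct=1",             # unbuffered I/O, bypassing the OS cache
        f"--rw={rw}",
        f"--bs={bs}",
        "--numjobs=16",           # 16 threads...
        "--iodepth=16",           # ...each keeping 16 I/Os outstanding
        "--time_based",           # run for the full runtime, not a fixed size
        f"--runtime={runtime_s}",
        "--group_reporting",      # report all 16 jobs as one aggregate
    ]


if __name__ == "__main__":
    # Hypothetical raw-disk path for the LUN presented by the array.
    print(" ".join(precondition_args(r"\\.\PhysicalDrive1")))
```

Passing the list to `subprocess.run` would execute it. Note that a write pass like this destroys any data already on the target LUN.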

Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregated)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)
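The four steady-state metrics combine read and write results in different ways: throughput is summed, maximum latency takes the worse of the two, and the other two are averaged. The minimal sketch below reads "averaged together" as a simple unweighted mean of the read and write values. That is an interpretation; an IOPS-weighted mean would differ when the mix is uneven. The `DirectionStats` class and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DirectionStats:
    """Per-direction results from one test interval (hypothetical structure)."""
    iops: float
    avg_latency_ms: float
    max_latency_ms: float
    stdev_latency_ms: float


def aggregate(read: DirectionStats, write: DirectionStats) -> dict[str, float]:
    """Combine read and write results following the four metric definitions."""
    return {
        # Throughput: read and write IOPS added together
        "throughput_iops": read.iops + write.iops,
        # Average latency: read and write averages averaged (unweighted)
        "avg_latency_ms": (read.avg_latency_ms + write.avg_latency_ms) / 2,
        # Max latency: the peak of either direction
        "max_latency_ms": max(read.max_latency_ms, write.max_latency_ms),
        # Standard deviation: read and write values averaged (unweighted)
        "stdev_latency_ms": (read.stdev_latency_ms + write.stdev_latency_ms) / 2,
    }


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only
    r = DirectionStats(iops=7000, avg_latency_ms=1.0, max_latency_ms=20.0, stdev_latency_ms=0.5)
    w = DirectionStats(iops=3000, avg_latency_ms=3.0, max_latency_ms=50.0, stdev_latency_ms=1.5)
    print(aggregate(r, w))
```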

This synthetic analysis incorporates four profiles which are widely used in manufacturer specifications and benchmarks:

- 4k random – 100% Read and 100% Write

- 8k sequential – 100% Read and 100% Write

- 8k random – 70% Read/30% Write

- 128k sequential – 100% Read and 100% Write
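These profiles map naturally onto fio's `rw` and `bs` options, and `rwmixread` expresses the 70/30 mix. The table below is a sketch that assumes fio's standard option names. The profile labels and the `profile_args` helper are hypothetical. Thread count and queue depth are passed in per test interval, so the same profile can be swept from light to heavy load.

```python
# Hypothetical mapping of the four synthetic profiles to fio options
PROFILES = {
    "4k random read":        {"rw": "randread",  "bs": "4k"},
    "4k random write":       {"rw": "randwrite", "bs": "4k"},
    "8k sequential read":    {"rw": "read",      "bs": "8k"},
    "8k sequential write":   {"rw": "write",     "bs": "8k"},
    "8k random 70/30":       {"rw": "randrw",    "bs": "8k", "rwmixread": "70"},
    "128k sequential read":  {"rw": "read",      "bs": "128k"},
    "128k sequential write": {"rw": "write",     "bs": "128k"},
}


def profile_args(name: str, threads: int, queue_depth: int) -> list[str]:
    """Return fio options for one profile at a given thread/queue depth level."""
    args = [f"--{key}={value}" for key, value in PROFILES[name].items()]
    args += [f"--numjobs={threads}", f"--iodepth={queue_depth}"]
    return args


if __name__ == "__main__":
    for name in PROFILES:
        print(name, profile_args(name, 16, 16))
```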

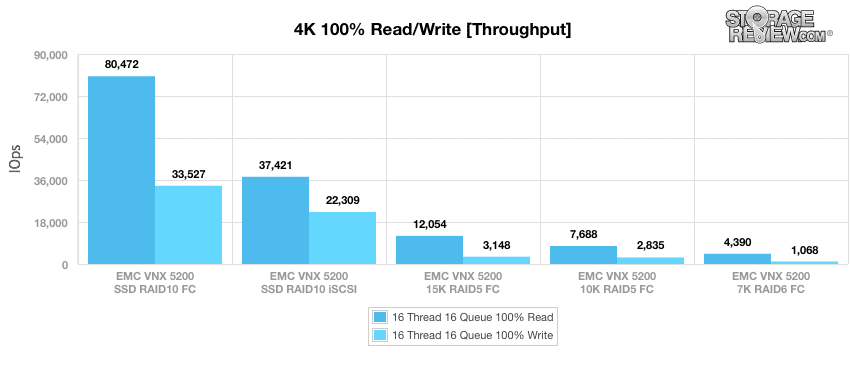

In the 4K random throughput test, the VNX5200 configured with SSDs in RAID10 and accessed by the EchoStreams OSS1A over Fibre Channel posted 80,472 IOPS read and 33,527 IOPS write. Using the same SSDs in RAID10 with our iSCSI block-level test, the VNX5200 achieved 37,421 IOPS read and 22,309 IOPS write. Switching to 15K HDDs in RAID5 over Fibre Channel produced 12,054 IOPS read and 3,148 IOPS write, while the 10K HDD configuration hit 7,688 IOPS read and 2,835 IOPS write. With 7K HDDs in RAID6, also over Fibre Channel, the EchoStreams OSS1A and VNX5200 posted 4,390 IOPS read and 1,068 IOPS write.

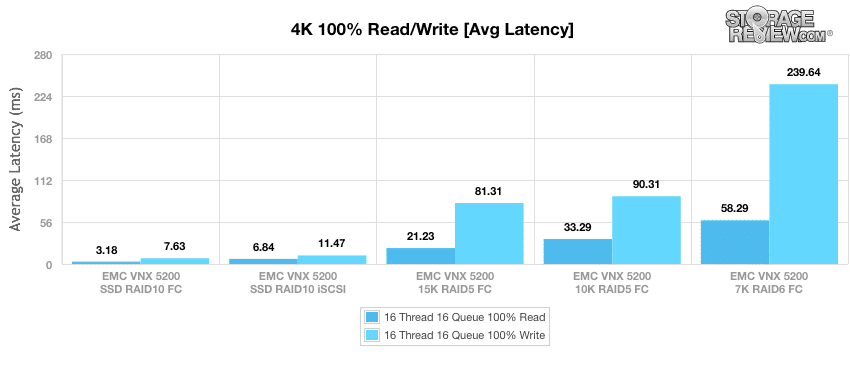

In average latency, the SSD RAID10 configuration over Fibre Channel showed 3.18ms read and 7.63ms write. Using the same SSDs with our iSCSI block-level test, the system posted 6.84ms read and 11.47ms write. Switching to 15K HDDs in RAID5 over Fibre Channel showed 21.23ms read and 81.31ms write, while the 10K HDD configuration posted 33.29ms read and 90.31ms write. With 7K HDDs in RAID6, the VNX5200 posted 58.29ms read and 239.64ms write.
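The average-latency figures track the throughput figures closely through Little's law. With a fixed number of I/Os in flight, mean latency equals the number of outstanding I/Os divided by IOPS. The sketch below assumes the 4K results were captured at the same 16T/16Q load used for preconditioning (256 outstanding I/Os). The IOPS and latency values are the ones reported for the 4K tests; the dictionary labels and the helper name are hypothetical.

```python
OUTSTANDING_IOS = 16 * 16  # assumed: 16 threads x 16 outstanding I/Os per thread

# 4K random results from the review: label -> (IOPS, reported average latency in ms)
RESULTS_4K = {
    "SSD RAID10 FC read":     (80_472, 3.18),
    "SSD RAID10 FC write":    (33_527, 7.63),
    "SSD RAID10 iSCSI read":  (37_421, 6.84),
    "SSD RAID10 iSCSI write": (22_309, 11.47),
    "15K HDD RAID5 FC read":  (12_054, 21.23),
    "15K HDD RAID5 FC write": (3_148, 81.31),
    "10K HDD FC read":        (7_688, 33.29),
    "10K HDD FC write":       (2_835, 90.31),
    "7K HDD RAID6 FC read":   (4_390, 58.29),
    "7K HDD RAID6 FC write":  (1_068, 239.64),
}


def littles_law_latency_ms(iops: float, outstanding: int = OUTSTANDING_IOS) -> float:
    """Little's law: mean latency = I/Os in flight / completion rate."""
    return outstanding / iops * 1000.0  # seconds -> milliseconds


for label, (iops, reported_ms) in RESULTS_4K.items():
    predicted_ms = littles_law_latency_ms(iops)
    error_pct = (predicted_ms - reported_ms) / reported_ms * 100
    print(f"{label:24s} predicted {predicted_ms:7.2f} ms, "
          f"reported {reported_ms:7.2f} ms ({error_pct:+.2f}%)")
```

Every predicted value lands within 0.1% of the reported average. This is consistent with the host keeping all 256 I/Os in flight throughout each run.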

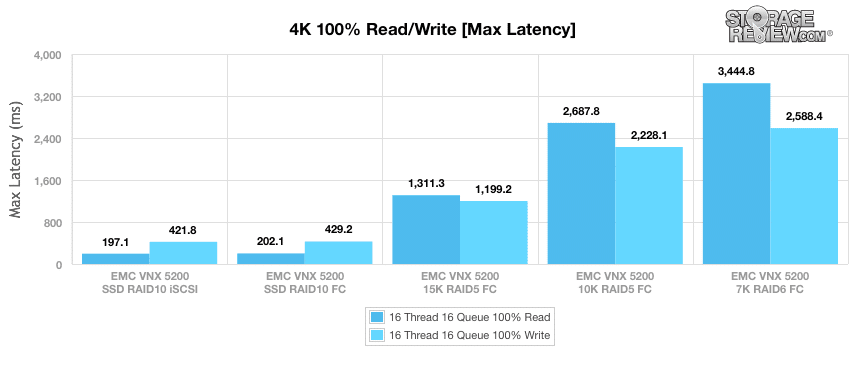

With SSDs in RAID10 and our iSCSI block-level test, maximum latency reached 197ms for read operations and 421.8ms for write operations. Using the same SSDs over Fibre Channel, the system measured 202.1ms read and 429.2ms write. Switching to 15K HDDs in RAID5 over Fibre Channel recorded 1,311.3ms read and 1,199.2ms write, while the 10K HDD configuration posted 2,687.8ms read and 2,228.1ms write. With 7K HDDs in RAID6, also over Fibre Channel, the OSS1A and VNX5200 posted 3,444.8ms read and 2,588.4ms write.

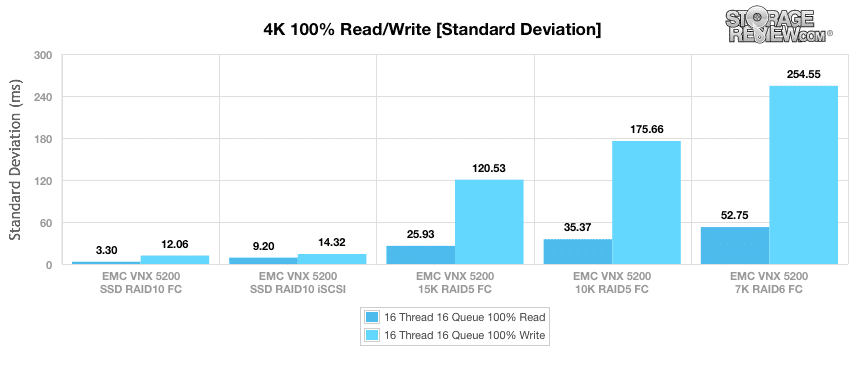

Our last 4K benchmark is standard deviation, which measures the consistency of the system's latency performance. With SSDs in RAID10 and our iSCSI block-level test, the OSS1A and VNX5200 posted 3.30ms read and 12.06ms write. The same SSDs over Fibre Channel showed 9.20ms read and 14.32ms write. Switching to 15K HDDs in RAID5 over Fibre Channel recorded 25.93ms read and 120.53ms write, while the 10K HDD configuration posted 35.37ms read and 175.66ms write. With 7K HDDs in RAID6, also over Fibre Channel, the VNX5200 posted 52.75ms read and 254.55ms write.

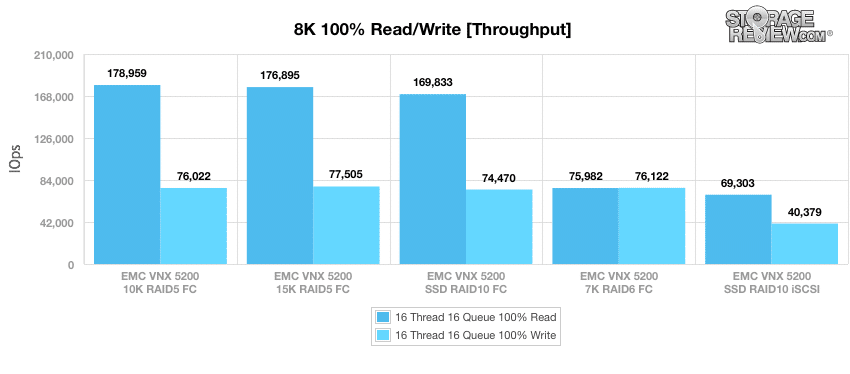

Our next benchmark uses a sequential workload composed of 100% read operations and then 100% write operations with an 8k transfer size. Here, the OSS1A measured 178,959 IOPS read and 76,022 IOPS write when the VNX5200 was outfitted with 10K HDDs in RAID5 over Fibre Channel. Using 15K HDDs in RAID5 over Fibre Channel showed 176,895 IOPS read and 77,505 IOPS write. Switching to SSDs in RAID10 over Fibre Channel recorded 169,833 IOPS read and 74,470 IOPS write, while the SSD iSCSI block-level test showed 69,303 IOPS read and 40,379 IOPS write. With 7K HDDs in RAID6 over Fibre Channel, the VNX5200 posted 75,982 IOPS read and 76,122 IOPS write.
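This test reports IOPS while the 128k test reports GB/s, so converting between the two helps put them side by side. The helper below assumes an 8k transfer is 8,192 bytes (fio's default interpretation of `8k`) and reports decimal megabytes per second. The function and constant names are hypothetical.

```python
def iops_to_mb_per_s(iops: float, block_bytes: int) -> float:
    """Convert an I/O rate into decimal MB/s (1 MB = 1,000,000 bytes)."""
    return iops * block_bytes / 1_000_000


BLOCK_8K = 8 * 1024  # assumed: 8k = 8,192 bytes

# 8k sequential read results from the review (IOPS)
for label, iops in [("10K HDD RAID5 FC", 178_959),
                    ("15K HDD RAID5 FC", 176_895),
                    ("SSD RAID10 FC", 169_833),
                    ("SSD iSCSI", 69_303),
                    ("7K HDD RAID6 FC", 75_982)]:
    print(f"{label}: {iops_to_mb_per_s(iops, BLOCK_8K):,.0f} MB/s read")
```

By this measure, the fastest 8k sequential read result corresponds to roughly 1.47GB/s.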

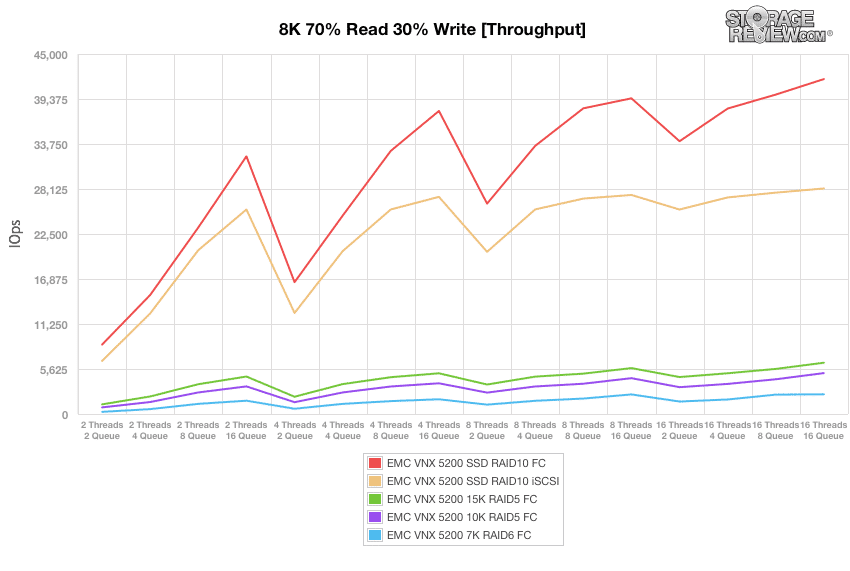

Our next series of workloads is composed of a mixture of 8k read (70%) and write (30%) operations, scaling up to 16 threads with a queue depth of 16 (16T/16Q). We start with throughput. With SSDs in RAID10 over Fibre Channel, the OSS1A and the VNX5200 posted a range of 8,673 IOPS to 41,866 IOPS by 16T/16Q. The same SSDs in our iSCSI block-level test showed a range of 6,631 IOPS to 28,193 IOPS. Switching to 15K HDDs in RAID5 over Fibre Channel recorded a range of 1,204 IOPS to 6,411 IOPS, while the 10K HDD configuration ranged from 826 IOPS to 5,113 IOPS by 16T/16Q. With 7K HDDs in RAID6, also over Fibre Channel, the system posted a range of 267 IOPS to 2,467 IOPS.

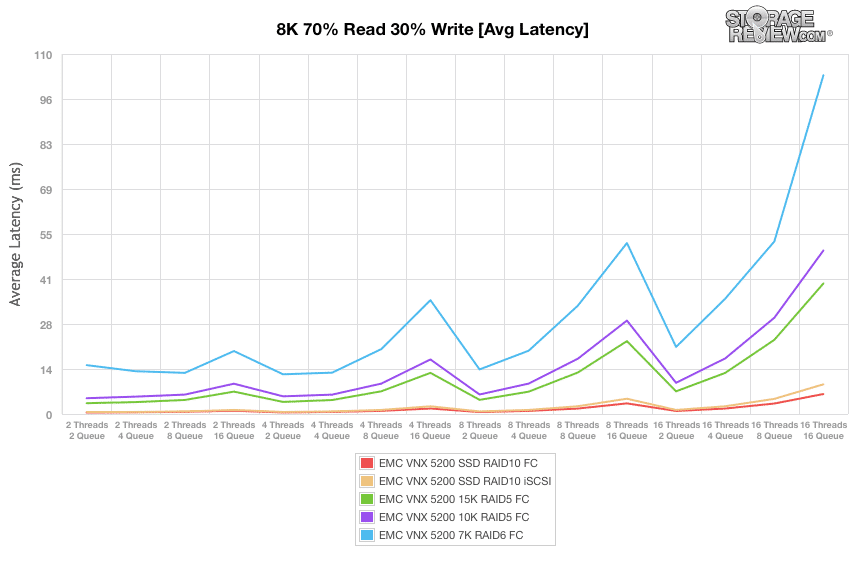

Next, we looked at average latency. With the VNX5200 configured with SSDs in RAID10 over Fibre Channel, the OSS1A measured a range of 0.45ms to 6.11ms by 16T/16Q. The same SSDs in our iSCSI block-level test showed a range of 0.59ms to 9.07ms. Switching to 15K HDDs in RAID5 over Fibre Channel recorded a range of 3.31ms to 39.89ms, while the 10K HDD configuration posted 4.83ms initially and 49.97ms by 16T/16Q. With 7K HDDs in RAID6, also over Fibre Channel, the VNX5200 posted a range of 14.93ms to 103.52ms.

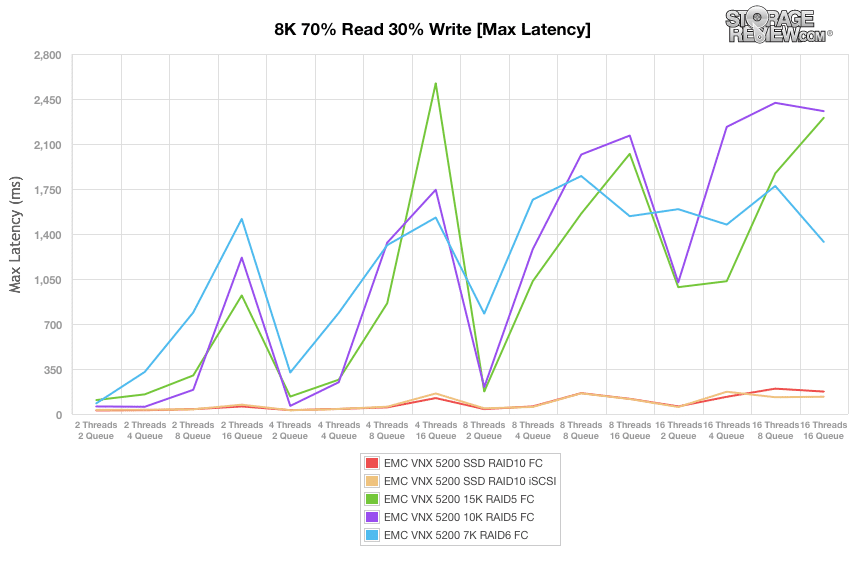

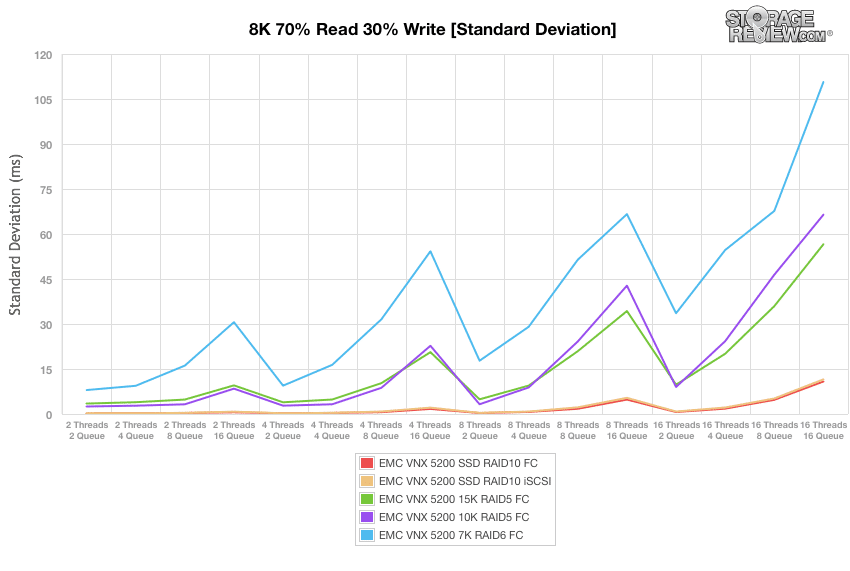

Maximum latency with SSDs in RAID10 over Fibre Channel ranged from 27.85ms up to 174.43ms by 16T/16Q. The same SSDs in our iSCSI block-level test showed a range of 31.78ms to 134.48ms. Switching to 15K HDDs in RAID5 over Fibre Channel recorded a range of 108.48ms to 2,303.72ms, while the 10K HDD configuration showed a range of 58.83ms to 2,355.53ms by 16T/16Q. With 7K HDDs in RAID6, also over Fibre Channel, the VNX5200 posted a maximum latency range of 82.74ms to 1,338.61ms.

Our next chart plots the standard deviation of latency during the 8k 70% read/30% write operations. With SSDs in RAID10 over Fibre Channel, the VNX5200 posted a range of just 0.18ms to 10.83ms by 16T/16Q. The same SSDs in the iSCSI block-level test showed a similar range of 0.21ms to 11.54ms. Switching to 15K HDDs in RAID5 over Fibre Channel recorded a range of 3.48ms to 56.58ms, while the 10K HDD configuration showed 2.5ms initially and 66.44ms by 16T/16Q. With 7K HDDs in RAID6, also over Fibre Channel, the VNX5200 posted a standard deviation range of 7.98ms to 110.68ms.

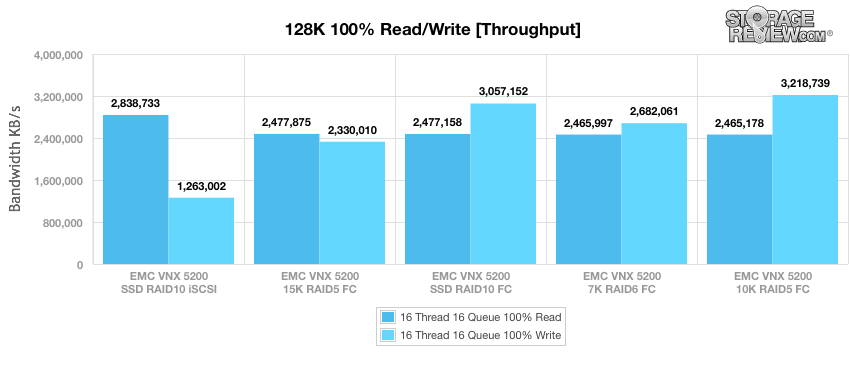

Our final synthetic benchmark used sequential 128k transfers with a workload of 100% read and then 100% write operations. In this scenario, the OSS1A measured 2.84GB/s read and 1.26GB/s write when the VNX5200 was configured with SSDs in our iSCSI block-level test. Using 15K HDDs in RAID5 over Fibre Channel showed 2.48GB/s read and 2.33GB/s write. Switching back to SSDs in RAID10 over Fibre Channel, the OSS1A recorded 2.48GB/s read and 3.06GB/s write. With 7K HDDs in RAID6 over Fibre Channel, the VNX5200 reached 2.47GB/s read and 2.68GB/s write, while the 10K HDD configuration posted 2.47GB/s read and 3.22GB/s write.
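Going the other way, the 128k results can be expressed as I/O rates. The sketch assumes the reported GB/s figures are decimal (10^9 bytes per second) and that a 128k transfer is 131,072 bytes. If the charts use binary units, the figures shift by about 7%. The function name and dictionary labels are hypothetical.

```python
def gb_per_s_to_iops(gb_per_s: float, block_bytes: int = 128 * 1024) -> float:
    """Convert decimal GB/s into I/O operations per second for a block size."""
    return gb_per_s * 1e9 / block_bytes


# Sequential 128k results from the review, in GB/s: label -> (read, write)
RESULTS_128K = {
    "SSD iSCSI":        (2.84, 1.26),
    "15K HDD RAID5 FC": (2.48, 2.33),
    "SSD RAID10 FC":    (2.48, 3.06),
    "7K HDD RAID6 FC":  (2.47, 2.68),
    "10K HDD FC":       (2.47, 3.22),
}

for label, (read, write) in RESULTS_128K.items():
    print(f"{label}: {gb_per_s_to_iops(read):,.0f} read IOPS, "
          f"{gb_per_s_to_iops(write):,.0f} write IOPS")
```

The 2.84GB/s iSCSI read result works out to roughly 21,700 I/Os per second. That is far fewer operations per second than the 8k sequential test, yet it moves more data.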

Conclusion

Since the EchoStreams OSS1A first arrived at StorageReview, it has become an important part of our laboratory by allowing us to create a mobile version of our enterprise storage benchmark environment. We have found the chassis design to be a thoughtful compromise between flexibility and size. The interior layout also allows for relatively painless reconfiguration, for example when swapping PCIe expansion cards to interface with a variety of network protocols and environments. None of this comes at the expense of performance, since the platform supports dual Intel Xeon E5-2600 v2 processors and up to 384GB of DDR3 memory.

This combination of performance and small form factor makes the EchoStreams OSS1A a versatile platform for an application server that relies on external shared storage. Its compact size means it takes up less space in the datacenter and is portable enough to deploy as a field unit at a remote office or similar site. The chassis can also be tailored to the use case with different front-storage options, offering up to four 7mm bays for SSDs where faster on-board storage is required. Overall, the EchoStreams OSS1A offers a great deal of performance in a package only 21″ deep.

Pros

- Short-depth 1U form factor makes the system more portable and able to be installed in situations where space is at a premium

- Enough processor power and RAM capacity to tackle a variety of workloads

Cons

- Limited internal storage capacity due to chassis size

The Bottom Line

The EchoStreams OSS1A offers the performance and flexibility to take on a variety of tasks, packaged in an impressive short-depth 1U chassis that simplifies transportation and installation in space-constrained locations. This combination has made the OSS1A an indispensable component in StorageReview’s On-site Testing Toolkit.
