Memory has always been the one type of hardware that delivers on the promise of massive speed. It has a few drawbacks, though: it is expensive, it is limited in capacity, and it isn’t persistent (DRAM must stay powered on to retain data, and even then it needs periodic refreshing). In 2015, Intel and Micron announced 3D XPoint, a technology that would be the first step toward persistent memory. While 3D XPoint is not nearly as fast as DRAM, it is much faster than flash storage and much more cost-effective than DRAM. MemVerge was launched to realize the potential of persistent memory.

The first 3D XPoint devices were aimed at storage, with Intel using the media to build faster SSDs. Later, Intel announced a persistent memory (PMem) module that sits in the DIMM slot and acts as an expansion of DRAM. Where memory once served fast needs and storage served big needs, PMem began to blur the line. MemVerge is attempting to blend the best of both worlds by delivering DRAM performance with large capacity and persistence.

MemVerge has been surveying the persistent memory landscape and developed software that lets PMem reach its potential, rather than using it only as a cache, speed tier, or memory expander. The company uses what it calls Big Memory computing to transform DRAM-only environments into lower-cost and, more importantly, higher-density memory environments that use both DRAM and PMem. It does this by virtualizing the two into a pool of software-defined memory that also delivers software-defined services. In addition, the software provides an abstraction layer that allows all applications in a data center to benefit from new types of memory, memory interconnects, processors, and memory allocators to address modern and emerging applications and workloads.
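MemVerge does not describe its page-placement policy here, so the following is only a generic sketch of how two tiers can be presented as one pool: recently used pages live in a small DRAM tier, and the least recently used pages are demoted to a larger PMem tier. The class name `ToyTieredMemory`, the page-level model, and the LRU policy are all assumptions for illustration, not Memory Machine’s implementation.

```python
"""Toy two-tier memory pool: a small DRAM tier in front of a larger PMem tier.

Hypothetical illustration only; not MemVerge's placement policy or API.
Pages are modeled as page_id -> bytes, and a plain LRU policy (an assumption)
decides which pages stay in the DRAM tier.
"""
from __future__ import annotations

from collections import OrderedDict


class ToyTieredMemory:
    def __init__(self, dram_pages: int) -> None:
        self.dram_pages = dram_pages                          # DRAM tier capacity, in pages
        self.dram: OrderedDict[int, bytes] = OrderedDict()    # hot tier, least recent first
        self.pmem: dict[int, bytes] = {}                      # capacity tier
        self.dram_hits = 0
        self.pmem_hits = 0

    def _place_in_dram(self, page_id: int, data: bytes) -> None:
        # Insert or refresh the page as most recently used, then demote
        # least recently used pages to PMem until DRAM fits its capacity.
        self.dram[page_id] = data
        self.dram.move_to_end(page_id)
        while len(self.dram) > self.dram_pages:
            old_id, old_data = self.dram.popitem(last=False)
            self.pmem[old_id] = old_data

    def write(self, page_id: int, data: bytes) -> None:
        self.pmem.pop(page_id, None)  # drop any stale copy in the PMem tier
        self._place_in_dram(page_id, data)

    def read(self, page_id: int) -> bytes:
        if page_id in self.dram:
            self.dram_hits += 1
            self.dram.move_to_end(page_id)
            return self.dram[page_id]
        # Miss in DRAM: serve the page from PMem and promote it to DRAM.
        self.pmem_hits += 1
        data = self.pmem.pop(page_id)
        self._place_in_dram(page_id, data)
        return data


if __name__ == "__main__":
    pool = ToyTieredMemory(dram_pages=2)
    for i in range(4):
        pool.write(i, bytes([i]))
    pool.read(0)  # served from PMem, promoted to DRAM
    pool.read(0)  # now a DRAM hit
    print(pool.dram_hits, pool.pmem_hits)  # 1 1
```

LRU is simply the easiest policy to show; a production tiering engine could also weigh access frequency and the cost of moving data between tiers.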

Back in September of last year, MemVerge announced general availability of its Memory Machine. The software is offered in two flavors: Standard Version, which virtualizes byte-addressable DRAM and PMem to speed up apps and lower costs but does not enable persistence; and Advanced Version, which adds persistence as well as enterprise-class memory services based on ZeroIO in-memory snapshots.

ZeroIO in-memory snapshots, as the name implies, capture DRAM and PMem with zero I/O to storage. This turns DRAM, which is normally volatile and low-availability, into a high-availability tier. ZeroIO snapshots also enable what MemVerge calls time travel: rolling back to a previous snapshot. An AutoSave feature rolls apps back to the previous snapshot if there is a crash. The snapshots can also be used to produce Thin Clones without consuming additional memory resources. Finally, snapshots can be migrated to other servers and used to create a new app instance.
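To make the snapshot, time travel, and AutoSave ideas concrete, here is a toy Python model. It is hypothetical: application memory is a dict of page IDs to bytes, snapshots are kept in memory so no storage I/O occurs, and `ToyMemorySnapshots` and its methods are invented names, not MemVerge’s API.

```python
"""Toy model of in-memory snapshots, time travel, and auto-restore.

Hypothetical illustration only; not MemVerge's ZeroIO implementation or API.
Application memory is modeled as page_id -> bytes, and snapshots are kept
in memory, so taking or restoring one involves no storage I/O.
"""
from __future__ import annotations


class ToyMemorySnapshots:
    def __init__(self) -> None:
        self.pages: dict[int, bytes] = {}            # live application memory
        self.snapshots: list[dict[int, bytes]] = []  # snapshot history, oldest first

    def write(self, page_id: int, data: bytes) -> None:
        self.pages[page_id] = data

    def snapshot(self) -> int:
        # Copy only the page table; bytes objects are immutable, so the
        # snapshot shares page contents with live memory instead of duplicating them.
        self.snapshots.append(dict(self.pages))
        return len(self.snapshots) - 1

    def restore(self, snap_id: int) -> None:
        # "Time travel": roll live memory back to any earlier snapshot.
        self.pages = dict(self.snapshots[snap_id])

    def crash_and_autosave_restore(self) -> None:
        # A simulated crash wipes live memory; an AutoSave-style policy then
        # rolls back to the most recent snapshot, if one exists.
        self.pages = {}
        if self.snapshots:
            self.restore(len(self.snapshots) - 1)


if __name__ == "__main__":
    mem = ToyMemorySnapshots()
    mem.write(0, b"v1")
    first = mem.snapshot()
    mem.write(0, b"v2")
    mem.snapshot()
    mem.write(0, b"v3")                 # not captured by any snapshot
    mem.crash_and_autosave_restore()
    print(mem.pages[0])                 # b'v2'
    mem.restore(first)
    print(mem.pages[0])                 # b'v1'
```

Writes made after the latest snapshot (`v3` above) are lost on recovery, which is why snapshot frequency matters in practice.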

MemVerge Memory Machine Management

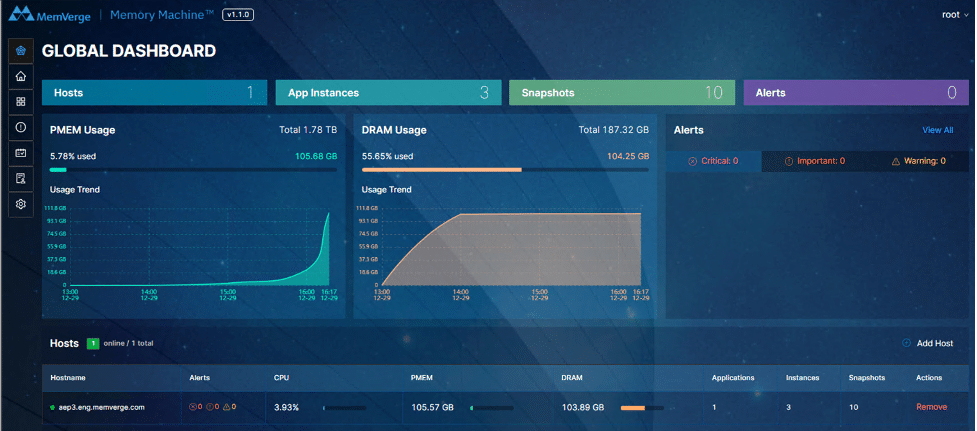

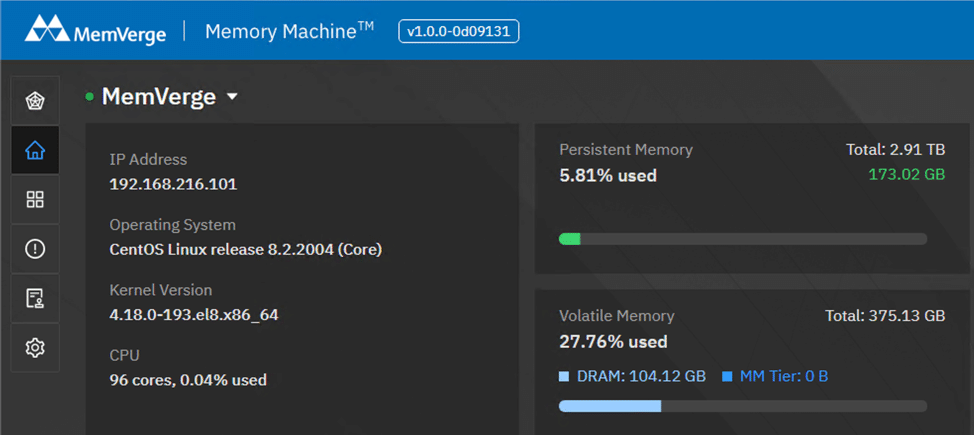

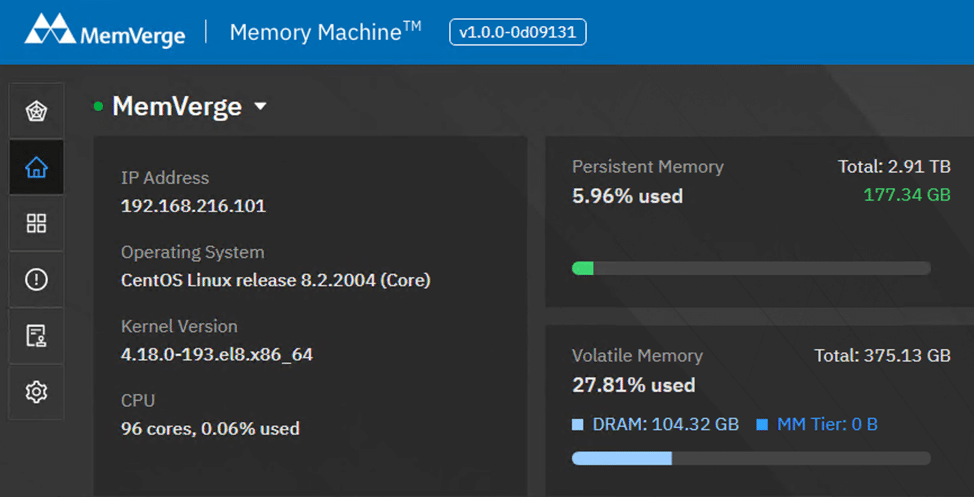

MemVerge Memory Machine has a fairly slick GUI. The global dashboard shows the elements we’ve come to expect from storage, the difference being that DRAM and PMem are the main resources monitored, and their usage is easy to see. Across the top are tabs for hosts, app instances, snapshots, and alerts.

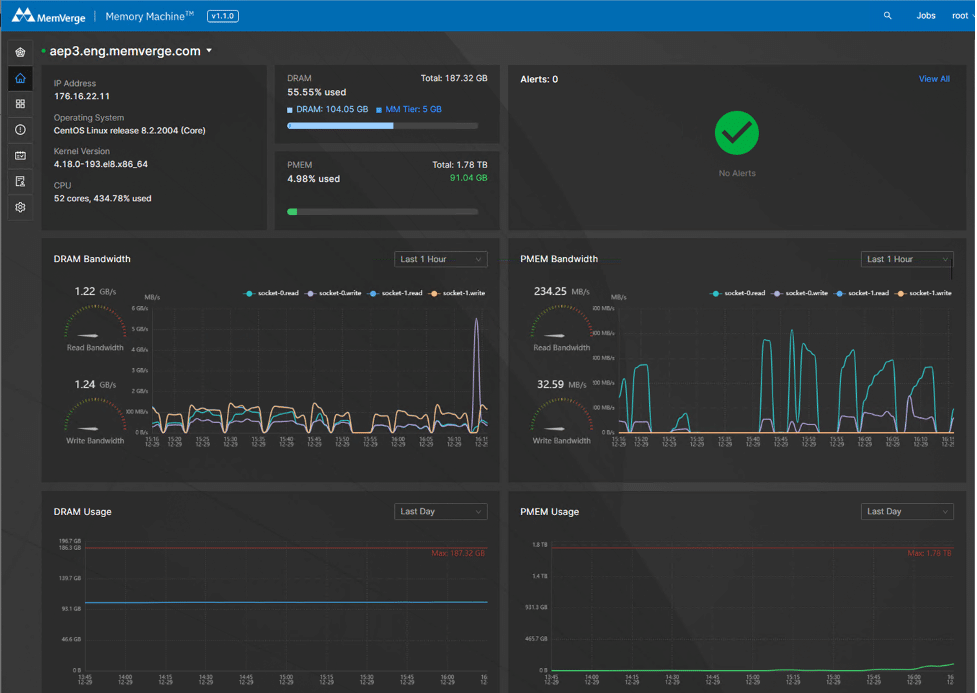

Clicking the home button on the left brings up basic information about the system (IP address, OS, kernel version, CPU) as well as a deep dive into memory and PMem usage and performance.

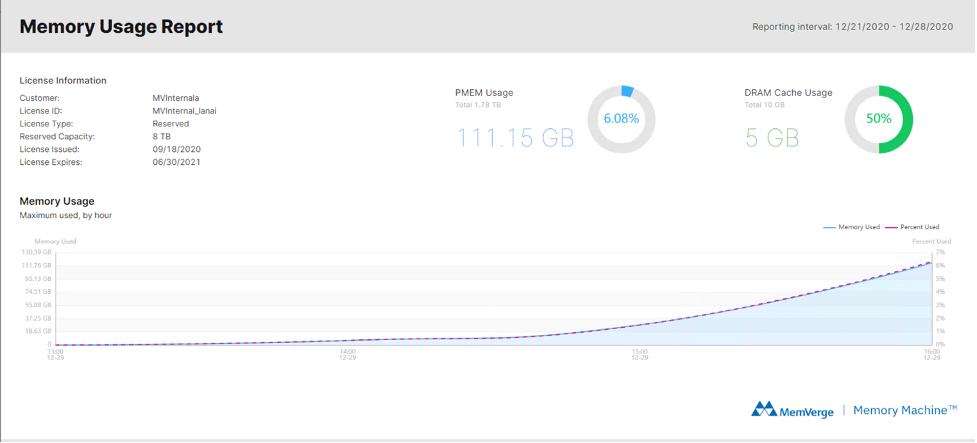

Users can drill down further by pulling up a memory report, which shows hourly usage broken down by type (PMem vs. DRAM).

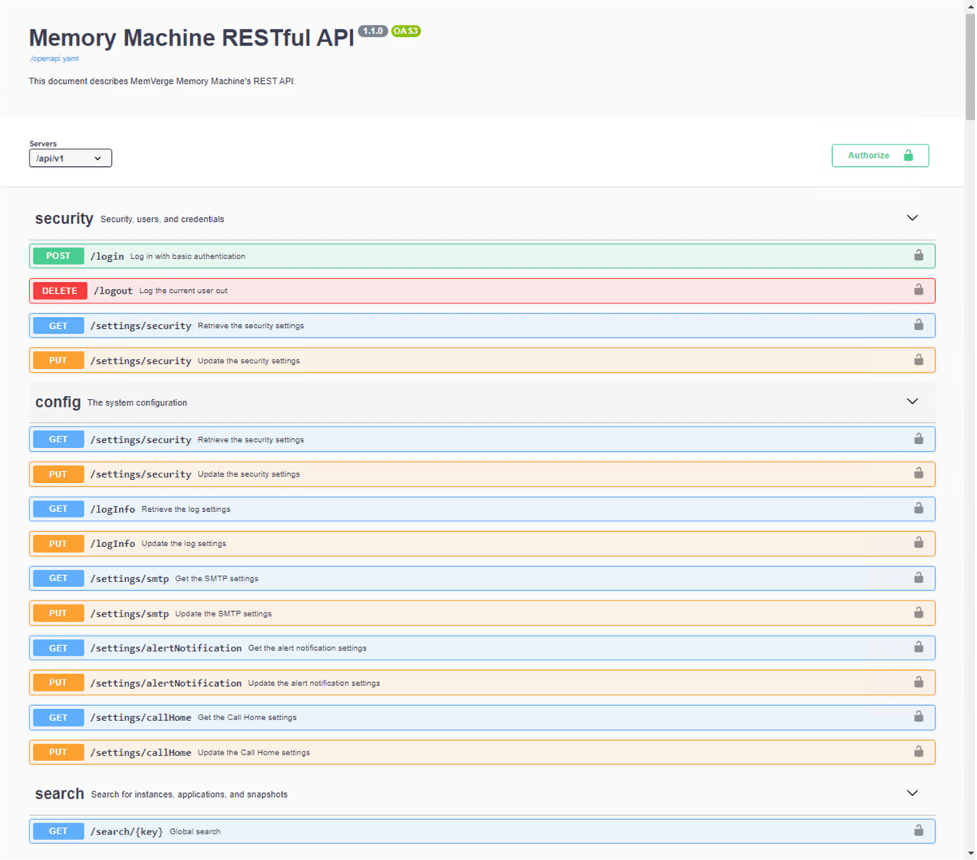

Users can also view and configure Memory Machine’s RESTful APIs.

Snapshots are a big part of Memory Machine, but we will cover them in our performance section.

Configuration

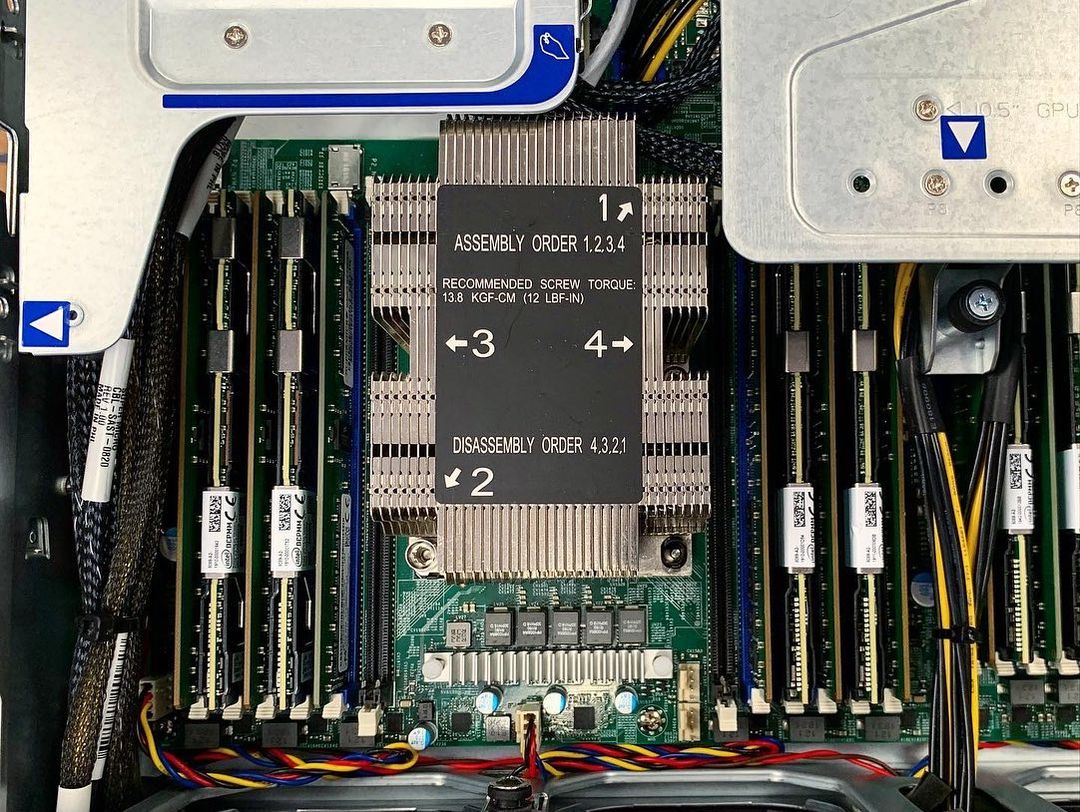

In our lab, we ran MemVerge Memory Machine on a Supermicro SYS-2029U-TN24R4T with the following specifications:

| Hardware Model | Supermicro SYS-2029U-TN24R4T |
|---|---|
| CPU | 2x Intel Xeon Platinum 8270 @ 2.70GHz, 26 cores each |
| DRAM | DDR4 192GB |
| PMem | 12x 128GB (1,536GB total) |
| OS SSD | 1TB SATA SSD |
| OS | CentOS 8.2.2004 |
| Kernel | 4.18.0-193.19.1.el8_2.x86_64 |

MemVerge Memory Machine Performance

Our normal battery of tests would not make sense here, as MemVerge Memory Machine is aimed at workloads and applications that run in memory, while our usual benchmarks represent the normal-to-high-stress workloads seen in day-to-day IT operations. Instead, we will look at a few different tests, focusing specifically on how DRAM, PMem, and DRAM + PMem compare. For this review, we used the kdb+ bulk insert and read tests, as well as Redis Quick Recovery with ZeroIO Snapshot and Redis Clone with ZeroIO Snapshot.

KDB Performance Testing

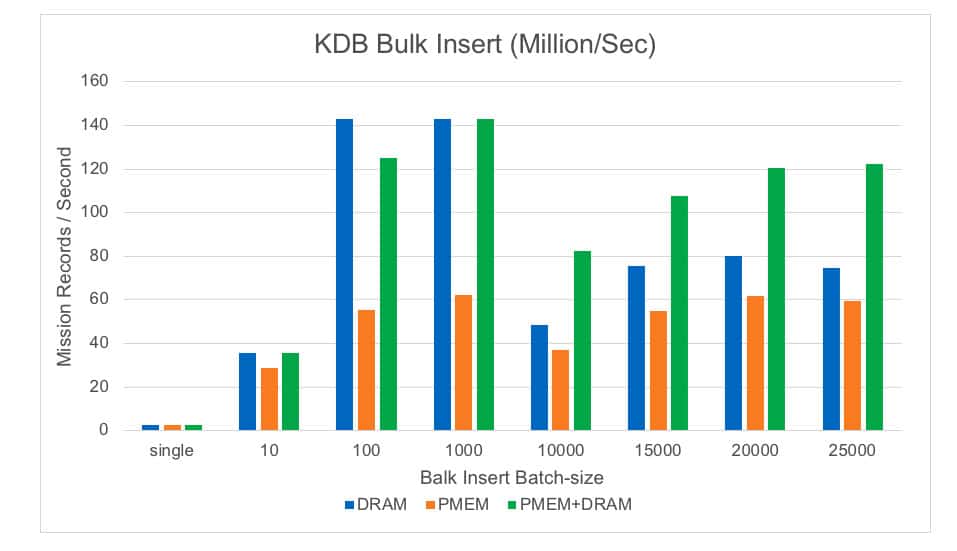

Kx’s kdb+ is an in-memory time-series database. It is known for its speed and efficiency and, for that reason, is very popular in the financial services industry. A major constraint for kdb+ is limited DRAM capacity. Memory Machine fits well here, letting kdb+ take full advantage of PMem to expand its memory space with performance similar to that of DRAM. For the bulk insert test, we used batch sizes of 1, 10, 100, 1,000, 10,000, 15,000, 20,000, and 25,000 inserts and measured throughput in millions of inserts per second.

The kdb+ bulk insert test produced some interesting results. With a batch size of one, all three configurations performed similarly. At smaller batch sizes, DRAM is the top performer. As batch sizes grow past 10,000, Memory Machine takes the lead over DRAM, with PMem trailing at each batch size.
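The bulk insert metric is throughput at a fixed batch size: rows inserted divided by elapsed time, with roughly the same total work split into batches of different sizes. The harness below is a hypothetical sketch of that measurement; the `insert_batch` callback, the synthetic row format, and the plain Python list used as the target are assumptions standing in for kdb+, whose actual test harness is not described here.

```python
"""Sketch of a bulk-insert throughput sweep across batch sizes.

Hypothetical harness: a Python list stands in for the kdb+ table, and
`insert_batch` is any callable that appends a batch of rows.
"""
from __future__ import annotations

import time
from typing import Callable, Sequence, Tuple

Row = Tuple[int, float]  # synthetic (id, value) row


def bulk_insert_throughput(
    insert_batch: Callable[[list[Row]], None],
    batch_sizes: Sequence[int],
    total_rows: int = 100_000,
) -> dict[int, float]:
    """Return rows inserted per second for each batch size."""
    results: dict[int, float] = {}
    for size in batch_sizes:
        n_batches = max(1, total_rows // size)        # similar total work per size
        batch = [(i, float(i)) for i in range(size)]  # synthetic rows
        start = time.perf_counter()
        for _ in range(n_batches):
            insert_batch(batch)
        elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero reading
        results[size] = n_batches * size / elapsed
    return results


if __name__ == "__main__":
    table = []
    sizes = [1, 10, 100, 1_000, 10_000, 15_000, 20_000, 25_000]
    for size, rate in bulk_insert_throughput(table.extend, sizes).items():
        print(f"batch {size:>6}: {rate / 1e6:.2f} million rows/s")
```

Larger batches amortize per-call overhead across more rows, which is why batch size is the variable being swept.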

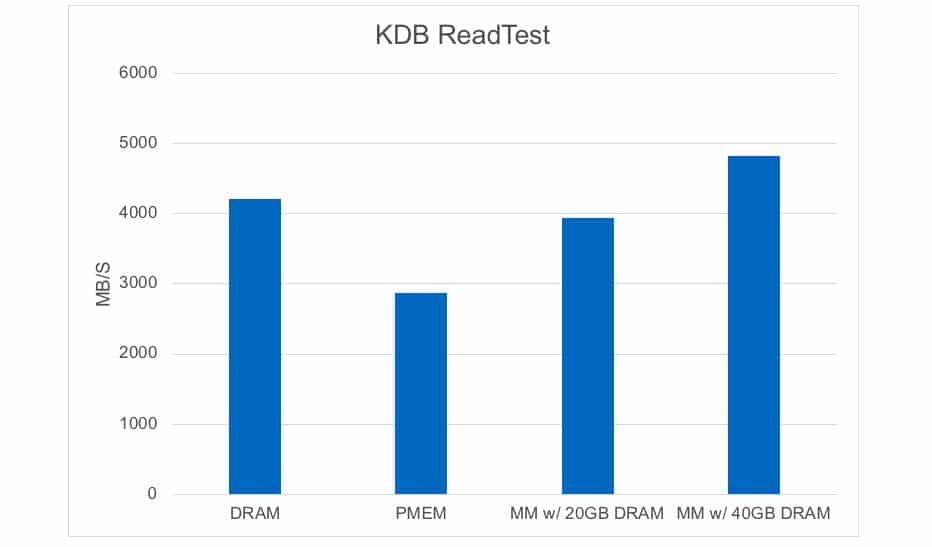

Next, we looked at kdb+ with a read test. Here the setup is a bit different. The read test itself is the same throughout, but this time we compared DRAM only, PMem only, and PMem with either a 20GB or 40GB DRAM cache. DRAM only hit 4.2GB/s and PMem only hit 2.9GB/s. With a 20GB DRAM cache, Memory Machine came close to DRAM only at 3.9GB/s, and with a 40GB cache it delivered the highest performance at 4.8GB/s. More interesting still, the last two configurations leave plenty of DRAM free for other tasks.
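The read results are easier to compare as fractions of the DRAM-only baseline. This short calculation uses only the figures reported above; the DRAM-remaining lines assume the cache is carved out of the 192GB of DRAM in the test server, which is an assumption rather than something stated in the test description.

```python
"""Compare the kdb+ read-test results against the DRAM-only baseline."""
from __future__ import annotations

# Read throughput reported in the kdb+ read test, in GB/s.
READ_GBPS = {
    "DRAM only": 4.2,
    "PMem only": 2.9,
    "PMem + 20GB DRAM cache": 3.9,
    "PMem + 40GB DRAM cache": 4.8,
}
DRAM_TOTAL_GB = 192  # DRAM installed in the test server (see Configuration)


def relative_to(results: dict[str, float], baseline: str) -> dict[str, float]:
    """Express each result as a fraction of the baseline configuration."""
    base = results[baseline]
    return {name: value / base for name, value in results.items()}


if __name__ == "__main__":
    for name, ratio in relative_to(READ_GBPS, "DRAM only").items():
        print(f"{name:24s} {READ_GBPS[name]:.1f} GB/s  {ratio:.0%} of DRAM only")
    # Assumes the cache comes out of the server's 192GB of DRAM.
    for cache_gb in (20, 40):
        free = DRAM_TOTAL_GB - cache_gb
        print(f"{cache_gb}GB cache leaves {free}GB ({free / DRAM_TOTAL_GB:.0%}) of DRAM free")
```

It works out to roughly 69% of DRAM-only throughput for PMem only, 93% with a 20GB cache, and 114% with a 40GB cache.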

Redis Snapshot Testing

Redis is an open-source in-memory data store used as a database, cache, and message broker. Its popularity and power make it an ideal application for benchmarking MemVerge Memory Machine. When Redis runs on Memory Machine, DRAM and PMem are allocated to Redis, and MemVerge ZeroIO snapshots then save the application’s memory pages without storage I/O operations. When Redis crashes, all data captured in snapshots on PMem persists, so crash recovery simply involves pointing to the existing data in PMem, without any lifting and shifting of data from storage.
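Recovering by pointing at data that already sits in persistent memory, rather than reloading it from storage, resembles memory-mapping a persistent region. The sketch below uses Python’s `mmap` on an ordinary file as a stand-in for a file on a DAX-mounted PMem device; on a regular file system the writes still go through the page cache to storage, so this illustrates only the recovery pattern, not ZeroIO’s mechanism. The helper names are hypothetical.

```python
"""Sketch: recover state by re-mapping a persistent region instead of reloading it.

Hypothetical illustration only; not MemVerge's ZeroIO mechanism. An ordinary
file stands in for a file on a DAX-mounted PMem device.
"""
import mmap
import os
import struct
import tempfile

COUNTER = struct.Struct("<Q")  # one unsigned 64-bit counter at offset 0


def create_region(path: str, size: int = 4096) -> None:
    with open(path, "wb") as f:
        f.truncate(size)  # zero-filled region; mmap needs a non-empty file


def write_counter(path: str, value: int) -> None:
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as region:
        COUNTER.pack_into(region, 0, value)  # plain memory store into the mapping
        region.flush()                       # write the change back to the backing file


def recover_counter(path: str) -> int:
    # "Recovery" just maps the region again and reads the value in place;
    # there is no separate load or deserialization step.
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as region:
        return COUNTER.unpack_from(region, 0)[0]


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "region.bin")
    create_region(path)
    write_counter(path, 42)       # process state before the simulated crash
    print(recover_counter(path))  # 42
```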

In this section, we test the MemVerge ZeroIO snapshot for quick recovery.

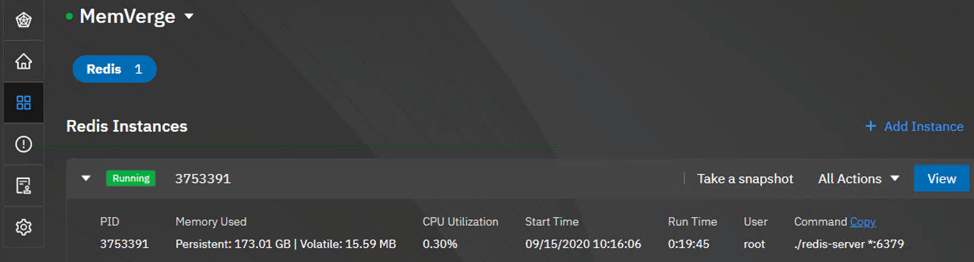

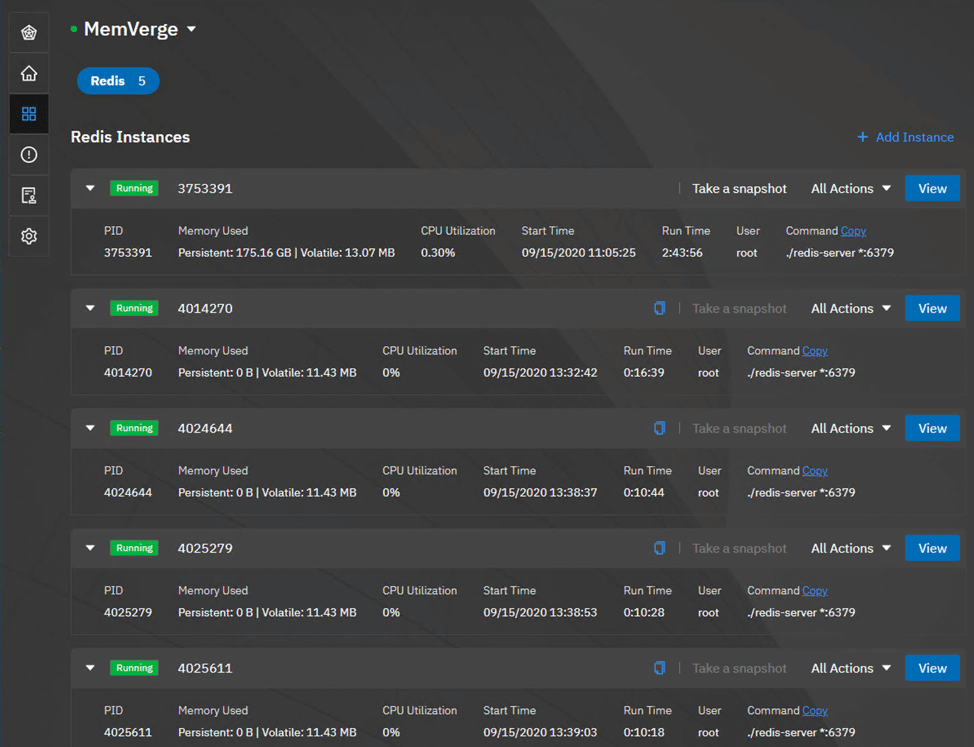

First, we’ll look at Redis Quick Recovery with ZeroIO Snapshot. Unlike the performance benchmarks, this test demonstrates functionality and ease of use. Once Redis has been installed and is running a database, we log in to Memory Machine and can see details such as how much PMem is in use.

Under the applications tab, we can see that a Redis server is running.

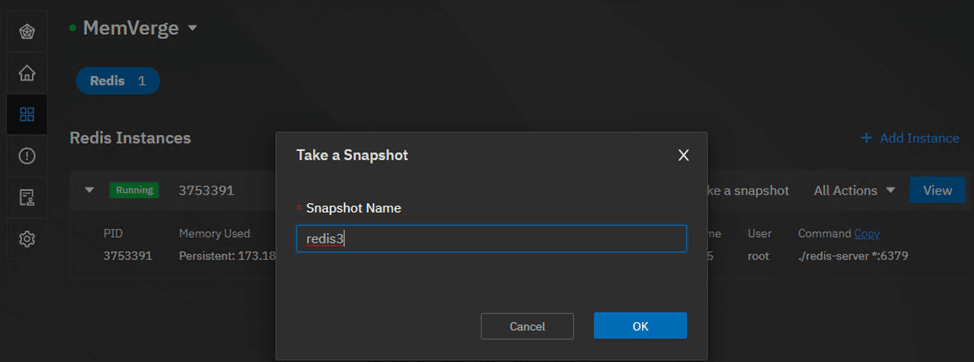

While it is running, we take a few snapshots. Obviously, restoring from a snapshot requires that snapshots were taken in the first place.

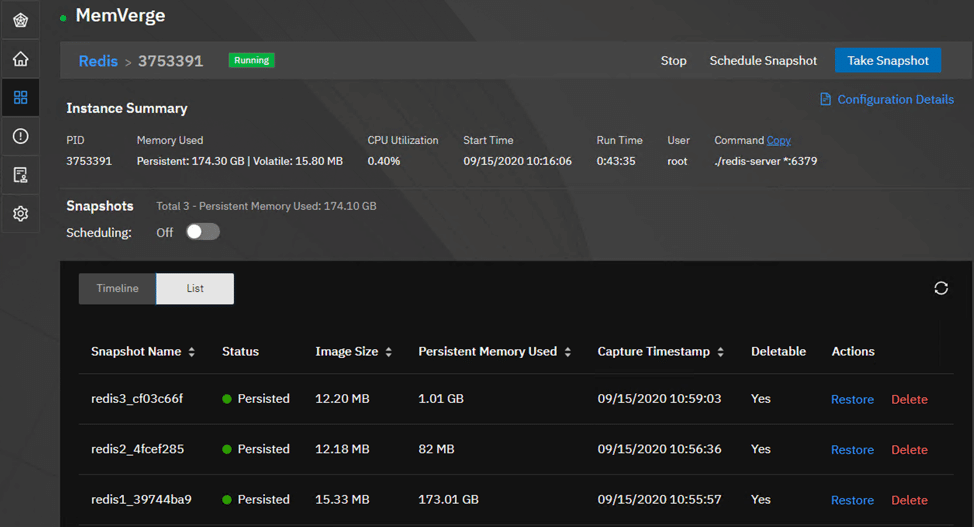

It never hurts to be safe, so we double-check that the snapshots were taken.

With the snapshots confirmed, we trigger a Redis database failure. We pick a snapshot and restore it; after a slight pause, we’re up and running again.

Redis Clone with ZeroIO Snapshot

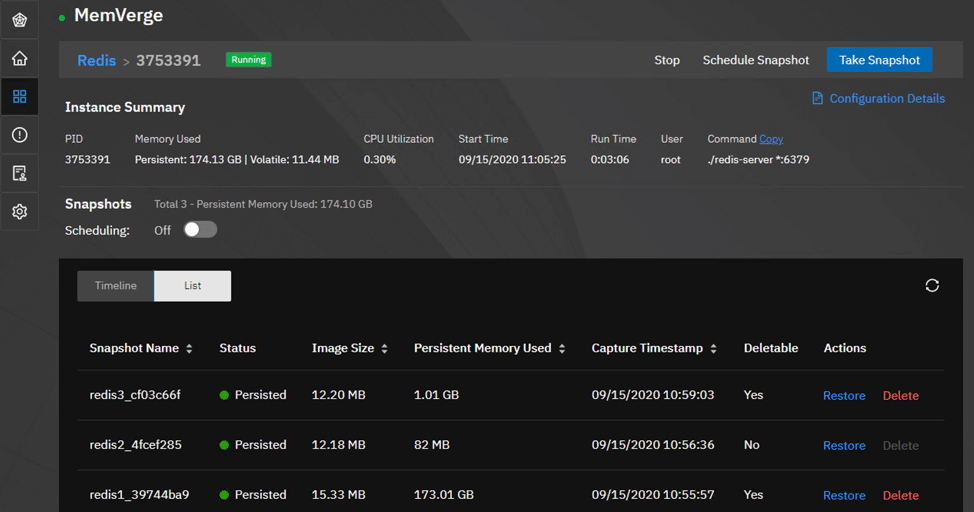

Here we repeat several of the steps above. After the snapshot is taken, we can restore it as a clone with a new namespace and a new IP address. We connect to the clone from another host and can see that it takes up about the same amount of memory.

All of the above can also be done from the command line for those who prefer.
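The review does not describe how Thin Clones avoid consuming additional memory. Copy-on-write is one common technique for that, and the toy model below assumes it: every clone reads unchanged pages from a shared snapshot and keeps private copies only of the pages it writes. `ToyThinClone` and its methods are hypothetical and are not MemVerge’s implementation.

```python
"""Toy copy-on-write clone of an in-memory snapshot.

Hypothetical illustration only; not MemVerge's Thin Clone implementation.
Each clone reads unchanged pages from the shared snapshot and privately
stores only the pages it overwrites.
"""
from __future__ import annotations

from types import MappingProxyType
from typing import Dict


class ToyThinClone:
    def __init__(self, snapshot: Dict[int, bytes]) -> None:
        self._base = MappingProxyType(snapshot)  # read-only view shared by all clones
        self._delta: Dict[int, bytes] = {}       # pages this clone has overwritten

    def read(self, page_id: int) -> bytes:
        if page_id in self._delta:
            return self._delta[page_id]
        return self._base[page_id]

    def write(self, page_id: int, data: bytes) -> None:
        # Copy-on-write: the shared snapshot is never modified.
        self._delta[page_id] = data

    def private_bytes(self) -> int:
        """Page bytes held privately by this clone (beyond the shared snapshot)."""
        return sum(len(page) for page in self._delta.values())


if __name__ == "__main__":
    snap = {0: b"a" * 4096, 1: b"b" * 4096}
    clone_a, clone_b = ToyThinClone(snap), ToyThinClone(snap)
    print(clone_a.private_bytes())     # 0
    clone_a.write(1, b"x" * 4096)
    print(clone_a.private_bytes())     # 4096
    print(clone_b.read(1) == snap[1])  # True
```

In this model a fresh clone costs only its empty page-delta table; memory grows only as the clone diverges from the snapshot.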

Conclusion

MemVerge Memory Machine is software-defined memory aimed at combining the performance of DRAM with the persistence of PMem. PMem is typically used as a cache to accelerate storage. While that is a boon for storage in many instances, in-memory applications need memory performance, not a cache. MemVerge virtualizes both DRAM and PMem into pools of software-defined memory with software-defined services. Memory Machine turns DRAM into a high-availability tier through ZeroIO in-memory snapshots, which also allow the creation of Thin Clones without taxing memory resources.

As we push into new categories, we’ll have to adopt new performance tests and benchmarks. Testing in-memory software with our normal benchmarks wouldn’t be useful, as that is not what it is made for. Instead, we used the kdb+ bulk insert and read tests, as well as Redis Quick Recovery with ZeroIO Snapshot and Redis Clone with ZeroIO Snapshot. The tests were performed on a Supermicro SYS-2029U-TN24R4T server, though Memory Machine should work on other validated servers that support Intel Optane PMem modules.

With the kdb+ bulk insert test, we tested multiple batch sizes on DRAM, PMem, and a combination of the two through Memory Machine. With small batches, it was no surprise to see DRAM deliver the best performance. What was really interesting is that as the test taxed the resources harder, Memory Machine did better and better. The kdb+ read test showed similar trends. DRAM performed really well, no surprise there. PMem did OK, but well under DRAM. With only a 20GB DRAM cache, Memory Machine delivered 3.9GB/s compared to 4.2GB/s for DRAM alone. Giving Memory Machine a 40GB DRAM cache produced the best performance overall at 4.8GB/s while still leaving plenty of DRAM for other tasks.

The second part of performance testing was Redis Quick Recovery with ZeroIO Snapshot and Redis Clone with ZeroIO Snapshot. These tests focused on functionality rather than quantifiable performance. Essentially, we took snapshots, triggered a failure, and were quickly up and running again.

Intel’s PMem is a supremely interesting technology that has been somewhat slow to gain adoption because there have been few easy ways to deploy and properly leverage the modules. With MemVerge, organizations can take advantage of what PMem has to offer in a solution built specifically with this technology in mind. For those with applications that can benefit from the large and resilient memory footprint PMem offers, MemVerge is definitely worth evaluating.
