Last year we reviewed the QSAN XCubeSAN XS1200 Series, which we found to offer good performance, good capabilities, and a good price for its target SMB and ROBO markets. For this review, we are looking at the same appliance fitted with the higher-end XS5226 controller. Since the design, build, and management are identical (we are using the same chassis), readers can refer back to the previous review for those details.

Within the XS5200 family (much like the XS1200 family), QSAN offers several form factors with either single or dual controllers, again designated S for single or D for dual. The XS5226D is an active-active dual-controller model geared toward higher performance in mission-critical environments, with HPC, virtualization integration, and M&E as its ideal use cases. The company claims performance as high as 12GB/s sequential read and 8GB/s sequential write, with over 1.5 million IOPS.

As stated, we are using the same chassis, so the many areas of overlap between the two reviews are skipped here. We will, however, look at the key specification differences, as they directly impact performance.

QSAN XCubeSAN XS5226D Specifications

| Specification | XS5226D |
|---|---|
| RAID Controller | Dual-active |
| CPU | Intel Xeon D-1500 quad core |
| Memory | Up to 128GB of DDR4 ECC |
| Drive Type | 2.5″ SAS, NL-SAS, SED HDD |
| | 2.5″ SAS, SATA SSD (6Gb MUX board needed for 2.5″ SATA drives in dual-controller systems) |
| Expansion capabilities | 2U 26-bay, SFF |
| Max drives supported | 286 |

Performance

Application Workload Analysis

The application workload benchmarks for the QSAN XCubeSAN XS5226D consist of MySQL OLTP performance via SysBench and Microsoft SQL Server OLTP performance with a simulated TPC-C workload. In each scenario, the array was populated with 26 Toshiba PX04SV SAS 3.0 SSDs arranged in two 12-drive RAID10 disk groups, one pinned to each controller, which left 2 SSDs as spares. We then created two 5TB volumes, one per disk group. In our testing environment, this produced a balanced load for our SQL Server and Sysbench workloads.
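The drive layout arithmetic can be checked in a few lines. This is a minimal Python sketch using only the counts stated above; RAID10 is modeled as two-way mirroring, so half of each group holds unique data. The review does not state the PX04SV capacity used, so the script only derives the smallest per-drive capacity that could hold a 5TB volume, ignoring formatting overhead and TB/TiB differences.

```python
# Drive layout from the review: 26 SSDs, two 12-drive RAID10 groups,
# with whatever is left over kept as spares.
TOTAL_DRIVES = 26
GROUPS = 2
DRIVES_PER_GROUP = 12
VOLUME_TB = 5  # one 5TB volume per disk group


def raid10_data_drives(drives: int) -> int:
    """RAID10 mirrors drives in pairs, so half the members hold unique data."""
    if drives % 2:
        raise ValueError("RAID10 needs an even number of drives")
    return drives // 2


spares = TOTAL_DRIVES - GROUPS * DRIVES_PER_GROUP
data_drives = raid10_data_drives(DRIVES_PER_GROUP)
# Lower bound on per-drive capacity (TB) for one 5TB volume to fit in a group,
# ignoring filesystem/format overhead and TB vs TiB.
min_drive_tb = VOLUME_TB / data_drives

print(f"spares={spares}, data drives per group={data_drives}, "
      f"min drive size={min_drive_tb:.3f} TB")
```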

SQL Server Performance

Each SQL Server VM is configured with two vDisks: a 100GB volume for boot and a 500GB volume for the database and log files. From a system resource perspective, we configured each VM with 16 vCPUs and 64GB of DRAM, and leveraged the LSI Logic SAS SCSI controller. Whereas our Sysbench workloads saturate the platform in both storage I/O and capacity, the SQL test focuses on latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs, and is stressed by Quest’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across the QSAN XS5200 (two VMs per controller).

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

  - 2.5 hours preconditioning

  - 30 minutes sample period

SQL Server OLTP Benchmark Factory LoadGen Equipment

- Dell EMC PowerEdge R740xd Virtualized SQL 4-node Cluster

- 8 Intel Xeon Gold 6130 CPUs for 269GHz in cluster (two per node, 2.1GHz, 16 cores, 22MB cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU
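The cluster totals in the list above follow directly from the per-node figures. As a quick sketch, the 269GHz figure is the sum of base clocks across all cores (8 CPUs × 16 cores × 2.1GHz), and the 1TB total is the sum of the DIMMs across the four nodes:

```python
# Per-node hardware figures from the LoadGen equipment list.
NODES = 4
CPUS_PER_NODE = 2
CORES_PER_CPU = 16      # Xeon Gold 6130
BASE_CLOCK_GHZ = 2.1
DIMMS_PER_NODE = 16
DIMM_GB = 16

cpus = NODES * CPUS_PER_NODE
# "GHz in cluster" = base clock summed over every physical core.
aggregate_ghz = cpus * CORES_PER_CPU * BASE_CLOCK_GHZ
ram_per_node_gb = DIMMS_PER_NODE * DIMM_GB
ram_per_cpu_gb = ram_per_node_gb // CPUS_PER_NODE
cluster_ram_gb = NODES * ram_per_node_gb

print(f"{cpus} CPUs, {aggregate_ghz:.1f} GHz aggregate")
print(f"{ram_per_node_gb} GB/node, {ram_per_cpu_gb} GB/CPU, {cluster_ram_gb} GB total")
```

The exact product is 268.8GHz, which the list rounds to 269GHz; 1,024GB is the 1TB figure.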

For our testing, we compare the new controller with the previously tested one. This is less a question of “which one is better” and more a look at the performance one gets depending on one’s needs.

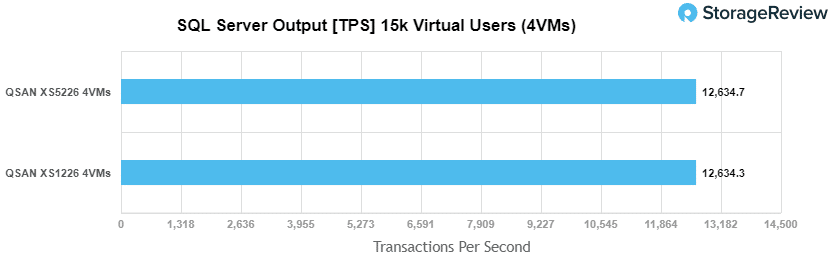

With SQL Server, the controller upgrade made essentially no difference in overall performance: the XS1226 with 4 VMs hit 12,634.3 TPS and the XS5226 with 4 VMs hit 12,634.7 TPS.

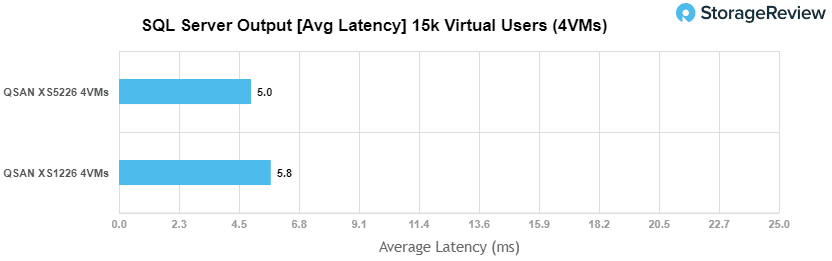

SQL Server average latency showed a similarly small gap, with a slight edge to the new controller: the XS1226 averaged 5.8ms and the XS5226 5.0ms.
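To put the two SQL Server results in relative terms, here is a small sketch computing the percentage change from the XS1226 to the XS5226 for both metrics, using the figures quoted above:

```python
def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent (negative means lower)."""
    return (new - old) / old * 100.0


tps = {"XS1226": 12_634.3, "XS5226": 12_634.7}
latency_ms = {"XS1226": 5.8, "XS5226": 5.0}

tps_delta = pct_change(tps["XS1226"], tps["XS5226"])
lat_delta = pct_change(latency_ms["XS1226"], latency_ms["XS5226"])

print(f"TPS change: {tps_delta:+.4f}%")
print(f"Average latency change: {lat_delta:+.1f}%")
```

This works out to roughly +0.003% in TPS and about 13.8% lower average latency, so the latency improvement is the more visible difference between the two controllers in this test.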

Sysbench Performance

Each Sysbench VM is configured with three vDisks, one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system-resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM and leveraged the LSI Logic SAS SCSI controller. Load gen systems are Dell R740xd servers.

Dell PowerEdge R740xd Virtualized MySQL 4-node Cluster

- 8 Intel Xeon Gold 6130 CPUs for 269GHz in cluster (two per node, 2.1GHz, 16 cores, 22MB cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Storage Footprint: 1TB, 800GB used

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

  - 2 hours preconditioning, 32 threads

  - 1 hour, 32 threads

In our Sysbench benchmark, we tested configurations of 4, 8, 16, and 32 VMs. In transactional performance, the XS5226D showed strong results with 6,889 TPS at 4VM, 13,023 TPS at 8VM, 21,645 TPS at 16VM, and 26,810 TPS at 32VM.
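To show how transactional throughput scales with VM count, the sketch below normalizes each XS5226D result to TPS per VM and compares it with the 4VM per-VM rate, where 100% would mean perfectly linear scaling:

```python
from typing import Dict

# XS5226D Sysbench results quoted in this review: VM count -> aggregate TPS.
sysbench_tps: Dict[int, int] = {4: 6_889, 8: 13_023, 16: 21_645, 32: 26_810}


def scaling_efficiency(results: Dict[int, int], base_vms: int) -> Dict[int, float]:
    """Per-VM TPS at each VM count as a fraction of the per-VM TPS at base_vms."""
    base_per_vm = results[base_vms] / base_vms
    return {vms: (tps / vms) / base_per_vm for vms, tps in results.items()}


for vms, eff in scaling_efficiency(sysbench_tps, base_vms=4).items():
    print(f"{vms:>2} VMs: {sysbench_tps[vms] / vms:7.1f} TPS/VM, {eff:6.1%} of linear")
```

Per-VM throughput holds at about 95% of the 4VM rate at 8VM, then drops to about 79% at 16VM and about 49% at 32VM, so aggregate TPS keeps rising while each VM gets a smaller share of the array.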

With average latency, the XS1226 performed slightly better than the XS5226D in the 4VM configuration (18.1ms versus 18.6ms), but the XS5226D edged out the earlier controller in the other configurations with 19.7ms at 8VM, 23.9ms at 16VM, and 41ms at 32VM.
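The XS5226D throughput and latency figures can be cross-checked against each other with Little's law (concurrency = throughput × response time). This sketch assumes each Sysbench thread keeps exactly one transaction in flight with no think time, so outstanding transactions equal VMs × 32 threads; that closed-loop model is our assumption, not a documented property of the test harness.

```python
# XS5226D Sysbench results quoted in this review: VMs -> (TPS, avg latency in ms).
measured = {
    4: (6_889, 18.6),
    8: (13_023, 19.7),
    16: (21_645, 23.9),
    32: (26_810, 41.0),
}

THREADS_PER_VM = 32  # "Database Threads: 32" from the Sysbench configuration


def littles_law_tps(vms: int, avg_latency_ms: float,
                    threads_per_vm: int = THREADS_PER_VM) -> float:
    """Throughput implied by Little's law, X = N / R, for N in-flight transactions.

    Assumes (hypothetically) a closed loop where every thread always has one
    transaction outstanding, so N = vms * threads_per_vm.
    """
    outstanding = vms * threads_per_vm
    return outstanding / (avg_latency_ms / 1000.0)


for vms, (tps, latency_ms) in measured.items():
    predicted = littles_law_tps(vms, latency_ms)
    print(f"{vms:>2} VMs: measured {tps:,} TPS, predicted {predicted:,.0f} TPS "
          f"({predicted / tps:.1%} of measured)")
```

Under that assumption, the predicted TPS lands within about 1% of the measured values at 4, 8, and 16 VMs and about 7% low at 32 VMs. These numbers alone don't show what causes the larger gap at 32 VMs; the rounded 41ms figure and the way per-VM latencies are averaged are both possibilities.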

Our worst-case latency results followed the same pattern as average latency: the XS1200 series was better at 4VM, and the XS5200 series was better in every larger configuration. For the XS5226D, we saw worst-case latency of 32.7ms at 4VM, 34.8ms at 8VM, 47ms at 16VM, and 76.9ms at 32VM.

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of testing profiles, including “four corners” tests, common database transfer size tests, and trace captures from different VDI environments. All of these tests leverage the common VDBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices. For load generation, we use our cluster of Dell PowerEdge R740xd servers.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
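The “0-120% iorate” notation in the profiles above describes a stepped load sweep, with each workload run at a series of target rates expressed as a percentage of a reference maximum. The sketch below is a hypothetical illustration only: the 10% step size, the 10% starting point (a 0% target issues no I/O), and the function name are assumptions, not our actual scripting engine. It uses the XS5226D's 4K random read peak as the reference rate; targets above 100% ask for more than that peak, so the array would simply run saturated at those steps.

```python
from typing import List, Tuple


def iorate_sweep(peak_iops: float, start_pct: int = 10, stop_pct: int = 120,
                 step_pct: int = 10) -> List[Tuple[int, int]]:
    """Return (percent, target IOPS) pairs for a hypothetical percent-of-peak sweep."""
    if not 0 < start_pct <= stop_pct or step_pct <= 0:
        raise ValueError("invalid sweep bounds")
    return [(pct, round(peak_iops * pct / 100))
            for pct in range(start_pct, stop_pct + 1, step_pct)]


# Reference rate: the XS5226D 4K random read peak reported in this review.
for pct, target in iorate_sweep(442_075):
    print(f"{pct:>3}% -> {target:,} IOPS target")
```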

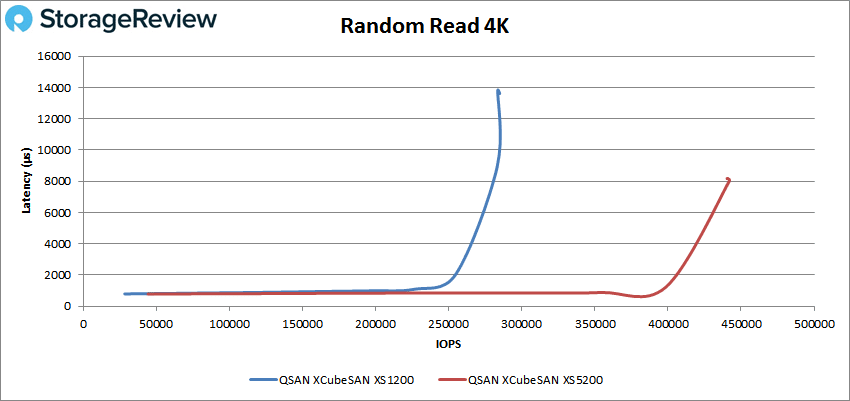

In 4K peak read performance, the XS5226D maintained sub-millisecond latency up to just shy of 400K IOPS and peaked at 442,075 IOPS with a latency of 8.03ms. This blew way past the XS1200, which peaked at 284K IOPS with 13.82ms latency.
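Throughout this section, “sub-millisecond up to X IOPS” is read off the latency curve as the last load point before average latency reaches 1ms. A minimal sketch of that reading, assuming the curve is a list of (IOPS, latency) points in increasing-load order; the sample points are hypothetical and are not measurements from this review:

```python
from typing import Optional, Sequence, Tuple


def last_point_under(curve: Sequence[Tuple[float, float]],
                     threshold_ms: float = 1.0) -> Optional[float]:
    """Return the highest IOPS seen before average latency first reaches threshold_ms.

    `curve` holds (iops, avg_latency_ms) points ordered by increasing load.
    Returns None if even the first point is at or above the threshold.
    """
    last_ok: Optional[float] = None
    for iops, latency_ms in curve:
        if latency_ms >= threshold_ms:
            break  # first crossing: stop scanning
        last_ok = iops
    return last_ok


# Hypothetical sweep points for illustration only, NOT data from this review.
sample_curve = [(50_000, 0.20), (150_000, 0.35), (250_000, 0.55),
                (350_000, 0.80), (390_000, 0.97), (440_000, 8.00)]
print(last_point_under(sample_curve))  # 390000
```

This version stops at the first crossing. A curve that straddles 1ms for a while, as in the VDI Full Clone Boot test, would need a different rule, such as the last point before latency stays above 1ms for good.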

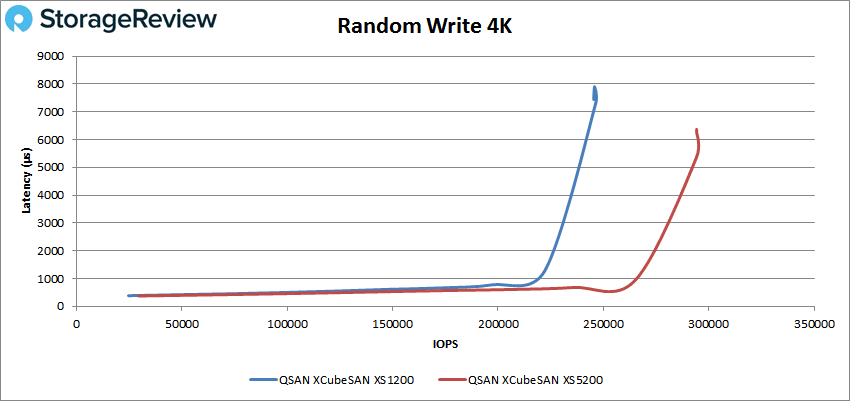

With 4K peak write performance, the new controller had sub-millisecond latency up to around 270K IOPS and peaked at 294,255 IOPS with a latency of 6.27ms. For comparison, the old controller peaked at about 246K IOPS with 7.9ms latency.

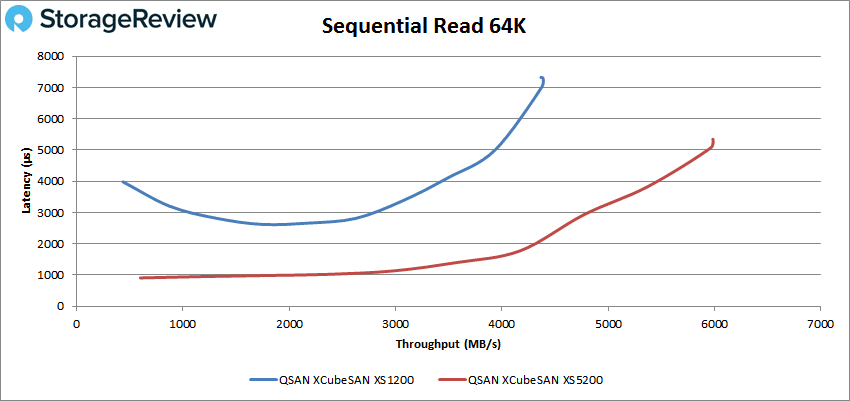

Switching over to sequential performance, in 64K read the XS5226D rode right under 1ms until about 38K IOPS or 2.3GB/s and peaked at 95,762 IOPS or 5.99GB/s with a latency of 5.34ms. The XS1200 never achieved sub-millisecond latency in this test.
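The bandwidth figures in the 64K tests follow from IOPS × transfer size. The sketch below reproduces the article's numbers by treating 64K as 64 KiB and dividing by 1,024 (giving MiB/s), then by 1,000 for the “GB/s” label; that convention is inferred from the figures rather than stated anywhere. In strict decimal units, 95,762 × 65,536 bytes is about 6.28GB/s.

```python
def iops_to_mib_per_s(iops: float, block_kib: int = 64) -> float:
    """Throughput in MiB/s for a given IOPS rate and block size in KiB."""
    return iops * block_kib / 1024


for label, iops in [("64K read peak", 95_762),
                    ("64K read, 1ms knee", 38_000),
                    ("64K write, 1ms knee", 63_000)]:
    mib = iops_to_mib_per_s(iops)
    print(f"{label}: {iops:,} IOPS -> {mib:,.1f} MiB/s (~{mib / 1000:.2f} 'GB/s')")
```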

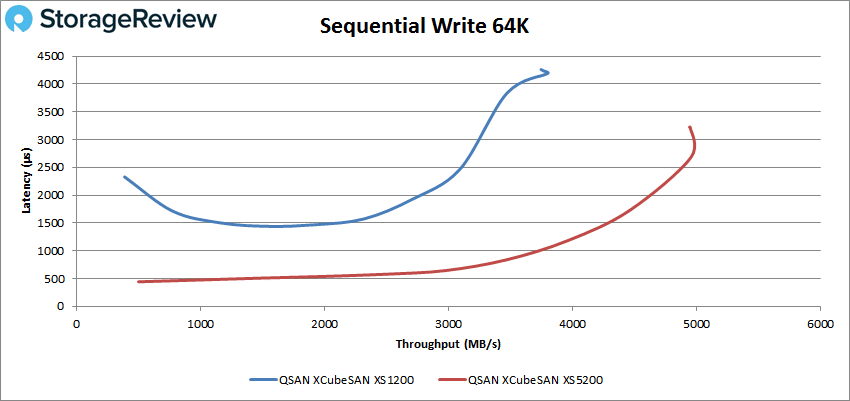

For 64K sequential peak write, the XS5226D had performance under 1ms until about 63K IOPS or 3.9GB/s. It peaked at about 80K IOPS or 4.95GB/s with a latency of 2.68ms.

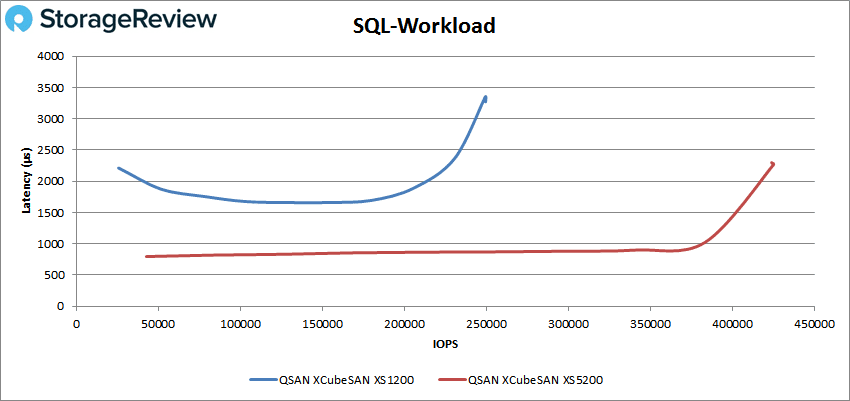

In our SQL workload, the new controller easily outshone its counterpart. The XS5226D had sub-millisecond latency until about 380K IOPS and peaked at 425,327 IOPS with a latency of 2.27ms, roughly 200K more IOPS than the XS1200 at about 1ms lower latency.

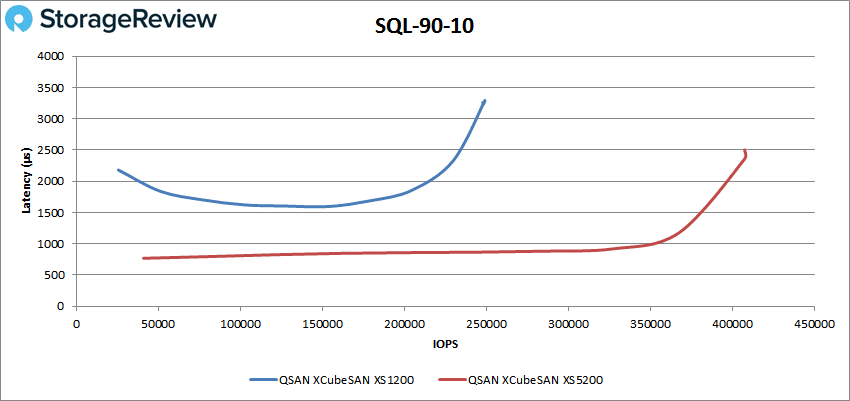

In the SQL 90-10, the XS5226D stayed under 1ms until about 350K IOPS and peaked at 407,661 IOPS with a latency of 2.36ms. Again, it outshone the XS1200, whose latency stayed above 1ms throughout.

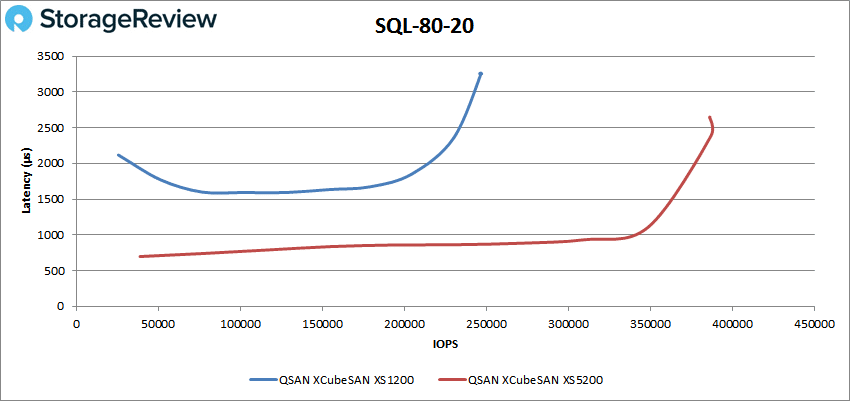

The SQL 80-20 showed the XS5226D with sub-millisecond latency until about 340K IOPS and a peak of 387,085 IOPS with a latency of 2.4ms. Again, this was quite a jump over the XS1200, which peaked at about 247K IOPS with 3.26ms latency.

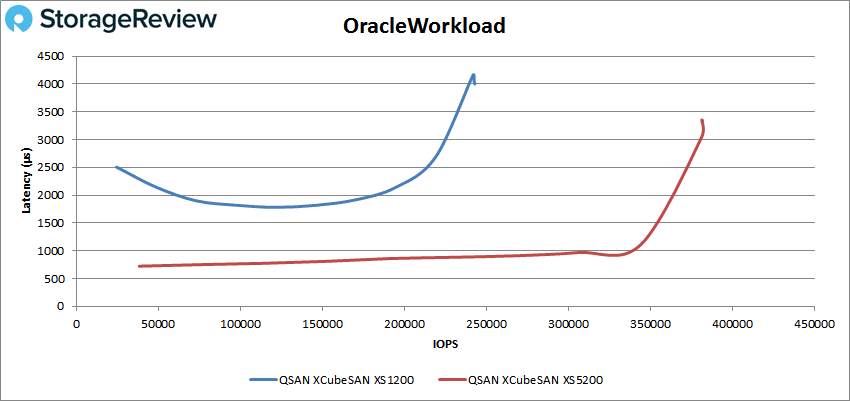

With the Oracle workload, the XS5226D made it to almost 310K IOPS before breaking 1ms and peaked at 381,444 IOPS with a latency of 3.1ms. The XS1200 peaked at 246,186 IOPS with a latency of 4.2ms.

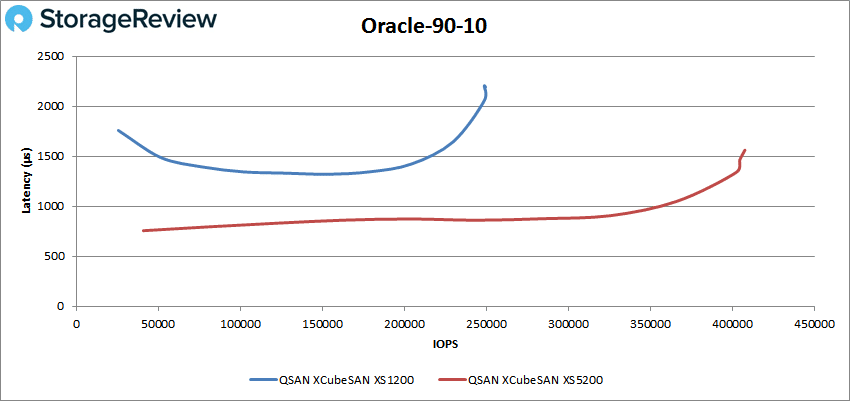

With the Oracle 90-10, the XS5226D stayed below 1ms until about 360K IOPS and peaked at 407,763 IOPS with a latency of 1.56ms. For comparison, the XS1200 peaked at 248,759 IOPS with 2.2ms latency and never dipped below 1ms throughout its run.

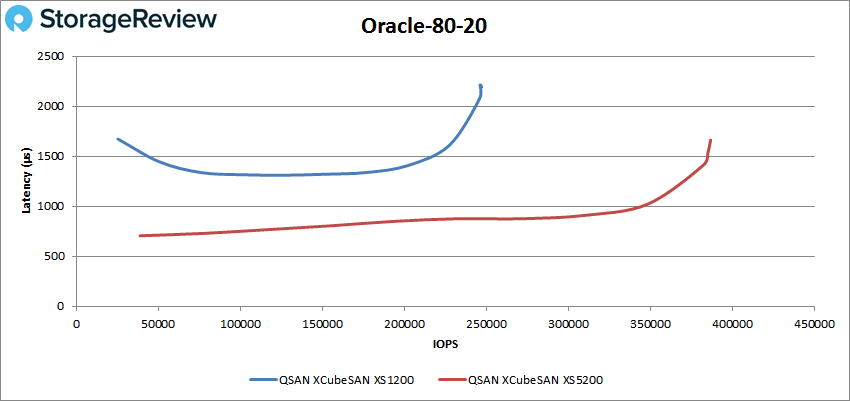

For the Oracle 80-20 run, the XS5226D made it to just shy of 350K IOPS before breaking 1ms and peaking at 386,844 IOPS with a latency of 1.66ms. The XS1200 was above 1ms throughout with a peak of 242,000 IOPS and a latency of 4.16ms.

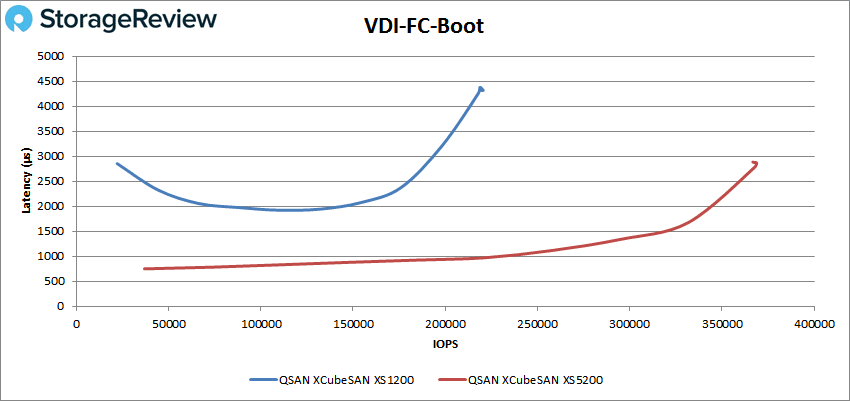

Next, we switched over to our VDI clone tests, Full Clone (FC) and Linked Clone (LC). For VDI Full Clone Boot, the XS5226D straddled the 1ms line for a while before crossing it at roughly 225K IOPS, and peaked at 367,665 IOPS with a latency of 2.78ms. That is an impressive jump over the XS1200’s 218K IOPS and 4.26ms latency.

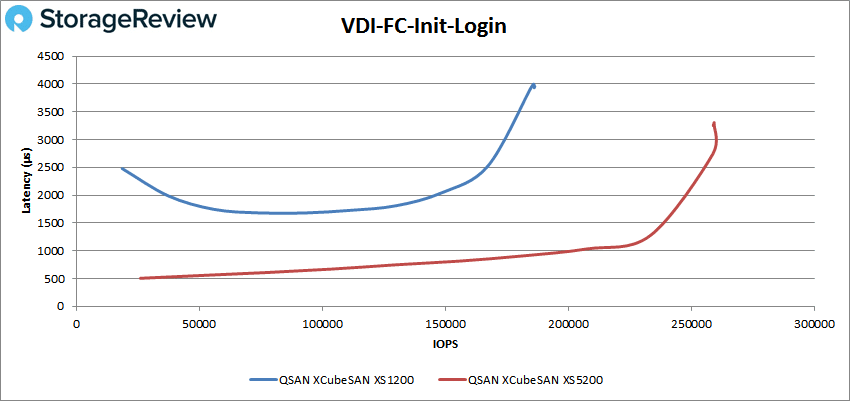

For VDI FC Initial Login, the XS5226D had sub-millisecond latency until about 200K IOPS and peaked at about 260K IOPS with 3ms latency. The XS1200 peaked at 185,787 IOPS with 3.91ms latency in the same test.

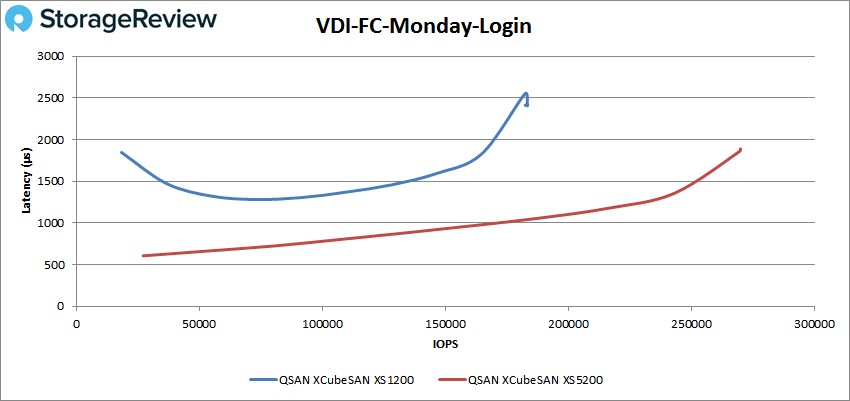

The VDI Full Clone Monday Login saw the XS5226D stay under 1ms until about 163K IOPS and peak at 269,724 IOPS with a latency of 1.86ms. The previous controller peaked at 182,376 IOPS with 2.55ms latency.

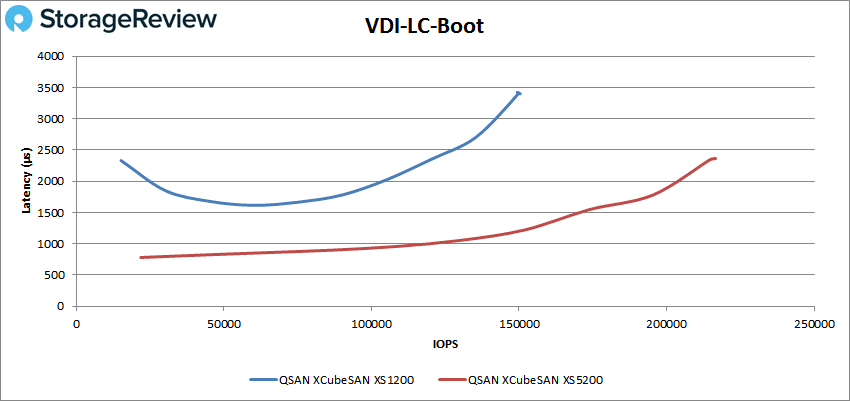

Switching over to VDI Linked Clone, in the boot test the XS5226D maintained sub-millisecond latency until roughly 110K IOPS and peaked at 216,579 IOPS with a latency of 2.36ms. The XS1200 peaked at 149,488 IOPS with a latency of 3.39ms.

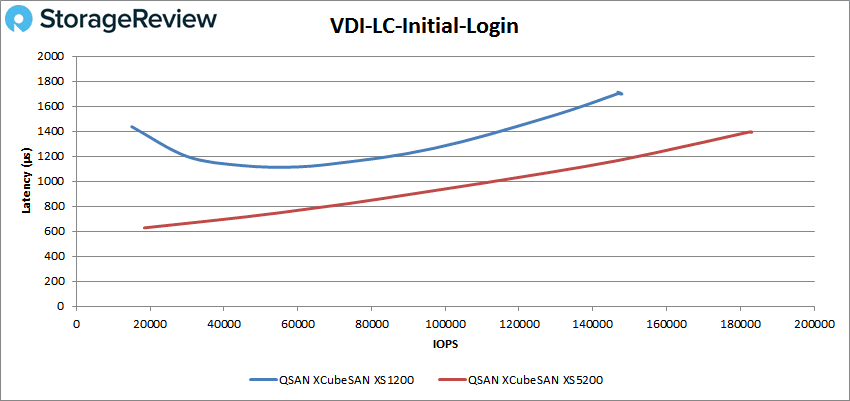

The VDI Linked Clone Initial Login also saw the XS5226D maintain sub-millisecond latency until roughly 110K IOPS before peaking at 182,425 IOPS with a latency of 1.39ms. By contrast, the XS1200 peaked at 147,423 IOPS with 1.71ms latency.

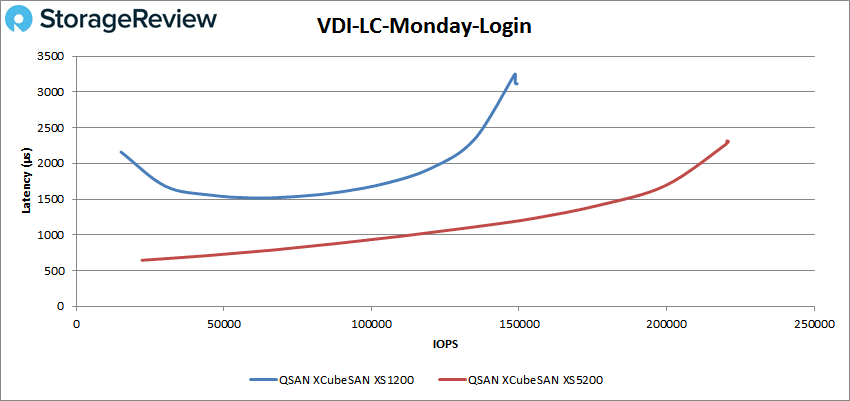

Finally, in the VDI Linked Clone Monday Login, the XS5226D once again held sub-millisecond latency until roughly 110K IOPS and peaked at about 220K IOPS with a latency of 2.3ms. The XS1200 peaked at 148,738 IOPS with a latency of 3.2ms.

Conclusion

The QSAN XCubeSAN XS5226D is a dual-controller, active-active SAN that promises more performance than the SMB-focused XS1226D. For this review, we used the same chassis with an upgraded controller, so the design, build, and management are unchanged and are covered in our original review. The XS5226D is aimed at more mission-critical workloads and targets more demanding use cases than the XS1226D, such as HPC, M&E, and virtualization. Because the chassis is the same, all the connectivity and high-availability benefits carry over.

Looking at performance, in our application workload analysis the controller upgrade didn’t translate into much of a difference for our SQL Server benchmarks, although other areas showed massive gains. TPS for the XS1226 was 12,634.3, and the XS5226 scored only 0.4 TPS higher at 12,634.7. Average latency was similarly close, with the smaller controller at 5.8ms and the larger at 5.0ms. With Sysbench, the XS1226 had slightly lower latency in the 4VM configuration, but the XS5226 performed better with more VMs, reaching 26,810.4 TPS at 32VM with 41ms average latency and 76.9ms worst-case latency.

With our VDBench workloads, there was a tremendous difference in almost all of our tests, with the XS5226D clearly delivering much more performance. In our 4K tests, the XS5226D peaked at over 442K IOPS read and 294K IOPS write, with latencies of 8.03ms and 6.27ms respectively at those peaks. In 64K sequential, the controller hit nearly 6GB/s read and nearly 5GB/s write. With our SQL workload, the controller had peak performance over 425K IOPS, with 407K IOPS for 90-10 and 387K IOPS for 80-20. The Oracle workload also showed strong numbers, with peak performance over 381K IOPS, 407K IOPS for 90-10, and 386K IOPS for 80-20, at peak latencies between 1.56ms and 3.1ms. For our VDI Full Clone (FC) and Linked Clone (LC) tests, we looked at Boot, Initial Login, and Monday Login. In Boot, the XS5226D hit over 367K IOPS FC and over 216K IOPS LC. Initial Login showed about 260K IOPS FC and over 182K IOPS LC. Monday Login had the XS5226D at over 269K IOPS FC and about 220K IOPS LC.
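To quantify the size of that gap, this sketch computes the XS5226D-to-XS1200 ratio of peak IOPS for every VDBench workload where this review quotes both peaks. SQL and SQL 90-10 are left out because their XS1200 peaks are not given here, and approximate figures such as “about 246K” are used as written.

```python
# (XS1200 peak IOPS, XS5226D peak IOPS) as quoted in this review.
peaks = {
    "4K random read": (284_000, 442_075),
    "4K random write": (246_000, 294_255),
    "SQL 80-20": (247_000, 387_085),
    "Oracle": (246_186, 381_444),
    "Oracle 90-10": (248_759, 407_763),
    "Oracle 80-20": (242_000, 386_844),
    "VDI FC Boot": (218_000, 367_665),
    "VDI FC Initial Login": (185_787, 260_000),
    "VDI FC Monday Login": (182_376, 269_724),
    "VDI LC Boot": (149_488, 216_579),
    "VDI LC Initial Login": (147_423, 182_425),
    "VDI LC Monday Login": (148_738, 220_000),
}


def uplift(old: float, new: float) -> float:
    """Ratio of new peak IOPS to old peak IOPS."""
    return new / old


# Print workloads from largest to smallest uplift.
for name, (xs1200, xs5226d) in sorted(peaks.items(),
                                      key=lambda kv: uplift(*kv[1]),
                                      reverse=True):
    print(f"{name:<22} {uplift(xs1200, xs5226d):.2f}x")
```

The ratios range from about 1.2x in 4K random write to about 1.69x in VDI Full Clone Boot.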

Overall, the XS5200 did quite well, taking full advantage of the Toshiba PX04 SAS3 SSDs we installed. Total performance is very impressive: 6GB/s read and 5GB/s write (64K sequential) is very good for an SMB SAN. Of course, there are some compromises; the feature set, interface, and software integrations with popular packages like VMware leave a little to be desired as you look further upmarket at enterprise needs. Even so, the XS5200 offers a fantastic performance/cost profile that will get the job done just fine for much of its target audience.

The Bottom Line

The QSAN XCubeSAN with the XS5226D controller brings much higher performance to demanding workloads while maintaining a relatively good price point.
