NAB 2024 opened with tremendous flair, as tens of thousands of attendees flocked to the Las Vegas Convention Center to learn about the latest technology for media and entertainment (M&E) professionals. Over the many years of NAB, one tenet has never changed: digital files keep getting larger, and teams need faster access to them both in the data center and in the field. Thankfully, technology has made critical advances, like 61.44TB SSDs, that help address the complexities of M&E workloads.

Working on a film or television show stresses storage differently than typical data center workloads do. Teams on set need instant access to raw footage for playback, real-time VFX pre-visualization, continuity checks, and transcoding. Transcoding is the most storage-intensive of these; it’s not uncommon to process footage faster than real time while fresh footage is still being offloaded from the cameras. These massive files and their smaller proxy counterparts must then be transported back to a post house, where they are ingested into long-term storage systems and made available to the post-production team.

CheetahRAID RAPTOR for On-Set Data Storage

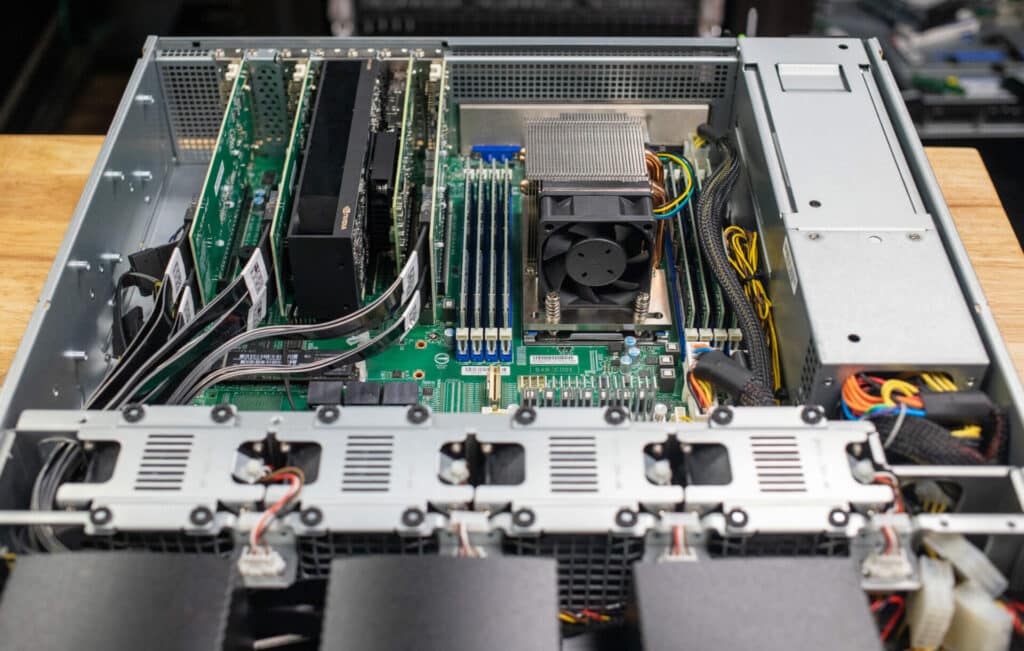

To help creative professionals in this ongoing endeavor, CheetahRAID has configured an edge server purpose-built for on-set data storage. The CheetahRAID RAPTOR is a rugged 2U short-depth server with three removable drive canisters that hold four SSDs each. After filming, the canisters can be removed and shipped to a post house for quick ingestion. For this project, each canister contains 4x Solidigm P5336 61.44TB SSDs, which provide incredible density: nearly 250TB of raw flash per canister.
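As a quick sanity check on the density figures, this short Python sketch multiplies out the drive counts described above. It uses decimal terabytes (as drive vendors do) and computes raw capacity only, before any RAID or filesystem overhead; the constant and function names are ours.

```python
DRIVE_TB = 61.44          # Solidigm P5336 capacity point (decimal TB)
DRIVES_PER_CANISTER = 4
CANISTERS = 3


def raw_capacity_tb(drive_tb: float, drive_count: int) -> float:
    """Raw capacity in decimal TB, before RAID parity or filesystem overhead."""
    return round(drive_tb * drive_count, 2)


per_canister = raw_capacity_tb(DRIVE_TB, DRIVES_PER_CANISTER)
server_total = raw_capacity_tb(DRIVE_TB, DRIVES_PER_CANISTER * CANISTERS)

print(f"Per canister: {per_canister} TB raw")                                  # 245.76 TB
print(f"Whole server: {server_total} TB raw ({server_total / 1000:.2f} PB)")  # 737.28 TB (0.74 PB)
```

The 737.28TB raw total is the "nearly three-quarters of a petabyte" figure; the shared volume used in testing is smaller, presumably because RAID protection and filesystem overhead come out of the raw capacity.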

The solution uses the Graid SupremeRAID SR-1010 GPU-based RAID card and its accompanying software to enable high-speed access to the storage. This matters because the Graid card offloads most RAID processing from the server’s CPU. With a single AMD EPYC 7003-series processor in the CheetahRAID server, keeping its cores free for application tasks is of the utmost importance.

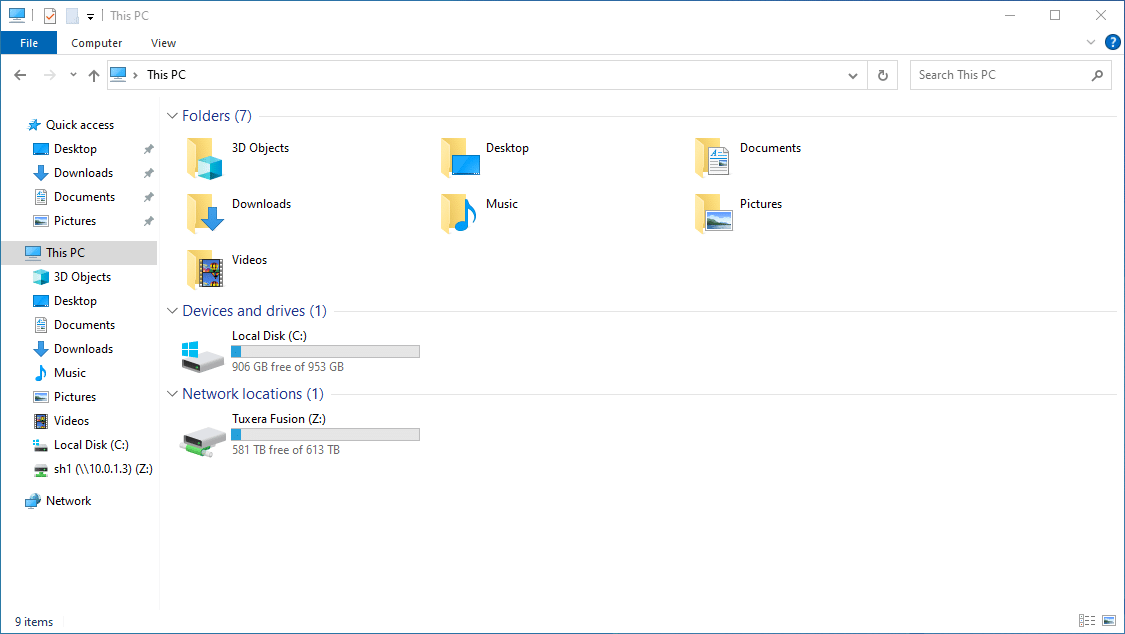

The server has an NVIDIA 100GbE network card to share the storage with professional workstations. For this testing, we paired two HP Z4 Rack G5 workstations, each with a 100GbE NIC directly connected to the server. That gives each workstation a theoretical line rate of 12.5GB/s (100Gb/s divided by 8 bits per byte). Incredibly, nearly three-quarters of a petabyte of raw flash sits behind each of those links.

Reliably delivering that much speed to each workstation, though, requires file-sharing software that can handle data movement at this scale. For that, the system uses Fusion File Share by Tuxera, which lets the Linux-based CheetahRAID storage server provide SMB shares to workstations. It is significantly faster than Samba, the traditional open-source SMB server on Linux. Raw throughput isn’t the only concern, either: editing is highly sensitive to latency, and even minor hiccups can crash editing applications.

Why Fusion File Share?

Fusion File Share by Tuxera is a high-performance, scalable SMB server that provides access to unstructured, file-based data over a network. It brings support for Microsoft’s Server Message Block (SMB) protocol to public cloud platform-as-a-service (PaaS) offerings, software-defined storage (SDS) solutions, high-performance computing (HPC) applications, and enterprise-grade network-attached storage (NAS). Fusion supports current SMB security features and capabilities to meet demanding organizational needs.

Tuxera designed Fusion File Share as a high-performance, scalable alternative to traditional SMB servers like Samba. Its modular, multithreaded architecture increases I/O throughput, minimizes CPU usage, reduces memory footprint, and handles many simultaneous connections. By leveraging SMB multichannel, compression, and SMB over RDMA (Remote Direct Memory Access), Fusion removes common bottlenecks in workloads where high throughput, low latency, and efficient resource utilization are paramount. SMB over RDMA, also called SMB Direct, runs here over RDMA over Converged Ethernet (RoCE): the NICs move data directly between client and server memory, bypassing most of the CPU’s network-stack processing to further reduce latency and CPU load.
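To make the multichannel idea more concrete, here is a simplified Python model of how one large sequential transfer could be split into requests and spread across several SMB channels. The round-robin policy and the `stripe_across_channels` helper are hypothetical illustrations, not how Fusion or the Windows SMB client actually schedules I/O; the point is only that splitting a transfer lets several connections (and CPU cores) work in parallel.

```python
from __future__ import annotations

MIB = 1024 * 1024


def stripe_across_channels(total_bytes: int, chunk_bytes: int,
                           channels: int) -> list[list[tuple[int, int]]]:
    """Split a transfer into (offset, length) requests and deal them round-robin."""
    if chunk_bytes <= 0 or channels <= 0:
        raise ValueError("chunk_bytes and channels must be positive")
    plan: list[list[tuple[int, int]]] = [[] for _ in range(channels)]
    for i, offset in enumerate(range(0, total_bytes, chunk_bytes)):
        length = min(chunk_bytes, total_bytes - offset)  # last request may be short
        plan[i % channels].append((offset, length))
    return plan


# A 10 MiB read issued as 1 MiB requests over 4 channels.
plan = stripe_across_channels(10 * MIB, 1 * MIB, channels=4)
for ch, reqs in enumerate(plan):
    print(f"channel {ch}: {len(reqs)} requests, {sum(n for _, n in reqs) // MIB} MiB")
```

With this 10 MiB example, channels 0 and 1 each receive three 1 MiB requests and channels 2 and 3 receive two, so no single connection has to carry the whole transfer.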

Fusion’s scalability is one of its core strengths, with its file share scale-out feature enabling dynamic adjustment of SMB and file server nodes to meet businesses’ changing needs. This is complemented by a robust fault tolerance framework, incorporating transparent failover and continuous availability to ensure uninterrupted service, even during server failures or maintenance activities. Moreover, Fusion’s support for the latest SMB 3.1.1 protocol, alongside earlier versions, ensures broad compatibility and interoperability across various network settings.

Fusion File Share offers a streamlined and secure approach to data management. It facilitates capacity planning with quota support, provides detailed user audits and access logs, and allows dynamic share configurations without system restarts. It supports multiple authentication mechanisms, including Kerberos, LDAP, and NTLM, and integrates seamlessly with Windows ACL and Active Directory for enhanced security and ease of management. This comprehensive feature set positions Tuxera’s Fusion as a highly reliable and efficient choice for organizations seeking superior performance and scalability from their SMB server solutions.

Samba vs. Fusion File Share Performance

The CheetahRAID RAPTOR storage server is built around a single-socket AMD EPYC 7003-series (Gen3) motherboard with a 24-core EPYC 7443P CPU and 256GB of DDR4 RAM. Its three canisters each hold four Solidigm P5336 61.44TB SSDs, for 12 SSDs total. The drives connect directly to the motherboard through native NVMe risers.

The server uses Graid SupremeRAID SR-1010 for RAID management. To move data over the network, it has an NVIDIA ConnectX-6 Dx dual-port 100GbE card. The card offers up to 200Gb/s of combined bandwidth across both ports, or roughly 25GB/s in and out of the box across our network fabric. The storage server runs Ubuntu 22.04.4 LTS.
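The bandwidth figures in this setup follow from simple unit conversion: Ethernet speeds are quoted in gigabits per second, and dividing by eight gives gigabytes per second. This sketch computes theoretical line rate only, since protocol and framing overhead keep real payload throughput somewhat lower. The helper names are ours; the 11.1GB/s and 11.6GB/s inputs are the per-client Fusion read results (TCP and RDMA) reported in our testing.

```python
BITS_PER_BYTE = 8


def line_rate_gbytes(gbits_per_s: float) -> float:
    """Theoretical line rate in GB/s for a link quoted in Gb/s."""
    return gbits_per_s / BITS_PER_BYTE


def link_efficiency_pct(measured_gbytes: float, line_gbytes: float) -> float:
    """Measured payload throughput as a percentage of theoretical line rate."""
    return round(100 * measured_gbytes / line_gbytes, 1)


per_port = line_rate_gbytes(100)        # one 100GbE port per workstation
both_ports = line_rate_gbytes(2 * 100)  # dual-port ConnectX-6 Dx, combined

print(per_port, both_ports)                  # 12.5 25.0
print(link_efficiency_pct(11.1, per_port))   # 88.8 (Fusion over TCP, per client)
print(link_efficiency_pct(11.6, per_port))   # 92.8 (Fusion with RDMA, per client)
```

At roughly 89 percent (TCP) and 93 percent (RDMA) of line rate, both Fusion read results sit close to what a single 100GbE link can carry, which is consistent with the network link being the ceiling for reads.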

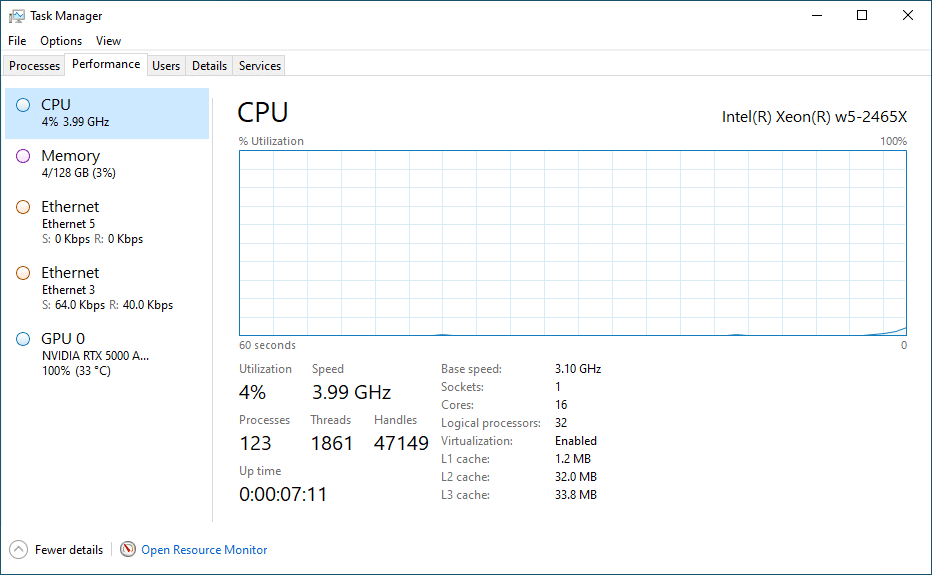

We used two HP Z4 Rack G5 workstations as load generators in our testing environment. Each has a 16-core Intel Xeon W5-2465X CPU and 128GB of DDR5 RAM, plus an NVIDIA RTX 5000 Ada GPU and an NVIDIA ConnectX-6 Dx 100GbE NIC, making them video-editing powerhouses. Both systems ran Windows Server 2022. It’s worth noting that HP doesn’t officially support Windows Server or 100GbE NICs on these workstations, but neither caused any issues during testing.

The high-speed network fabric connects both workstations to the CheetahRAID RAPTOR over 100GbE links, giving each client a 100Gb/s (roughly 12.5GB/s) link to shared storage. With 12 Solidigm P5336 61.44TB QLC SSDs in a Graid storage pool, we could share a massive 613TB volume with each host.

To measure the benefit of Tuxera’s Fusion software, we compared 1MB sequential read and write bandwidth and latency with both clients accessing the CheetahRAID storage host simultaneously. We ran four FIO jobs on each client, each working against its own 50GB file, for a total footprint of 400GB across both clients. Our baseline was Samba 4.15.13, which we compared against Fusion over TCP and against Fusion with RDMA offload.
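For readers who want to run a similar test, this sketch builds an FIO command line for one client’s sequential job. The block size, per-job file size, and job count mirror the parameters described above; the I/O depth of 16, the `windowsaio` engine, `--direct=1`, and the share path are our assumptions, not the exact settings used in this testing. The script only builds and prints the command; it does not run FIO.

```python
from __future__ import annotations


def fio_sequential_cmd(rw: str, directory: str, *, numjobs: int = 4,
                       size: str = "50G", bs: str = "1M",
                       iodepth: int = 16, ioengine: str = "windowsaio") -> list[str]:
    """Build (but do not run) an FIO command for a sequential read or write test.

    iodepth, ioengine, and directory are assumptions for illustration.
    """
    if rw not in ("read", "write"):  # FIO's sequential read/write modes
        raise ValueError("rw must be 'read' or 'write'")
    return [
        "fio",
        f"--name=seq{rw}",
        f"--rw={rw}",
        f"--bs={bs}",              # 1MB transfers, as in the test description
        f"--size={size}",          # per-job file size
        f"--numjobs={numjobs}",    # each job lays out its own file
        f"--iodepth={iodepth}",    # assumed queue depth
        f"--ioengine={ioengine}",  # Windows async I/O engine (assumed)
        "--direct=1",              # request unbuffered I/O on the client
        f"--directory={directory}",
        "--group_reporting",       # report the jobs as one aggregate result
    ]


def footprint_gb(clients: int, jobs_per_client: int, file_gb: int) -> int:
    """Total data set size across all clients and jobs."""
    return clients * jobs_per_client * file_gb


cmd = fio_sequential_cmd("read", r"\\raptor\share\fio")  # hypothetical share path
print(" ".join(cmd))
print(footprint_gb(clients=2, jobs_per_client=4, file_gb=50))  # 400
```

Keeping `size` per job (rather than one shared file) matches the described layout of one 50GB file per job, which is how two clients times four jobs reaches the 400GB footprint.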

The Samba read bandwidth baseline to each HP Z4 Rack G5 workstation measured 4GB/s and 3.8GB/s, for a total of 7.8GB/s. In a sequential write workload, we measured 2.4GB/s and 2.6GB/s for a total of 5GB/s. The average read latency measured 17.23ms, with the average write latency clocking in at 26.6ms.

Switching to Tuxera’s Fusion software had a massive impact, with no changes needed on the client side. Over TCP, we measured 11.1GB/s to each client, for 22.2GB/s of combined read bandwidth, close to the practical limit of each host’s 100GbE link. Write bandwidth measured 5.7GB/s and 5.8GB/s, for a total of 11.5GB/s. Read latency averaged 6ms, while write latency came in at 11.65ms.

In addition to TCP, Fusion File Share by Tuxera supports RDMA. With RDMA enabled, read bandwidth measured 11.6GB/s to each host, or 23.2GB/s combined. Write bandwidth came in at 5GB/s and 5.2GB/s, for a total of 10.2GB/s. Read latency in this configuration was 5.8ms, while write latency was 13.2ms.

Comparing Samba to Fusion showed enormous gains for the Windows clients. Read bandwidth rose almost 3x (2.85x over TCP and 2.97x with RDMA), with read latency falling to roughly a third of Samba’s (35 percent over TCP, 34 percent with RDMA). Write bandwidth improved 2.3x over TCP, with write latency at just 44 percent of what we measured with Samba.

| Protocol | Metric | Client 1 | Client 2 | Total (bandwidth) / Average (latency) |
|---|---|---|---|---|
| Samba | Read Bandwidth | 4GB/s | 3.8GB/s | 7.8GB/s |
| | Read Latency | 16.77ms | 17.68ms | 17.23ms |
| | Write Bandwidth | 2.4GB/s | 2.6GB/s | 5GB/s |
| | Write Latency | 27.5ms | 25.7ms | 26.6ms |
| Fusion TCP | Read Bandwidth | 11.1GB/s | 11.1GB/s | 22.2GB/s |
| | Read Latency | 6ms | 6ms | 6ms |
| | Write Bandwidth | 5.7GB/s | 5.8GB/s | 11.5GB/s |
| | Write Latency | 11.8ms | 11.5ms | 11.65ms |
| Fusion RDMA | Read Bandwidth | 11.6GB/s | 11.6GB/s | 23.2GB/s |
| | Read Latency | 5.8ms | 5.8ms | 5.8ms |
| | Write Bandwidth | 5GB/s | 5.2GB/s | 10.2GB/s |
| | Write Latency | 13.4ms | 13ms | 13.2ms |
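The headline ratios can be reproduced directly from the combined bandwidth and average latency in the table. This minimal sketch recomputes them; the dictionary layout and the `compare` helper are ours, and the values are copied from the table.

```python
from __future__ import annotations

# Combined bandwidth (GB/s) and average latency (ms), copied from the table.
RESULTS = {
    "Samba":       {"read_bw": 7.8,  "write_bw": 5.0,  "read_lat": 17.23, "write_lat": 26.6},
    "Fusion TCP":  {"read_bw": 22.2, "write_bw": 11.5, "read_lat": 6.0,   "write_lat": 11.65},
    "Fusion RDMA": {"read_bw": 23.2, "write_bw": 10.2, "read_lat": 5.8,   "write_lat": 13.2},
}


def compare(baseline: str, candidate: str) -> dict[str, float]:
    """Bandwidth as a speedup factor; latency as a percent of the baseline."""
    b, c = RESULTS[baseline], RESULTS[candidate]
    return {
        "read_bw_x": round(c["read_bw"] / b["read_bw"], 2),
        "write_bw_x": round(c["write_bw"] / b["write_bw"], 2),
        "read_lat_pct": round(100 * c["read_lat"] / b["read_lat"], 1),
        "write_lat_pct": round(100 * c["write_lat"] / b["write_lat"], 1),
    }


for mode in ("Fusion TCP", "Fusion RDMA"):
    print(mode, compare("Samba", mode))
```

Over TCP this yields 2.85x read and 2.3x write bandwidth, with latency at 34.8 and 43.8 percent of Samba’s. With RDMA, read bandwidth rises to 2.97x (33.7 percent latency), while writes land at 2.04x (49.6 percent latency).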

Huge SSDs Keeping Pace for M&E

Massive SSDs like Solidigm’s 61.44TB P5336 bring substantial benefits to the media and entertainment industry, where speed and reliability are crucial for handling large files such as high-resolution video, complex graphics, and extensive audio tracks. Compared with traditional hard disk drives (HDDs), SSDs offer faster access times, higher read/write throughput, and far more I/O operations per second (IOPS). This performance edge makes editing, rendering, and processing workflows more efficient, significantly reducing the time it takes to load and work with large media files. Because SSDs have no moving parts, they are also less susceptible to mechanical failure and data loss, a critical concern when dealing with valuable media content.

Adopting dense SSDs in production and post-production environments streamlines workflows, enabling real-time editing, color grading, and effects processing without compromising quality or efficiency. These drives can handle multiple streams of 4K video, reducing reliance on proxy files or low-resolution placeholders. Furthermore, the compact form factor and high capacity of SSDs simplify data management by allowing entire projects to be stored on a single drive or a small array, making large volumes of data easier to access and manage.
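As a rough, bandwidth-only illustration of the multi-stream point, this sketch divides the 11.1GB/s per-client read throughput we measured with Fusion over TCP by a per-stream data rate. The 110MB/s per-stream figure is a hypothetical placeholder, not a measured or vendor value; real stream counts depend on codec, resolution, frame rate, access pattern, and application behavior, so treat the result as an upper bound.

```python
def max_streams(available_mb_s: int, stream_mb_s: int) -> int:
    """Whole streams that fit in the available bandwidth (bandwidth-only bound)."""
    if stream_mb_s <= 0:
        raise ValueError("per-stream data rate must be positive")
    return available_mb_s // stream_mb_s


MEASURED_READ_MB_S = 11_100  # 11.1GB/s per client, Fusion over TCP (decimal units)
ASSUMED_STREAM_MB_S = 110    # HYPOTHETICAL 4K stream data rate; use your codec's figure

print(max_streams(MEASURED_READ_MB_S, ASSUMED_STREAM_MB_S))  # 100
```

Integer division keeps the answer in whole streams; a partial stream that would not sustain real-time playback is not counted.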

Beyond performance and capacity, massive SSDs contribute to a better production environment through silent operation, lower heat output, and energy efficiency. These features are especially beneficial in densely packed or mobile editing suites, where noise and cooling are ongoing concerns. The energy efficiency of SSDs not only reduces operational costs but also supports a cooler, quieter workspace, enhancing the overall productivity and comfort of media professionals. In essence, massive SSDs are transforming the media and entertainment landscape, enabling faster, more reliable, and more efficient production processes that can keep pace with the growing demand for high-quality digital content.

Bridge Digital is a Big Fan

In our own testing with the CheetahRAID rig, we saw impressive benefits for M&E workloads. But we wanted another opinion from the industry to see whether our findings matched the experience of people knee-deep in on-set data management, so we reached out to our friend Richie Murray, Founder and President of Bridge Digital.

Bridge Digital is a company with expertise in digital video workflows and the technologies to make them work better.

They help creators and owners of digital content build an infrastructure to create, manage, distribute, and monetize their video assets effectively. Bridge Digital’s solutions cover the entire digital media workflow — from ingest to final delivery.

Richie notes that “Performance, compatibility, and supportability are our customers’ highest priorities. That is exactly what this solution brings together. The portability of data that the CheetahRAID system provides is unmatched in the industry, all the while not giving up the speed and reliability of less ‘ship-friendly’ hardware.”

Richie went on to say, “Fusion File Share is more performant and is completely compatible with existing networked creative workstations. Moreover, the massive Solidigm SSDs paired with Graid’s RAID implementation both contribute to the idea that ‘this solution is greater than the sum of its parts.’ The combined solution is extremely compelling for modern M&E workloads.”

Conclusion

Tuxera’s Fusion File Share delivers sequential speeds that are great for getting footage offloaded and verified quickly, especially when shooting with many cameras. Fast storage is no stranger to on-set use, and speed alone won’t impress much. What sets Tuxera Fusion apart is networked, shared storage that lets multiple systems offload simultaneously without slowing down.

Most of the time on set, the limiting factor is not the storage but the camera media, and this bottleneck becomes a real problem once you get into double-digit camera counts. It’s not uncommon to have multiple systems offloading several cards at once to independent drives, which then need to be consolidated, either on set onto an identical pair of master drives or at the post house onto its servers.
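The parallel offload-and-verify pattern described above can be sketched in Python with only the standard library. This is a hypothetical illustration, not any DIT tool’s implementation, and all function names are ours: it hashes each source file with SHA-256 while copying (many on-set tools use faster checksums such as xxHash, which isn’t in the standard library), re-reads the copy to verify it, and offloads several cards concurrently. The verification re-read may be served from the operating system’s page cache rather than from the destination media.

```python
from __future__ import annotations

import hashlib
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

CHUNK = 8 * 1024 * 1024  # read/write in 8 MiB blocks (arbitrary choice)


def sha256_of(path: Path) -> str:
    """Hash a file in fixed-size blocks to keep memory use flat."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(CHUNK), b""):
            h.update(block)
    return h.hexdigest()


def copy_with_hash(src: Path, dest: Path) -> str:
    """Copy src to dest, hashing the source bytes in the same pass."""
    h = hashlib.sha256()
    with src.open("rb") as fin, dest.open("wb") as fout:
        for block in iter(lambda: fin.read(CHUNK), b""):
            h.update(block)
            fout.write(block)
    return h.hexdigest()


def offload_card(card: Path, dest_root: Path) -> dict[str, bool]:
    """Copy every file on one card into dest_root/<card name>/ and verify it."""
    results: dict[str, bool] = {}
    for src in sorted(p for p in card.rglob("*") if p.is_file()):
        rel = src.relative_to(card)
        dest = dest_root / card.name / rel
        dest.parent.mkdir(parents=True, exist_ok=True)
        source_hash = copy_with_hash(src, dest)
        # Re-read the copy; True means it matches the hash taken during the copy.
        results[rel.as_posix()] = sha256_of(dest) == source_hash
    return results


def offload_cards(cards: list[Path], dest_root: Path,
                  workers: int = 4) -> dict[str, dict[str, bool]]:
    """Offload cards concurrently; each card is handled by one worker thread."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {card.name: pool.submit(offload_card, card, dest_root) for card in cards}
        return {name: fut.result() for name, fut in futures.items()}
```

Pointing `dest_root` at a share on the shared storage pool is what would let several offload stations consolidate onto one volume at the same time, rather than onto independent drives.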

The ability to network many systems to the same Tuxera Fusion storage pool and offload simultaneously without slowing down is a huge time saver. More importantly, a standard Samba-based NAS would struggle to scale shared storage efficiently across that many clients, whereas Fusion lets offloading, transcoding, media management, review, and QC all happen simultaneously.

While we’ve focused on the benefits this solution offers on set, it’s also worth noting that all of this comes with very little complexity. The CheetahRAID server, Solidigm QLC SSDs, Graid’s RAID management, and Tuxera’s SMB server are all easy to configure on a base Linux install. The entire solution comes together in minutes, not hours, and combines best-in-class technologies for M&E professionals.
