When one thinks of Kubernetes (K8s), the terms that often come to mind have to do with large-scale environments, like “cloud-scale,” “unlimited expandability,” and even “ginormous.” However, the reality is that a significant portion of the IT world needs to start with much smaller K8s environments for development and production. To provide cloud-level convenience, flexibility, and scalability, as well as a cost advantage for on-premises and hybrid cloud infrastructure, Supermicro has introduced a turn-key solution that pairs its industry-leading hardware with top-of-the-line software integration. In this article, we will look at what comprises this Supermicro Rack Plug and Play solution, how it works, and the economics of running an in-house K8s platform versus a cloud-based one.

Supermicro Rack Plug and Play Cloud Infrastructure

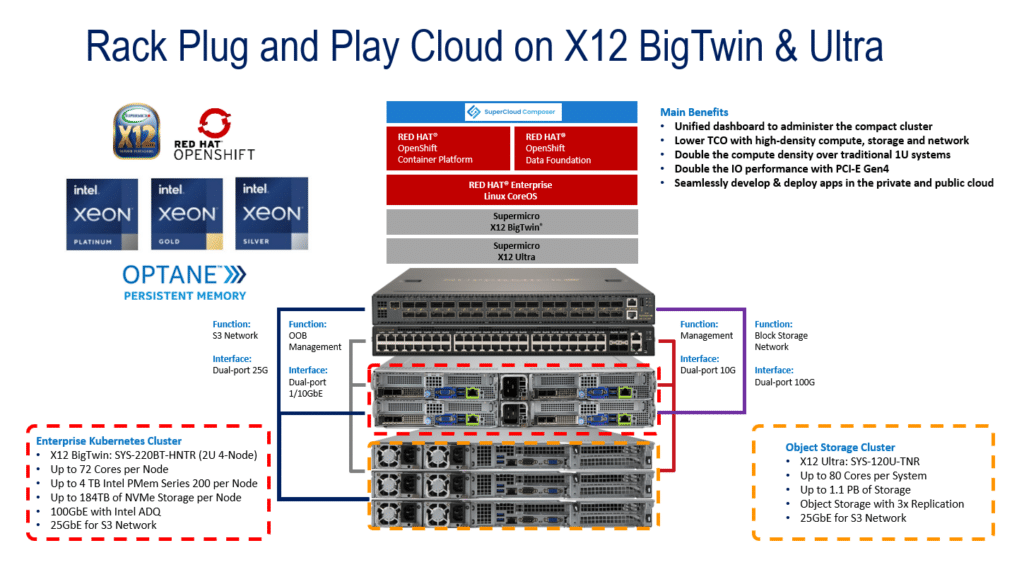

The four components that make up any K8s installation are compute, storage, networking, and software. To reach as many customers as possible, Supermicro has designed different system and rack-level configurations based on this program. The hardware for this solution takes advantage of Intel’s proven third-generation Xeon CPUs and Optane Persistent Memory (PMem), which we will touch on later. The architecture for their solution is composed of different classes of compute nodes that follow the industry-standard classification:

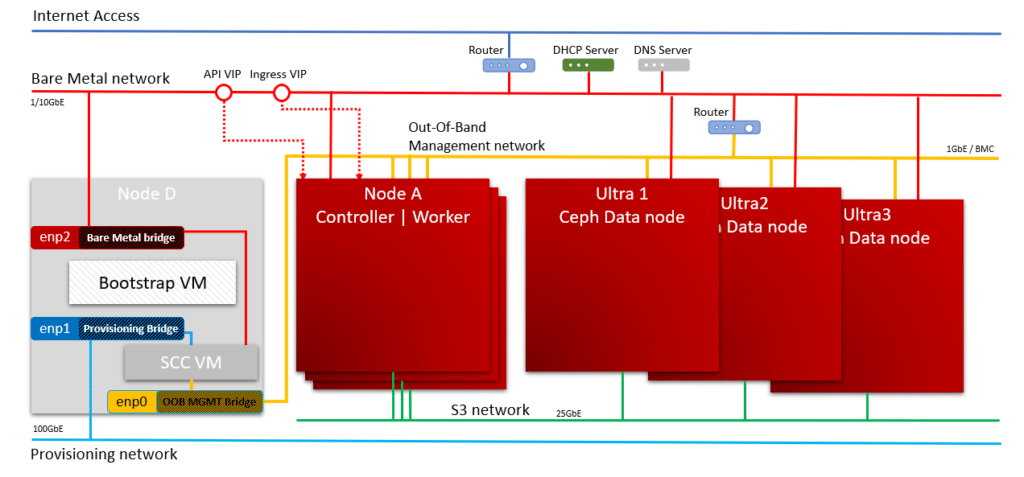

- Bastion Node & SCC VM enable the deployment. A bastion node was provisioned on one node to run the OpenShift installation. The bastion node runs Red Hat Enterprise Linux to host the scripts, files, and tools to provision the compact cluster. This node also powers a VM to host SuperCloud Composer to manage and monitor the OpenShift cluster.

- Master Node provides a highly available and resilient platform for the API server, controller manager server, and other control-plane services. Multiple master nodes are required to manage the K8s cluster and schedule its operation so that the cluster does not have a single point of failure.

- Infrastructure (Infra) Node isolates infrastructure workloads to allow separation and abstraction for maintenance and management purposes.

- Application Node runs the containerized applications.

- OpenShift Data Foundation (ODF) Node (formerly known as OpenShift Container Storage [OCS]) hosts the software-defined storage (SDS) that gives data a persistent place to live as containers spin up and down and across environments. ODF also supports file, block, or object storage.
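The master-node bullet above says that multiple masters are needed to avoid a single point of failure. A minimal sketch of why three is the usual minimum, assuming the control plane relies on a majority quorum (as etcd-backed Kubernetes control planes typically do; the article itself does not describe the consensus mechanism, and the function names here are illustrative):

```python
def quorum_size(members: int) -> int:
    """Smallest majority of `members` voting control-plane nodes."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority still remains."""
    return members - quorum_size(members)


# Assumption: majority quorum; compare a few control-plane sizes.
for n in (1, 2, 3, 5):
    print(f"{n} master(s): quorum={quorum_size(n)}, "
          f"tolerated failures={tolerated_failures(n)}")
```

Under that assumption, two masters tolerate no more failures than one, which is why the compact cluster starts at three.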

Supermicro has architected a compact 3-node cluster based on their X12 BigTwin and has included X12 Ultra systems to offer additional storage capacity for the JumpStart program, which we’ll cover in this article. For an entry-level cluster, Ceph storage is not required.

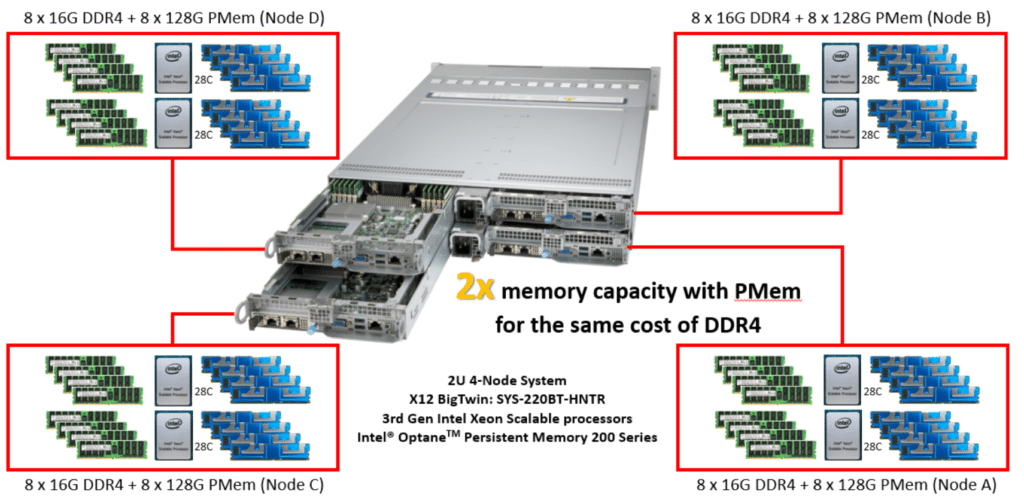

The X12 BigTwin is a 2U 4-node system with dual Intel Xeon Gold 6338N processors. It contains 72 Intel compute cores, 4TB Intel Optane PMem, 512GB of DDR4 memory, and 184TB of NVMe-backed storage per node. For connectivity, it has 100Gb interfaces for NVMe-oF, supporting persistent storage across 3 nodes, and 25Gb interfaces to connect to the object storage cluster. Three of the X12 Ultra servers are storage nodes that can have up to 1.1 PB of object storage each and up to 80 compute cores. One of the verticals especially suited for this solution is media and entertainment, for video streaming, content delivery, and analytics purposes.

As mentioned above, the BigTwin system uses Intel Optane PMem Series 200 and traditional DDR4 memory. By doing this, Supermicro can increase memory capacity as economically as possible, as Optane PMem is about half the price of DDR4 (per-GB).
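To put the "about half the price" claim in perspective, here is a rough sketch of the blended per-GB cost of a mixed DDR4 and PMem configuration relative to an all-DDR4 build. It assumes the capacities quoted above (512GB DDR4, 4TB PMem) and treats "about half" as exactly 0.5; both the function name and that ratio are illustrative assumptions, not Supermicro pricing.

```python
def blended_cost_ratio(dram_gb: float, pmem_gb: float,
                       pmem_price_ratio: float = 0.5) -> float:
    """Cost of a mixed DRAM+PMem build relative to the same capacity in DRAM.

    DRAM is priced at 1.0 per GB; PMem at `pmem_price_ratio` per GB
    (assumed 0.5, from the "about half the price" statement).
    """
    total_gb = dram_gb + pmem_gb
    if total_gb <= 0:
        raise ValueError("total capacity must be positive")
    mixed_cost = dram_gb * 1.0 + pmem_gb * pmem_price_ratio
    all_dram_cost = total_gb * 1.0
    return mixed_cost / all_dram_cost


ratio = blended_cost_ratio(dram_gb=512, pmem_gb=4 * 1024)
print(f"Mixed build costs {ratio:.1%} of an all-DDR4 build of equal capacity")
```

Under these assumptions the mixed configuration lands a little above half the cost of an all-DDR4 build, because the DDR4 portion is still priced in full.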

It should be noted that the X12 BigTwin has a shared power and cooling design to reduce OPEX. Supermicro shared with us a specific use case from one of their customers in the financial sector, who saw power savings of more than 20% with the X12 BigTwin systems compared to their X12 1U systems: the BigTwin system consumed only 675W, compared to the 980W that the X12 1U system drew when running the same workload.
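The two wattage figures quoted above can be turned into a percentage directly. This small sketch only reworks the numbers from the article; the function name is illustrative.

```python
def power_saving_percent(baseline_watts: float, new_watts: float) -> float:
    """Percentage reduction in power draw relative to the baseline."""
    if baseline_watts <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_watts - new_watts) / baseline_watts * 100


# 980W (X12 1U) versus 675W (X12 BigTwin), same workload, per the article.
saving = power_saving_percent(baseline_watts=980, new_watts=675)
print(f"Power saving: {saving:.1f}%")
```

The result is comfortably above the "more than 20%" the customer reported.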

Big systems aren’t for everyone and Supermicro’s compact cluster is ideal for organizations seeking an entry-level, ready-to-deploy DevOps environment. Supermicro offers a free remote access program called Rack Plug and Play JumpStart for the compact cluster for IT and developers to test out their workflows and assess usability and performance. The X12 BigTwin can be customized to scale-out object storage with SYS-620BT-DNC8R, a 2U 2-Node SKU with 3.5” SAS/SATA drive support. With this configuration, 100Gb interfaces may not be needed to optimize performance per dollar. The compact cluster is also available as a total rack solution with Red Hat OpenShift pre-installed, which we will cover more in this article.

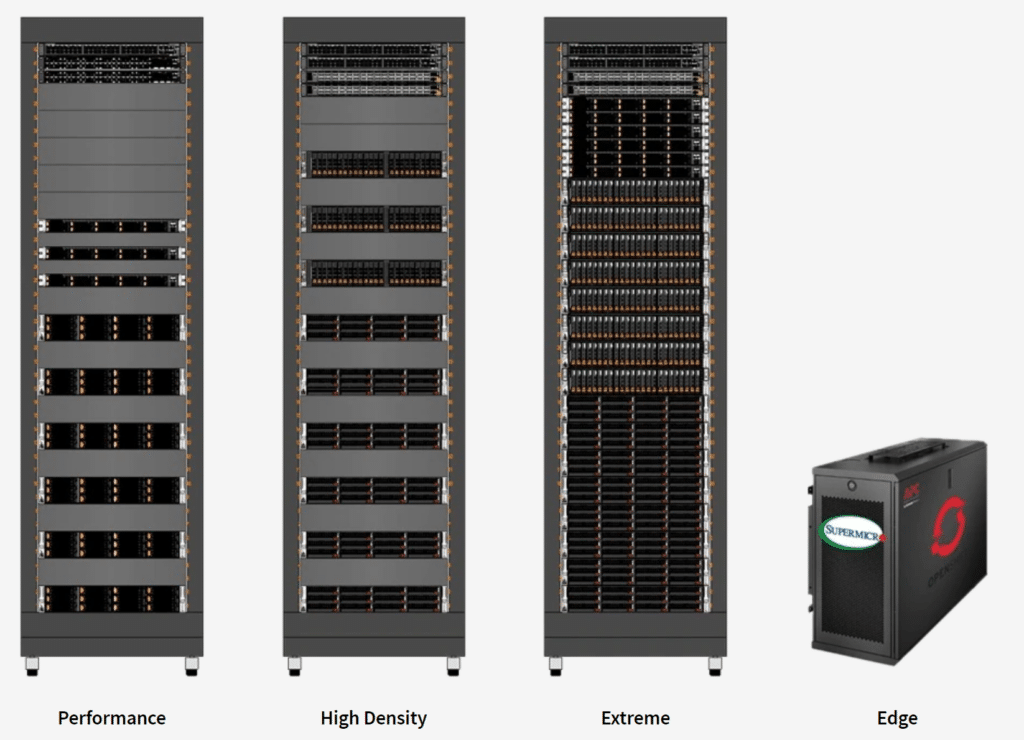

Should needs become smaller or grow larger, Supermicro has reference architectures for Edge, Regional, and Core data center solutions that make it easy to scale to the capacity needed.

Supermicro has designed four other Rack Plug and Play configurations – Edge, Performance, High Density, and Extreme (in order from the least to the greatest number of Intel compute cores).

Supermicro Rack Plug and Play Cloud Hands-On

To get a better idea of the hardware that comes with each configuration, we looked at the BOM for the Edge (SKU:SRS-OPNSHFT-3N), which is composed of three master nodes, three application nodes, and three ODF co-located nodes. To minimize the number of nodes, infrastructure nodes are omitted, and their duties are split amongst the other nodes.

This solution has 72 Intel compute cores, 768GB of RAM, and 138TB of storage (with 46TB of that being NVMe storage). For redundant interconnectivity, it has dual 10Gb networking (four ports in total) for both management and data, switched through dual SX350X-12 switches. All of this takes up just 6U of rack space and can be powered by two standard 120V electric circuits, which can commonly be found in an office or home.

Supermicro envisions the use case for their edge configuration as running AI/ML at the edge, with target markets in retail, healthcare, manufacturing, and energy. We also believe that this would make a fine low-cost solution for small development teams and distributed content delivery networks.

The BOM for the Performance SKU (SRS-OPNSHFT-10) is composed of three master nodes, three co-located Infra nodes, three application nodes, and three ODF nodes. This solution has 288 Intel compute cores, 3TB of RAM, and 138TB of NVMe storage. For redundant interconnectivity, it has 1Gb networking (four total) for management and a quad 25Gb network for data that is switched through dual SX350X-12 switches, with management traffic being passed through an SSE-G3648BR switch. All of this takes up 42U of rack space.

The High-Density SKU (SRS-OPNSHFT-20) has 336 Intel compute cores with 3TB of RAM and 18 nodes. This High-Density SuperRack Solution is the scaled-out version of the compact cluster but uses two SYS-220BT-HNTR (2U 4-Node system) for controller and infrastructure nodes, and SYS-620BT-DNC8R (2U 2-Node system) as the application nodes.

To go even further, the Extreme SKU (SRS-OPNSHFT-30) has 640 Intel compute cores with 8TB of RAM and 22 nodes. Here are the full specs of all four Supermicro SKUs.
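For a quick side-by-side view, the core and RAM figures quoted above for the four SKUs can be captured in a small data structure. This is only a sketch: the class and field names are illustrative, the values are the ones given in this article, and 1TB is assumed to mean 1024GB.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackSku:
    name: str
    part_number: str
    cores: int
    ram_gb: int

    @property
    def ram_per_core_gb(self) -> float:
        """RAM available per compute core, in GB."""
        return self.ram_gb / self.cores


# Figures as quoted in the article (assumption: 1TB = 1024GB).
SKUS = [
    RackSku("Edge", "SRS-OPNSHFT-3N", 72, 768),
    RackSku("Performance", "SRS-OPNSHFT-10", 288, 3 * 1024),
    RackSku("High Density", "SRS-OPNSHFT-20", 336, 3 * 1024),
    RackSku("Extreme", "SRS-OPNSHFT-30", 640, 8 * 1024),
]

for sku in SKUS:
    print(f"{sku.name:<13} {sku.part_number:<15} "
          f"{sku.cores:>4} cores  {sku.ram_gb:>5} GB RAM  "
          f"{sku.ram_per_core_gb:5.2f} GB/core")
```

Sorting or filtering this list (for example, by `ram_per_core_gb`) is one way to shortlist a SKU for a memory-heavy or compute-heavy workload.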

Supermicro Rack Plug and Play Software

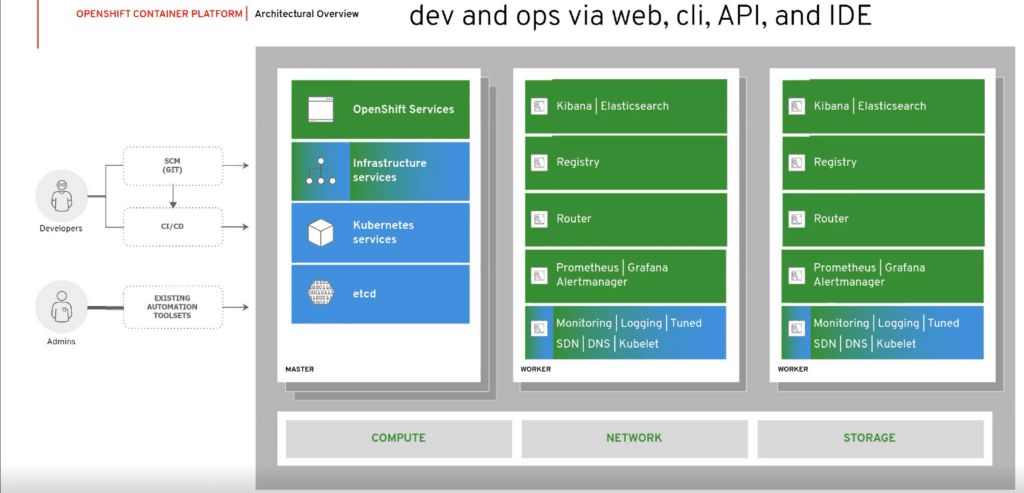

The software that comes pre-installed for all the SKUs is Red Hat’s uber-popular OpenShift, an enterprise-class K8s platform that has full-stack automation to manage a K8s deployment. Red Hat is a big supporter and player in the K8s community, and OpenShift is well regarded by the community. OpenShift allows not only for system-level management but also for self-service provisioning for development teams. One of the benefits of OpenShift is that if you do decide you need to use public cloud resources, you can use the same interface and workflows. This is huge, as it reduces the learning curve and can prevent errors due to a change in workflows.

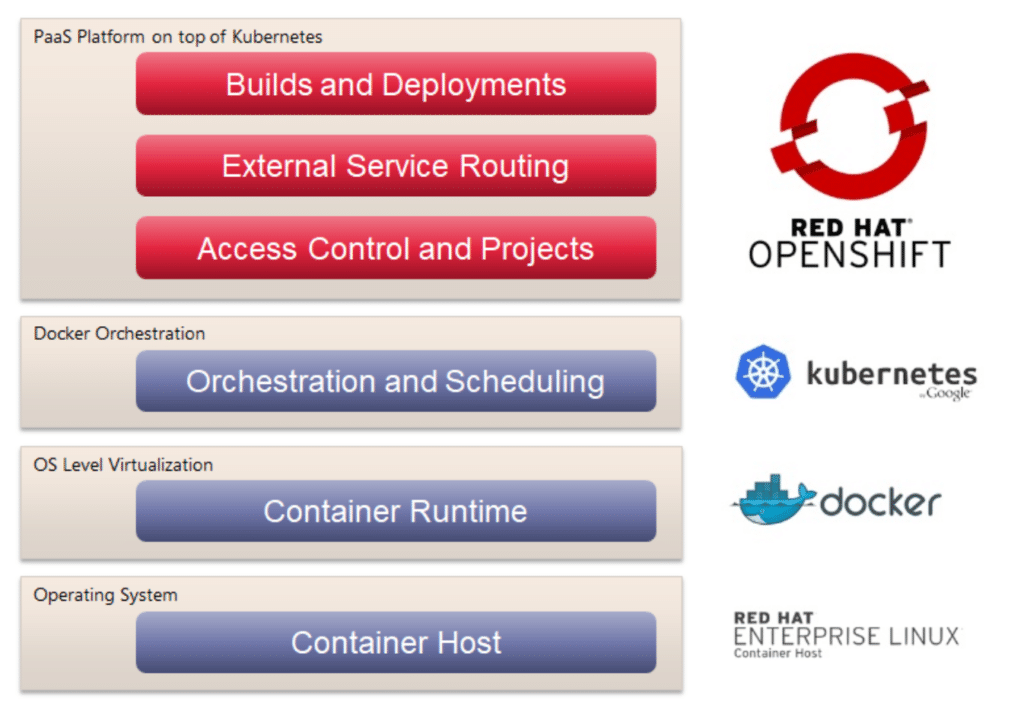

As a brief overview, OpenShift is a very mature product: it was originally developed over a decade ago, has been Red Hat’s Platform-as-a-Service (PaaS) during that time, and is entirely open source. Its software components are based on a highly curated stack that uses the best and most popular components. For orchestration and scheduling, it uses K8s coupled with a container runtime (CRI-O in OpenShift 4, Docker in earlier releases) and, of course, Red Hat for the OS.

Key components in the software stack are OpenShift Container Platform (OCP) and Red Hat Enterprise Linux CoreOS (RHCOS), both of which came with CoreOS (a company that Red Hat acquired back in 2018).

RHCOS is a stripped-down version of Red Hat Enterprise Linux that is specifically designed for container use. OCP is a platform as a service (PaaS) built for Linux containers that is orchestrated and managed by K8s. Both have been fully tested by Red Hat and are widely used in the field. These are key ingredients to reduce the bare-metal costs of this solution.

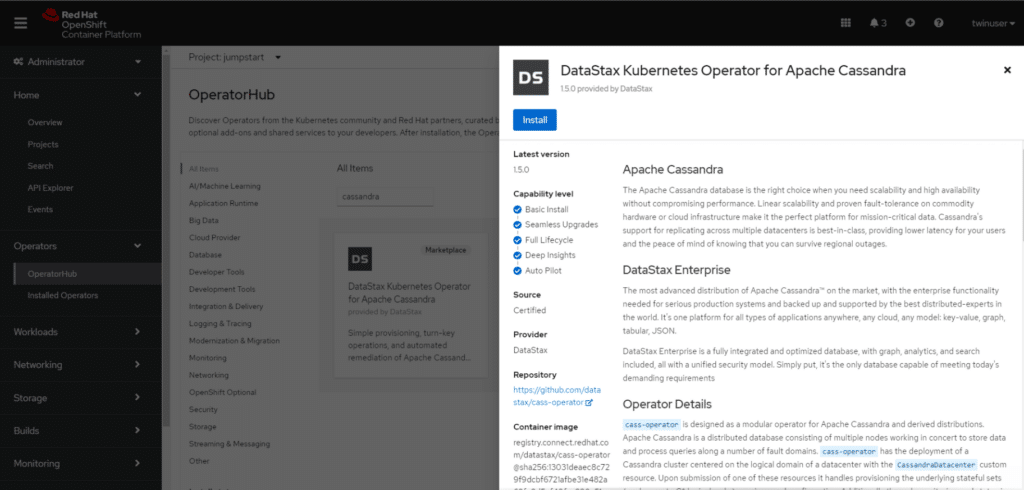

To speed development and deployment along the way, OpenShift includes the Operator Framework. An Operator, in K8s parlance, is software that encapsulates the human knowledge required to deploy and manage an application on K8s. The Operator Framework is a set of tools and K8s components that aid in Operator development and central management on a multi-tenant cluster. OpenShift has Operators for popular applications such as Redis and Cassandra. By using Operators, considerable time and frustration can be saved when deploying an application, regardless of the skill level of the person deploying it.
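The idea of "encapsulating human knowledge" is easiest to see as a reconcile step: compare the desired state with what is actually running and work out what to do about the difference. The sketch below is a toy illustration of that pattern in plain Python; it does not use the Operator SDK or any Kubernetes API, and `AppState` and `reconcile` are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AppState:
    replicas: int
    version: str


def reconcile(desired: AppState, observed: AppState) -> List[str]:
    """Return the actions needed to move `observed` toward `desired`."""
    actions: List[str] = []
    if observed.version != desired.version:
        actions.append(f"upgrade {observed.version} -> {desired.version}")
    if observed.replicas < desired.replicas:
        actions.append(f"scale up by {desired.replicas - observed.replicas}")
    elif observed.replicas > desired.replicas:
        actions.append(f"scale down by {observed.replicas - desired.replicas}")
    return actions


# Hypothetical example: a cache that should run 3 replicas of version 6.2.
desired = AppState(replicas=3, version="6.2")
observed = AppState(replicas=1, version="6.0")
for action in reconcile(desired, observed):
    print(action)
```

A real Operator runs logic like this continuously against the cluster's API, with the application-specific steps (upgrade ordering, backups, failover) written by people who know the application well.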

SuperCloud Composer

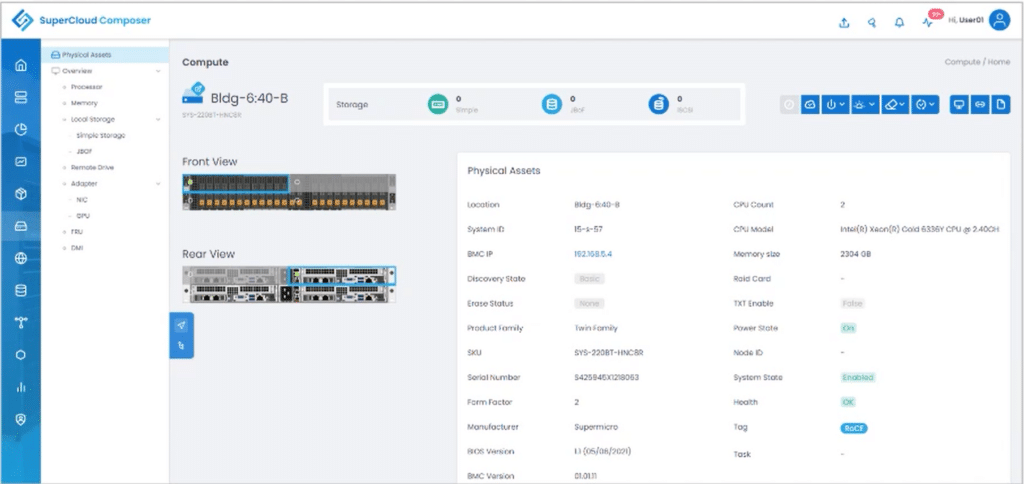

One of Supermicro’s hidden jewels is SuperCloud Composer (SCC), a single pane of glass that allows you to monitor and manage servers and deploy OSes on them. It has an API that allows others to use its Redfish-compatible integration, and its uses range from power management to asset management. This is part of the secret sauce that completes the solution stack by providing hardware-level support.

The prerequisites for Supermicro’s compact cluster are similar to Red Hat’s standard OpenShift installation. These prerequisites include, but are not limited to, the following:

- Ensure network connectivity is in place

- Set up or install load balancers for the API and Ingress

- Put DNS entries in place for the cluster

- Install any CLI tools you may need

- Reserve DHCP addresses or use static IPs
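As an illustration of the DNS prerequisite, the sketch below builds the hostnames that a standard OpenShift installation expects to resolve (the API endpoint, the internal API endpoint, and a wildcard under `apps`) and offers a helper to check them. The cluster name `compact` and domain `example.com` are placeholders, and the `probe` label is just an arbitrary name used to exercise the wildcard record; none of these come from Supermicro's documentation.

```python
import socket
from typing import List


def required_dns_names(cluster_name: str, base_domain: str) -> List[str]:
    """Hostnames an OpenShift cluster is expected to resolve."""
    suffix = f"{cluster_name}.{base_domain}"
    return [
        f"api.{suffix}",         # external API load balancer
        f"api-int.{suffix}",     # internal API load balancer
        f"probe.apps.{suffix}",  # any name under the *.apps wildcard
    ]


def unresolved_names(names: List[str]) -> List[str]:
    """Return the subset of `names` that fail a DNS lookup."""
    missing = []
    for name in names:
        try:
            socket.getaddrinfo(name, None)
        except socket.gaierror:
            missing.append(name)
    return missing


# Placeholder cluster name and domain; substitute your own.
names = required_dns_names("compact", "example.com")
print("\n".join(names))
```

Calling `unresolved_names(names)` from a machine on the cluster network returns whichever records are still missing, which makes a convenient pre-flight check before starting the installer.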

The compact cluster is straightforward to provision if all prerequisites are met. In the future, Supermicro plans to release an Ansible Playbook to help orchestrate the setup process, including HW RAID 1 configuration for the NVMe M.2 boot drives and OpenShift cluster installation. Essentially, this will help to enable zero-touch provisioning, which we typically only see from ISVs with OEM appliance(s).

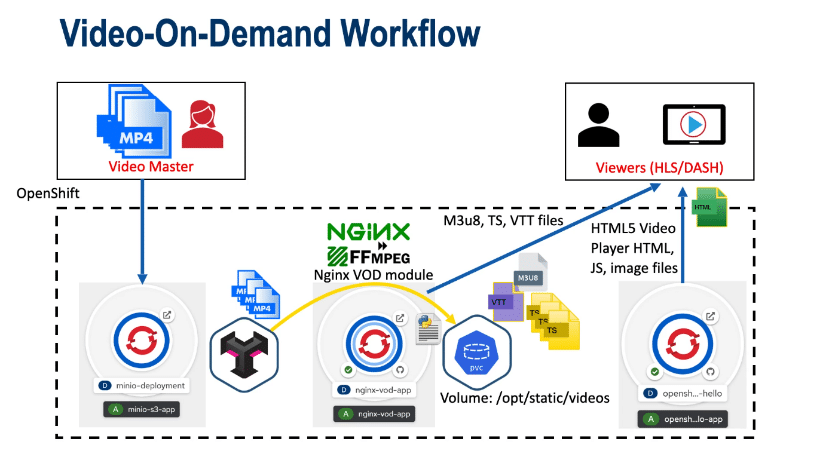

Node D is physically located within the 2U enclosure but has been isolated into a separate BMC network for Supermicro administrators who are actively supporting the JumpStart program. This node has a 1Gb NIC for the “Provisioning Bridge” for the SCC VM. Based on the network topology, the JumpStart program allows remote users to securely access the SCC VM and the OpenShift cluster to explore the capabilities of the cluster, including a Video-on-Demand demo running on a Pod. The demo workflow is illustrated below.

Supermicro Rack Plug and Play Cloud Jumpstart Program

Investing in a new solution always comes with uncertainties concerning workload and workflow compatibilities. To alleviate these fears, Supermicro has set up a robust Jumpstart program that allows prospective clients to have a chance to try out the solution before buying it.

Costs

Public clouds have thrived on the blanket misconception of being less expensive than on-premise deployments; however, for many situations, owning the hardware is more attractive when comparing its stable pricing scheme vs. the ongoing cost associated with a cloud solution. Yes, the cloud providers do have the advantage of scale where they can amortize the cost of their operations personnel over thousands of nodes. However, Supermicro has mitigated this by using OpenShift where Red Hat has taken on the burden of testing and maintaining the solution. This means Supermicro customers don’t have to validate updates and patches for their systems, which can be a time-consuming and thus costly process.

The pricing stability is also a factor that plays into Supermicro’s favor. There is certainly more than one horror story where a public cloud consumer has overspent their budget by over-consuming resources. The reality is with a highly-automated solution like K8s, a simple misconfiguration can spawn a host of applications that consume public cloud resources. In contrast, with an on-premise solution, this simply is not possible.

Not only is this solution cost-competitive with public clouds, but Supermicro also offers other suggestions to drive down costs. One is to run a bare-metal subscription of Red Hat OpenShift Data Foundation (ODF) rather than running it on a hypervisor, as bare metal is usually less expensive and avoids a hypervisor tax.

We asked Supermicro about how customers can purchase OpenShift (the software component in their solution) and they said that it could either be included on a single invoice from them or customers could buy it directly from Red Hat.

Supermicro was kind enough to work up some numbers for us regarding the cost difference when deploying OpenShift on bare metal or using a hypervisor. We were surprised to see how much money could be saved by running it on bare metal vs. a hypervisor. As K8s applications need storage, they also included an estimate that allots only 4 cores to applications that need file, block, or object storage.

The numbers show that it may be 3x to 9x more cost-effective to run OpenShift on bare metal vs. running it on a hypervisor, depending on how much compute and storage resources are required per cluster. Two scenarios were analyzed to estimate the cost savings. At the low end of the spectrum, 16 OCP subscriptions (32 cores) and 2 ODF subscriptions (4 cores) were factored in. At the high end of the spectrum, all 64 cores per node were factored in for both compute and storage. Each node can potentially support 250 Pods by default. Supermicro recommends carefully planning out OpenShift deployments. A good place to start is this OpenShift 4.8 planning document.
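The sizing figures above can be reproduced with a few lines of arithmetic. This sketch assumes one subscription covers 2 cores, which is inferred only from the "16 OCP subscriptions (32 cores)" and "2 ODF (4 cores)" figures in the article; actual subscription terms should be confirmed with Red Hat or Supermicro. The function names are illustrative.

```python
import math

# Assumption: inferred from "16 OCP subscriptions (32 cores)" in the article.
CORES_PER_SUBSCRIPTION = 2
# Default per-node Pod limit quoted in the article.
DEFAULT_MAX_PODS_PER_NODE = 250


def subscriptions_needed(cores: int) -> int:
    """Subscriptions required to cover `cores`, rounding up."""
    if cores < 0:
        raise ValueError("cores must be non-negative")
    return math.ceil(cores / CORES_PER_SUBSCRIPTION)


def pod_ceiling(nodes: int) -> int:
    """Upper bound on Pods for `nodes` at the default per-node limit."""
    return nodes * DEFAULT_MAX_PODS_PER_NODE


print("Low end:  OCP =", subscriptions_needed(32), " ODF =", subscriptions_needed(4))
print("High end: per 64-core node =", subscriptions_needed(64))
print("Pod ceiling for a 3-node compact cluster =", pod_ceiling(3))
```

The Pod ceiling is a scheduling limit rather than a performance guarantee, so real capacity will depend on how much CPU and memory each Pod requests.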

Data Governance

Data locality is a tricky subject. Many companies and governments have very strong regulations about where data must reside. With an on-premise solution, you and any auditors can be assured, even to the point of laying on of hands, where data resides. Physically accessing the storage in a public cloud is simply not possible—period, end of story.

Conclusion

Supermicro identified a market and created a reliable, affordable solution to fill it, as it has often done in the past. In this case, the market is for an on-premise K8s cluster that combines Intel’s proven third-generation Xeon processors for reliability, Intel Optane PMem to contain cost without affecting performance, and Red Hat’s proven K8s software. These pre-defined solutions allow customers to quickly deploy a K8s cluster, as they come from Supermicro pre-architected, validated, and tested.

Their solution can be deployed in days rather than the weeks it would ordinarily take to deploy a roll-your-own solution, and as Supermicro’s solution has been extensively tested for compatibility, you will not run into any time- or cost-busting gotchas that tend to occur during the deployment of new technology. Speaking of costs, the pricing model of Supermicro’s solution prevents the sticker shock that can come when using a public cloud.

No matter what your needs are, Supermicro has developed a solution that has you covered, from the Edge SKU designed for edge AI/ML workloads or small development teams to the Extreme SKU designed for regional and core data centers, where production workloads can replace public cloud deployments.

To get more information on Supermicro’s K8s solution, you can visit their web portal here.

Check out the Jumpstart Program to get hands-on with this solution.
