Although virtualization has existed since the 1960s (thank you, IBM), virtual machines (VMs) became mainstream in the early 2000s. The idea was to enable better utilization of the available hardware resources by running multiple VMs on a single physical hardware platform.

VMware started developing virtualization software for x86-based architectures in the ’90s and released its initial virtualization product, VMware Workstation, in 1999. The breakthrough for widespread adoption of VM technology began with the 2001 introduction of VMware ESX Server. Other vendors, like Microsoft and Citrix, entered the virtualization market to compete for market share.

Virtualization technology has continuously evolved, delivering innovations such as improved hardware utilization, enhanced flexibility, simplified software deployment, and substantial cost savings. Virtual machines have become a cornerstone across diverse landscapes, from data centers and enterprise IT systems to cloud computing platforms and even personal home setups. Most business-critical enterprise applications on virtualization platforms access shared storage via Fibre Channel.

However, each vendor had a proprietary management toolset, and most did not integrate with other platforms. So, managing hundreds or thousands of VMs was a nightmare with multiple tools, interfaces, and supported storage devices. Operations teams faced a management challenge with the added complexity of managing Fibre Channel (FC) fabrics and attempts to troubleshoot VM issues.

Making Management More Manageable

In 2007, VMware introduced ESXi in response to the changing landscape and demands in the virtualization market. ESXi improved upon the original VMware ESX server with increased efficiency, better security, streamlined management, and tighter integration.

VMware has always had a hand in VM management, using various identifiers to uniquely identify virtual machines within its ecosystem. Initially, VMware used the unique MAC address to identify VMs, then moved on to BIOS Universally Unique Identifiers (UUIDs) that stored configuration data and included the VM Identifier. With ESXi 4.x, VMware introduced the concept of Instance UUIDs to track the VM instances during migration operations and across the vCenter Server.

With the release of VMware vSphere 5.x, VMware adopted a standardized approach to implementing universally unique VM-UUIDs (UUID-GUID format). These VM-UUIDs (aka VM-ID) were used to uniquely identify virtual machines across the entire vCenter Server environment.

Using the information in the unique identifier assigned to virtual machines (VM-IDs), managing a virtualized ecosystem and the associated storage area network (SAN) becomes easier for operations. Leveraging the detail in the VM-ID, administrators can identify and address individual VMs and perform critical tasks like provisioning storage, managing virtual machine lifecycles, and monitoring performance metrics. Such innovations toward management simplicity helped VMware become the majority market-share leader in server virtualization for enterprise data centers.

Fibre Channel VM-ID

Although VM-ID uniquely identifies a VM in a vCenter domain, this ID is not typically shared or available to other infrastructure components that the VM interacts with, like storage and networking devices. New capabilities in FC allow the VM-ID to be shared across the SAN fabric. In the latest releases of VMware vSphere, including versions 7.x and 8.x, VM-ID is a full-featured, robust technology offering numerous benefits when integrated with modern Fibre Channel infrastructure.

By leveraging VM-IDs, administrators can implement fine-grained control and monitoring of storage traffic within the Fibre Channel fabric. But it’s more than that. Administrators can use the VM-ID to fine-tune access control policies per VM, ensuring secure and efficient data transfers between virtual machines and storage devices. VM-IDs also assist in troubleshooting and performance analysis by providing a means to track and isolate issues related to specific virtual machines.

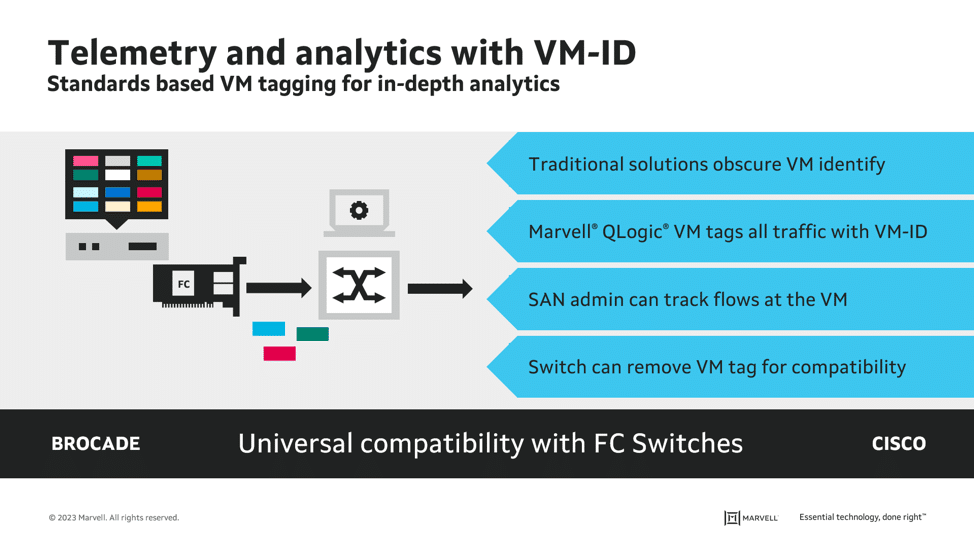

With their QLogic line of FC HBAs, HBA vendors like Marvell can leverage VM-IDs and enable the Fibre Channel network to integrate seamlessly with virtualization management platforms and tools from Brocade and Cisco.

VM-ID is Essential For Centralized SAN Management

Brocade developed the SANnav management solution to simplify and streamline SAN management and monitoring, utilizing VM-ID technology to deliver advanced analytics. However, VM-ID works with other SAN management solutions, such as Cisco’s Nexus Dashboard Fabric Controller (NDFC).

As with virtually every aspect of an IT administrator’s day, the sheer volume of data and the complexity of storage infrastructures make managing SANs more intense and challenging. SANnav is a valuable tool for storage administrators, providing a centralized platform for SAN management. Leveraging the information provided within VM-ID enables administrators to control, configure, and troubleshoot their SAN deployments more efficiently and confidently.

As a comprehensive SAN management software solution, SANnav offers a range of features to facilitate effective storage infrastructure management. One of the key advantages of SANnav is its ability to provide a consolidated view of the entire SAN environment. Administrators can gain a holistic perspective on the interconnected network of switches, storage devices, and hosts, allowing for better understanding and control over the SAN fabric.

Marvell QLogic: Making HBAs Smarter

Smart host bus adapters play a critical role in SAN management and VM-IDs. Marvell has been designing and developing processors and system-on-chip (SoC) technologies that span many new data center services. They offer a broad, innovative portfolio of data infrastructure semiconductor solutions spanning compute, networking, security, and storage. Marvell’s Fibre Channel controllers and HBAs include full virtualization support with VM-IDs, allowing thousands of VMs to access shared storage via the same Fibre Channel chip.

Marvell QLogic Fibre Channel excels in performance and functionality for storage area networks in VMware. It streamlines VM deployment using VM-ID and supports multiple ports with simultaneous FC and FC-NVMe setups for optimal flexibility.

VM-IDs Deliver the Details

It’s essential to understand how integrating VM-ID with a SAN management solution gives administrators a more granular view of the entire SAN infrastructure. SANnav and VM-ID serve different purposes within the management structure of a storage network, complementing each other by providing a comprehensive approach to storage network management in a virtualized environment.

A VM-ID differentiates and identifies individual virtual machines, enabling virtualization platforms to allocate resources, manage the lifecycle of virtual machines, facilitate networking, and integrate with management tools. VM-ID allows administrators to track and manage virtual machines, assign specific configurations, and monitor performance.

Marvell QLogic VM-ID and SANnav

There is no argument that server virtualization has greatly benefited most organizations, but it has brought several challenges to the infrastructure team and application owners. Initially, application owners were skeptical that a virtualized platform could meet the needs of their applications, and there was continued resistance to relinquishing stand-alone servers in favor of a virtualized environment.

It’s fair to say those concerns have not slowed the adoption of server virtualization. However, there were still complaints about needing more visibility into actual metrics, primarily where I/O was concerned. The lack of visibility was due to the hypervisor (in the case of VMware vSphere, the ESXi server) abstracting the physical disk into virtual disks placed on a data store, so that I/O to the data store from all the VMs on the hypervisor appeared only as an aggregate. So, although the overall performance of the I/O subsystem on the server could be seen, granular visibility into the individual VM and its application was missing.

To gain visibility into those individual VM flows, the FC fabric provides a standards-based virtual machine application identifier tag, VM-ID, to each VM. Once the application ID is assigned to a VM, the VM and Marvell QLogic 32GFC and 64GFC HBA on the hypervisor use the VM-ID to tag all frames for that VM.

The VM-ID identifies the specific VM instance initiating the I/O and any follow-on I/Os destined for the target. The VM-ID tag can only be applied if the storage array supports VM-ID. The information in each VM-ID means SANnav can correlate it with performance metrics, allowing administrators to monitor individual VMs, track resource utilization, and quickly identify potential performance bottlenecks. The information provided by VM-ID provides administrators with the detail necessary to identify and troubleshoot an affected VM and take steps to resolve the issue quickly.

Working in concert, SANnav utilizes the VM-ID information embedded into each FC frame by the Marvell QLogic FC HBA to efficiently and effectively track the performance of individual VMs.

VMware ESXi in the Enterprise

Organizations employ VMware ESXi to create and manage virtual machines. ESXi is a bare-metal hypervisor that enables organizations to consolidate multiple VMs on a single server, providing flexibility, resource optimization, and easier management of an IT infrastructure.

There are numerous benefits to deploying ESXi in the enterprise and some drawbacks. With the ability to run multiple VMs on a single physical server, organizations can reduce hardware costs and maximize server utilization. This can save on power consumption, cooling, and space requirements. However, the ease of creating new VMs is also the most significant contributor to VM sprawl.

With ESXi, administrators can allocate computing resources, like CPU, memory, and storage, to VMs based on need. This flexibility ensures efficient resource utilization and avoids over-provisioning and underutilizing server resources. ESXi supports features like vSphere High Availability (HA) and vSphere Fault Tolerance (FT), delivering increased server availability and resilience.

ESXi utilizes VMware vCenter Server as the centralized management interface to monitor, provision, and manage virtualized environments. Without VM-ID, managing a virtualized infrastructure like this would be challenging, if not impossible.

VM Sprawl

ESXi can undoubtedly contribute to VM sprawl if not correctly managed. VMware addresses these concerns by providing tools and best practices for IT administrators. These tools include resource and capacity planning, lifecycle management, and policy-based automation.

VMware ESXi and vCenter are crucial to enterprise virtualization deployment, enabling organizations to meet server consolidation, resource optimization, HA, and management requirements. However, VM-ID is fundamental in identifying and differentiating individual VMs, allowing administrators to efficiently manage and optimize their virtualized infrastructure.

Beware The I/O Blender Effect

The I/O blender effect occurs in virtualized environments, including ESXi, when storage input/output (I/O) patterns become randomized and less predictable. This can be due to the simultaneous operation of multiple virtual machines (VMs) sharing the same physical host and accessing storage resources.

In a virtualized environment, multiple VMs running on a single host may send I/O requests to the underlying storage infrastructure at different times, with varying levels of intensity and frequency. When the hypervisor receives these I/O requests, they get aggregated and serialized before being sent to the storage system. As a result, the I/O patterns generated by the VMs become “blended” or mixed. This makes identifying noisy neighbors and culprits that cause congestion or head-of-line blocking difficult and time-consuming, often resulting in missed SLAs.
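The blending described above can be illustrated with a small simulation. This is a minimal sketch, not a model of how ESXi actually schedules I/O: the three VM names, the block address ranges, and the round-robin interleaving are all assumptions chosen for illustration. Each VM issues a perfectly sequential stream, yet the merged stream that reaches the array contains no sequential requests at all.

```python
from itertools import chain, zip_longest

def sequential_fraction(lbas):
    """Fraction of requests whose block address directly follows the previous one."""
    if len(lbas) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(lbas, lbas[1:]) if cur == prev + 1)
    return hits / (len(lbas) - 1)

# Hypothetical per-VM streams: each VM reads 8 consecutive blocks
# from its own region of the shared data store.
vm_streams = {
    "vm-a": list(range(0, 8)),
    "vm-b": list(range(1000, 1008)),
    "vm-c": list(range(2000, 2008)),
}

# Assumed round-robin interleaving at the hypervisor (illustrative only).
blended = [
    lba
    for lba in chain.from_iterable(zip_longest(*vm_streams.values()))
    if lba is not None
]

per_vm = {name: sequential_fraction(s) for name, s in vm_streams.items()}
blended_score = sequential_fraction(blended)

print("per-VM sequential fraction:", per_vm)
print("blended sequential fraction:", blended_score)
```

Without a per-VM tag on each request, the array only sees the blended stream, which is why attributing load to a specific VM is difficult.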

Mitigating the effects of this phenomenon can be achieved through various techniques such as:

- Implementing storage tiering

- Utilizing QoS mechanisms

- Optimizing I/O

- Using VM-ID technology in FC HBAs

What Role Does VM-ID Play?

Although the VM-ID does not directly affect the I/O blender effect, it significantly mitigates the impact. The VM-ID can be leveraged by implementing the following:

- Associate VM-IDs with specific storage QoS policies

- Map specific VM-IDs to dedicated storage resources

- Utilize VM-IDs for load balancing

- Use VM-IDs in conjunction with monitoring tools

So, even though the VM-ID does not impact the I/O blender effect directly, it provides visibility and control over individual VMs. Administrators can tailor storage provisioning and prioritize resources based on VM-IDs, resulting in improved performance, reduced contention, and better overall management of the I/O blender effect.
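As a rough illustration of associating VM-IDs with QoS policies, the sketch below keeps a lookup table from an Application ID to a policy and uses it to order pending work. Everything here is hypothetical: the policy names, the priority numbering, and the table itself are assumptions for illustration and do not correspond to any Brocade, Cisco, Marvell, or VMware interface.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class QosPolicy:
    name: str
    priority: int  # assumed convention: lower number is served first

# Hypothetical policy table keyed by the Application ID carried in the VM tag.
POLICIES = {
    16: QosPolicy("database", 0),
    18: QosPolicy("analytics", 1),
}
DEFAULT_POLICY = QosPolicy("best-effort", 2)

def policy_for(app_id):
    """Return the policy for a VM, falling back to best-effort if unlisted."""
    return POLICIES.get(app_id, DEFAULT_POLICY)

def order_by_priority(app_ids):
    # sorted() is stable, so VMs in the same class keep their arrival order.
    return sorted(app_ids, key=lambda a: policy_for(a).priority)

queue = order_by_priority([24, 18, 16])
print(queue)  # [16, 18, 24]
```

The point of the sketch is only that a stable per-VM identifier gives policy something to key on; how a real fabric or array enforces the policy is outside its scope.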

Marvell Continues to Innovate

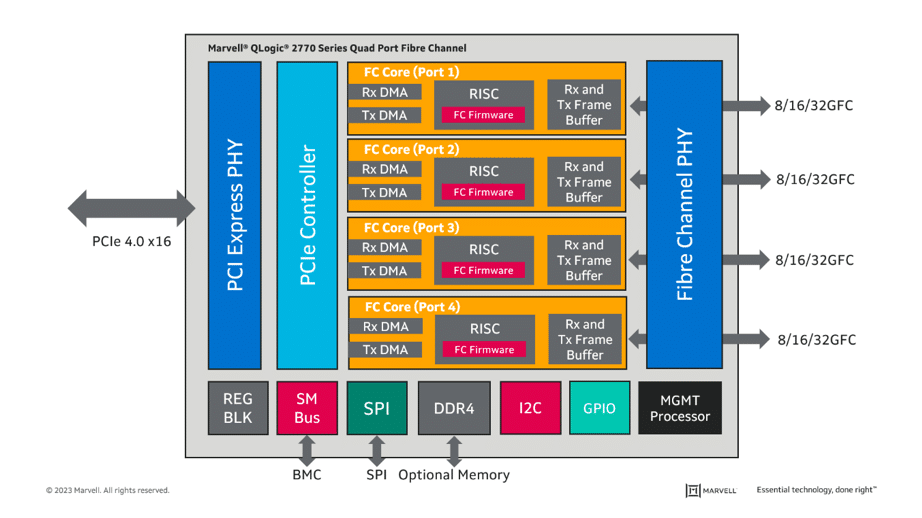

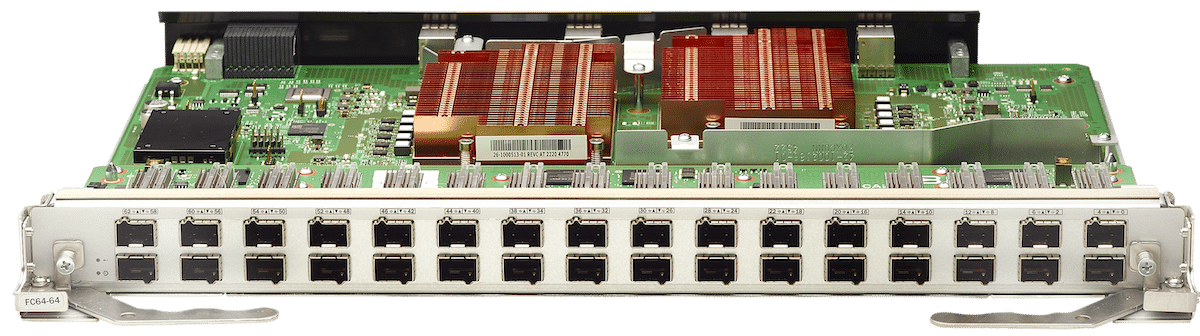

Marvell Fibre Channel HBAs offer performance and functionality for Fibre Channel Protocol (FCP) and NVMe over Fibre Channel (FC-NVMe). The HBAs are designed using isolated paths for each port, enabling line-rate performance per port with exceptional reliability. The adapters deliver millions of IOPS, microsecond latency, and full line rate throughput up to 64GFC. Marvell StorFusion™ and VM-ID technology enable simplified deployment and orchestration integration into Fibre Channel SANs.

Marvell 2770 Series Adapters Block Diagram with independent resources per port

Marvell StorFusion

Marvell StorFusion technology includes advanced capabilities enabled when deployed with supported Brocade and Cisco switches. Combining these solutions, SAN administrators can take advantage of enhanced features that improve availability, accelerate deployment, and increase network performance.

Starting with the QLE2690 HBA and further enhanced with the QLE2770 and QLE2870 HBA series, Marvell adapters have supported several standards-based virtualization features that optimize virtual server deployment, troubleshooting, and application performance.

Marvell VM-ID technology integrates easily with Brocade and Cisco switches, allowing customers to monitor and manage QoS in their Fibre Channel storage networks; for example, load balancing VM clusters with storage to ensure efficient use of the storage resources.

Starting with VMware ESXi 6.x, support for tagging I/O requests and responses with the VM-ID of the corresponding virtual machine provides complete visibility at the VM level.

Additionally, Marvell StorFusion Universal SAN Congestion Mitigation (USCM) technology, based on industry-standard Fabric Performance Impact Notifications (FPIN), allows the HBAs and switches in the SAN to identify and mitigate potential congestion issues within the fabric. Support for N_Port ID virtualization (NPIV) enables a single FC adapter port to provide multiple virtual ports for increased network scalability. Standard class-specific control (CS_CTL)-based QoS technology per NPIV port allows multi-level, per-VM bandwidth controls and guarantees. As a result, mission-critical workloads can be assigned a higher priority than less time-sensitive storage traffic for optimized performance.

Tagging I/O Frames for Fibre Channel VMs

Under the covers, FC VM-ID technology involves the FC HBA tagging I/O frames with VM tags and the FC switch reading those tags and recording statistics per VM. This brings several benefits, primarily improved visibility, resource allocation, and troubleshooting. Tagging I/O frames with VM tags delivers enhanced visibility of the traffic generated by the individual VM. This allows for effective monitoring, analysis, and management of storage traffic. With VM tagging, administrators get a clear view of resource allocation, implement QoS policies specific to an individual VM, simplify troubleshooting, and improve security and access control.
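The tag-and-count idea can be sketched in a few lines. This is a simplified model under stated assumptions, not switch firmware: the `Frame` fields and the `tally` function are hypothetical names, and real frames carry far more than a port address, a tag, and a length. Counters are keyed on the pair of N_Port ID and Application ID, since the same Application ID can appear behind more than one host port.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Frame:
    n_port_id: str          # host port address, e.g. "010300"
    app_id: Optional[int]   # VM tag set by the HBA; None if untagged
    payload_bytes: int

def tally(frames):
    """Accumulate frame and byte counts per (N_Port ID, Application ID)."""
    stats = defaultdict(lambda: {"frames": 0, "bytes": 0})
    for f in frames:
        key = (f.n_port_id, f.app_id)
        stats[key]["frames"] += 1
        stats[key]["bytes"] += f.payload_bytes
    return dict(stats)

# Made-up traffic for illustration.
frames = [
    Frame("010300", 16, 2048),
    Frame("010300", 18, 512),
    Frame("010300", 16, 2048),
    Frame("010800", 16, 1024),
    Frame("010800", None, 256),
]

stats = tally(frames)
busiest = max(stats, key=lambda k: stats[k]["bytes"])
print(busiest, stats[busiest])
```

Untagged frames still get counted, but only under a `None` key for their port, which is the aggregate view administrators had before VM-ID.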

Fibre Channel VM-ID technology requires support for VM-ID in the FC HBA, FC switch, and storage array. The majority of modern FC HBAs and switches have support for VM-ID. However, VM-ID is only supported on storage arrays from NetApp and Pure Storage, posing a challenge to this technology’s widespread adoption and deployment. Recent innovation on Brocade switches removes this limitation with Tagless VM-ID, or VM-ID+, technology.

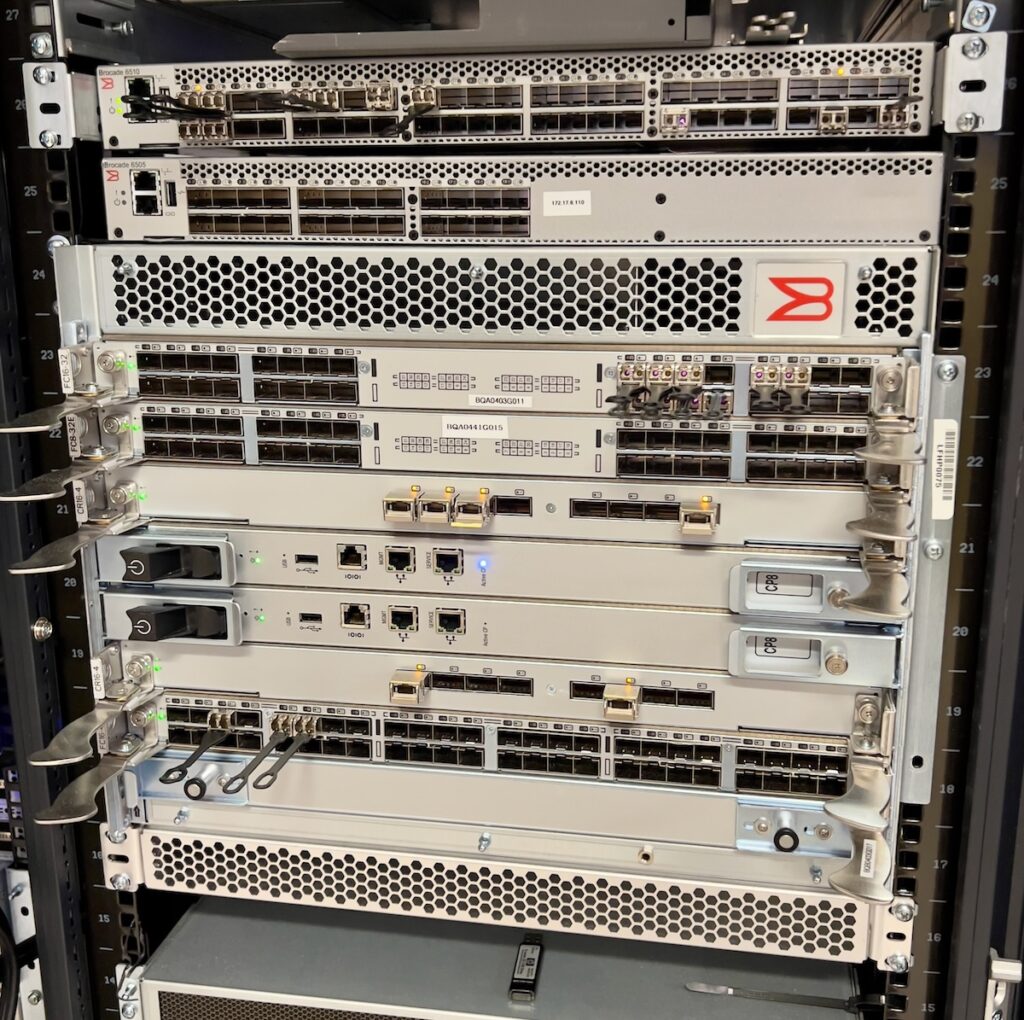

Marvell VM-ID at Flash Memory Summit 2023

At Flash Memory Summit (FMS), an annual international memory and storage showcase, we visited the Marvell booth to get a look at what they were working on. Alongside demos of SSD controllers, NVMe accelerators, and CXL chipsets was a live Fibre Channel VM-ID demo. The VM-ID demo was well attended by customers and partners. VM-ID for Fibre Channel is definitely taking off.

Tagless VM-ID or VM-ID+

As mentioned above, VM-ID+ removes the dependency on the storage array to support VM-ID tagging. VM-ID+ is configured on the ports of the SAN fabric where the storage array is connected. When VM-ID+ is enabled, frames sent from the hypervisor to the storage array have the VM-ID tag removed by the Brocade Gen 7 switch at the egress port attached to the storage array. The frames the storage array sends to the hypervisor have the VM-ID tag added by the fabric. The fabric switches maintain the mapping and the collection of VM telemetry data.
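The strip-and-restore behavior described above can be modeled as a small state machine. This is a toy sketch under loud assumptions: the article does not say how the fabric keys its mapping, so keying on the initiator address plus a per-I/O exchange number is a guess made for illustration, and `TaglessPort` is a hypothetical name rather than anything in Fabric OS.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class Frame:
    initiator: str          # hypervisor-side N_Port ID
    exchange: int           # assumed per-I/O exchange identifier
    app_id: Optional[int]   # VM tag; None means untagged

class TaglessPort:
    """Toy model of a switch port facing an array that lacks VM-ID support."""

    def __init__(self):
        self._tags = {}  # (initiator, exchange) -> app_id

    def to_array(self, frame):
        # Remember the tag, then forward the frame without it.
        if frame.app_id is not None:
            self._tags[(frame.initiator, frame.exchange)] = frame.app_id
        return replace(frame, app_id=None)

    def from_array(self, frame):
        # Restore the tag for the matching I/O, if one was recorded.
        tag = self._tags.get((frame.initiator, frame.exchange))
        return replace(frame, app_id=tag)

port = TaglessPort()
outbound = port.to_array(Frame("010300", 0x1A, 16))
reply = port.from_array(Frame("010300", 0x1A, None))
unknown = port.from_array(Frame("010800", 7, None))
print(outbound.app_id, reply.app_id, unknown.app_id)  # None 16 None
```

In this model the array never sees a tag, while the fabric can still attribute both directions of the I/O to the originating VM.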

Tracking VMs With VM-ID From The Command Line

The Brocade FC switch commands that display current VMs and their statistics operating within the fabric:

A. Execute “appserver --show -all”; the referenced output is shown below. It reflects the six VMs in operation using VM-ID; in this case, there are three VMs from each ESX host. The last line shows the total number of VMs.

sw0-G720:FID128: > appserver --show -all

————————————————————

Displaying results for Fabric

————————————————————

N_Port ID: 010300

Entity ID (ASCII) : 52 b3 0f fc 5a 05 47 a6-18 eb aa b4 b4 8f 9a 5f

Entity ID (Hex): 0x35322062332030662066632035612030352034372061362d3138206562206161206234206234203866203961203566

Application ID: 0x00000010 (16)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

N_Port ID: 010300

Entity ID (ASCII) : 52 2c c3 8f c8 3f f5 75-a5 6c db bd 89 3a 95 13

Entity ID (Hex): 0x35322032632063332038662063382033662066352037352d6135203663206462206264203839203361203935203133

Application ID: 0x00000012 (18)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

N_Port ID: 010300

Entity ID (ASCII) : 52 b1 ac 8d 2a aa 93 c4-5e 51 98 24 84 63 e0 c2

Entity ID (Hex): 0x35322062312061632038642032612061612039332063342d3565203531203938203234203834203633206530206332

Application ID: 0x00000018 (24)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

N_Port ID: 010800

Entity ID (ASCII): 52 bb 51 48 8a 5c 98 33-7a 74 c6 d5 27 05 58 49

Entity ID (Hex): 0x35322062622035312034382038612035632039382033332d3761203734206336206435203237203035203538203439

Application ID: 0x00000010 (16)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

N_Port ID: 010800

Entity ID (ASCII): 52 36 64 98 87 5d a5 c6-02 38 0a d7 85 42 3b 4b

Entity ID (Hex): 0x35322033362036342039382038372035642061352063362d3032203338203061206437203835203432203362203462

Application ID: 0x00000012 (18)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

N_Port ID: 010800

Entity ID (ASCII) : 52 de 5b 4f a9 9f 98 12-65 4f e7 ca c5 78 c2 3c

Entity ID (Hex): 0x35322064652035622034662061392039662039382031322d3635203466206537206361206335203738206332203363

Application ID: 0x00000018 (24)

Entity Name:

Host Identifier:

Symbolic Data:

——————–

The application Server displays six entries.
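For scripting against this output, the listing can be parsed into one record per VM. The sketch below is a hedged example that assumes the text has already been captured from the switch (how it is collected is out of scope) and uses two entries copied from the output above as sample input; `parse_entries` and `decode_entity_hex` are hypothetical helper names. In this sample, the Entity ID (Hex) field decodes to the same characters shown in the Entity ID (ASCII) field, which the code checks.

```python
import re

# Two entries copied from the output above, with blank lines removed.
SAMPLE = """\
N_Port ID: 010300
Entity ID (ASCII) : 52 b3 0f fc 5a 05 47 a6-18 eb aa b4 b4 8f 9a 5f
Entity ID (Hex): 0x35322062332030662066632035612030352034372061362d3138206562206161206234206234203866203961203566
Application ID: 0x00000010 (16)
N_Port ID: 010800
Entity ID (ASCII): 52 bb 51 48 8a 5c 98 33-7a 74 c6 d5 27 05 58 49
Entity ID (Hex): 0x35322062622035312034382038612035632039382033332d3761203734206336206435203237203035203538203439
Application ID: 0x00000010 (16)
"""

# "key : value" with optional spaces around the colon; separator lines do not match.
FIELD = re.compile(r"^\s*([^:]+?)\s*:\s*(.*)$")

def parse_entries(text):
    """Split the listing into one dict per VM; each entry starts at 'N_Port ID'."""
    entries, current = [], None
    for line in text.splitlines():
        m = FIELD.match(line)
        if not m:
            continue
        key, value = m.group(1), m.group(2).strip()
        if key == "N_Port ID":
            current = {}
            entries.append(current)
        if current is not None:
            current[key] = value
    return entries

def decode_entity_hex(hex_field):
    """Decode the hex field into the text it encodes."""
    digits = hex_field[2:] if hex_field.startswith("0x") else hex_field
    return bytes.fromhex(digits).decode("ascii")

entries = parse_entries(SAMPLE)
by_port = {}
for e in entries:
    app_id = int(e["Application ID"].split()[0], 16)
    by_port.setdefault(e["N_Port ID"], []).append(app_id)
    # In this sample the hex field is the ASCII string, byte for byte.
    assert decode_entity_hex(e["Entity ID (Hex)"]) == e["Entity ID (ASCII)"]

print(by_port)  # {'010300': [16], '010800': [16]}
```

Grouping by N_Port ID matters because Application IDs are reused across host ports, as both sample entries show with ID 16.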

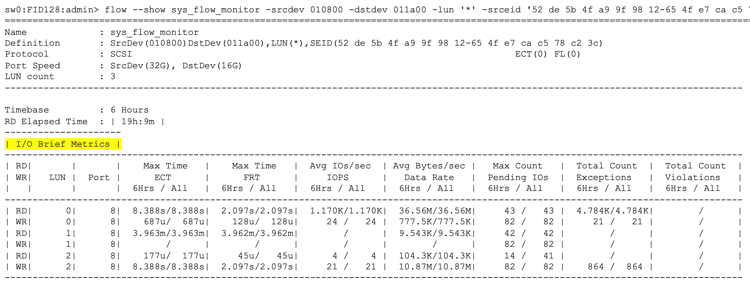

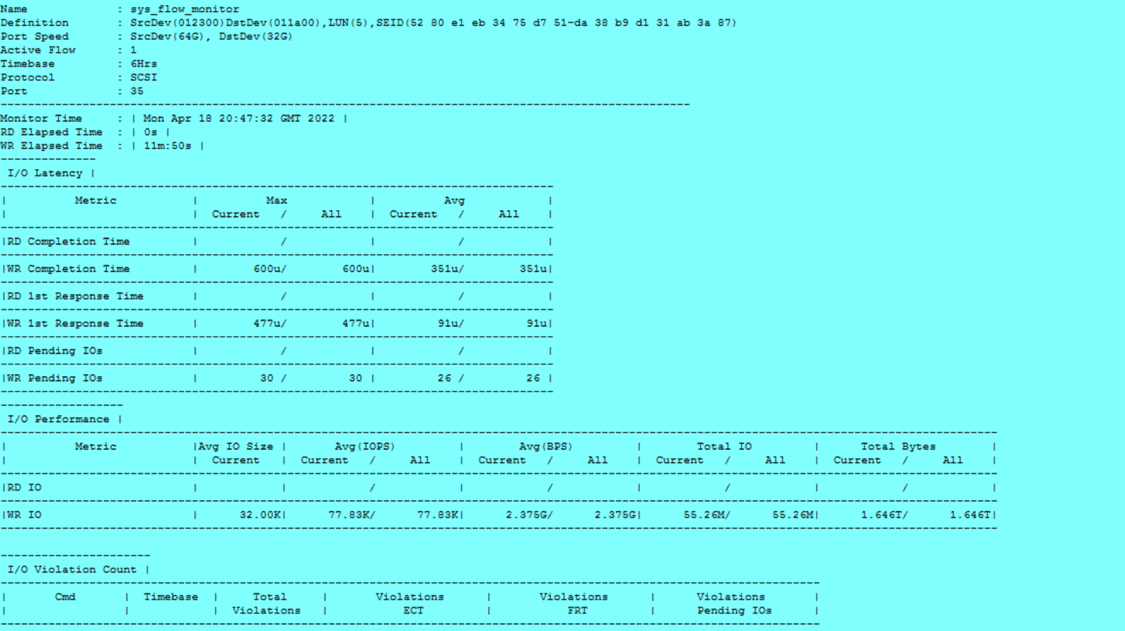

B. Brocade Analytics Engine reported stats for VMs

Use the commands below to detail the I/O metrics for each VM

C. Checking and setting a Brocade switch target port for Tagless VM-ID (for storage arrays other than NetApp and Pure Storage):

sw0-G720:FID128:admin> portcfgappheader -h

Usage:

portCfgAppHeader <[slot/]port> --enable/--disable

D. Running the command on a port that has been previously configured will show the following:

portcfgappheader 26 --enable

Same configuration for port 26

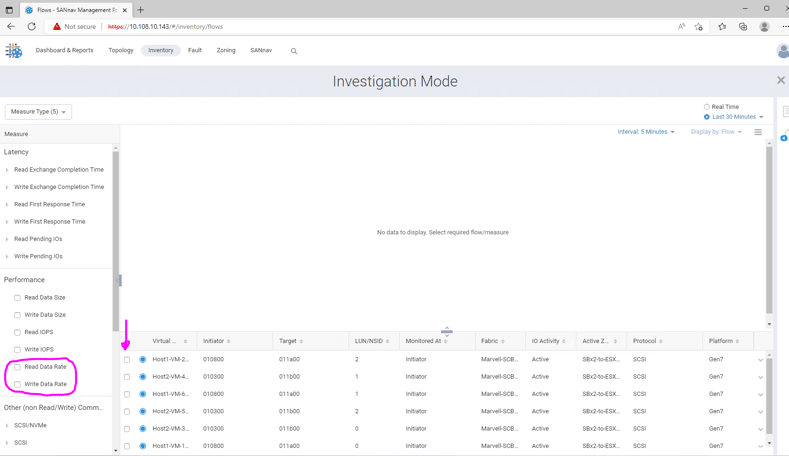

SANnav’s Investigation Mode provides insight into the operational performance of individual VMs. It collects and stores SAN performance statistics and telemetry data, then provides clear and intuitive time series graphs that plot key traffic metrics. It includes MAPS violation details for ports, links and trunks, extension tunnels and circuits, and flows to help users understand and investigate complex traffic pattern behaviors. In addition, it can collect metrics more frequently and in near real-time (at 10-second intervals) for selected ports.

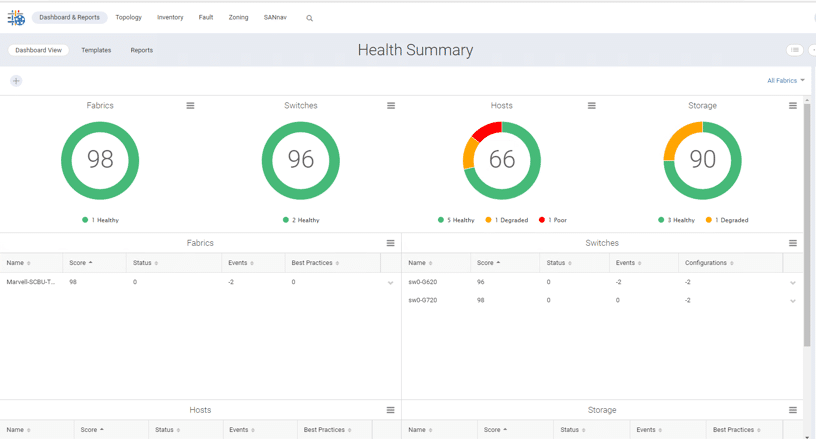

After logging in, the SANnav Dashboard view will display the fabric/switches that are being managed.

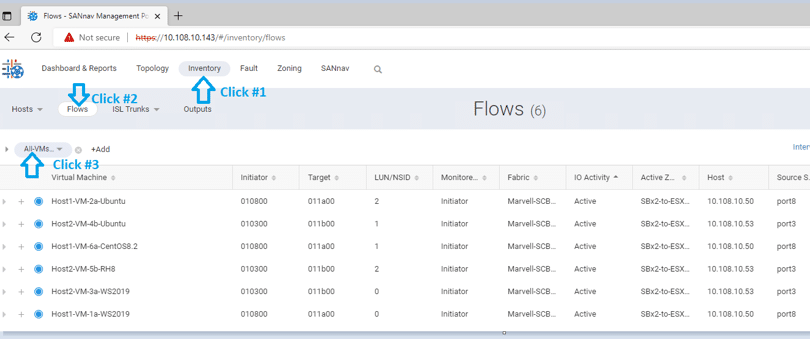

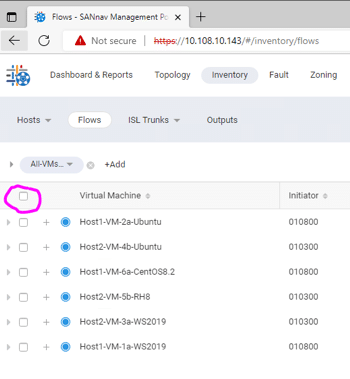

1. Navigate to Inventory > Flows and select the user-defined filter “All-VMs-flows.” The detailed flows will be displayed as illustrated below:

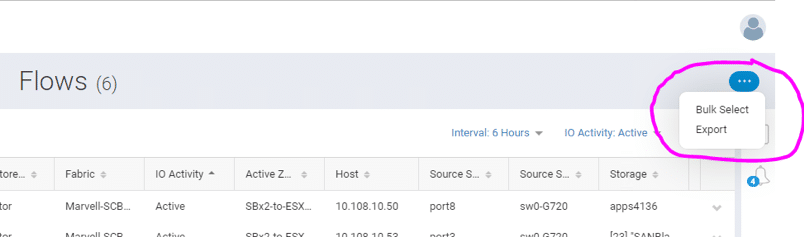

2. Click on the icon (…) on the top right corner to display a drop-down menu, then click “Bulk Select.”

3. In the banner area above all the VMs, click the checkbox to select all VMs.

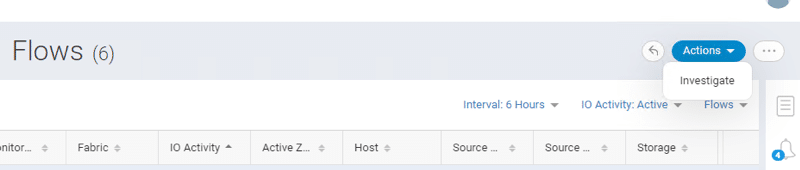

4. Once the VMs have been selected, click the Action button in the top-right, then select “Investigate.”

Within the Investigation Mode window:

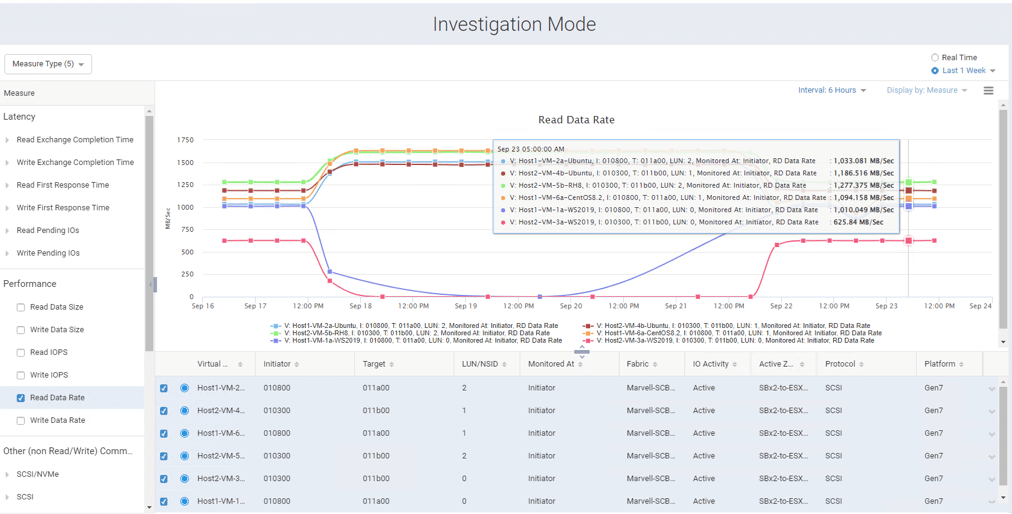

5. Click on the checkboxes for each of the VMs and click the “Read Data Rate” option from the left panel.

6. Next, click the down arrow next to the “Last 30 Minutes.”

7. Another window for “Select Date Range” will appear. Click on the left pre-defined option for “Last 1 week”, then click “Apply.”

8. Then click the down arrow next to the “Interval: 5 Minutes” and select the “6 Hours” option.

9. A detailed information view will be displayed for the six VMs and the associated traffic present within the fabric. Moving the cursor and hovering over a specific graphed time index will show the Read Data Rate Performance for each.

10. Then click the down arrow next to the “Interval: 5 Minutes” and select the “6 Hours” option.

11. A detailed information view will be displayed for the six VMs and the associated traffic present within the fabric. Moving the cursor and hovering over a specific graphed time index will show the Read Data Rate Performance for each.

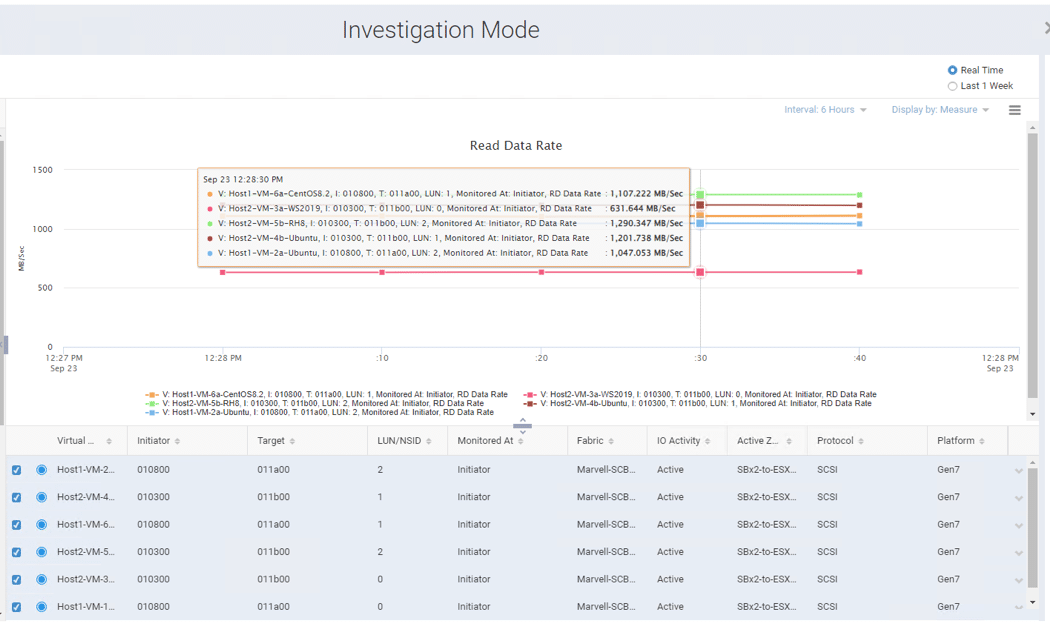

12. Click the “Real Time” option from the top right to display and refresh details every ten seconds.

The performance and IO profiles of individual VMs enabled by VM-ID, and shown in the above SANnav screens, enable SAN and storage administrators to gain visibility into the traffic patterns for each VM.

Marvell QLogic VM-ID technology and Brocade SANnav are innovation leaders in modern data management. With VMware ESXi, VM-ID’s seamless VM deployment and orchestration capabilities, and SANnav’s comprehensive storage management tools, businesses can confidently and easily navigate the complexities of virtualized environments.

These solutions empower organizations to harness the full potential of their data infrastructure, ensuring optimal performance, efficiency, and adaptability. As technology evolves, Marvell QLogic VM-ID and SANnav remain steadfast partners in the journey toward streamlined data management and enhanced operational excellence.

This report is sponsored by Marvell. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
