The Supermicro SuperServer 1029U-TN10RT is a 1U dual-processor complete system. Supermicro designed the SuperServer to address a slew of popular use cases like virtualization, databases, cloud computing and others that can benefit from high density compute power. The system has been updated to support second generation Intel Xeon Scalable CPUs and it’s one of the first to ship with support for Intel Optane DC Persistent Memory Modules (PMM).

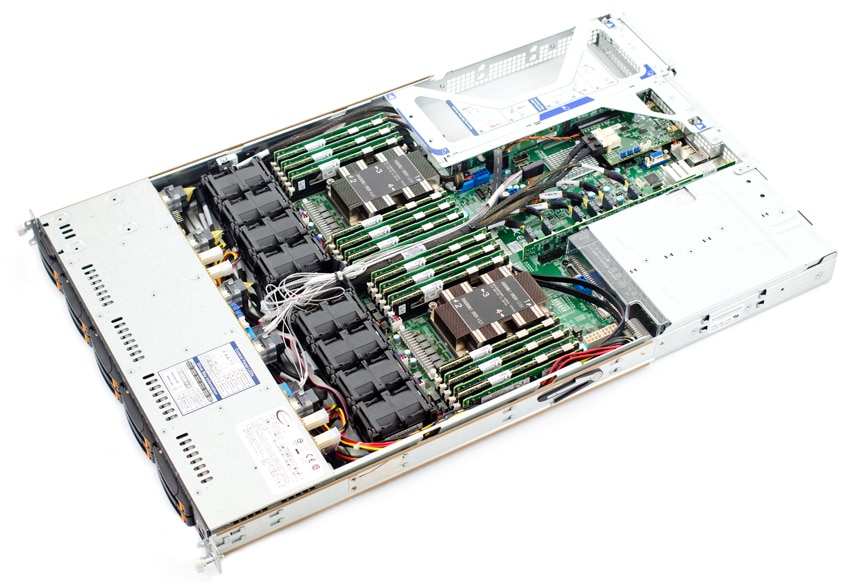

In addition to supporting the latest Intel data center technologies, the system provides storage via ten hot-swappable 2.5″ NVMe bays in the front. Internally, Supermicro provides two M.2 slots, one SATA and one NVMe, and additional M.2 slots can be added as an option. The board has 24 DIMM slots, which can be populated in the traditional way with DRAM alone or with a mix of DRAM and persistent memory, as in this review's configuration. For connectivity, the system has two 10GBase-T LAN ports on board. Additional connectivity is available via two PCIe 3.0 x16 (FH, 10.5″L) card slots.

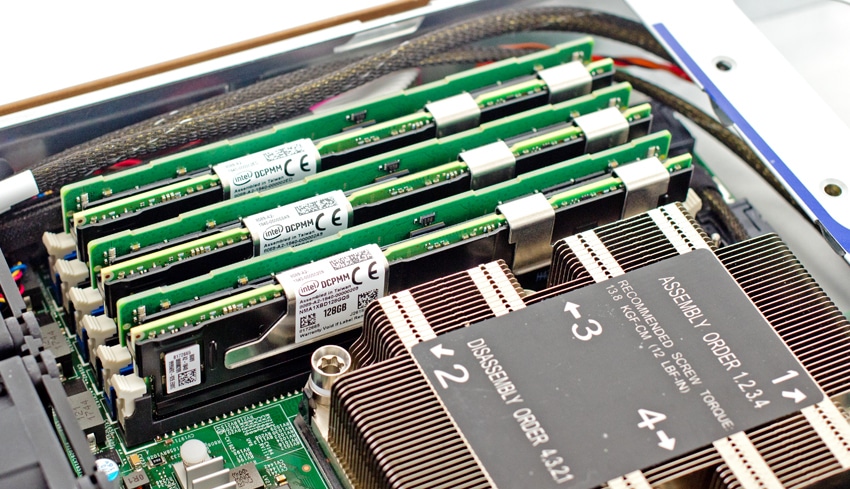

As noted, our review system features two Intel Xeon Scalable 8268 CPUs (2.9GHz, 24C) along with 12 DRAM DIMMs and 12 Intel Optane DC persistent memory modules. While it’s still very early in the persistent memory journey, this configuration, with a 4:1 capacity ratio of persistent memory to DRAM, every memory slot on the board populated, and two Intel CPUs, is likely to be a typical and recommended server configuration for taking full advantage of these new technologies. In addition to these core components, the system under review includes ten Intel DC P4510 NVMe SSDs.
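The 4:1 figure follows directly from the module sizes in the specification list. A minimal sketch of that arithmetic, using nominal capacities only (the variable names are illustrative):

```python
# Memory population of the review system, taken from the specification list.
DRAM_DIMMS = 12
DRAM_GB_EACH = 32
PMM_MODULES = 12
PMM_GB_EACH = 128


def capacity_gb(count: int, size_gb: int) -> int:
    """Nominal capacity of a set of identical modules."""
    return count * size_gb


dram_total = capacity_gb(DRAM_DIMMS, DRAM_GB_EACH)
pmm_total = capacity_gb(PMM_MODULES, PMM_GB_EACH)
ratio = pmm_total / dram_total

print(f"DRAM: {dram_total} GB, PMM: {pmm_total} GB, ratio {ratio:.0f}:1")
```

With 12 x 32GB of DRAM and 12 x 128GB of persistent memory, the totals come to 384GB and 1,536GB respectively.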

Supermicro SuperServer 1029U-TN10RT Specifications

- Chassis – Ultra 1U SYS-1029U-TN10RT

- CPU – 2 x Intel Xeon Scalable 8268 (2.9GHz, 24C)

- Storage – 10 x Intel DC P4510 2TB NVMe SSD, 1DWPD

- DRAM – 12 x 32GB DDR4-2933

- Persistent Memory – 12 x 128GB DDR4-2666 Intel Optane DC PMMs

- Network – 2 x 10GBase-T

Design and Build

As stated, the Supermicro SuperServer 1029U-TN10RT is a dense 1U server that can house two of the new Intel Xeon Scalable processors. The majority of the front of the device is taken up by 2.5″ NVMe drive bays, ten in total. On the right is the control panel with the power button, UID button, and status LEDs. Beneath are two USB 3.0 Type-A ports.

Flipping the device around to the rear, two PSUs are on the left, followed by two 10GBase-T RJ45 LAN ports, two USB 3.0 ports, a dedicated LAN port for IPMI, a serial port, a UID indicator and switch, a video port, and two PCIe slots.

The 2.5″ bays across the front are hot-swap capable and users can easily push the orange tab to extend out the handle for quick removal/installation. On this server and others from Supermicro, orange caddies indicate NVMe support.

Our review system came with all ten 2.5″ bays populated with Intel P4510 2TB NVMe SSDs.

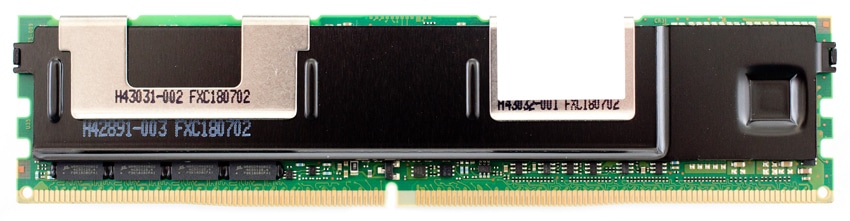

The Intel Optane persistent memory modules have the same form factor as traditional DRAM. They don’t require additional power cabling or cooling. Heat spreaders are included as part of the persistent memory design, which follows the same width and height constraints as DRAM modules. As a result, we shouldn’t see any changes needed for slim servers with airflow cowls over the DRAM slots.

As with many Supermicro servers, the top cover comes off easily by pressing two push buttons and removing the attachment screws in the rear. This provides quick access to the new CPUs and RAM, makes it easy to install a GPU or other PCIe devices and, importantly for this review, allows for installing the Intel Optane DC PMMs.

Performance

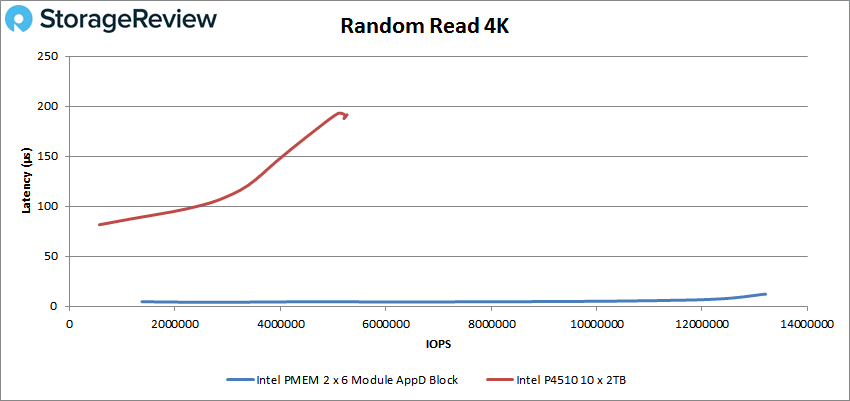

In our first look at the new Intel Optane DC persistent memory, we focus on measuring its performance in a fairly traditional form: comparing its block storage performance to standard-issue NVMe SSDs. Persistent memory can operate in different modes, and we plan to focus on specific use cases around Memory Mode and byte-level App Direct access in the near future. For this review, we pit 12 128GB persistent memory modules (6 per CPU), configured in two pools, against 10 Intel P4510 2TB NVMe SSDs. Our benchmark application in this scenario is still vdbench, with our four corners workloads as well as database workload profiles. Going forward we will be transitioning back to FIO, as well as to database applications that make use of persistent memory directly.

In terms of our benchmark technical configuration, we group 6 persistent memory modules together to form a single pool (one pool per CPU) and allocate the full pool space to the persistent memory namespace. At the OS level we then pre-fill the raw persistent memory modules, partition them to 50% of their total size, and run our workloads on that smaller section. The workloads are intended to show sustained performance, mimicking how application datasets would operate on the modules.
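To put rough numbers on that layout, here is a minimal sketch of the pool and test-region sizes. It uses nominal module capacities and assumes the 50% partition is applied to each pool's namespace; usable namespace capacity on a real system will be somewhat lower than nominal, and the variable names are illustrative.

```python
# Pool layout described in the text: one pool per CPU, 6 modules per pool.
MODULES_PER_POOL = 6
MODULE_GB = 128        # nominal module capacity
POOLS = 2              # one per CPU
TEST_FRACTION = 0.5    # workloads run on a partition of 50% of the space

pool_gb = MODULES_PER_POOL * MODULE_GB
tested_per_pool_gb = pool_gb * TEST_FRACTION
tested_total_gb = tested_per_pool_gb * POOLS

print(f"Nominal pool size: {pool_gb} GB")
print(f"Tested region per pool: {tested_per_pool_gb:.0f} GB")
print(f"Tested region across both pools: {tested_total_gb:.0f} GB")
```

Under those assumptions, each pool is nominally 768GB and the workloads touch about 384GB of it.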

Our first test is the 4K random read test. Here the persistent memory started at 1,371,386 IOPS at 4.6μs and went on to peak at 13,169,761 IOPS at a latency of only 12.1μs. The Intel NVMe drives did well, with a peak of 5,263,647 IOPS at a latency of 191.4μs, but the PMMs clearly crushed them with over twice the throughput and only about 6% of the latency.
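The relative figures can be checked from the peak numbers quoted for this test. The helper below is only an illustrative sketch, not part of the benchmark tooling:

```python
def compare(pmm_iops: float, pmm_lat_us: float,
            nvme_iops: float, nvme_lat_us: float) -> tuple[float, float]:
    """Return (throughput ratio, latency fraction) of PMM relative to NVMe."""
    return pmm_iops / nvme_iops, pmm_lat_us / nvme_lat_us


# Peak 4K random read figures quoted in the text.
throughput_ratio, latency_fraction = compare(13_169_761, 12.1, 5_263_647, 191.4)

print(f"{throughput_ratio:.2f}x the throughput, "
      f"{latency_fraction:.1%} of the latency")
```

That works out to roughly 2.5 times the throughput at a little over 6% of the latency.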

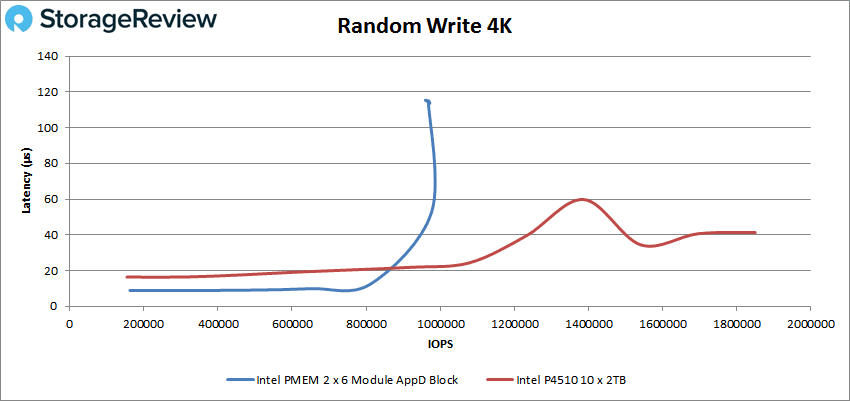

Looking at 4K random write, we see a limitation of the technology. Whereas the read test above shows a dramatic performance increase, the persistent memory hits its peak much sooner in writes. Here the persistent memory started at 162,642 IOPS with a latency of 8.9μs and peaked around 980K IOPS at about 60μs latency before dropping off.

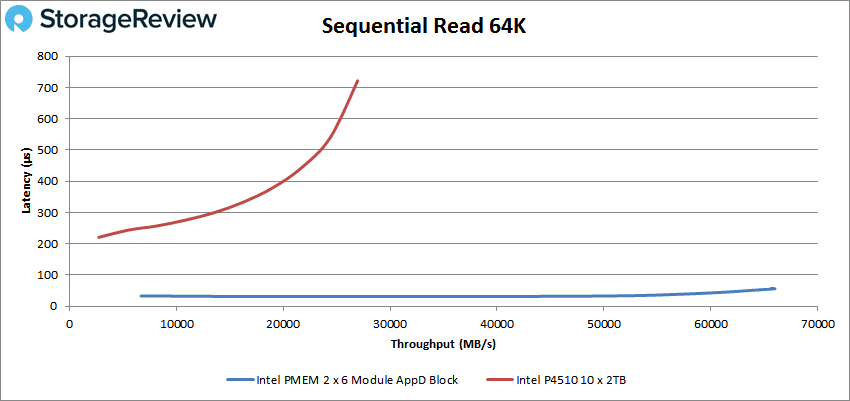

Switching over to sequential workloads, in 64K read the Optane DC PMMs started at 106,739 IOPS or 6.67GB/s at a latency of 31.9μs and went on to peak at 1,055,634 IOPS or 65.98GB/s at a latency of 57.2μs. Again, the NVMe drives performed well, with peak scores of 431,252 IOPS or 26.6GB/s at a latency of 721.5μs, but came nowhere near the persistent memory.
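For readers who want to relate the IOPS and bandwidth columns, the persistent memory figures above are reproduced if each 64K transfer is treated as 64 KiB and the resulting MiB/s value is divided by 1,000 to give GB/s. That unit convention is an assumption inferred from the numbers, not something the benchmark output states, and the function name is illustrative.

```python
BLOCK_KIB = 64  # 64K sequential transfer size


def iops_to_gbps(iops: int, block_kib: int = BLOCK_KIB) -> float:
    """Convert IOPS to GB/s, assuming KiB blocks and 1 GB/s = 1,000 MiB/s."""
    mib_per_s = iops * block_kib / 1024
    return mib_per_s / 1000


# Persistent memory 64K sequential read: starting point and peak.
for iops in (106_739, 1_055_634):
    print(f"{iops:,} IOPS -> {iops_to_gbps(iops):.2f} GB/s")
```

Under a strict binary (GiB/s) or strict decimal convention the results would differ by a few percent.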

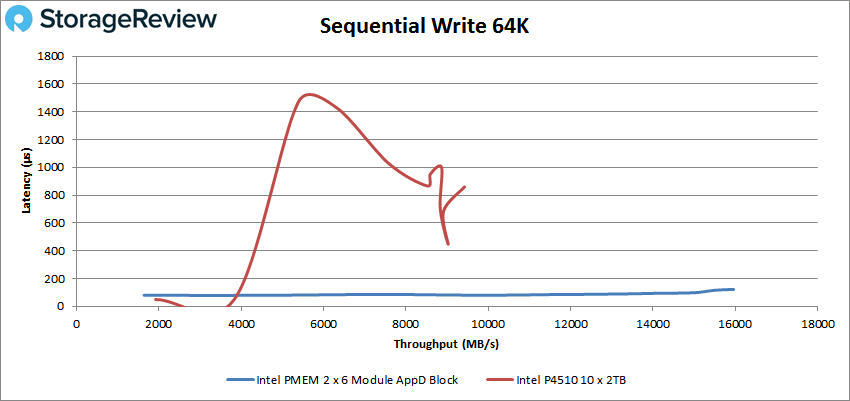

In 64K sequential writes the persistent memory started at 52,472 IOPS or 1.64GB/s at a latency of 78.8μs. The persistent memory modules went on to peak at 255,405 IOPS or 15.96GB/s at a latency of only 121.8μs. This is in contrast to the Intel P4510 group that spiked in latency as the drives were brought up to and over their saturation point.

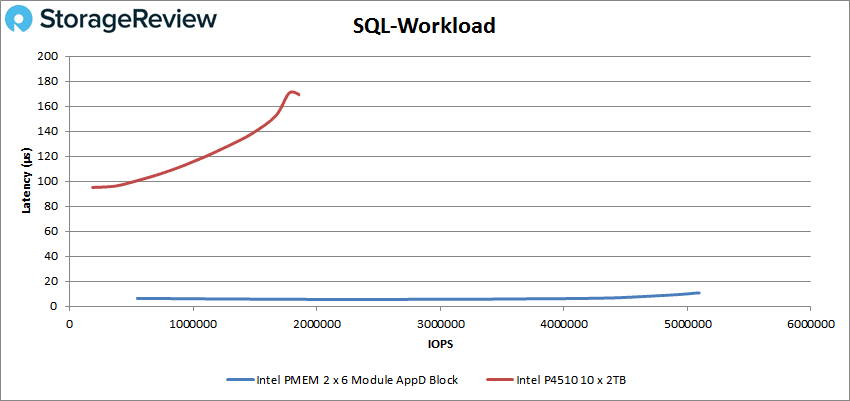

Next up are our SQL VDBench tests, including SQL, SQL 90-10, and SQL 80-20. For SQL the persistent memory started at 547,821 IOPS at 6.4μs latency and went on to peak at 5,095,690 IOPS at a latency of 10.7μs. The NVMe drives again had strong performance, with a peak of 188,170 IOPS at a latency of 170μs.

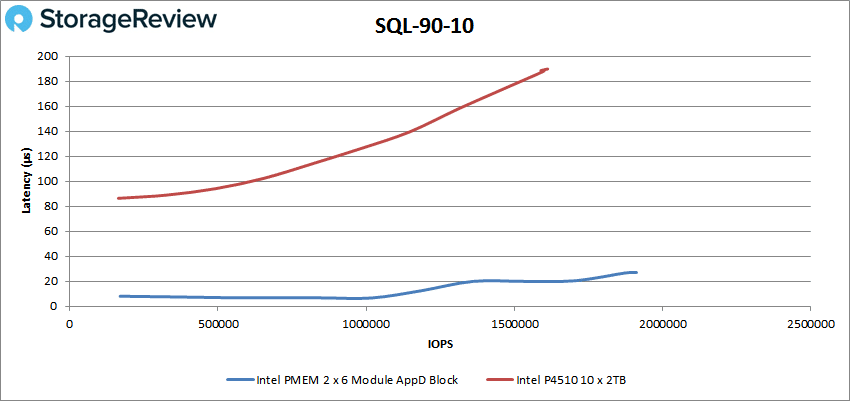

For SQL 90-10 the two were a bit closer in throughput, though there is no question on latency: the persistent memory is lower, hands down. The persistent memory started at 169,874 IOPS at a latency of 8.1μs and went on to peak at 1,911,900 IOPS with a latency of 27.1μs, compared to the NVMe drives’ peak of 1,612,337 IOPS with a latency of 189.8μs.

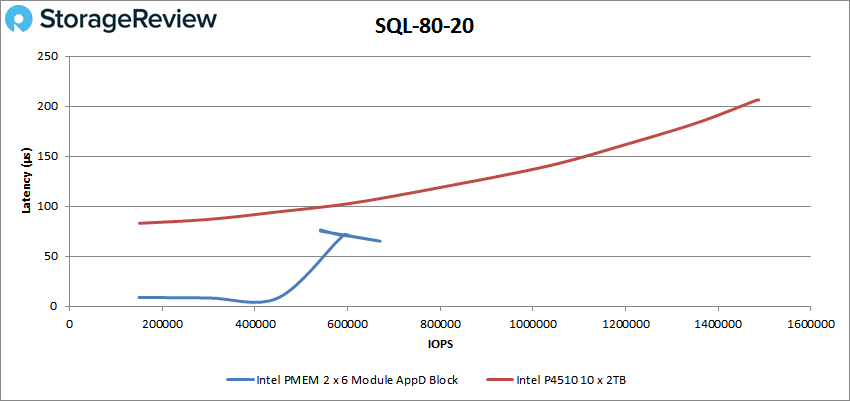

For SQL 80-20 the persistent memory had better peak latency, 65.3μs, but much lower throughput, 668,983 IOPS, versus the NVMe drives’ 1,482,554 IOPS at a latency of 206μs.

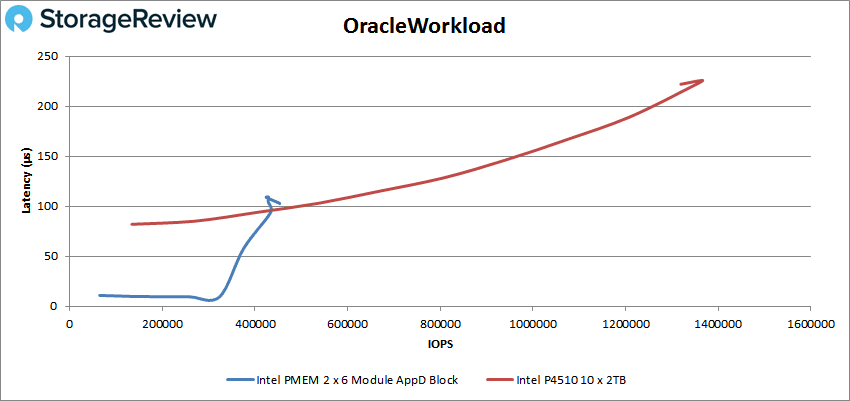

Our final batch of tests for this review is our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. In the Oracle test the persistent memory peaked early at 453,449 IOPS with a latency of 103μs. The NVMe drives were able to go on to peak at 1,366,615 IOPS with a latency of 225.8μs.

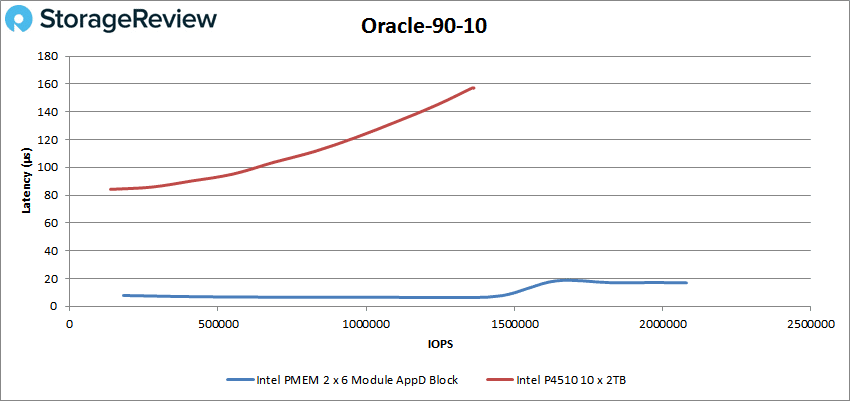

For Oracle 90-10, the persistent memory started at 181,455 IOPS with a latency of 7.8μs and went on to peak at 2,080,543 IOPS with a latency of only 16.9μs, once again crushing the NVMe drives, which peaked at 1,357,112 IOPS with a latency of 157.1μs.

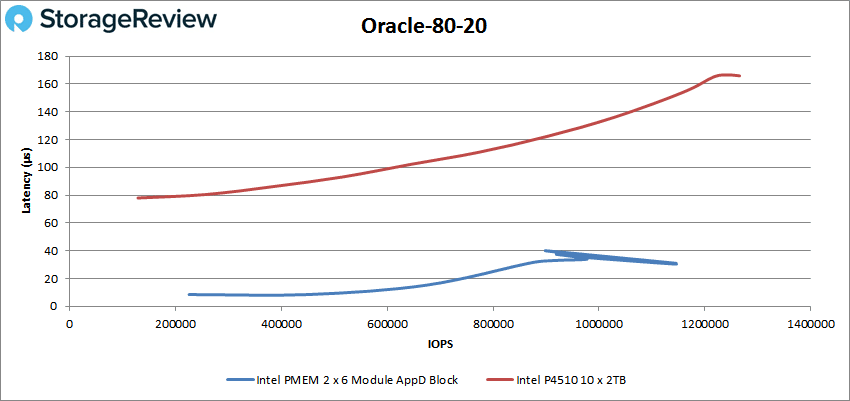

Finally, for our Oracle 80-20 the persistent memory started at 225,492 IOPS at a latency of 8.5μs and went on to peak at 1,146,229 IOPS at a latency of 30.4μs. The NVMe drives had a higher throughput, 1,265,479 IOPS, but also a much higher latency, 165.9μs.

Conclusion

The Supermicro SuperServer 1029U-TN10RT is a dual-socket system with ten 2.5″ NVMe drive bays that fits in a 1U footprint. Aside from the 2.5″ NVMe drive bays, the server can be configured with two M.2 storage slots as well, one SATA and one NVMe. The server is designed for virtualization, databases, and cloud computing, amongst other use cases that leverage a dense form factor with high compute power. Speaking of compute, the server supports the newly released second generation Intel Xeon Scalable CPUs. Alongside the CPUs are 24 DIMM slots. Aside from allowing this server to be packed with lots of DRAM, support for the new CPUs means support for Intel’s new Optane DC persistent memory modules.

Looking at performance, the Intel persistent memory modules were able to hit a level of performance not yet seen in our lab. Since Intel is more or less the only game in town at the moment with persistent memory, we don’t have competitors or older versions to compare to. Instead we compared it to the Intel P4510 2TB NVMe drives as an example of what to expect when leveraging the new technology. In reads the PMMs blew away the NVMe technology, with 4K random reads hitting 13.2 million IOPS at only 12.1μs latency and 64K sequential reads hitting 66GB/s at only 57.2μs latency. Random write showed a bit of a limitation of the technology, with the persistent memory quickly climbing to 980K IOPS at roughly 60μs latency before dropping off, much lower than the NVMe drives. 64K writes, however, saw the persistent memory dominate at 15.96GB/s with a latency of only 121.8μs. For SQL benchmarks the persistent memory crushed the NVMe drives in SQL (5,095,690 IOPS at a latency of 10.7μs) and SQL 90-10 (1,911,900 IOPS with a latency of 27.1μs). In our Oracle tests the persistent memory showed a much higher score in Oracle 90-10 (2,080,543 IOPS with a latency of only 16.9μs) but fell behind in the other two tests from a throughput perspective. Latency is also worth noting: across the SQL and Oracle workloads, the highest peak latency for the persistent memory was 103μs and the lowest was 10.7μs.

There’s clearly every reason to be exceedingly enthusiastic when looking at the initial results in this review. We see the uplift from the new Xeon Scalable CPUs as a whole, but of course the Optane DC persistent memory modules are the stars here. As noted, this first look review isn’t intended to be the stopping point for how we evaluate systems with persistent memory; it’s only the beginning. We currently have gears in motion to look more deeply at application performance in this system and will continue to push the boundaries and best practices for evaluating Intel Optane DC persistent memory in both App Direct and Memory Modes. For now though, big kudos to Supermicro and their engineering team for putting this kit together so quickly and comprehensively. This is going to be a fun series of reviews.
