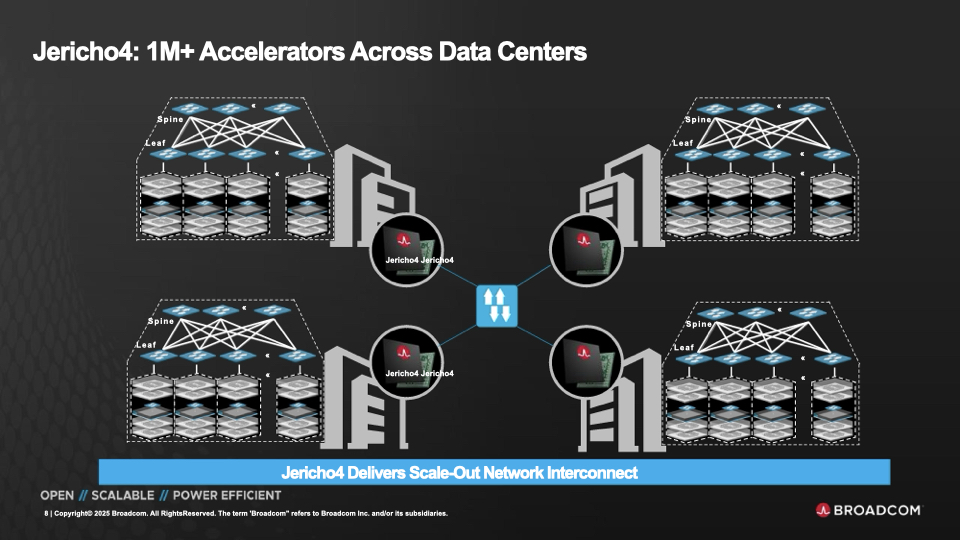

Broadcom has started shipping its Jericho4 switch family, which it positions as the most advanced Ethernet routing silicon available for scaling out AI across data centers. Designed for the growing demands of distributed AI infrastructure, Jericho4 delivers 51.2 Tbps of lossless, deep-buffered Ethernet switching. According to Broadcom, this lets AI workloads scale across racks, clusters, and even geographically separated sites without compromising reliability or performance.

Distributed AI

As AI models grow larger and more complex, they are quickly outgrowing the capacity and physical limits of any single data center. Distributing XPUs (accelerators such as GPUs and TPUs) across multiple facilities, each drawing megawatts of power, creates a new set of networking challenges: data must move losslessly, with low latency and high bandwidth, across metropolitan and even regional distances.

Ram Velaga, Senior Vice President and General Manager of Broadcom’s Core Switching Group, emphasized that the AI era requires networks capable of supporting distributed computing environments with more than a million XPUs. He explained that the Jericho4 family is designed to enable AI-scale Ethernet fabrics that extend beyond the boundaries of individual data centers, supporting long-distance RoCE (RDMA over Converged Ethernet) transport, advanced congestion control, and highly efficient interconnects.

Highlights and Differentiators

The Jericho4 family is built for scale-out across data centers, providing a range of features that meet the specific needs of AI workloads.

- 51.2 Tbps Scalable, Deep-Buffered Capacity: Jericho4 provides 51.2 Tbps of switching capacity, among the highest in the industry. That throughput matters for AI workloads that move large volumes of data between thousands of GPUs or other accelerators. Its deep packet buffers absorb large traffic bursts without dropping packets, sustaining performance and reliability in distributed AI training even when the network is congested.

- 3.2T HyperPort Interfaces: HyperPort bonds four 800 Gigabit Ethernet (800GE) ports into a single 3.2 Tbps (3.2T) logical port, simplifying network design and management. This reduces the number of links required between switches and servers while minimizing packet reordering and the load-balancing inefficiencies of spreading traffic across parallel links. Broadcom cites up to 40% faster job completion and up to 70% higher network utilization, which translates into faster AI model training and more efficient use of compute resources.

- Line-Rate MACsec with 200K+ Security Policies: MACsec (Media Access Control Security, IEEE 802.1AE) encrypts and authenticates traffic at the Ethernet layer. Jericho4 performs MACsec at full line rate, so encryption and decryption do not reduce throughput. Support for more than 200,000 security policies enables fine-grained controls, which are essential in multi-tenant environments such as “Neo Clouds,” and protects sensitive AI data as it moves across large, shared infrastructure.

- End-to-End Congestion Management and RoCE Lossless Transport: Network congestion and packet loss slow down distributed AI training. Jericho4 combines its deep-buffered architecture with hardware-based congestion management to provide lossless RoCE transport, keeping data delivery reliable over distances greater than 100 kilometers. These capabilities are essential for connecting distributed data centers or AI clusters with consistent performance.

- 40% Power Reduction per Bit: Energy efficiency becomes critical as data centers grow to support larger AI models and more users. Jericho4 consumes 40% less power per bit transmitted than earlier generations. This lowers operating costs and helps organizations meet sustainability goals, letting them scale AI infrastructure without a proportional increase in energy use.

- 200G PAM4 SerDes with Industry-Leading Reach: SerDes (serializer/deserializer) circuits convert parallel on-chip data into high-speed serial streams for transmission over copper or fiber links. Jericho4 uses 200G PAM4 (four-level pulse amplitude modulation) SerDes, which deliver faster data rates over longer distances than prior technologies. This lets switches and servers connect across larger data center campuses or between buildings without sacrificing speed or reliability.

- Ultra Ethernet Consortium Compliance: The Ultra Ethernet Consortium (UEC) develops Ethernet specifications for AI and high-performance computing. Jericho4’s compliance ensures interoperability with other UEC-compliant devices, protecting investments and future-proofing the network for upcoming AI and cloud workloads.

- Endpoint Agnostic: Jericho4 works with any Ethernet-based network interface card (NIC) or XPU, such as a GPU or DPU. This flexibility lets organizations integrate compute and storage endpoints from different vendors, supporting diverse AI architectures without being locked into a single ecosystem.

- AI Networking: Jericho4 is built to handle the persistent, high-bandwidth flows that dominate AI traffic. HyperPort removes traditional bottlenecks and inefficiencies, delivering higher throughput and lower latency for scalable AI networks. This matters more and more as organizations deploy AI workloads across campuses, metro areas, and broader geographies.
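To see why one 3.2T pipe can beat four separate 800GE links, consider a toy model. It assumes (an illustrative assumption, not Broadcom’s documented mechanism) that with separate links an ECMP-style hash pins each flow to one link, that a synchronous collective finishes only when the busiest link drains, and that a HyperPort behaves as one ideal shared 3.2 Tbps pipe. The function names and flow sizes are hypothetical, and the result illustrates the effect rather than reproducing Broadcom’s 40% and 70% figures.

```python
"""Toy model: one 3.2 Tbps HyperPort vs. four hash-balanced 800GE links.

Assumptions (illustrative, not Broadcom's documented behavior):
  * with separate links, an ECMP-style hash pins each flow to one link;
  * a synchronous AI collective finishes only when the busiest link drains;
  * the HyperPort behaves as one ideal 3.2 Tbps pipe shared by all flows.
"""


def completion_time_s(flow_gbit, link_of_flow, n_links, link_gbps):
    """Seconds until every flow finishes, given a fixed flow-to-link mapping."""
    loads = [0.0] * n_links
    for size, link in zip(flow_gbit, link_of_flow):
        loads[link] += size  # a pinned flow cannot spill onto idle links
    return max(loads) / link_gbps  # the busiest link sets the finish time


def utilization(flow_gbit, time_s, n_links, link_gbps):
    """Fraction of the total link capacity actually used during time_s."""
    return sum(flow_gbit) / (n_links * link_gbps * time_s)


flows = [100.0, 100.0, 100.0, 100.0]  # four equal 100 Gbit transfers (hypothetical)
hashed = [0, 0, 1, 2]                 # an unlucky hash: two flows share link 0

t_4x800 = completion_time_s(flows, hashed, n_links=4, link_gbps=800)
t_hyper = completion_time_s(flows, [0, 0, 0, 0], n_links=1, link_gbps=3200)

print(f"4x800GE:   {t_4x800:.3f} s, utilization {utilization(flows, t_4x800, 4, 800):.0%}")
# 4x800GE:   0.250 s, utilization 50%
print(f"HyperPort: {t_hyper:.3f} s, utilization {utilization(flows, t_hyper, 1, 3200):.0%}")
# HyperPort: 0.125 s, utilization 100%
```

With two flows colliding on one link, the four-link case takes twice as long and uses only half the available capacity. If the hash happened to spread the flows evenly (`[0, 1, 2, 3]`), both cases would finish in 0.125 s; real collision rates depend on the hash function and the number of flows, so actual gains vary.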

According to Broadcom, field deployments have demonstrated Jericho4’s reliability and effectiveness in scalable AI designs spanning more than 100 km, positioning it as a key building block for next-generation distributed AI infrastructure.
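Distances beyond 100 km are where deep buffers earn their keep. The sketch below is a rough estimate under stated assumptions: light travels through fiber at about 200,000 km/s (roughly 5 µs per km), and lossless delivery relies on a hop-by-hop, PFC-style pause, which is an assumption since the exact mechanism is not specified here. Under that model, after a receiver tells the sender to stop, it must still absorb about one round trip’s worth of data. The function names are hypothetical, and MTU, serialization, and reaction-time terms are ignored, so real requirements would be somewhat larger.

```python
"""Rough lossless-RoCE buffer headroom for long-haul links (illustrative)."""

FIBER_SPEED_M_PER_S = 2.0e8  # assumed signal speed in fiber, about 5 us per km


def rtt_seconds(distance_km: float) -> float:
    """Round-trip propagation delay over distance_km of fiber."""
    return 2 * distance_km * 1_000 / FIBER_SPEED_M_PER_S


def headroom_bytes(link_gbps: float, distance_km: float) -> float:
    """Data still arriving after a pause is sent: link rate times one RTT.

    Simplified PFC-style model; ignores MTU, serialization, and reaction time.
    """
    return link_gbps * 1e9 * rtt_seconds(distance_km) / 8


for gbps, label in [(800, "800GE port"), (3200, "3.2T HyperPort")]:
    mb = headroom_bytes(gbps, 100) / 1e6
    print(f"{label} over 100 km: RTT {rtt_seconds(100) * 1e3:.1f} ms, ~{mb:.0f} MB in flight")
# 800GE port over 100 km: RTT 1.0 ms, ~100 MB in flight
# 3.2T HyperPort over 100 km: RTT 1.0 ms, ~400 MB in flight
```

Headroom grows linearly with both link rate and distance, so under these assumptions a single 3.2T HyperPort spanning 100 km must absorb hundreds of megabytes, which is why a deep-buffered design matters for this use case.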

A single Jericho4 system can scale to 36,000 HyperPorts, each operating at 3.2 Tbps, with deep buffering, line-rate MACsec, and RoCE transport over distances greater than 100 km. Deployment options include chassis-based systems with Jericho line cards, distributed scheduled fabrics (DSF) with Jericho leaves and Ramon spines, and fixed centralized systems, all built on Broadcom’s high-radix, low-latency, power-efficient architecture.
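The headline figures can be cross-checked with simple arithmetic. The sketch divides the 51.2 Tbps device capacity by the 200G SerDes lane rate, the 800GE port rate, and the 3.2T HyperPort rate, then multiplies out the 36,000-HyperPort system figure. The per-chip counts are upper bounds that assume all capacity is exposed as that interface type (in practice some bandwidth may face the fabric), rates are treated as nominal, and the 40% power figure is expressed only in relative terms, with no absolute wattage assumed. Names are hypothetical.

```python
"""Cross-checking the article's capacity and power figures (nominal rates)."""

CHIP_GBPS = 51_200          # 51.2 Tbps per Jericho4 device
SERDES_LANE_GBPS = 200      # one 200G PAM4 SerDes lane
PORT_800GE_GBPS = 800       # one 800GE port
HYPERPORT_GBPS = 3_200      # HyperPort = four bonded 800GE ports
SYSTEM_HYPERPORTS = 36_000  # maximum HyperPorts in one system, per the article
POWER_REDUCTION = 0.40      # 40% lower power per bit than earlier generations


def max_units_per_chip(unit_gbps: int) -> int:
    """Upper bound on unit_gbps interfaces if the whole chip used that type."""
    count, remainder = divmod(CHIP_GBPS, unit_gbps)
    assert remainder == 0, "rate does not divide the chip capacity evenly"
    return count


def system_capacity_pbps(hyperports: int = SYSTEM_HYPERPORTS) -> float:
    """Aggregate HyperPort bandwidth of one system, in petabits per second."""
    return hyperports * HYPERPORT_GBPS / 1_000_000


def relative_power_same_traffic(reduction: float = POWER_REDUCTION) -> float:
    """Power needed for the same traffic, relative to the older generation."""
    return 1.0 - reduction


def relative_traffic_same_power(reduction: float = POWER_REDUCTION) -> float:
    """Traffic carried within the same power budget, relative to before."""
    return 1.0 / (1.0 - reduction)


print("SerDes lanes per chip:", max_units_per_chip(SERDES_LANE_GBPS))  # 256
print("800GE ports per chip: ", max_units_per_chip(PORT_800GE_GBPS))   # 64
print("HyperPorts per chip:  ", max_units_per_chip(HYPERPORT_GBPS))    # 16
print(f"System capacity: {system_capacity_pbps():.1f} Pbps")           # 115.2
print(f"Same traffic, power x{relative_power_same_traffic():.2f}")     # 0.60
print(f"Same power, traffic x{relative_traffic_same_power():.2f}")     # 1.67
```

Note the asymmetry in the last two lines: a 40% cut in energy per bit lets the same power budget carry about two-thirds more traffic, not 40% more.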

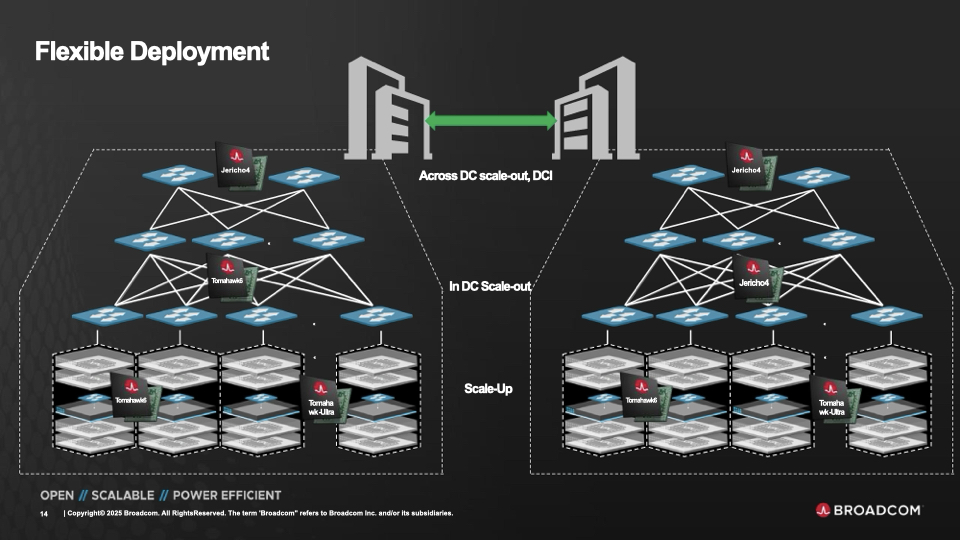

Part of Broadcom’s End-to-End Ethernet AI Platform

Jericho4 is a crucial part of Broadcom’s complete Ethernet AI platform, which also includes:

- Tomahawk 6: 102.4 Tbps switch for AI scale-out and scale-up.

- Tomahawk Ultra: 51.2 Tbps low-latency switch for HPC and AI scale-up.

- Thor Family: AI-optimized Ethernet NICs.

- Physical Layer Products: Including retimers, DSPs, and third-generation Co-Packaged Optics (CPO).

Together, these products form an open, scalable platform for building Ethernet-based AI infrastructure at any scale, from tightly interconnected GPU clusters to regional deployments.

Competition

Broadcom’s Jericho4 enters a competitive market where hyperscale data center operators and AI infrastructure providers aim to overcome the limitations of legacy InfiniBand and traditional Ethernet solutions. NVIDIA, with its Quantum InfiniBand switches and Spectrum-X Ethernet switches, remains a dominant player in AI networking, especially for tightly coupled GPU clusters. However, Ethernet’s openness, affordability, and ecosystem support are fueling a shift toward Ethernet-based AI fabrics, particularly for scale-out and multi-site deployments.

Other vendors, including Cisco with its Nexus series and Arista Networks, are also investing heavily in high-performance, AI-optimized Ethernet switching. Still, Broadcom’s deep-buffered, lossless architecture, combined with its leadership in silicon innovation and ecosystem integration, gives Jericho4 a compelling value proposition, especially for organizations building open, scalable, and future-proof AI infrastructure.

Visit the Jericho4 product page for more information.
