HPE has introduced an expanded generation of HPE Cray supercomputing solutions engineered for the performance density, efficiency, and operational needs of AI at scale. The portfolio centers on a multi-vendor, multi-workload blade architecture, unified system management software, and a high-performance interconnect fabric. Together these form a cohesive platform aimed at research institutions, government agencies, and large enterprises, intended to accelerate discovery, simulation, and production AI.

Blending AI and HPC Architectures

HPE’s latest Cray platform combines HPC and AI in a unified architecture designed to deliver deterministic performance at scale, with the operational controls enterprise IT expects. Announcing the platform, Trish Damkroger, Senior Vice President and General Manager of HPC and AI Infrastructure Solutions at HPE, emphasized that blending AI and HPC architectures is essential to increasing performance density and unlocking new levels of scientific and technological progress. In short, HPE’s position is that AI and supercomputing are converging, and it intends to lead with integrated systems that advance both research and real-world outcomes.

This expansion builds on last month’s introduction of the HPE Cray Supercomputing GX5000, which is purpose-built for the integrated AI+HPC era, and is now complemented by the HPE Cray Supercomputing Storage Systems K3000. The K3000 is notable as the first factory-built storage system to ship with embedded DAOS (Distributed Asynchronous Object Storage) open-source software, designed to sustain performance for I/O-intensive AI and simulation workloads.

Rapid Adoption Across Leading Research Centers

Early traction for the platform includes selections by the High-Performance Computing Center of the University of Stuttgart (HLRS) and the Leibniz Supercomputing Center (LRZ) of the Bavarian Academy of Sciences and Humanities. Their next-generation systems, HLRS’s Herder and LRZ’s Blue Lion, will leverage the HPE Cray Supercomputing GX5000. Taken together, these deployments underscore momentum among tier-one European HPC sites looking to expand AI-driven discovery with production-grade HPC rigor.

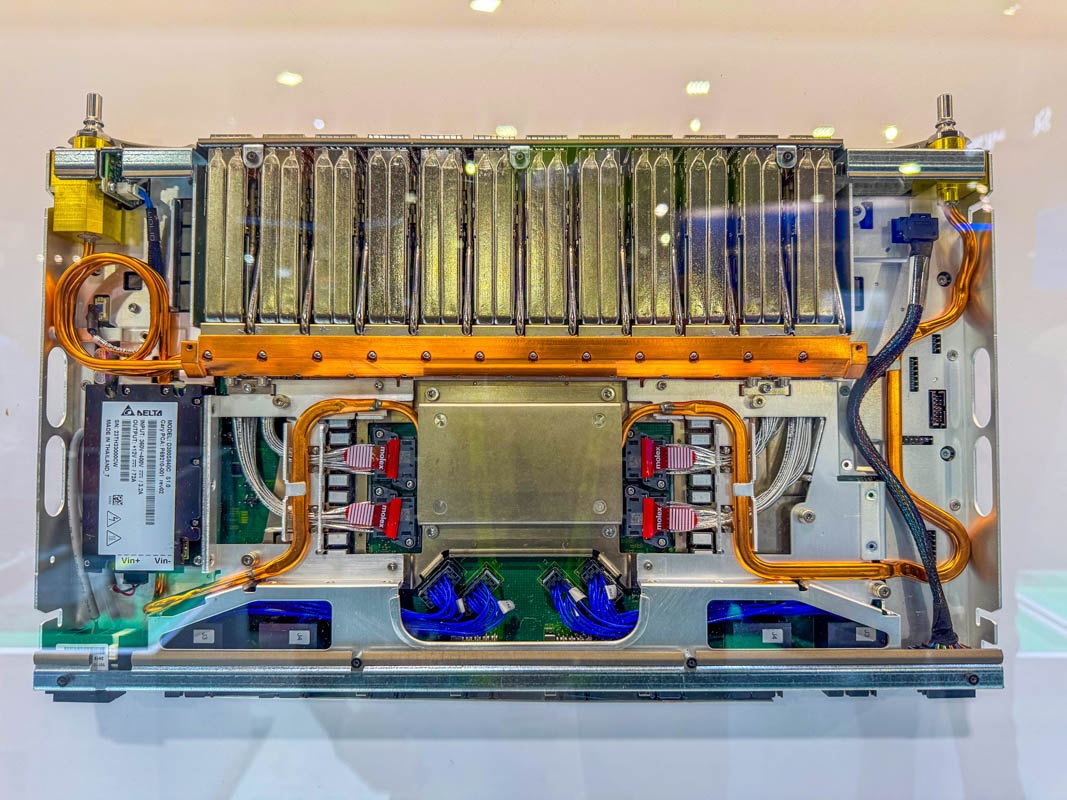

New Direct Liquid-Cooled, Multi-Vendor Compute Blades

HPE is introducing three new compute blades that can be mixed within an HPE Cray Supercomputing GX5000 rack to match workload profiles, budgets, and facility constraints. All blades use direct liquid cooling, include two onboard NVMe SSDs for node-local storage, and integrate four or eight HPE Slingshot 400 Gbps endpoints for high-throughput, low-latency fabric connectivity. Supported processors include NVIDIA Vera CPUs and Rubin GPUs, AMD Instinct MI430X GPUs, and AMD EPYC “Venice” CPUs, so compute can be matched closely to workload characteristics.

- HPE Cray Supercomputing GX440n Accelerated Blade: Designed as a universal AI-HPC engine, this blade combines four NVIDIA Vera CPUs with eight NVIDIA Rubin GPUs. With up to 24 blades per rack, configurations can scale to 192 GPUs per rack, targeting large-scale AI training, multi-node inference, and accelerated simulation workflows.

- HPE Cray Supercomputing GX350a Accelerated Blade: Optimized for mixed-precision computing, the GX350a pairs a single AMD EPYC SP7 600 W “Venice” CPU (up to 256 cores) with four AMD Instinct MI430X GPUs from the MI400 series. The combination targets AI training and HPC acceleration with strong FP16/BF16 performance, while retaining double-precision capability for simulation stages. Up to 28 blades per rack yield 112 AMD MI430X GPUs.

- HPE Cray Supercomputing GX250 Compute Blade: Built for CPU-only, double-precision-heavy workloads (e.g., CFD, weather, and traditional simulation), the GX250 integrates eight AMD EPYC “Venice” CPUs per blade, each with up to 256 cores. With up to 40 blades per rack, it appeals to facilities consolidating large MPI jobs with predictable scaling behavior.
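As a quick check on the rack-level figures above, the sketch below multiplies the quoted per-blade counts by the maximum blades per rack. It is back-of-the-envelope arithmetic only: the `BladeSpec` class and its field names are illustrative, the results are upper bounds (both blade counts and core counts are "up to" figures), and the GX440n core count is left unset because the article does not state how many cores each NVIDIA Vera CPU has.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BladeSpec:
    """Illustrative per-blade figures taken from the blade descriptions above."""
    name: str
    blades_per_rack: int              # maximum blades per rack
    gpus_per_blade: int
    cpus_per_blade: int
    max_cores_per_cpu: Optional[int]  # None where the article gives no core count

    def gpus_per_rack(self) -> int:
        return self.blades_per_rack * self.gpus_per_blade

    def cpus_per_rack(self) -> int:
        return self.blades_per_rack * self.cpus_per_blade

    def max_cores_per_rack(self) -> Optional[int]:
        # Upper bound: assumes every CPU is the top (256-core) part.
        if self.max_cores_per_cpu is None:
            return None
        return self.cpus_per_rack() * self.max_cores_per_cpu


BLADES = [
    BladeSpec("GX440n", blades_per_rack=24, gpus_per_blade=8, cpus_per_blade=4, max_cores_per_cpu=None),
    BladeSpec("GX350a", blades_per_rack=28, gpus_per_blade=4, cpus_per_blade=1, max_cores_per_cpu=256),
    BladeSpec("GX250", blades_per_rack=40, gpus_per_blade=0, cpus_per_blade=8, max_cores_per_cpu=256),
]

if __name__ == "__main__":
    for blade in BLADES:
        print(
            f"{blade.name}: {blade.gpus_per_rack()} GPUs, "
            f"{blade.cpus_per_rack()} CPUs, max CPU cores {blade.max_cores_per_rack()}"
        )
```

The GPU totals match the 192 and 112 per-rack figures quoted above; the GX250 row shows that a fully populated CPU rack tops out at 320 sockets and 81,920 cores.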

Flexible rack-level mixing of these blades enables tailored system design, ranging from GPU-dense AI training islands to CPU-led simulation pools, without stranding power, cooling, or interconnect resources.

Unified, Multi-Tenant Systems Management

The HPE Supercomputing Management Software provides enterprise-grade multi-tenancy and security across bare-metal, virtualized, and containerized environments. The goal is to maximize utilization while giving operators strong isolation controls for users, projects, and workloads. Power and energy management are first-class features. The software provides real-time and historical telemetry, cost estimation, and hooks for power-aware scheduling, enabling organizations to optimize energy efficiency and forecast expenses.
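Cost estimation from power telemetry generally comes down to integrating sampled power over time and applying an electricity price. The sketch below shows that arithmetic using the trapezoidal rule. It is hypothetical and does not use the HPE Supercomputing Management Software's own interfaces, which the announcement does not describe; the `(timestamp_seconds, watts)` sample format, the function names, and the flat per-kWh price are all assumptions.

```python
from typing import Sequence, Tuple

# Hypothetical telemetry sample: (timestamp in seconds, power draw in watts).
Sample = Tuple[float, float]

JOULES_PER_KWH = 3.6e6


def energy_kwh(samples: Sequence[Sample]) -> float:
    """Integrate power samples over time with the trapezoidal rule; returns kWh."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("samples must be sorted by timestamp")
        # Average power over the interval times its duration gives energy in joules.
        joules += 0.5 * (p0 + p1) * (t1 - t0)
    return joules / JOULES_PER_KWH


def estimate_cost(samples: Sequence[Sample], price_per_kwh: float) -> float:
    """Apply a flat (assumed) electricity price to the integrated energy."""
    return energy_kwh(samples) * price_per_kwh


if __name__ == "__main__":
    # A rack drawing a steady 100 kW for one hour consumes 100 kWh.
    hour_at_100kw = [(0.0, 100_000.0), (1800.0, 100_000.0), (3600.0, 100_000.0)]
    print(energy_kwh(hour_at_100kw))  # 100.0
    print(estimate_cost(hour_at_100kw, 0.20))
```

A flat tariff is the simplest case; with time-of-use pricing, each interval would be multiplied by its own price before summing, which is the kind of signal a power-aware scheduler could act on.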

The operational scope spans the full system lifecycle, including provisioning, monitoring, power/cooling oversight, and elastic scaling, with enhanced security controls and improved reporting. For technical operations teams, the net effect is a single control plane designed for mixed AI and HPC estates at high utilization.

HPE Slingshot 400 for GX5000-Based Systems

Now available for HPE Cray Supercomputing GX5000 clusters, HPE Slingshot 400 advances the interconnect fabric with a direct liquid-cooled switch blade architecture designed for high-density deployments and sustained throughput under mixed AI and HPC loads. Each switch blade offers 64 ports at 400 Gbps, and chassis-level configurations support:

- 8 switches with 512 ports

- 16 switches with 1,024 ports

- 32 switches with 2,048 ports
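The port counts above follow directly from 64 ports per switch blade. The sketch below reproduces them and adds the aggregate line rate they imply. That figure is a simple multiplication assuming every port runs at 400 Gbps in each direction; it is not a bisection-bandwidth or delivered-throughput number, both of which depend on topology, on how many ports are used for switch-to-switch links, and on traffic. The function name is illustrative.

```python
from typing import Tuple

PORTS_PER_SWITCH_BLADE = 64  # as stated for HPE Slingshot 400 switch blades
PORT_SPEED_GBPS = 400        # assumed line rate per port, per direction


def fabric_totals(switch_blades: int) -> Tuple[int, float]:
    """Return (total ports, aggregate line rate in Tb/s per direction)."""
    ports = switch_blades * PORTS_PER_SWITCH_BLADE
    aggregate_tbps = ports * PORT_SPEED_GBPS / 1000
    return ports, aggregate_tbps


if __name__ == "__main__":
    for n in (8, 16, 32):
        ports, tbps = fabric_totals(n)
        print(f"{n:>2} switch blades: {ports:>5} ports, {tbps:.1f} Tb/s aggregate per direction")
```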

First announced last year, Slingshot 400 is designed to leverage the GX5000’s higher-performance topology, reducing latency, enhancing sustained bandwidth, and improving reliability, while maintaining cost control. For multi-tenant environments and mixed workflows, the combination targets predictable performance at scale.

DAOS-Backed Storage to Accelerate I/O-Bound AI

The HPE Cray Supercomputing Storage Systems K3000 leverages HPE ProLiant Compute DL360 Gen12 servers with embedded DAOS to prioritize low-latency object access, high parallelism, and linear scaling—attributes crucial for I/O-bound AI pipelines and simulation checkpoints. The platform balances compute per storage node with memory and NVMe density to keep GPUs and CPUs fed under concurrent access patterns.

Configurable options allow tuning for performance or capacity:

- Performance-optimized DAOS storage servers with 8, 12, or 16 NVMe drives

- Capacity-optimized DAOS storage servers with 20 NVMe drives

- NVMe drive sizes: 3.84 TB, 7.68 TB, or 15.36 TB

- DRAM options: 512 GB, 1,024 GB, or 2,048 GB, aligned to drive counts and workload profiles
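The drive options above imply a range of raw capacities per DAOS storage server. The sketch below enumerates them, assuming (the article does not say) that every drive size is offered with every drive count. The figures are raw, before DAOS data protection such as replication or erasure coding and before formatting overhead, so usable capacity will be lower; the constant and function names are illustrative.

```python
from itertools import product
from typing import List, Tuple

PERFORMANCE_DRIVE_COUNTS = (8, 12, 16)
CAPACITY_DRIVE_COUNTS = (20,)
DRIVE_SIZES_TB = (3.84, 7.68, 15.36)


def raw_capacity_tb(drives: int, drive_tb: float) -> float:
    """Raw capacity of one server; rounded to hide floating-point noise."""
    return round(drives * drive_tb, 2)


def capacity_table() -> List[Tuple[str, int, float, float]]:
    """Rows of (profile, drive count, drive size TB, raw capacity TB)."""
    rows = []
    profiles = (("performance", PERFORMANCE_DRIVE_COUNTS), ("capacity", CAPACITY_DRIVE_COUNTS))
    for profile, counts in profiles:
        for drives, size in product(counts, DRIVE_SIZES_TB):
            rows.append((profile, drives, size, raw_capacity_tb(drives, size)))
    return rows


if __name__ == "__main__":
    for profile, drives, size, raw in capacity_table():
        print(f"{profile:<11} {drives:>2} x {size:>5} TB = {raw:>6} TB raw")
```

Under these assumptions, raw capacity per server ranges from 30.72 TB (eight 3.84 TB drives) to 307.2 TB (twenty 15.36 TB drives).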

Interconnect choices reflect heterogeneous data center networks and workflow needs:

- HPE Slingshot 200

- HPE Slingshot 400

- InfiniBand NDR

- 400 Gigabit Ethernet

By bringing DAOS into a factory-integrated, vendor-supported system, HPE aims to shorten deployment timelines, reduce integration complexity, and elevate sustained performance for data-intensive AI and HPC.

Availability

- HPE Cray Supercomputing Storage Systems K3000 with HPE ProLiant Compute servers: early 2026

- HPE Cray Supercomputing GX440n Accelerated Blade, GX250 Compute Blade, and GX350a Accelerated Blade: early 2027

- HPE Supercomputing Management Software: early 2027

- HPE Slingshot 400 for GX5000-based systems: early 2027

HPE’s next-gen Cray portfolio is positioned to collapse traditional AI/HPC silos, delivering higher performance density per rack and streamlining operations for institutions scaling both simulation and AI training/inference on a common, high-efficiency platform.
