At the GTC DC25 event, HPE announced an expanded portfolio of NVIDIA AI Computing by HPE. This expansion aims to simplify the deployment and scaling of AI across governments, regulated industries, and businesses. The new offerings help organizations build secure and private AI infrastructure more quickly and effectively. They provide turnkey AI factory solutions, unified data strategies, and updated server platforms with the latest NVIDIA AI infrastructure and software.

AI adoption is growing across many sectors. However, almost 60 percent of organizations have fragmented AI goals and strategies, and many also lack effective data management for AI, according to the 2025 Architecting an AI Advantage report. HPE and NVIDIA are collaborating to address these issues with a wide range of HPE solutions for AI factories. These solutions support everyone from developers to sovereign AI cloud builders, helping organizations create a complete AI infrastructure strategy.

To broaden AI adoption in businesses, technology must directly address the main challenges organizations encounter, such as complex deployments and the management of sensitive data, according to Fidelma Russo, EVP and CTO at HPE. She highlighted HPE’s partnership with NVIDIA, which offers full-stack private AI factories that simplify operations, enable rapid scaling, and ensure compliance.

Justin Boitano, Vice President of Enterprise Software at NVIDIA, views AI factories as essential infrastructure for the era of intelligence. These factories are meant to produce tokens of intelligence at scale. He mentioned that NVIDIA and HPE are developing full-stack systems that include NVIDIA Blackwell, networking software, and AI Data Platform reference designs. These advancements are designed to enable agentic AI, improve automation, and drive digital transformation across various industries.

The second generation of HPE Private Cloud AI, co-developed with NVIDIA, is now available in a smaller form factor. This new version accelerates AI time-to-value for all enterprises. New features for the turnkey AI factory include:

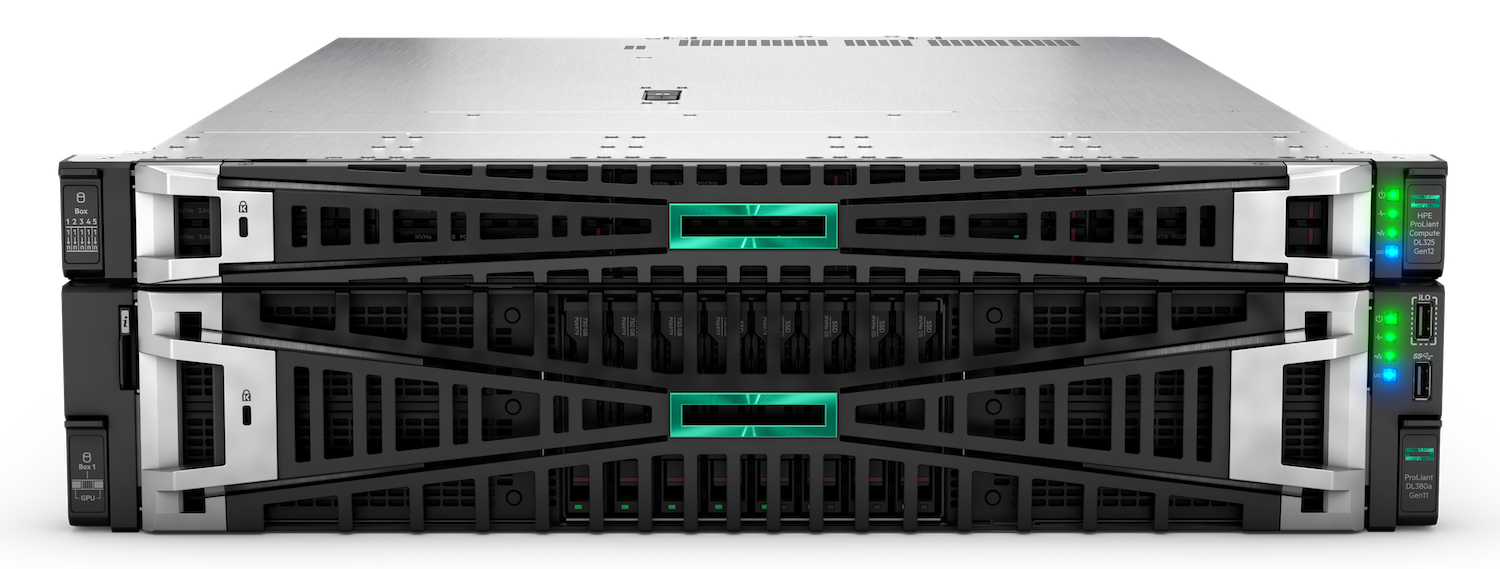

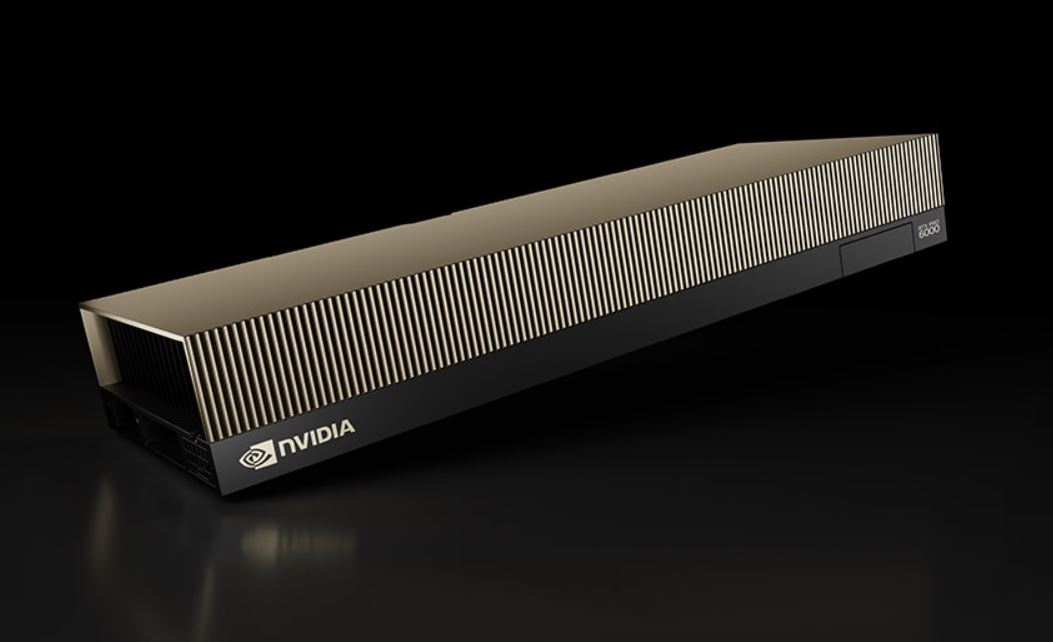

- Industry-leading AI Performance: HPE Private Cloud AI now includes the new ProLiant Compute DL380a Gen12 servers and NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs. These deliver three times better price-to-performance for enterprise AI workloads. The HPE ProLiant Compute DL380a Gen12 servers have achieved top rankings in MLPerf Inference v5.1 benchmarks, including record performance with RTX PRO 6000.

- AI Factory Deployments for Governments: HPE Private Cloud AI and HPE’s AI factory for sovereigns will support the new NVIDIA AI Factory for Government. This full-stack, end-to-end reference design meets the compliance requirements of high-assurance organizations. It also provides guidance for deploying and managing multiple AI workloads on-premises and in hybrid cloud environments.

- Secure AI for Sovereign Organizations: New air-gapped management allows for network-isolated cloud environments. These secure, compliant deployments are often required by governments, sovereign entities, and regulated industries.

- Reimagined Customer Experiences: HPE Services offers a new service that uses HPE Private Cloud AI and NVIDIA NeMo. This service will help create digital avatar assistants to improve customer interaction and business support for smart cities and industries, including retail, manufacturing, healthcare, banking, finance, and education.

- Accelerated AI Outcomes: A new system adoption accelerator service for HPE Private Cloud AI developer edition provides comprehensive post-installation functional testing. This includes sample pre-built pipelines and knowledge transfer sessions for data science teams.

With the Town of Vail as the pilot customer, HPE has launched the HPE Agentic Smart City Solution. This is a unified AI-driven solution that helps cities transition from isolated pilot projects to citywide smart infrastructure using secure, integrated, and scalable agentic AI systems. SHI, NVIDIA, and HPE’s validated partners are working with the Town of Vail to enhance accessibility compliance, permitting, and wildfire detection through HPE Private Cloud AI.

HPE is revealing new features for its unified data layer that support data governance with agentic AI and unstructured data storage in air-gapped environments. The unified data layer accelerates the AI data lifecycle by integrating the global namespace with HPE Data Fabric Software and improving data intelligence with the HPE Alletra Storage MP X10000. When deployed through the NVIDIA AI Data Platform, which includes NVIDIA’s accelerated computing, networking, and AI software, businesses can efficiently supply applications, models, and agents with AI-ready data.

- Secure-by-design Data Storage for AI: The X10000 can now be managed in air-gapped settings, providing a cloud-like management experience in spaces that require limited access to external networks.

- GPU-Accelerated Access: The X10000 supports the new NVIDIA S3oRDMA solution, offering up to twice the performance through remote direct memory access (RDMA) transfers between the GPU and system memory, as well as between the GPU and the X10000.

- Agentic Governance: To simplify how organizations manage and federate data securely, HPE Data Fabric now includes agentic AI-powered governance. Using Model Context Protocol (MCP), HPE Data Fabric allows secure model-to-data interactions, consistent compliance enforcement, and faster insights. The global namespace unifies structured, semi-structured, and unstructured data, creating a single “data without borders” layer for AI pipelines.

To meet the demands of deploying AI at scale, HPE is introducing new offerings in the NVIDIA AI Computing by HPE portfolio. These offerings provide deployment options for developers, enterprises, and government entities.

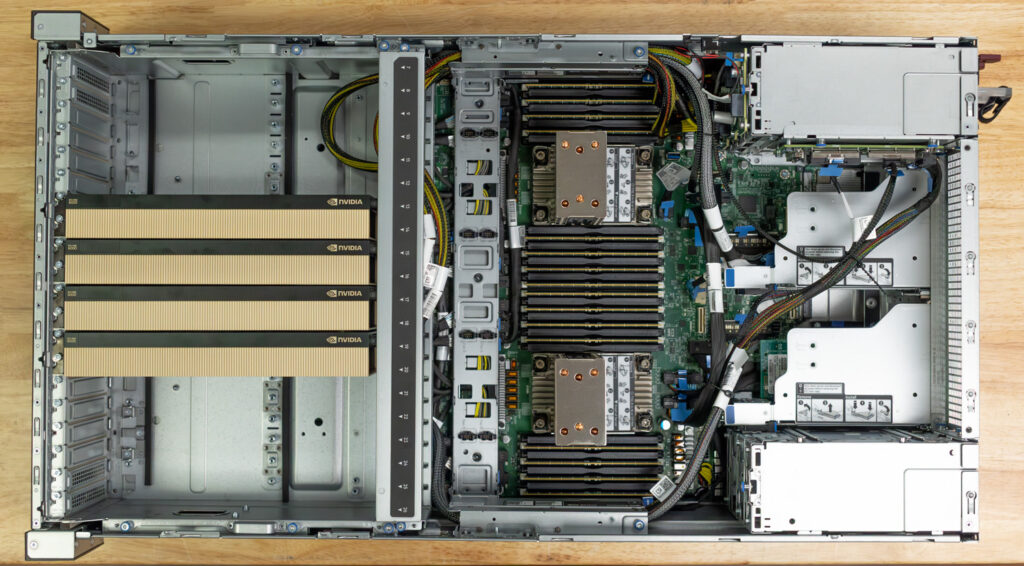

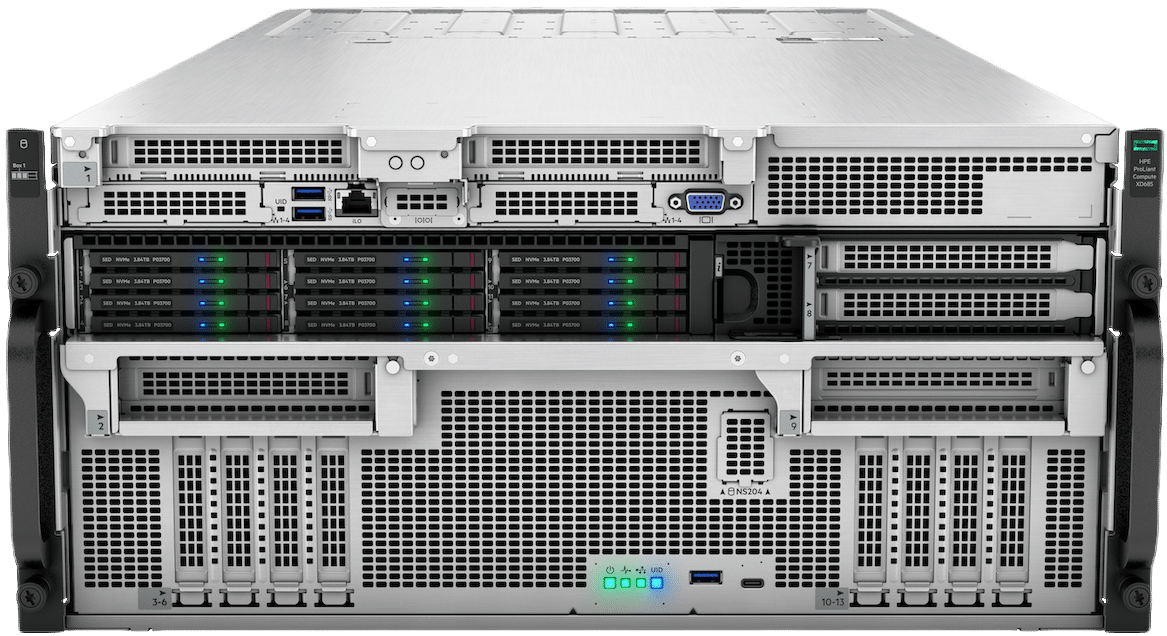

- HPE ProLiant Compute XD685 now supports eight NVIDIA Blackwell Ultra (B300) GPUs in a 5U, direct-liquid-cooled HGX chassis. HPE Integrated Lights Out (iLO) provides built-in security, while HPE Performance Cluster Manager supports integrated cluster management. The XD685 is designed for AI service providers, neoclouds, and ambitious enterprises that need to set up large, secure, and validated AI clusters.

- NVIDIA GB300 NVL72 by HPE offers groundbreaking performance for AI models exceeding 1 trillion parameters. It is available for order and is expected to ship in December 2025.

- HPE ProLiant Compute DL380 Gen12 Server Premier Solution for Azure Local will deliver a secure hybrid cloud infrastructure. This allows enterprises to run Azure services anywhere while accelerating AI innovation. The new solution integrates specific Azure services and AI features directly into the data center, while also supporting NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs for AI, graphics, and VDI workloads.
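For a sense of scale behind the 1-trillion-parameter figure cited for the GB300 NVL72, the short sketch below estimates how much memory the model weights alone would occupy at a few common numeric precisions. It is a back-of-the-envelope illustration, not an HPE or NVIDIA sizing method: the function name and the precision table are assumptions made for this example, and the estimate ignores activations, KV caches, and optimizer state.

```python
# Rough estimate of weight storage for a large model.
# Assumption: only the weights are counted; activations, KV cache,
# and optimizer state are ignored. Names here are illustrative.

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_footprint_tb(num_params: float, bytes_per_param: float) -> float:
    """Return weight storage in terabytes (1 TB = 10**12 bytes)."""
    return num_params * bytes_per_param / 1e12


if __name__ == "__main__":
    params = 1e12  # one trillion parameters
    for fmt, size in BYTES_PER_PARAM.items():
        print(f"{fmt}: {weight_footprint_tb(params, size):.1f} TB")
```

At 16-bit precision the weights of a 1-trillion-parameter model come to about 2 TB, which is why models of this size are spread across many GPUs in a single rack-scale system.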

HPE is enhancing AI and graphics performance across eight HPE ProLiant platforms by offering a wide range of NVIDIA-accelerated computing options. This includes the powerful RTX PRO 6000 Blackwell Server Edition and the compact NVIDIA RTX A1000 GPU. These options enable organizations in research, education, healthcare, finance, media, manufacturing, and retail to enhance demanding workloads with scalable, high-performance infrastructure.

HPE ProLiant and HPE Cray servers will quickly integrate with the latest NVIDIA offerings, including NVIDIA Rubin CPX GPUs and NVIDIA Vera Rubin CPX platforms. Future HPE server models will support NVIDIA ConnectX-9 SuperNIC and NVIDIA BlueField-4. NVIDIA BlueField-4 is a DPU created for gigascale AI factories, delivering 800 Gb/s throughput and featuring multi-tenant networking, AI runtime security, rapid data access, and high-performance inference processing.
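To put the 800 Gb/s figure in more familiar units, the sketch below converts a link rate in gigabits per second to gigabytes per second and estimates an idealized transfer time. The 2 TB payload is a hypothetical example, the function names are illustrative, and the calculation assumes the full line rate with no protocol overhead, so real transfers would take longer.

```python
# Idealized throughput arithmetic for a network link.
# Assumption: full line rate, no protocol or storage overhead.


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0


def transfer_seconds(num_bytes: float, gbps: float) -> float:
    """Return the ideal time in seconds to move num_bytes over a gbps link."""
    bits = num_bytes * 8.0
    return bits / (gbps * 1e9)


if __name__ == "__main__":
    link_gbps = 800.0   # throughput quoted for BlueField-4
    payload = 2e12      # hypothetical 2 TB payload
    print(f"{gbps_to_gigabytes_per_s(link_gbps):.0f} GB/s")
    print(f"{transfer_seconds(payload, link_gbps):.0f} s for 2 TB")
```

Under these assumptions, 800 Gb/s corresponds to 100 GB/s, and a 2 TB payload would take roughly 20 seconds to move.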
