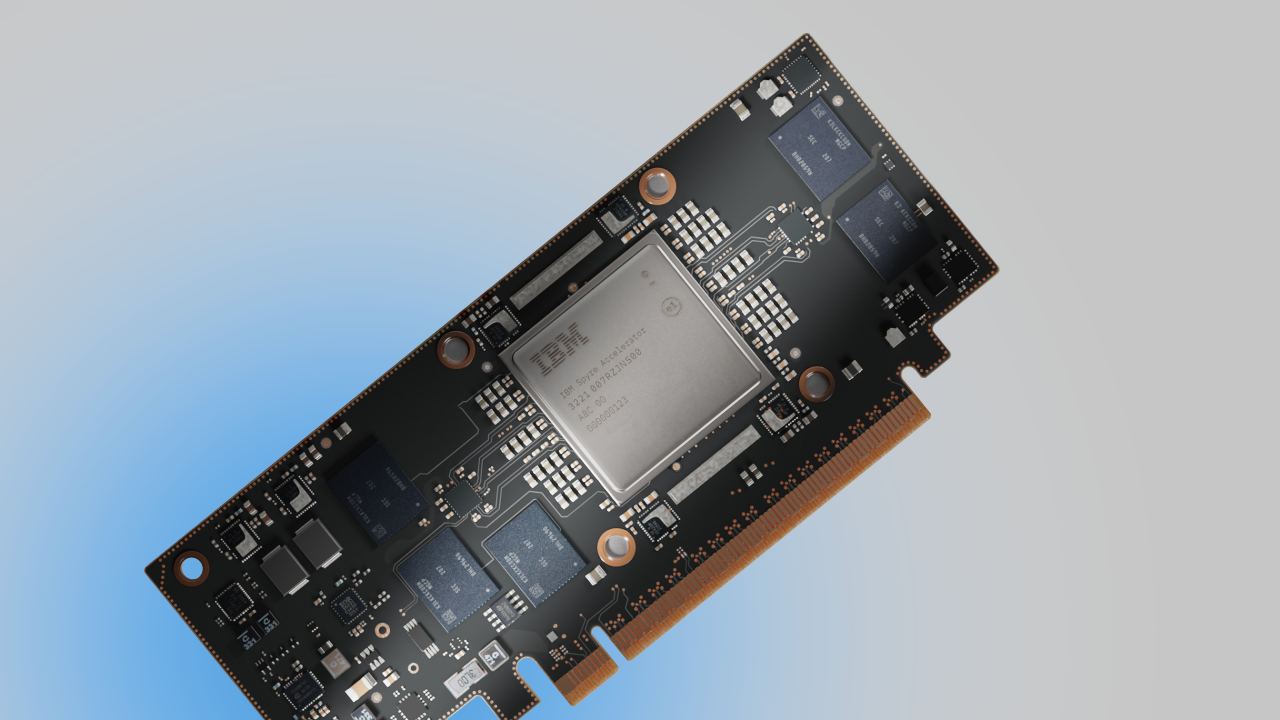

IBM has introduced the IBM Spyre Accelerator, a low-latency inference engine designed for generative and agentic AI where responsiveness, security, and uptime are mandatory. Spyre is designed for enterprises seeking to integrate AI with their existing systems of record, allowing sensitive data to remain on-platform without compromising performance.

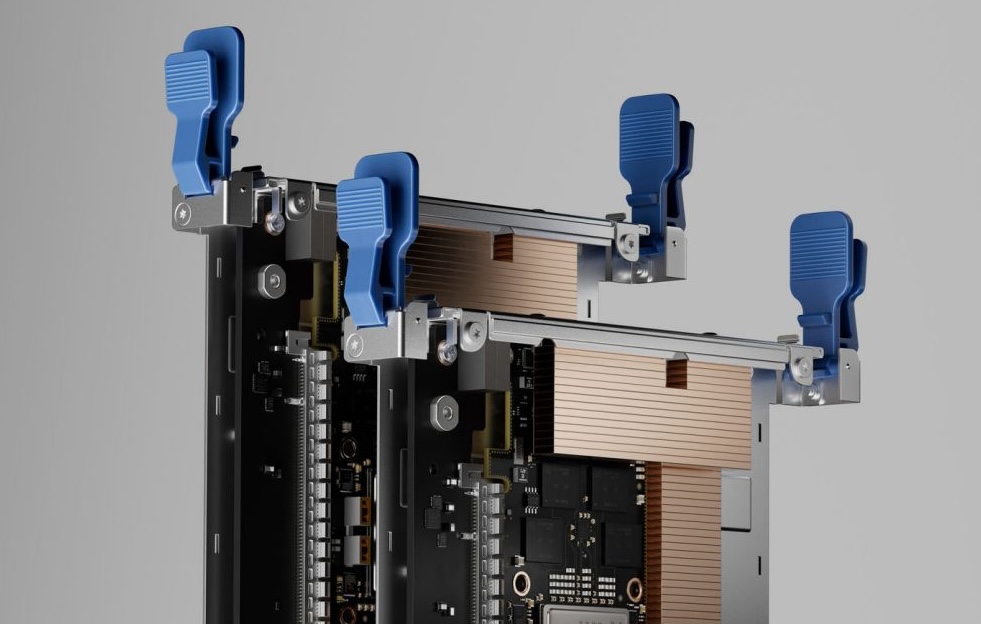

General availability begins October 28 for IBM z17 and LinuxONE 5, with Power11 to follow in early December. The accelerator is a 5nm, 32-core SoC packaged on a 75-watt PCIe card. IBM supports clusters of up to 48 cards per IBM Z or LinuxONE system and up to 16 cards per IBM Power system, enabling scale-out for multi-model inference adjacent to transactional workloads, such as fraud detection, retail automation, and risk scoring. Spyre represents the productization of IBM’s AI Hardware Center research, tuned for on-premises deployments where data locality, security, and energy efficiency are foundational requirements.

From Logic Pipelines to Agentic AI

Enterprise workflows are shifting away from deterministic logic chains toward agentic AI that perceives context, plans, and acts in real time. These agentic systems require low-latency inference and a predictable quality of service, even as core systems maintain high transaction volumes. IBM’s approach is to position dedicated inference hardware directly on the platforms where critical data already resides. Keeping data on IBM Z, LinuxONE, and Power reduces risk and compliance overhead by avoiding unnecessary data egress and external processing. Running generative and agentic inference close to the data also minimizes backhaul and network-induced variability, which is often where tail latency creeps in. The overarching goal is to add AI-driven decisioning into existing OLTP, batch, and messaging flows without compromising throughput, change windows, or service levels.

Spyre Architecture

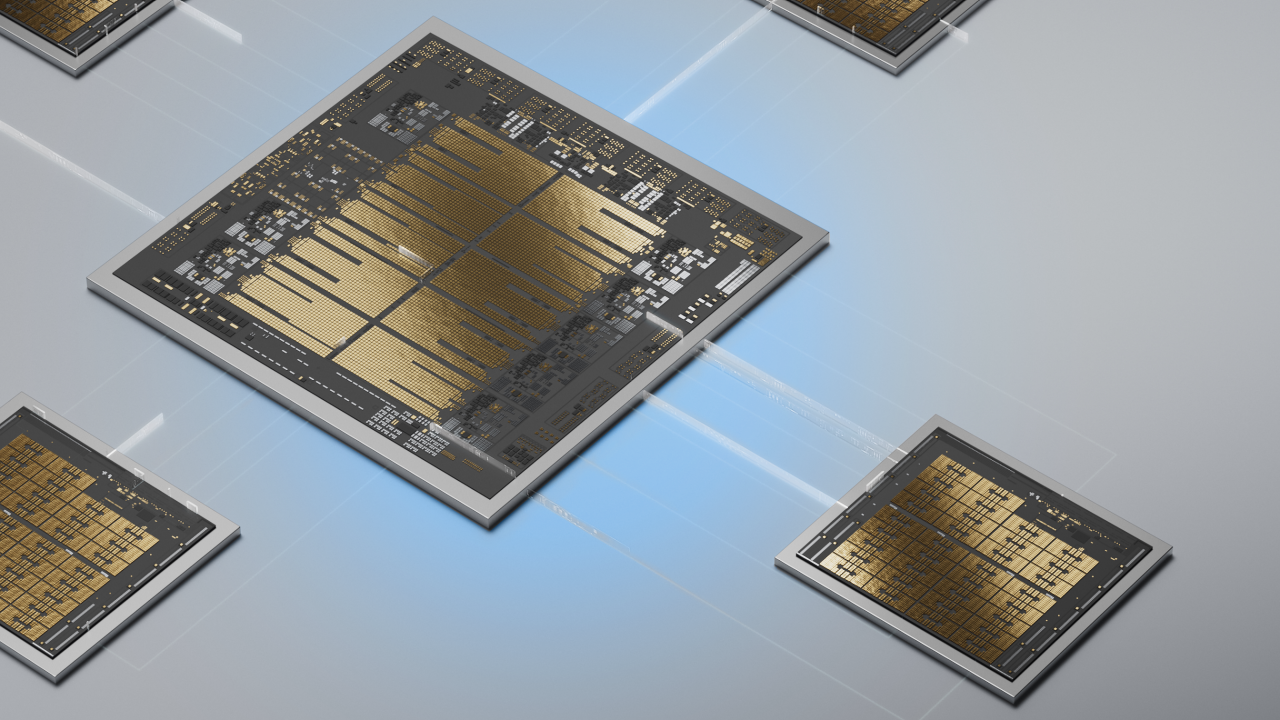

Spyre is a commercial system-on-a-chip (SoC) developed from prototypes created by IBM Research. Built on a 5nm process with 25.6 billion transistors and 32 accelerator cores, it is optimized for concurrent, low-latency inference rather than model training. The device is delivered as a 75-watt PCIe add-in card, giving infrastructure teams a familiar deployment and service model.

IBM has engineered scale-out configurations that allow up to 48 cards in an IBM Z or LinuxONE 5 system and up to 16 cards in an IBM Power11 system, enabling multi-model serving and tenant isolation within a single platform footprint. On the software side, Spyre integrates with IBM’s enterprise stack for model serving, security, and observability, while Power adds a one-click catalog to fast-track service deployment. The combination is designed to accelerate time-to-value while maintaining AI services within existing operational and compliance boundaries.
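For capacity planning, the per-card figures above can be multiplied out to a per-system envelope. The sketch below is simple arithmetic on the numbers quoted in this article (75 watts and 32 cores per card, 48 or 16 cards per system). It assumes the 75-watt rating is a per-card ceiling, so the totals are upper bounds on accelerator card power only, not measured draw and not whole-system power. The function and dictionary names are illustrative.

```python
# Per-card figures quoted in the article.
CARD_POWER_WATTS = 75
CORES_PER_CARD = 32

# Maximum supported cards per system, as quoted in the article.
MAX_CARDS = {"IBM Z / LinuxONE": 48, "IBM Power": 16}


def cluster_envelope(cards: int) -> dict:
    """Return aggregate card power (W) and accelerator core count for a cluster.

    Assumes every card runs at its full 75 W rating, so power is an upper bound.
    """
    return {
        "cards": cards,
        "power_watts": cards * CARD_POWER_WATTS,
        "cores": cards * CORES_PER_CARD,
    }


for platform, cards in MAX_CARDS.items():
    env = cluster_envelope(cards)
    print(f"{platform}: {env['cards']} cards, "
          f"up to {env['power_watts']} W, {env['cores']} accelerator cores")
```

At full population, that works out to at most 3,600 W and 1,536 accelerator cores on IBM Z or LinuxONE, and at most 1,200 W and 512 cores on Power.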

Platform Integration

IBM Z and LinuxONE: Co-location with Telum II

On z17 and LinuxONE 5, Spyre operates alongside the Telum II processor complex to keep inference co-resident with transactional data and event streams. This arrangement preserves existing security domains and audit trails, which are essential in regulated environments. By running inference locally, organizations minimize trips to external accelerators and prevent additional latency from impacting transaction SLAs. The system can scale up to 48 cards, supporting multiple models and workloads simultaneously, with logical isolation between them to serve different business units or regulatory requirements. This is especially relevant for payment flows, fraud prevention, claims handling, and supply chain events where every millisecond counts and expanding the compliance perimeter is not desirable.

IBM Power11: Catalog-Driven AI Services and MMA Synergy

On Power11 servers, Spyre integrates with an AI services catalog designed for rapid adoption within established Power estates. Administrators can deploy services with a single click, aligning with how Power customers traditionally operationalize middleware, analytics, and line-of-business applications. The pairing of Spyre with Power’s on-chip MMA accelerator improves data conversion and preprocessing throughput, which are critical stages for generative AI pipelines and complex process integrations.

IBM cites one throughput example: at a prompt size of 128, the system can process more than eight million documents for knowledge base integration in approximately one hour. For enterprises building RAG systems or running large-scale enterprise search, the gain comes from faster data preparation and ingestion, not only from the inference step. The overall fit targets mixed estates, such as manufacturing, retail, healthcare, and ERP-heavy deployments, where predictable throughput and serviceability are key.
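To put IBM's example in per-second terms, the quoted figures can be converted directly. The sketch below assumes exactly eight million documents in exactly 3,600 seconds (IBM says "over" and "approximately", so treat the result as a rough floor) and assumes the prompt size of 128 is measured in tokens, which the source does not state. The number of cards behind the figure is also not given, so this is an aggregate rate, not a per-card one.

```python
# Figures from IBM's cited example; both are rounded in the source.
DOCUMENTS = 8_000_000
ELAPSED_SECONDS = 3600

# Assumption: the "prompt size of 128" is a token count per document.
PROMPT_SIZE_TOKENS = 128


def docs_per_second(documents: int, seconds: float) -> float:
    """Average document throughput over the elapsed time."""
    return documents / seconds


rate = docs_per_second(DOCUMENTS, ELAPSED_SECONDS)
token_rate = rate * PROMPT_SIZE_TOKENS

print(f"about {rate:,.0f} documents per second")
print(f"about {token_rate:,.0f} prompt tokens per second (if 128 means tokens)")
```

That is roughly 2,200 documents per second sustained, which is a useful baseline when sizing an ingestion pipeline against a known corpus.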

From IBM AI Hardware Center to Field Deployments

Spyre demonstrates how IBM transforms research into practical products. The technology began as a prototype developed by IBM Research, then matured through iterative cluster deployments at the Yorktown Heights campus and collaborations with the University at Albany’s Center for Emerging Artificial Intelligence Systems. IBM Infrastructure subsequently productized the design for IBM Z, LinuxONE, and Power, aligning the silicon with enterprise requirements such as security, reliability, lifecycle management, and serviceability. IBM executives characterize Spyre as infrastructure for multi-model AI, emphasizing the secure and resilient scaling of generative and agentic workloads on platforms already trusted with core data and transactions.

Spyre’s value is inseparable from IBM’s operational posture. By running inference where data is created and governed, organizations limit data egress and reduce compliance complexity. IBM Z and LinuxONE provide cryptography, isolation, logging, and audit capabilities that are already integral to many customers’ regulatory frameworks. Power contributes a robust security and management stack suited to mixed analytics and transactional estates. Clustering support, logical partitioning, and first-party observability tools align with mainframe and Power operational norms, enabling AI services to be deployed, monitored, and serviced like any other tier-one workload. For many buyers in finance, healthcare, and the public sector, these operational assurances take precedence over raw TOPS or synthetic benchmarks.

Performance and Scalability Considerations

Although IBM has not released comprehensive per-model performance figures, the architecture signals its priorities. Spyre targets low-latency inference paths optimized for agentic loops and event-driven pipelines, where concurrency and tail latency control are more important than peak throughput alone. Scale-out via PCIe card clusters enables teams to size deployments for multi-model serving, tenant isolation, and future growth without requiring platform redesign.

On Power, the MMA-Spyre combination accelerates data preparation and conversion, which often dominates the end-to-end time for RAG and enterprise search. Enterprises should structure proofs of concept to mirror production conditions: model sizes and token windows, request concurrency, interaction with transactional loads, and telemetry for tail latency under contention.
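A proof of concept along these lines needs a way to capture latency percentiles under concurrent load. The following is a minimal sketch of such a harness, not an IBM tool: `fake_inference` is a hypothetical stand-in that simply sleeps, and would be replaced by a call to whatever serving endpoint is under test. The percentile uses the nearest-rank method, and the thread pool size sets request concurrency.

```python
import math
import random
import time
from concurrent.futures import ThreadPoolExecutor


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def fake_inference(prompt):
    """Hypothetical placeholder for a real inference call; sleeps 1 to 5 ms."""
    time.sleep(random.uniform(0.001, 0.005))
    return prompt.upper()


def timed_call(fn, prompt):
    """Return the wall-clock seconds taken by one call to fn(prompt)."""
    start = time.perf_counter()
    fn(prompt)
    return time.perf_counter() - start


def run_load(fn, prompts, concurrency):
    """Issue all prompts through a thread pool and return per-request latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda p: timed_call(fn, p), prompts))


# Small demonstration run: 64 requests, 8 in flight at a time.
latencies = run_load(fake_inference, ["sample prompt"] * 64, concurrency=8)
for pct in (50, 95, 99):
    print(f"p{pct}: {percentile(latencies, pct) * 1000:.2f} ms")
```

In a real evaluation, the same harness would be run while transactional load is active on the host, with prompt sizes and concurrency levels matched to production, so that the p99 figure reflects contention rather than an idle system.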

Barry Baker, COO of IBM Infrastructure and GM of IBM Systems, emphasized the company’s focus on developing infrastructure for emerging AI workloads. He highlighted the Spyre Accelerator’s role in enhancing systems to support multi-model AI, including generative and agentic AI. He added that this innovation aims to enable clients to scale mission-critical AI workloads securely, resiliently, and efficiently, while maximizing the value of enterprise data.

Mukesh Khare, General Manager of IBM Semiconductors, noted that IBM launched its AI Hardware Center in 2019 to address the growing computational demands of AI, even before the recent AI boom. He welcomed the commercialization of the center’s first chip, designed to enhance performance for IBM’s mainframe and server clients.

Use Cases

Financial services teams can utilize Spyre for real-time fraud detection, which operates directly against on-platform datasets, allowing for model refreshes and policy updates without expanding the audit perimeter. In retail and logistics, agentic assistants can coordinate inventory and fulfillment decisions with consistent response times by keeping inference local to the operational systems. Healthcare and government organizations can deploy generative summaries and decision support within strict privacy and residency constraints, avoiding data movement that would otherwise trigger additional compliance work. In industrial and ERP-heavy environments, Spyre can power process automation and anomaly detection close to SAP, Oracle, and plant telemetry systems running on Power, ensuring deterministic behavior and maintainability.

IBM Infrastructure leadership presents Spyre as foundational infrastructure for the AI shift, designed to support multi-model generative and agentic workloads at enterprise scale with strong security and resiliency guarantees. IBM Semiconductors’ leadership describes Spyre as the first commercialization milestone from the AI Hardware Center, anticipating a continuing cadence of hardware innovation for IBM’s mainframe and server customers.

Bottom Line

Spyre is IBM’s on-premises answer for low-latency inference in environments that cannot compromise on security, reliability, or data locality. The hardware scale, platform-native integrations, and operational model align with IBM Z, LinuxONE, and Power estates that seek agentic AI within the same blast radius as core transactions. The next step for buyers is evidence: proofs of concept that validate latency, concurrency, and governance advantages under real workloads. If performance in the field matches the brief, Spyre offers a pragmatic path to embed generative and agentic AI directly into mission-critical systems.

Availability

Spyre will be generally available for IBM z17 and LinuxONE 5 starting October 28. IBM Power11 systems will receive Spyre in early December. This staggered release gives mainframe and LinuxONE customers the earliest path to on-platform inference, with Power estates following shortly thereafter.
