One of the lesser-known products Mellanox was working on before the NVIDIA acquisition was a data processing unit (DPU) dubbed BlueField. The pitch six years ago is similar to the one today: give storage (and now accelerators) direct access to the network without a traditional x86 architecture in the way. After all, CPUs are better spent running applications than managing PCIe traffic. Adoption of BlueField has been very slow, however; fewer than a handful of commercial ventures leverage DPUs today. NVIDIA has a new push to help change that.

Why DPUs in the First Place?

The allure of the DPU is pretty mesmerizing, and that’s why NVIDIA is investing heavily in its success. Compared to the traditional high-speed Ethernet NIC most know and love, DPUs simply have more processing power on board, making them look more like mini-computers than data movement vehicles. To be fair, though, in the context of storage, the DPU’s main goal is still to move data quickly. It’s just that now, this can happen inside a JBOF, with no x86 needed at all.

NVIDIA DPU in VAST Data Node

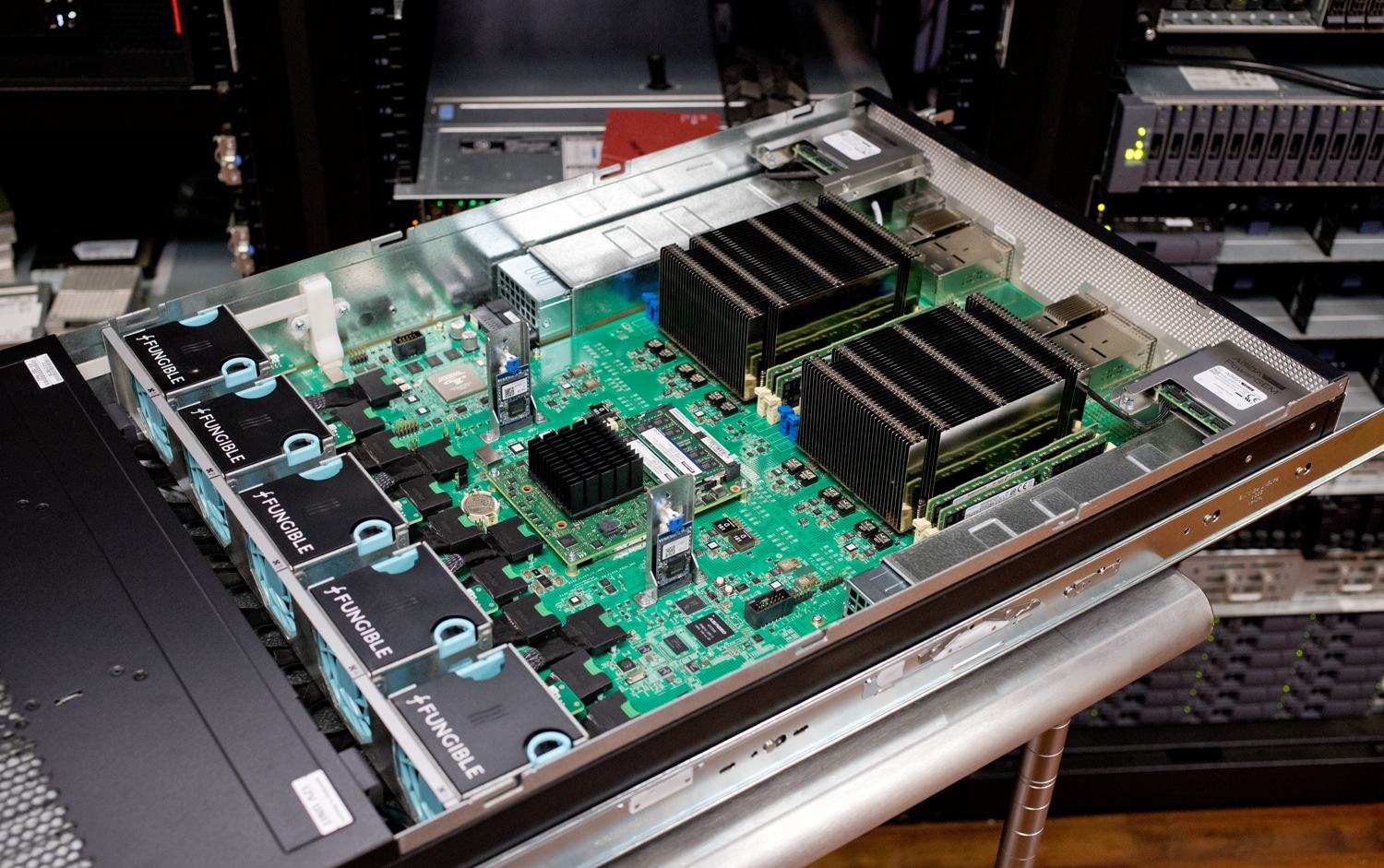

We’ve seen this recently in a couple of cases, one using the NVIDIA product and another built on a homegrown DPU. VAST Data is leveraging the NVIDIA DPU in its data nodes, which are now extremely dense 1U boxes that can share 675TB of raw flash. There are alternative routes, though; Fungible has a plan for disaggregation that leverages its own DPU. We’ve had its storage array in the lab, and the company has also recently announced a GPU effort.

Fungible Storage Array – DPUs Inside

If all of this DPU conversation sounds overwhelming, no one could blame you. There hasn’t been a fundamental shift in the way data is managed and moved in a very long time. We have a podcast with NVIDIA on DPUs from a storage perspective that’s a great primer on what’s going on in the market.

DPUs are Hard

Even after six or more years of work, we’ve seen only two examples of DPUs working well hands-on, and we can name fewer than a handful of companies that have even dabbled past lab validation. Why is that? From what systems vendors tell us, BlueField is really difficult to leverage. There’s an immense amount of software work to be done, and to date, the cards are not a simple drop-and-go product, so the lift is much heavier. Combine that with traditional storage companies’ reluctance to embrace leading-edge tech, plus each DPU requiring a different coding approach, and it’s little wonder DPU adoption has been so limited.

NVIDIA recognizes this, of course, and desperately needs faster vehicles to move data into its GPUs. To be fair, customers want this as well, especially for HPC workloads, where keeping expensive GPU investments busy full-time is a prime objective. So yesterday, NVIDIA made an effort to help ease this adoption pain.

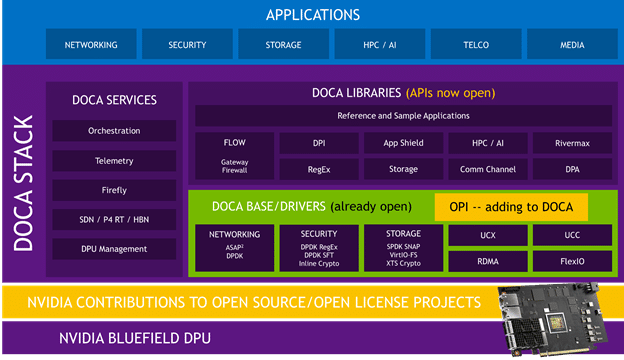

NVIDIA has become a founding member of the Linux Foundation’s Open Programmable Infrastructure (OPI) project. Fundamentally, NVIDIA has made its DOCA networking software APIs widely available. This means the weighty integration work to get operational on a DPU should conceivably be faster now.

The “OPI project aims to create a community-driven, standards-based, open ecosystem for accelerating networking and other data center infrastructure tasks using DPUs,” according to a blog post from NVIDIA. This is, of course, a good thing. Organizations and systems vendors that previously found the DPU wall too high to leap should now have a much easier path toward DPU adoption.

NVIDIA went on to share what it intends to provide to the project.

What’s Next for DPUs?

Based on what we’ve seen from VAST and Fungible, the DPU world is very real and ready to make a massive impact on the data center and cloud. Adoption has been challenging because software integration is difficult. Further, the hardware isn’t a drop-in replacement for NICs. Not that it’s positioned to be that way, but this isn’t like going from 25GbE to 200GbE by swapping NICs. The effort to integrate DPUs is nowhere close to trivial.

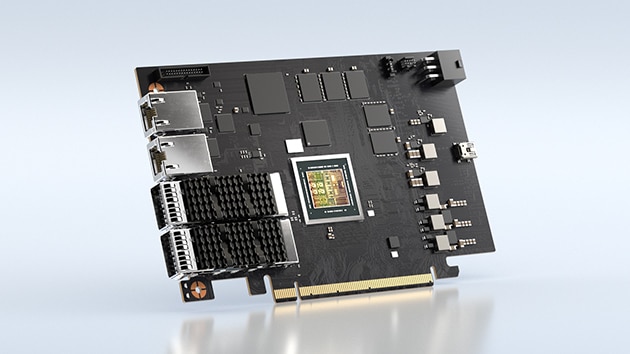

NVIDIA DPU

Initiatives like this should help the industry along, even if only on NVIDIA silicon. DPUs offer so much potential when it comes to making infrastructure faster, more secure, and ultimately more efficient. With almost every large organization pursuing green initiatives, the data center is a good place to start embracing modern infrastructure that doesn’t carry the same dependencies as the legacy stack.

We’re hopeful that this move toward open source breaks up the DPU logjam, because what’s possible with this technology is quite remarkable.
