The NVIDIA H100 Tensor Core GPU’s debut on the MLPerf industry-standard AI benchmarks set world records in inference on all workloads, delivering up to 4.5x more performance than previous-generation GPUs. NVIDIA A100 Tensor Core GPUs and the NVIDIA Jetson AGX Orin module for AI-powered robotics delivered overall leadership in inference performance across all MLPerf tests: image and speech recognition, natural language processing, and recommender systems.

The H100, aka Hopper, raised the bar in per-accelerator performance across all six neural networks, demonstrating leadership in throughput and speed in separate server and offline tests. Thanks partly to its Transformer Engine, Hopper excelled on the BERT model for natural language processing. BERT is among the largest and most performance-hungry of the MLPerf AI models.

These inference benchmarks mark the first public demonstration of H100 GPUs, available later this year. The H100 GPUs will participate in future MLPerf rounds for training.

A100 GPUs show leadership

NVIDIA A100 GPUs, available today from major cloud service providers and systems manufacturers, continued to show overall leadership in mainstream performance on AI inference by winning more tests than any other submission in data center and edge computing categories and scenarios. In June, the A100 also delivered overall leadership in MLPerf training benchmarks, demonstrating its abilities across the AI workflow.

Since their July 2020 debut on MLPerf, A100 GPUs have advanced their performance by 6x, thanks to continuous improvements in NVIDIA AI software. NVIDIA AI is the only platform to run all MLPerf inference workloads and scenarios in data center and edge computing.

Users need versatile performance

NVIDIA GPU performance leadership across all significant AI models validates the technology for users, as real-world applications typically employ many neural networks of different kinds. For instance, an AI application may need to understand a user’s spoken request, classify an image, make a recommendation, and then deliver a response as a spoken message in a human-sounding voice. Each step requires a different type of AI model.

The MLPerf benchmarks cover these and other popular AI workloads and scenarios, including computer vision, natural language processing, recommendation systems, and speech recognition. MLPerf results help users make informed buying decisions based on specific tests, ensuring they get a product that delivers dependable and flexible performance.

The MLPerf benchmarks are backed by a broad group that includes Amazon, Arm, Baidu, Google, Harvard, Intel, Meta, Microsoft, Stanford, and the University of Toronto.

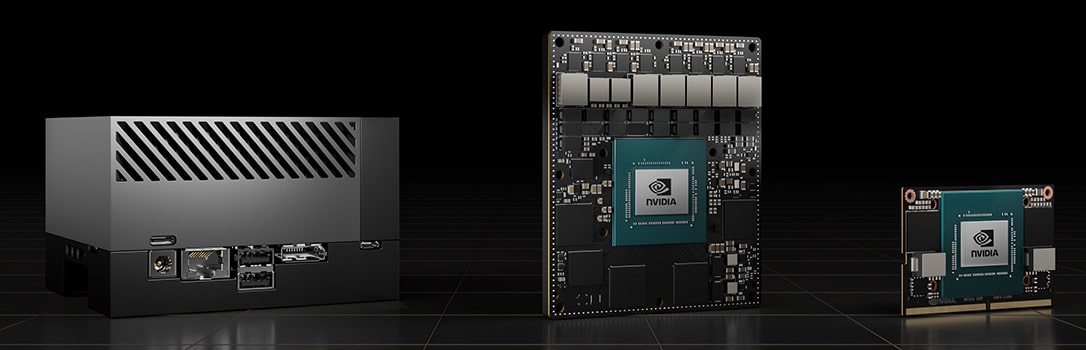

Orin leads at the edge

In edge computing, NVIDIA Orin ran every MLPerf benchmark and won more tests than any other low-power system-on-a-chip. It delivered as much as a 50% increase in energy efficiency compared with its April debut on MLPerf. In the previous round, Orin ran up to 5x faster than the prior-generation Jetson AGX Xavier module while delivering an average of 2x better energy efficiency.

Orin integrates into a single chip an NVIDIA Ampere architecture GPU and a cluster of powerful Arm CPU cores. It’s available today in the NVIDIA Jetson AGX Orin developer kit and production modules for robotics and autonomous systems. It supports the full NVIDIA AI software stack, including platforms for autonomous vehicles (NVIDIA Hyperion), medical devices (Clara Holoscan), and robotics (Isaac).

Broad NVIDIA AI ecosystem

The MLPerf results show that the industry’s broadest ecosystem backs NVIDIA AI in machine learning. More than 70 submissions in this round ran on the NVIDIA platform, and Microsoft Azure submitted results running NVIDIA AI on its cloud services.

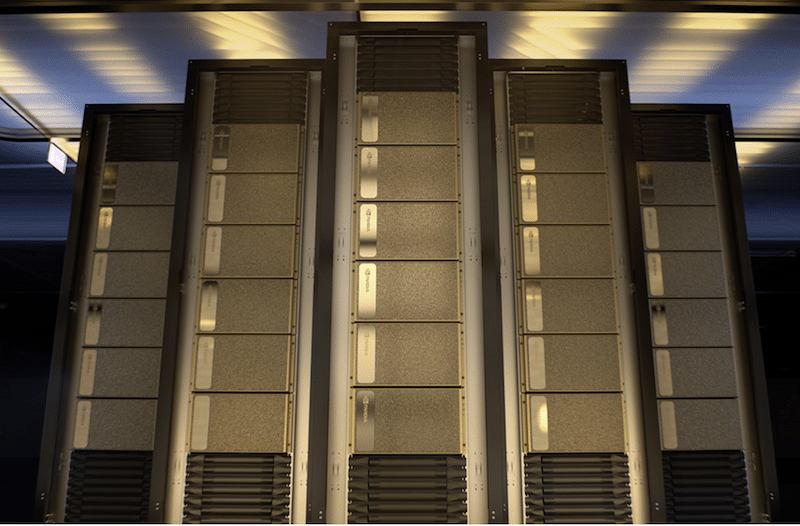

In addition, 19 NVIDIA-Certified Systems appeared in this round from 10 systems makers, including ASUS, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Lenovo, and Supermicro. Their work demonstrates excellent performance with NVIDIA AI in the cloud and on-premises.

MLPerf is a valuable tool for customers evaluating AI platforms and vendors. Results in the latest round demonstrate that the performance these partners deliver will grow with the NVIDIA platform. All the software used for these tests is available from the MLPerf repository, so anyone can reproduce these results. Optimizations are continuously folded into containers available on NGC, NVIDIA’s catalog for GPU-accelerated software. NVIDIA TensorRT, which every submission in this round used to optimize AI inference, is also available in the catalog.

We’ve recently run our own edge MLPerf benchmarks on Supermicro and Lenovo platforms with T4 and A2 GPUs inside.
