This year at ISC Digital, NVIDIA made a handful of announcements surrounding its A100 GPU. Alongside these, the company announced a new AI platform for supercomputing, the NVIDIA Mellanox UFM Cyber-AI Platform. NVIDIA also set a new record on yet another benchmark.

Last month, NVIDIA announced its new Ampere architecture and its first Ampere GPU, the 7nm NVIDIA A100, and released its own A100-based system, the DGX A100, at the same time. At ISC, NVIDIA announced a PCIe form factor for the A100, which allows the leading server vendors to build their own A100-based systems. These vendors and their systems include:

- ASUS will offer the ESC4000A-E10, which can be configured with four A100 PCIe GPUs in a single server.

- Atos is offering its BullSequana X2415 system with four NVIDIA A100 Tensor Core GPUs.

- Cisco plans to support NVIDIA A100 Tensor Core GPUs in its Cisco Unified Computing System servers and in its hyperconverged infrastructure system, Cisco HyperFlex.

- Dell Technologies plans to support NVIDIA A100 Tensor Core GPUs across its PowerEdge servers and solutions that accelerate workloads from edge to core to cloud, just as it supports other NVIDIA GPU accelerators, software and technologies in a wide range of offerings.

- Fujitsu is bringing A100 GPUs to its PRIMERGY line of servers.

- GIGABYTE will offer G481-HA0, G492-Z50 and G492-Z51 servers that support up to 10 A100 PCIe GPUs, while the G292-Z40 server supports up to eight.

- HPE will support A100 PCIe GPUs in the HPE ProLiant DL380 Gen10 Server, and for accelerated HPC and AI workloads, in the HPE Apollo 6500 Gen10 System.

- Inspur is releasing eight NVIDIA A100-powered systems, including the NF5468M5, NF5468M6 and NF5468A5 using A100 PCIe GPUs, the NF5488M5-D, NF5488A5, NF5488M6 and NF5688M6 using eight-way NVLink, and the NF5888M6 with 16-way NVLink.

- Lenovo will support A100 PCIe GPUs on select systems, including the Lenovo ThinkSystem SR670 AI-ready server. Lenovo will expand availability across its ThinkSystem and ThinkAgile portfolio in the fall.

- One Stop Systems will offer its OSS 4UV Gen 4 PCIe expansion system with up to eight NVIDIA A100 PCIe GPUs to allow AI and HPC customers to scale out their Gen 4 servers.

- Quanta/QCT will offer several QuantaGrid server systems, including D52BV-2U, D43KQ-2U and D52G-4U that support up to eight NVIDIA A100 PCIe GPUs.

- Supermicro will offer its 4U A+ GPU system, supporting up to eight NVIDIA A100 PCIe GPUs and up to two additional high-performance PCIe 4.0 expansion slots, along with other 1U, 2U and 4U GPU servers.

Downtime can plague any data center, but it is especially costly in supercomputing data centers. NVIDIA is looking to minimize downtime in InfiniBand data centers with the NVIDIA Mellanox UFM Cyber-AI Platform. As the name implies, the platform is an extension of the UFM portfolio that uses AI to detect security threats and operational issues and to predict network failures. Beyond detecting issues and threats (including attackers), the platform automatically takes corrective action to resolve them.

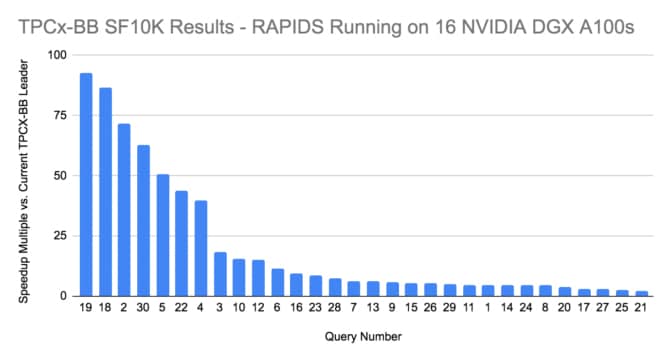

NVIDIA also announced that it had shattered yet another record, this time on the big data analytics benchmark TPCx-BB. NVIDIA ran the benchmark using RAPIDS, its suite of open-source data science software libraries, on 16 NVIDIA DGX A100 systems. The run completed in 14.5 minutes, compared with the previous record of 4.7 hours, making it roughly 19.5 times faster.
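As a quick sanity check on the reported speedup, converting the previous record to minutes and dividing by the new run time gives:

$$
\frac{4.7\ \text{h} \times 60\ \text{min/h}}{14.5\ \text{min}} = \frac{282\ \text{min}}{14.5\ \text{min}} \approx 19.4
$$

This is consistent with the roughly 19.5x figure NVIDIA reported; the small gap is presumably due to the previous record time being rounded to 4.7 hours.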
