At ISC 2025, NVIDIA announced several supercomputer milestones. The star of the show was JUPITER, powered by the NVIDIA Grace Hopper platform and ranked as the fastest supercomputer in Europe. JUPITER has achieved more than a 2x speedup for high-performance computing and AI workloads compared with the next-fastest system.

JUPITER is set to become Europe’s first exascale supercomputer and is expected to soon perform approximately one quintillion FP64 floating-point operations per second. The system enables faster simulation, training, and inference of the largest AI models, including those for climate modeling, quantum research, structural biology, computational engineering, and astrophysics, empowering European enterprises and nations to advance scientific discovery and innovation.

Among the top five systems on the TOP500 list of the world’s fastest supercomputers, JUPITER ranks as the most energy-efficient, achieving 60 gigaflops per watt.
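To put an efficiency figure like this in perspective, the short sketch below converts gigaflops per watt into the electrical power needed to sustain a given compute rate. The 60 gigaflops-per-watt value comes from the text above; the one-exaflop sustained rate is only an illustrative assumption, not a measured JUPITER result, and the function name is hypothetical.

```python
GIGA = 1e9   # flops in one gigaflop
EXA = 1e18   # flops in one exaflop
MEGA = 1e6   # watts in one megawatt


def power_megawatts(sustained_flops: float, gflops_per_watt: float) -> float:
    """Return the power in MW needed to sustain `sustained_flops`
    at an efficiency of `gflops_per_watt`."""
    if gflops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    watts = sustained_flops / (gflops_per_watt * GIGA)
    return watts / MEGA


if __name__ == "__main__":
    # Assumed workload: exactly one exaflop sustained (illustrative only).
    print(f"{power_megawatts(1 * EXA, 60.0):.1f} MW")  # prints "16.7 MW"
```

Under these assumptions, a machine running at 60 gigaflops per watt would draw roughly 17 MW for every sustained exaflop.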

Comprising nearly 24,000 NVIDIA Grace Hopper Superchips interconnected with the NVIDIA Quantum-2 InfiniBand networking platform, JUPITER is expected to achieve over 90 exaflops of AI performance. It is based on Eviden’s BullSequana XH3000 liquid-cooled architecture.

According to Jensen Huang, Founder and CEO of NVIDIA, AI will supercharge scientific discovery and industrial innovation. He added that by partnering with Jülich and Eviden, they can build Europe’s most advanced AI supercomputer, which will enable leading researchers, industries, and institutions to expand human knowledge, accelerate breakthroughs, and drive national advancement.

Built for Scientific Breakthroughs

JUPITER is owned by the EuroHPC Joint Undertaking and hosted by the Jülich Supercomputing Centre at the Forschungszentrum Jülich facility in Germany.

Anders Jensen, executive director of the EuroHPC Joint Undertaking, stated that JUPITER’s exceptional computing capabilities mark a significant advancement for Europe in science and technology, facilitating key research in areas such as climate modeling, energy systems, and biomedical innovation.

Thomas Lippert, co-director of the Jülich Supercomputing Centre, stated that JUPITER represents a significant advancement for European science and technology. It uses NVIDIA’s computing and AI platforms to enhance foundation model training and high-performance simulation, helping researchers in Europe tackle complex challenges.

Early testing of JUPITER was conducted with the Linpack benchmark, which was also used to determine the TOP500 ranking.
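At its core, Linpack times the solution of a large dense system of linear equations and reports the resulting floating-point rate. The toy sketch below illustrates that idea in pure Python. It is not the distributed, heavily optimized HPL code used for TOP500 submissions; the function names are hypothetical, and the operation count of (2/3)n³ + 2n² is the conventional nominal figure for this kind of solve, assumed here for illustration.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on copies so the caller's data is left untouched.
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: use the row with the largest entry in column k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k from every row below the pivot.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_rate(n, seed=0):
    """Time one random n x n solve; return (flops per second, max residual)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    nominal_flops = (2.0 / 3.0) * n**3 + 2.0 * n**2  # assumed nominal count
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return nominal_flops / elapsed, residual


if __name__ == "__main__":
    rate, residual = linpack_style_rate(100)
    print(f"{rate / 1e6:.2f} Mflop/s, max residual {residual:.2e}")
```

The residual check matters as much as the timing: a benchmark run only counts if the computed solution actually satisfies the system to within floating-point tolerance.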

The JUPITER supercomputer unites NVIDIA’s end-to-end software stack to solve challenges in areas including:

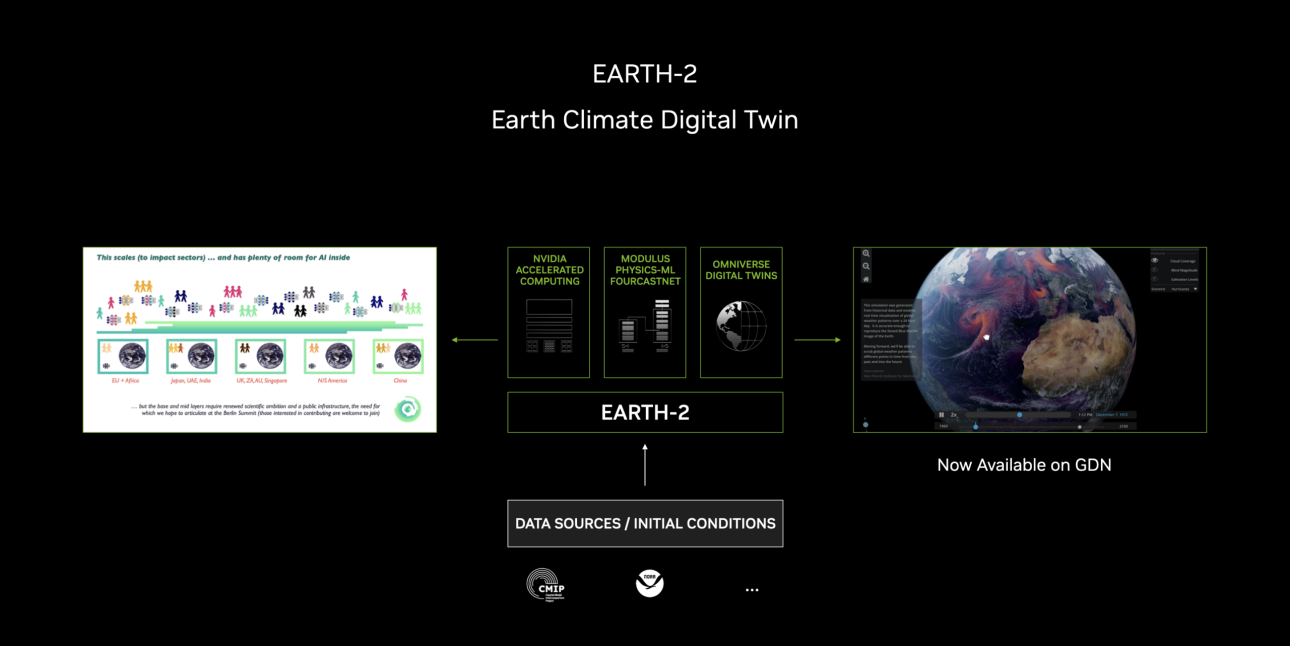

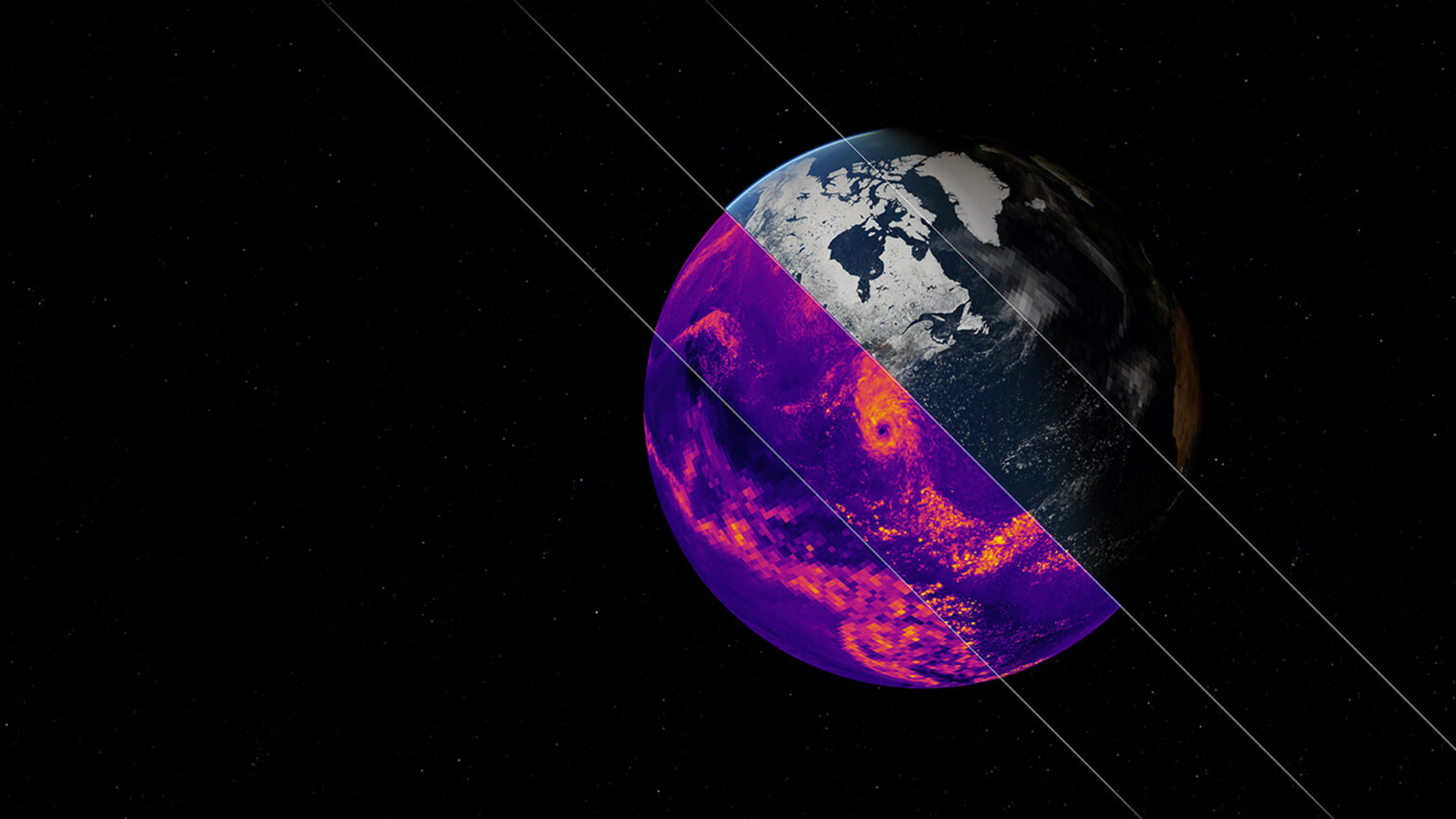

- Climate and weather modeling: Enables high-resolution, real-time environmental simulations and visualization, using the NVIDIA Earth-2 open platform. This contributes to global community initiatives, such as the Earth Virtualization Engines project, which aims to create a digital twin of the Earth to better understand and address climate change.

- Quantum computing research: Advances quantum algorithms and hardware development with powerful tools such as the NVIDIA CUDA-Q™ platform and the NVIDIA cuQuantum software development kit.

- Computer-aided engineering: Reinvents product design and manufacturing through AI-driven simulation and digital twin technologies, powered by the NVIDIA PhysicsNeMo™ framework, NVIDIA CUDA-X™ libraries, and the NVIDIA Omniverse™ platform.

- Drug discovery: Streamlines the creation and deployment of AI models vital to pharmaceutical research through the NVIDIA BioNeMo™ platform, accelerating time to insight in biomolecular science and drug discovery.

Blue Lion and Doudna Supercomputers Will Run on NVIDIA Vera Rubin

Two new supercomputers, one new architecture. Vera Rubin is designed to transform how science is conducted, in real time and at massive scale.

Germany’s Leibniz Supercomputing Centre, LRZ, is gaining a new supercomputer that offers approximately 30 times the computing power of SuperMUC-NG, the existing high-performance computer at LRZ. It is named Blue Lion and will operate on the NVIDIA Vera Rubin architecture.

The specifics of the new supercomputer were kept quiet until now. LRZ, part of the Gauss Centre for Supercomputing, Germany’s leading HPC institution, had only hinted that its next system would use “next-generation” NVIDIA accelerators and processors. That next generation is Vera Rubin, NVIDIA’s upcoming AI and accelerated science platform.

The Vera Rubin platform was in the news recently when the Lawrence Berkeley National Lab unveiled Doudna, its next flagship system, which Vera Rubin will also power.

Vera Rubin is a superchip that combines the Rubin GPU, the successor to NVIDIA Blackwell, and the Vera CPU, NVIDIA’s first custom CPU designed to work in lockstep with the GPU.

Together, they create a platform designed to merge simulation, data, and AI into a single, high-bandwidth, low-latency engine for science. It integrates shared memory, coherent compute, and in-network acceleration, and is set to launch in the second half of 2026.

Blue Lion: Built by HPE

HPE is building Blue Lion on next-generation HPE Cray technology, incorporating NVIDIA GPUs in a system with robust storage and interconnect. The architecture uses HPE’s 100% fanless direct liquid cooling, which circulates warm water through pipes to cool the supercomputer efficiently.

It’s designed for researchers working in climate, turbulence, physics, and machine learning, incorporating workflows that merge traditional simulations with modern AI. Jobs can scale throughout the entire system. Heat generated from the racks will be reused to warm nearby buildings.

And it’s not just local. Blue Lion will support collaborative research projects across Europe.

Don’t forget Doudna

Recently, we published news about the NVIDIA and Dell Technologies supercomputer, Doudna, which will support large-scale HPC workloads in molecular dynamics, high-energy physics, and AI training and inference. Doudna is a Dell system powered by NVIDIA’s next-generation Vera Rubin platform, providing an environment for developing cutting-edge scientific research workflows.

The Doudna supercomputer will deliver over ten times the performance of Perlmutter, NERSC’s current flagship supercomputer. Doudna will be built using state-of-the-art technology, including Dell’s most advanced ORv3 direct liquid-cooled server technology and the NVIDIA Vera Rubin CPU-GPU platform.

The system, named after biochemist and Nobel Laureate Jennifer Doudna, is expected to be available in 2026. The name was given in recognition of her work in the field of gene-editing technology, specifically CRISPR.

The new supercomputer will accelerate the design of advanced materials, biomolecular modeling, and fundamental physics.

Quantum simulation tools, including NVIDIA’s CUDA-Q platform, will enable the development, modeling, and verification of quantum algorithms on quantum computers at scale.

NVIDIA Earth-2 Generative AI Foundation Model

NVIDIA unveiled a first-of-its-kind AI model designed to transform climate modeling and analytics for improved prediction, understanding, and response to climate change. cBottle, short for Climate in a Bottle, is the world’s first generative AI foundation model designed to simulate global climate at kilometer-scale resolution.

Part of the NVIDIA Earth-2 platform, the model can generate realistic atmospheric states that can be conditioned on inputs such as the time of day, day of the year, and sea surface temperatures. This provides a new approach to understanding and anticipating Earth’s most complex natural systems.

The Earth-2 platform features a software stack and tools that combine the power of AI, GPU acceleration, physical simulations, and computer graphics to deliver enhanced capabilities. This enables the creation of interactive digital twins for simulating and visualizing weather, as well as providing climate predictions on a planetary scale. With cBottle, these predictions can be made thousands of times faster and with greater energy efficiency than traditional numerical models, without compromising accuracy.

cBottle was field-tested at the World Climate Research Programme Global KM-Scale Hackathon. The event was organized across eight countries and ten climate simulation centers, aiming to advance the analysis and development of high-resolution Earth-system models while broadening access to high-resolution, high-fidelity climate data.

Revolutionizing Climate Modeling With AI

Climate informatics is traditionally time-, labor-, and compute-intensive, requiring sophisticated analysis of tens of petabytes of stored data.

However, cBottle incorporates NVIDIA GPU acceleration and a highly optimized NVIDIA Earth-2 stack. It utilizes advanced AI to compress massive amounts of climate simulation data, reducing petabytes of data by up to 3,000 times for an individual weather sample. That translates to a 3,000,000x data size reduction for a collection of 1,000 samples.
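One way to read the two figures together (an interpretation, not something the source spells out) is that the compressed representation keeps roughly the same size no matter how many samples it stands in for, so the overall reduction factor grows linearly with the size of the collection. The hypothetical helper below illustrates only that arithmetic.

```python
def collection_reduction(per_sample_factor: float, n_samples: int) -> float:
    """Overall reduction factor for a collection of samples.

    Assumption (not stated in the source): raw storage grows linearly with
    the number of samples while the compressed form stays the same size.
    """
    if n_samples < 1:
        raise ValueError("need at least one sample")
    return per_sample_factor * n_samples


if __name__ == "__main__":
    print(f"{collection_reduction(3_000, 1):,.0f}x")      # prints "3,000x"
    print(f"{collection_reduction(3_000, 1_000):,.0f}x")  # prints "3,000,000x"
```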

cBottle was trained on high-resolution physical climate simulations and measurement-constrained estimates of observed atmospheric states from the last 50 years.

The model can fill in missing or corrupted climate data, correct biased climate models, super-resolve low-resolution climate data, and synthesize information based on patterns and prior observations. cBottle’s extreme data efficiency allows training on just four weeks of kilometer-scale climate simulations.

Using cBottle in NVIDIA Earth-2, developers can build climate digital twins to explore and visualize kilometer-scale climate data interactively, as well as predict potential scenarios with low latency and high throughput.

NVIDIA is not alone in the Supercomputer Biz

El Capitan: NNSA’s first exascale machine

Lawrence Livermore National Laboratory (LLNL) has been a dominant figure in the TOP500 high-performance computing rankings. LLNL began installing components in May 2023 for NNSA’s first exascale supercomputer, El Capitan. An exascale supercomputer can perform at least one quintillion double-precision (64-bit) floating-point operations per second (1 exaflop). Deployed in 2024, El Capitan is ranked as the world’s most powerful supercomputer, capable of more than 2.79 exaflops.

El Capitan’s purpose

El Capitan was a collaboration among the three NNSA labs—Livermore, Los Alamos, and Sandia. Its capabilities help researchers ensure the safety, security, and reliability of the nation’s nuclear stockpile without underground testing. The machine is essential for designing and managing a modernized stockpile and other critical national security missions. Research conducted on El Capitan also supports unclassified mission areas critical to national security, such as material discovery, high-energy-density physics, nuclear data, material equations of state, and conventional weapon design.

El Capitan and Tuolumne supercomputers

To ensure its full computing potential, LLNL is investing in cognitive simulation capabilities, including artificial intelligence (AI) and machine learning (ML) techniques, which will benefit both unclassified and classified missions.

Tuolumne and RZAdams

Research conducted on El Capitan’s largest “sibling” system, Tuolumne, supports various unclassified projects in energy security, earthquake simulations, cancer drug discovery, and other areas of public interest. Similarly, El Capitan’s smaller, unclassified “sibling” system, RZAdams, supports both weapons and non-weapons missions. Both systems were acquired under the El Capitan contract and arrived in 2024.

El Capitan’s novel system software strategy

El Capitan is the first ASC Advanced Technology System, the class that encompasses ASC’s largest systems, to use the Tri-Lab Operating System Software (TOSS), the same environment and operating system employed by ASC’s commodity technology machines. This advancement streamlines system administration and improves the user experience.

Locating El Capitan

Installing the HPE/AMD system required the efforts of hundreds of people, as well as partnerships between the public and private sectors. Years of careful planning and preparation paved the way for its successful arrival, which included a massive construction upgrade of power and water to LLNL’s HPC facility.

While it is one of the world’s most energy-efficient supercomputers, El Capitan still requires approximately 30 megawatts (MW) of power to run at peak, enough to supply a mid-sized city.

Amazon
