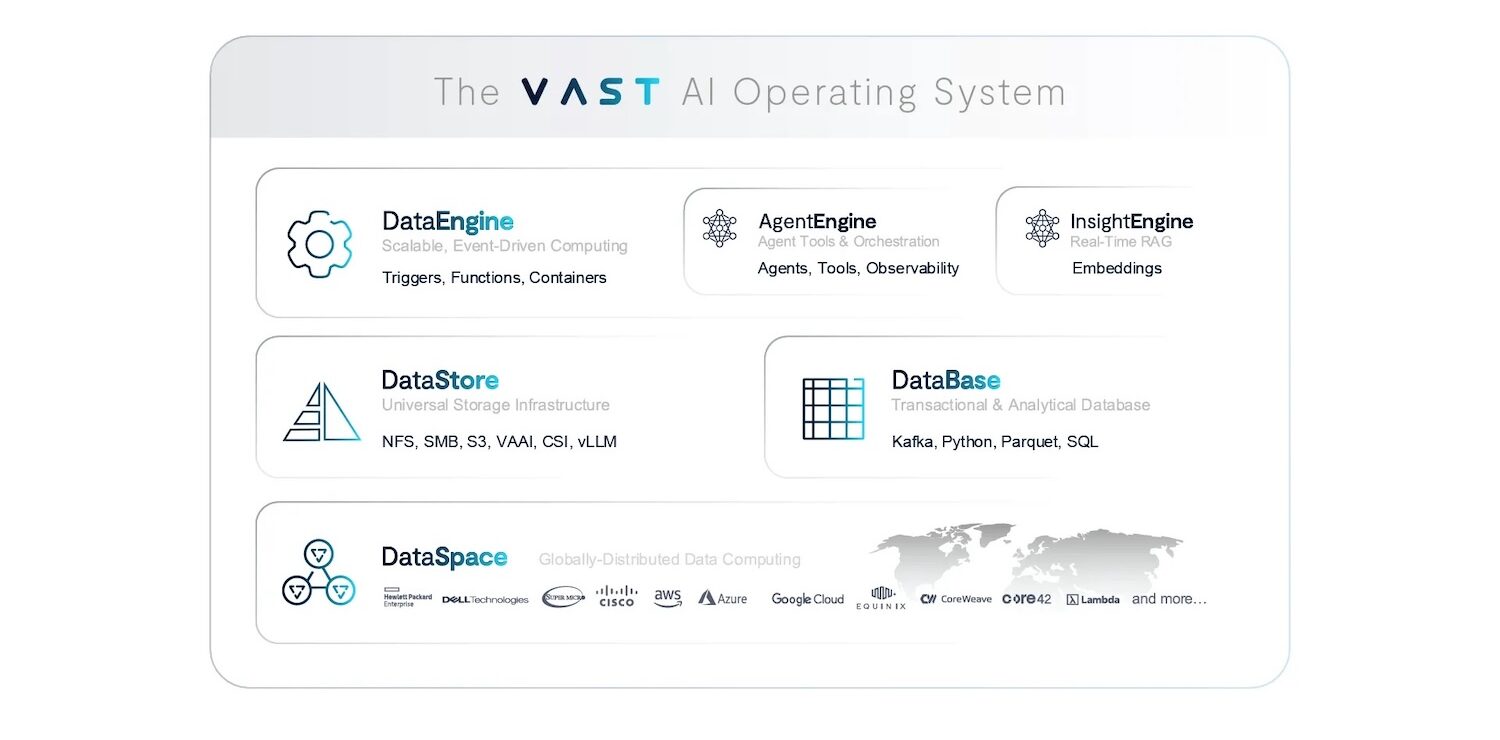

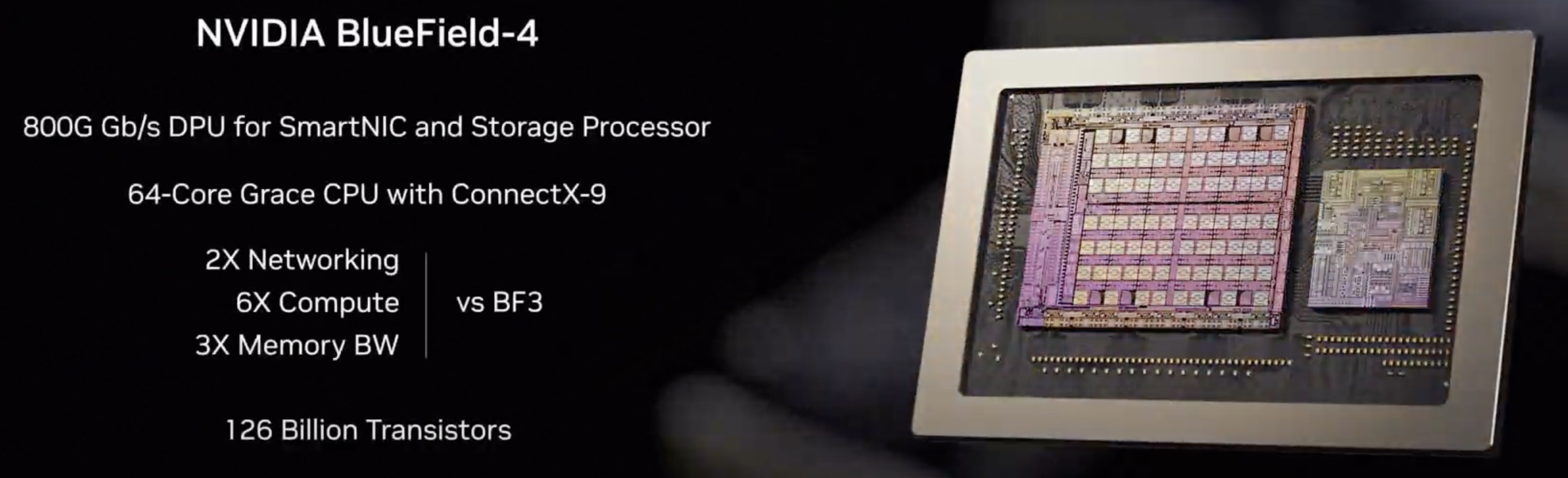

VAST Data has launched a new inference architecture that supports the NVIDIA Inference Context Memory Storage Platform. This system focuses on AI applications that involve ongoing, multi-turn agent-driven sessions. VAST presents this platform as a storage class designed for AI, enhancing access to key-value (KV) cache, enabling fast sharing of inference context between nodes, and improving power efficiency. The design utilizes NVIDIA BlueField-4 DPUs and Spectrum-X Ethernet networking, while the VAST AI Operating System (AI OS) provides the necessary software to manage this shared infrastructure resource effectively.

For more on the importance of KV Cache, check out this deep dive we completed with Pliops.

The announcement signals a change in the factors that limit inference performance in real-world applications. As inference shifts from simple prompts to ongoing reasoning across multiple agents and users, the idea that context stays within a single GPU server becomes outdated. In these situations, overall performance increasingly relies on how well inference history is stored, restored, reused, extended, and shared during ongoing operations. The KV cache evolves from an internal optimization to a primary data path, affecting time-to-first-token (TTFT), GPU usage, and cluster efficiency.

Rebuilding the Inference Data Path Around DPUs

VAST’s strategy is to run the VAST AI OS software directly on NVIDIA BlueField-4 DPUs. Rather than viewing storage and context services as external components accessed through a typical client-server model, the architecture integrates these data services directly into the GPU server, where inference occurs, while also supporting dedicated data node configurations. This approach aims to minimize bottlenecks and eliminate unnecessary copies and delays that slow performance as concurrency increases.

This DPU-native design explicitly addresses the practical needs of large inference systems. When hundreds or thousands of sessions run at the same time, managing the KV cache turns into continuous input/output (I/O) work. The system must quickly read context to keep sessions running, store it for long-term use, and share it among workers to prevent duplicate processing when the infrastructure funnels operations through slower pathways or requires extensive coordination. TTFT increases, causing GPUs to wait rather than process. VAST asserts that moving the data plane to the server via BlueField-4 yields more efficient context traffic, higher throughput, and more reliable latency, closer to the GPU processing loop.

VAST also emphasizes an efficient route from GPU memory to persistent NVMe storage using RDMA fabrics. This effectively allows context to transfer smoothly between GPU servers and storage, reducing the repetitive data handling common in traditional systems. The aim is to ensure context movement stays “in band” with high-performance networking while minimizing CPU involvement and client-side overhead.

Shared, Coherent Context at Scale

The new architecture works alongside VAST’s parallel Disaggregated Shared Everything (DASE) design. VAST explains that this allows each host to access a shared, globally consistent context namespace without the coordination costs that typically arise at scale. In long-lived, agent-driven inference, this detail becomes crucial. Context is not only large but increasingly shared.

Agentic systems often require simultaneous tool use, handoffs between multiple agents, and memory-like behavior during interactions. This necessitates reusing context rather than recreating it and sharing it among inference workers rather than tying it to a single node. If systems cannot maintain context locality due to scheduling, eviction, or failure recovery, they must quickly restore and continue sessions without reprocessing lengthy prompt histories. In this way, the KV cache transforms into a vital cluster resource, shifting the challenge to making it widely accessible without becoming a synchronization bottleneck.

VAST’s perspective is that combining DPU-based data services with a shared-everything storage setup creates a globally accessible context layer that stays efficient as the number of concurrent sessions grows. Instead of scaling by adding isolated context sections, the platform enables clusters to expand while still benefiting from reuse and sharing.

Context as the New Performance Envelope

VAST executive John Mao noted that the competitive advantage for large inference systems is shifting from sheer computing power to memory and context systems. He summarized that the most effective clusters won’t just be those with the highest peak FLOPS; they will be those capable of real-time context sharing and management. He also highlighted the importance of instant, on-demand access to context as a key performance area, warning that without quick context retrieval and reuse, GPUs remain idle and efficiency suffers.

This view aligns with many operators’ experiences as models scale and session lengths increase. Inference fleets are often configured for maximum token production, but the experience is shaped by tail latency and TTFT under stress. As prompts lengthen and sessions persist, the time spent on context management rises. If context cannot be quickly restored or reused, GPU cycles get wasted on re-establishing state or waiting for data to move. VAST’s architectural approach treats the KV cache as shared infrastructure, reducing these issues and boosting overall cluster efficiency.

Moving From Experimentation to Governed, Production Inference

VAST is also presenting the platform as a transition from experimental deployments to production-ready inference services, including in regulated settings. As inference becomes a revenue-generating service with specific security and compliance requirements, managing the KV cache requires more than speed; it requires oversight.

In long sessions, the context may include sensitive prompts, reasoning steps, tool outputs, and user-provided information. Businesses increasingly require policy controls, isolation measures, audit trails, and lifecycle management for that context. VAST claims that these AI-native data services are built into the AI OS, enabling organizations to treat the KV cache as a manageable resource rather than a temporary state.

From an operational perspective, VAST aims to address common challenges in large inference environments: context rebuild storms that occur when caches are lost or cannot be shared, and wasted GPU time when context becomes the bottleneck rather than computation. The company believes that enhancing context persistence and reuse will reduce recomputation and increase usage, thereby improving both throughput per watt and throughput per dollar as context sizes and session concurrency rise.

NVIDIA: Context Management Drives Scaling Economics

Kevin Deierling, Senior Vice President of Networking at NVIDIA, emphasized the importance of context in AI, likening it to human memory. He stated that managing context effectively is essential for scaling multi-turn and multi-user inference, which in turn changes how context memory is handled at scale. He noted that VAST Data AI OS, in combination with NVIDIA BlueField-4, supports this by providing a high-throughput, stable data path as workloads increase. This partnership is important because it integrates DPUs and Ethernet fabric into the core inference context, not just for primary storage access or networking. The key point is that context now poses a challenge for both networking and storage, in addition to being a GPU issue, and modern inference setups will require focused acceleration and reliable data movement to keep GPUs operating efficiently.

If VAST’s architecture provides the promised benefits, the real effect will be less about peak benchmark scores and more about consistent service performance: lower TTFT when many sessions are active, fewer performance drops as context increases, better utilization by reducing context rebuilding, and a more straightforward path to multi-node inference where context sharing is an advantage rather than a drawback. It also pushes inference infrastructure toward a more “AI-native” model, treating the KV cache as a managed memory tier, supported by persistent NVMe and shared across nodes with tight latency limits.

VAST Forward Conference

VAST Data will present its strategy at the first VAST Forward user conference, scheduled for February 24–26, 2026, in Salt Lake City, Utah. The company plans to include detailed technical sessions, hands-on labs, and certification programs, featuring VAST leadership, customers, and partners.

Amazon

Amazon