A few years ago, while attending EMC World, I had the chance to meet with Chuck Hollis and several other VMware employees to talk about an idea they had. They wanted to make use of the untapped local storage inside an ESX host. Rather than simply holding the ESX boot image, that storage would become usable by virtual machines across the entire ESX cluster. We talked over what sounded like a clustered file system that could protect data across ESX hosts. I pointed out that spinning disks are slow, and they talked about using SSDs to accelerate I/O operations. It was an interesting idea, and I was curious whether anything would ever come of it. As you might have guessed, something did: Virtual SAN. With the initial release of VSAN I was interested but, admittedly, a bit underwhelmed. Here we are, a year after general availability, and VSAN has taken a leap forward with the latest update, VSAN 6.0.

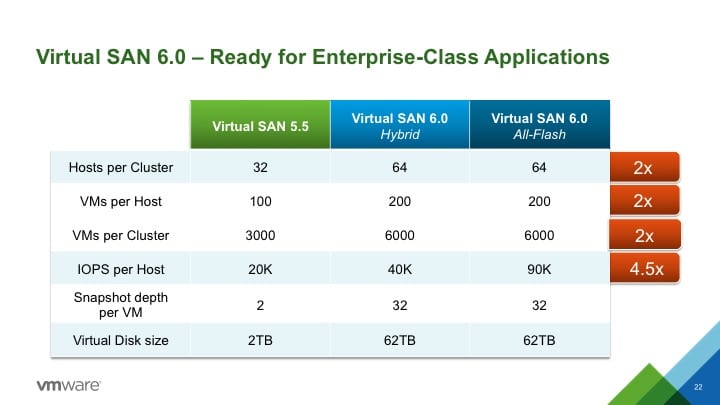

Of course, VSAN 6.0 is better, faster, and bigger. In alignment with vSphere 6.0, VMDKs can grow to 62TB, and a cluster can have up to 64 hosts. The number of virtual machines per node has doubled from 100 to 200, with a maximum of 6,400 per cluster. More nodes mean more capacity and more performance; using 4TB drives puts the limit at around 9 petabytes of raw capacity.
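
The 9 petabyte figure can be reproduced with back-of-the-envelope arithmetic. This sketch assumes per-host maximums of 5 disk groups with up to 7 capacity drives each; those limits are not stated above, so treat them as assumptions to check against VMware's published configuration maximums.

```python
# Back-of-the-envelope raw capacity for a maximally sized VSAN 6.0 cluster.
# ASSUMPTION: 5 disk groups per host and 7 capacity drives per disk group
# (verify against VMware's configuration maximums).
HOSTS_PER_CLUSTER = 64
DISK_GROUPS_PER_HOST = 5
CAPACITY_DRIVES_PER_GROUP = 7
DRIVE_TB = 4


def raw_capacity_tb(hosts: int, groups: int, drives: int, drive_tb: int) -> int:
    """Raw (pre-protection) capacity in decimal terabytes."""
    return hosts * groups * drives * drive_tb


total_tb = raw_capacity_tb(
    HOSTS_PER_CLUSTER, DISK_GROUPS_PER_HOST, CAPACITY_DRIVES_PER_GROUP, DRIVE_TB
)
print(f"{total_tb} TB = {total_tb / 1000:.2f} PB raw")
```

This is raw capacity only; keeping extra copies of data for availability reduces the usable space.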

VSAN 6.0 introduces a new Storage Policy Based Management model, which applies policies at the per-VM level rather than to the entire datastore. Each VM can have its own settings for things like availability, performance, and thin provisioning. These settings are dynamic, so if a VM suddenly needs extra protection, VSAN will adapt. Compared to previous versions, this approach is far simpler and allows more granular control.
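
To make the per-VM idea concrete, here is a minimal sketch of policies keyed by VM with a default as the fallback. The class names, field names, and VM names are hypothetical, loosely modeled on VSAN's availability, performance, and provisioning settings; the replica count assumes mirroring, where tolerating n failures requires n + 1 copies of the data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class StoragePolicy:
    # Hypothetical field names, loosely modeled on VSAN policy settings.
    failures_to_tolerate: int = 1   # availability
    stripe_width: int = 1           # performance
    thin_provisioned: bool = True   # thin vs. reserved capacity

    def replicas(self) -> int:
        # Assumes mirroring: tolerating n failures needs n + 1 data copies.
        return self.failures_to_tolerate + 1


class PolicyStore:
    """Per-VM policies, falling back to a default when a VM has none."""

    def __init__(self, default: StoragePolicy) -> None:
        self.default = default
        self._per_vm: Dict[str, StoragePolicy] = {}

    def assign(self, vm: str, policy: StoragePolicy) -> None:
        # Policies can be (re)assigned at any time; this sketch only records
        # the change, whereas VSAN itself adapts the VM's storage to it.
        self._per_vm[vm] = policy

    def effective(self, vm: str) -> StoragePolicy:
        return self._per_vm.get(vm, self.default)


store = PolicyStore(default=StoragePolicy())
# "db01" suddenly needs extra protection, so it gets its own policy.
store.assign("db01", StoragePolicy(failures_to_tolerate=2, thin_provisioned=False))
print(store.effective("web01"))            # falls back to the default policy
print(store.effective("db01").replicas())  # 3
```

Only the VM that needs extra protection pays for it; every other VM keeps the default.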

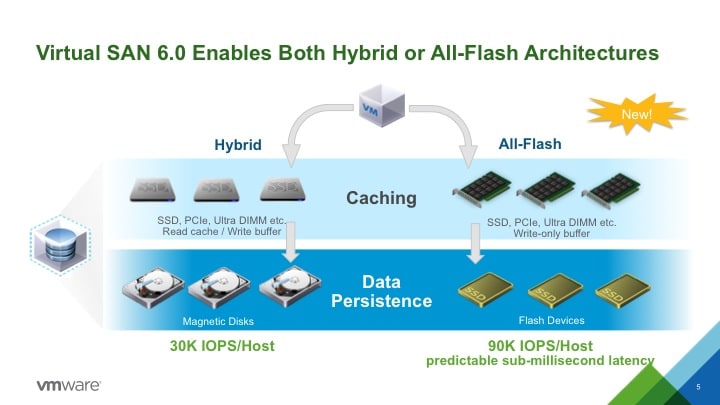

Historically, VSAN has operated in a hybrid configuration, using traditional magnetic drives for capacity and flash as a read cache to accelerate performance. To achieve the highest performance, VSAN 6.0 introduces an all-flash configuration. To keep the cost of this option down, the capacity role is played by cost-effective MLC drives. VSAN still requires a caching tier, but not for performance. Instead, the idea is to minimize the write workload on the capacity tier and extend its life, so it's important to consider how write-intensive the workload is.
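
One way to reason about the write nature of a workload is a drive-writes-per-day (DWPD) estimate for the capacity tier. The sketch below is illustrative only: the `destage_fraction` parameter (the share of writes that get past the cache tier), the even spread across drives, and all numbers in the example are hypothetical assumptions, not VSAN internals.

```python
# Rough endurance check for the capacity tier of an all-flash configuration.
# ASSUMPTIONS (hypothetical, for illustration only):
#   * every VM write is mirrored to `replicas` copies,
#   * the cache tier absorbs writes so that only `destage_fraction`
#     of them ever reach the capacity drives,
#   * destaged writes are spread evenly across the capacity drives.


def required_dwpd(daily_writes_gb: float, replicas: int, destage_fraction: float,
                  capacity_drives: int, drive_capacity_gb: float) -> float:
    """Drive writes per day (DWPD) each capacity drive must sustain."""
    if not 0.0 <= destage_fraction <= 1.0:
        raise ValueError("destage_fraction must be between 0 and 1")
    physical_writes_gb = daily_writes_gb * replicas * destage_fraction
    per_drive_gb = physical_writes_gb / capacity_drives
    return per_drive_gb / drive_capacity_gb


# Hypothetical workload: 2,000 GB/day of VM writes, two copies of each write,
# half of the writes reaching the capacity tier, 20 drives of 1,000 GB each.
need = required_dwpd(2000, 2, 0.5, 20, 1000)
rated = 0.3  # hypothetical endurance rating of the MLC capacity drives
print(f"{need:.2f} DWPD needed; rated {rated} -> {'OK' if need <= rated else 'too low'}")
```

A more write-heavy workload, or a cache that absorbs less of it (a larger `destage_fraction`), raises the endurance the capacity drives must provide.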

In an effort to make VSAN more useful with blade servers, VSAN 6.0 now has a High Density Direct Attached Storage option. I don't see this as a great option, since VMware still recommends that all servers in a VSAN cluster have the same storage configuration, which means every blade needs the same attached storage. That many external JBOD enclosures consumes a significant amount of blade chassis real estate, so this could be a very expensive solution.

VSAN 6.0 is now rack aware. By creating Fault Domains, typically one per rack with a minimum of three, you let VSAN intelligently distribute data across those racks. This helps protect against power failures, storage controller problems, and network failures.
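
Why three? Consider a simplified model of a mirrored object: to tolerate n failures it keeps n + 1 data replicas plus n witness components, each in a different fault domain, so losing any n racks still leaves a majority of components and at least one full replica. With one failure to tolerate, that is two replicas and a witness across three racks. The function and host names below are hypothetical, and VSAN's real placement logic is more involved than this naive "first host in each rack" choice.

```python
from typing import Dict, List, Tuple


def place_mirrored_object(fault_domains: Dict[str, List[str]],
                          failures_to_tolerate: int = 1) -> List[Tuple[str, str, str]]:
    """Return (role, fault_domain, host) for each component of one object.

    Simplified model: n + 1 replicas plus n witnesses, one per fault domain.
    """
    n = failures_to_tolerate
    needed = 2 * n + 1
    # Only fault domains that actually contain hosts can hold a component.
    usable = [fd for fd, hosts in sorted(fault_domains.items()) if hosts]
    if len(usable) < needed:
        raise ValueError(f"FTT={n} needs {needed} fault domains, found {len(usable)}")
    roles = ["replica"] * (n + 1) + ["witness"] * n
    # Naive choice: the first host in each of the first `needed` fault domains.
    return [(role, fd, fault_domains[fd][0]) for role, fd in zip(roles, usable)]


racks = {
    "rack-a": ["esx01", "esx02"],
    "rack-b": ["esx03"],
    "rack-c": ["esx04", "esx05"],
}
for role, fd, host in place_mirrored_object(racks):
    print(role, fd, host)
```

Because no two components share a rack, a power, controller, or network failure confined to one rack can take out at most one component.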

What's Next

Could the next version of VSAN be used in conjunction with an external storage array? In fact, Chuck Hollis discussed this on his blog more than a year ago. Now that we have an expanded Fault Domain, will the next step be a stretched VSAN cluster? I can see a future where VSAN is extended into vCloud Air to simplify disaster recovery. Noticeably missing from VSAN 6.0 is any type of data reduction technology or other advanced data services. With the recent developments in VSAN 6.0, it is clear VMware is heavily invested in the technology. I look forward to what the next release may bring.

About the Author

Mark May is a storage engineer in Cincinnati, OH. He has worked in Enterprise Storage and Backup for over 15 years. He is an EMC Elect, Cisco Champion, and avid technologist. In his free time he likes to help others understand the ins and outs of the ever-changing storage industry. He can be found online in a variety of places, but the two most likely are his personal blog and Twitter @cincystorage.
