At SC25, WEKA introduced the next generation of its WEKApod appliances, targeting organizations that need to scale AI and high-performance computing while keeping a tight handle on cost, power, and footprint. The new WEKApod Prime and WEKApod Nitro platforms are positioned to address a growing infrastructure efficiency crisis, in which GPU investments frequently outpace the storage and networking systems needed to keep them fully utilized.

The design goal for this generation is clear: remove the traditional trade-off between performance and cost while increasing density and efficiency so every rack unit and kilowatt delivers more usable AI capacity.

The Infrastructure Efficiency Crisis

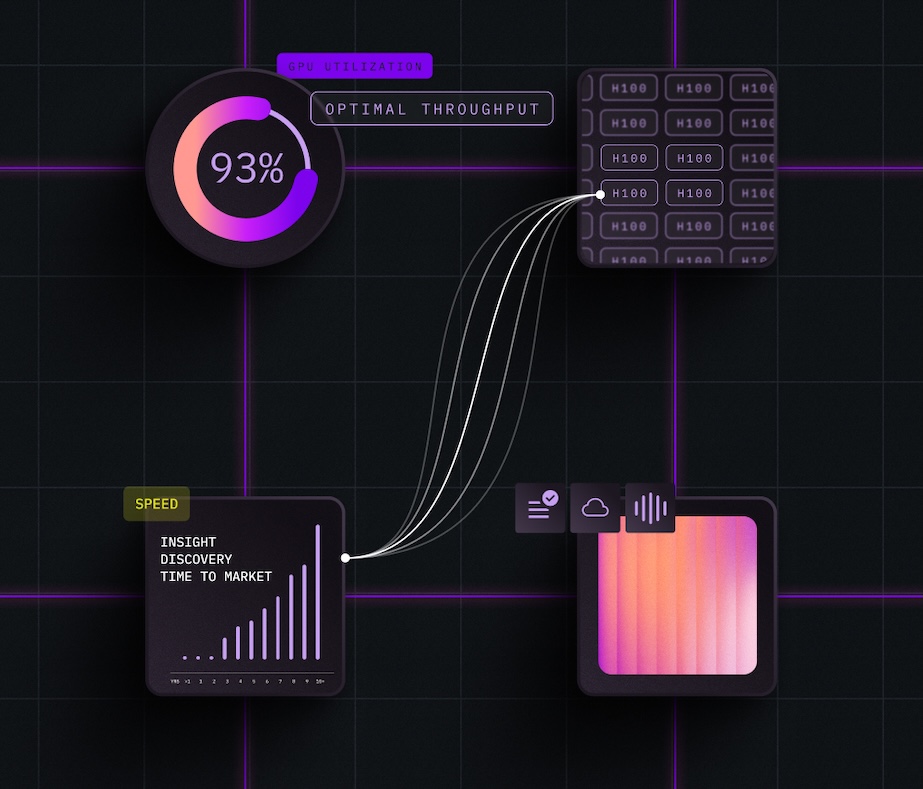

Enterprises and AI cloud providers are under pressure to demonstrate returns on rapidly growing AI infrastructure investments. Expensive GPUs often sit underutilized because of storage bottlenecks, extended training cycles lengthen time-to-value, inference costs erode margins, and cloud spending climbs as unstructured data volumes increase.

At the same time, data centers face hard limits in rack space, power delivery, and cooling capacity. Simply adding more hardware is not a sustainable strategy. Storage systems that require a choice between extreme performance and manageable cost no longer align with the economics of large-scale AI deployments. Every node in an AI factory must contribute to higher GPU utilization and lower cost per model trained and served.

The next-generation WEKApod family is designed to tackle this problem directly, combining dense, power-efficient hardware with WEKA’s NeuralMesh software stack to keep GPUs busy and infrastructure costs in check.

WEKApod Prime: Mixed-Flash Economics Without Performance Penalties

WEKApod Prime is positioned as the primary workhorse for organizations that want to improve storage economics without sacrificing performance. It delivers a reported 65% improvement in price-to-performance by intelligently placing data across a mix of flash media and optimizing writes based on workload characteristics.

Prime uses WEKA’s AlloyFlash technology to combine multiple drive classes into a single configuration. Instead of building a traditional multi-tier cache hierarchy with explicit data movement and the usual write penalties, AlloyFlash provides consistent performance across operations. Writes are designed to sustain full performance rather than being throttled under load, which is crucial for training checkpoints, logging, and other write-heavy AI workflows.

From a physical design perspective, WEKApod Prime supports up to 20 drives in a 1U server or 40 drives in a 2U form factor, enabling high capacity and performance density in limited space. WEKA reports 4.6 times higher capacity density than the previous generation, along with 5 times higher write IOPS per rack unit. Power efficiency is also a key focus: WEKA cites 4 times better power density, at approximately 23,000 IOPS per kW and around 1.6 PB per kW, with a 68% reduction in power consumption per terabyte.
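To make the per-kilowatt figures concrete, the following minimal Python sketch scales them to a storage power budget. It assumes the vendor-reported numbers scale linearly, which real deployments may not do. The function names and the 5 kW budget are hypothetical, chosen only for illustration.

```python
# Vendor-reported per-kilowatt figures quoted in the article.
IOPS_PER_KW = 23_000
PB_PER_KW = 1.6


def storage_envelope(power_budget_kw: float) -> dict:
    """Scale the per-kW figures linearly to a storage power budget.

    Linear scaling is an assumption made for illustration only.
    """
    return {
        "iops": IOPS_PER_KW * power_budget_kw,
        "capacity_pb": PB_PER_KW * power_budget_kw,
    }


def watts_per_tb() -> float:
    """Watts per terabyte implied by 1.6 PB per kW (1 PB = 1,000 TB)."""
    watts_per_kw = 1_000
    tb_per_kw = PB_PER_KW * 1_000
    return watts_per_kw / tb_per_kw


if __name__ == "__main__":
    budget_kw = 5.0  # hypothetical power budget reserved for storage
    env = storage_envelope(budget_kw)
    print(f"IOPS within {budget_kw} kW: {env['iops']:,.0f}")
    print(f"Capacity within {budget_kw} kW: {env['capacity_pb']:.1f} PB")
    print(f"Implied power per terabyte: {watts_per_tb():.3f} W/TB")
```

Read this way, 1.6 PB per kW works out to well under one watt per terabyte, which is the kind of number a capacity planner can compare directly against a rack's power allocation.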

For operators designing modern AI clusters, this translates into a storage layer that can keep pace with GPU throughput while fitting within existing power and space envelopes. For write-intensive jobs such as model training, checkpointing, and data preprocessing, the storage system is less likely to become the bottleneck that leaves accelerators idle.

Early adopters such as the Danish Centre for AI Innovation (DCAI) are using WEKApod Prime to achieve what they describe as hyperscaler-class throughput and efficiency within a compact footprint. DCAI highlights the ability to increase AI capacity per kilowatt and per square meter as a direct contributor to the economic viability of their infrastructure and to faster AI deployment for customers.

WEKApod Nitro: Performance Density for AI Factories

Where Prime focuses on price-performance and capacity efficiency, WEKApod Nitro is designed for AI factories running hundreds or thousands of GPUs that need maximum performance density. Nitro is built on updated hardware that delivers roughly 2x the performance and 60% better price-to-performance compared with the previous generation.

Nitro configurations include the NVIDIA ConnectX-8 SuperNIC, providing 800 Gb/s of network throughput in a 1U form factor populated with 20 TLC SSDs. This high-bandwidth design targets environments where network and storage must match the scale-out characteristics of GPU clusters to avoid bottlenecks during large-scale training and high-throughput inference.
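As a rough sizing aid, the quoted 800 Gb/s per 1U node can be converted into bytes per second and divided across the 20 drives. The Python sketch below does that arithmetic. It uses raw line rate only and ignores protocol, data protection, and software overhead, so it is an upper bound rather than a measured result. The helper names and the aggregate target in the usage example are hypothetical.

```python
import math

LINK_GBITS_PER_S = 800  # network throughput quoted per 1U Nitro node
DRIVES_PER_NODE = 20    # TLC SSDs quoted per 1U Nitro node


def node_gbytes_per_s(link_gbits: float = LINK_GBITS_PER_S) -> float:
    """Convert line rate from gigabits to gigabytes per second."""
    return link_gbits / 8


def per_drive_gbytes_per_s(
    link_gbits: float = LINK_GBITS_PER_S,
    drives: int = DRIVES_PER_NODE,
) -> float:
    """Network bandwidth available per drive if shared evenly."""
    return node_gbytes_per_s(link_gbits) / drives


def nodes_needed(target_gbytes_per_s: float) -> int:
    """Minimum node count to reach a target aggregate line rate."""
    return math.ceil(target_gbytes_per_s / node_gbytes_per_s())


if __name__ == "__main__":
    print(f"Per node: {node_gbytes_per_s():.0f} GB/s line rate")
    print(f"Per drive: {per_drive_gbytes_per_s():.0f} GB/s share")
    # Hypothetical aggregate target for a GPU cluster's data path.
    print(f"Nodes for 1,000 GB/s: {nodes_needed(1_000)}")
```

The even per-drive split is a simplification. It is useful mainly for checking that the network link, rather than the drive count, sets the ceiling on what a single node can deliver to the fabric.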

Both Prime and Nitro are available as turnkey NVIDIA DGX SuperPOD and NVIDIA Cloud Partner (NCP) certified appliances. For technical sales teams and solution architects, this is significant because it can compress deployment timelines. Instead of spending weeks on integration, tuning, and validation work, customers can consume WEKApod as a pre-validated component in DGX-based and NCP-aligned architectures, reducing time to production and accelerating time-to-revenue for new AI services.

NVIDIA positions its Spectrum-X Ethernet and ConnectX-8 networking as a core foundation of the WEKApod architecture. From a system design perspective, this reinforces the emphasis on removing data bottlenecks between storage, fabric, and GPU nodes to optimize overall AI performance.

NeuralMesh and Software-Defined Flexibility

Beneath the hardware platforms, WEKApod is powered by NeuralMesh, which WEKA describes as the first storage system purpose-built to accelerate AI at scale. NeuralMesh provides the software-defined storage layer, along with the enterprise features expected in modern environments.

WEKApod appliances expose NeuralMesh capabilities through ready-to-deploy, pre-validated configurations and an enhanced setup utility, designed to deliver a plug-and-play experience rather than a complex integration project. Organizations can begin with as few as eight servers and grow to hundreds as demand increases, without re-architecting the storage environment.

NeuralMesh includes core enterprise features such as distributed data protection, instant snapshots, multiprotocol access, automated tiering, encryption, and hybrid cloud support. This means AI-optimized performance and density do not come at the expense of data resilience, governance, or operational flexibility.

By combining these software capabilities with high-density hardware, WEKApod aims to serve not just as a high-performance file or object store but as a foundational data platform for AI factories, large-scale object storage, and AI data lake deployments.

Addressing Business Outcomes for Different Customer Segments

The business impact of the next-generation WEKApod family is framed differently for each target audience, but it centers on better infrastructure ROI and higher utilization of existing investments.

For AI cloud providers and emerging Neoclouds, the 65% improvement in price-to-performance is intended to translate directly into higher margins on GPU-as-a-service offerings. The ability to support more customers on the same infrastructure while maintaining performance SLAs improves profitability and capacity planning. The turnkey nature of WEKApod deployments also reduces onboarding time for new customers, enabling faster time-to-revenue.

For enterprises deploying internal AI factories, WEKApod is positioned as a way to compress deployment cycles from months to days. Turnkey integration, pre-validation, and enterprise features allow IT teams to stand up storage for AI workloads without deep, specialized expertise in parallel file systems or performance tuning. At the same time, improved density and efficiency can reduce power consumption per terabyte by up to 68% and avoid one-to-one scaling of the data center footprint as AI capabilities grow.

For AI builders and researchers, the key value proposition is higher GPU utilization and shorter training cycles. WEKApod’s design aims to support GPU utilization rates above 90% by ensuring that the storage layer and fabric are not bottlenecks. Faster model training and reduced iteration time provide a competitive advantage in environments where speed of experimentation drives business outcomes. Lower inference costs, supported by efficient storage and networking, can also make AI applications that would otherwise be cost-prohibitive at scale economically viable.

Ajay Singh, Chief Product Officer at WEKA, frames these updates as critical to making AI investments pay off. In his view, WEKApod Prime delivers significantly better price-to-performance without sacrificing throughput, while WEKApod Nitro increases performance density to better utilize GPUs. Collectively, this supports faster model development, higher inference throughput, and improved ROI on compute infrastructure.

The new WEKApod Prime and Nitro appliances position WEKA firmly in the AI and HPC storage market, targeting the intersection of high performance, density, and efficiency. Prime focuses on breaking the traditional link between performance and cost through AlloyFlash’s intelligent mixed-flash management, while Nitro targets GPU-rich AI factories with high-performance networking and compact form factors.

Together with the NeuralMesh software suite and ready-to-use, NVIDIA-certified configurations, WEKApod aims to solve both technical and business challenges: keeping GPUs engaged, managing data center power and space, and reducing the time needed to deliver AI-powered services to market. For technical sales teams and solution architects, the value proposition focuses on higher utilization, better margins, and faster deployment, supported by measurable gains in density, efficiency, and price-to-performance.
