The GIGABYTE R181-2A0 is a 1U server built around second-generation Intel Xeon Scalable processors. The second-gen Xeon CPUs bring the usual suspects to the server: Intel Optane DC Persistent Memory support, higher CPU frequencies and the performance that comes with them, faster DDR4 speeds, and Intel Deep Learning Boost. The server is very flexible and is aimed at application servers connected to shared storage, or perhaps HPC use cases.

From a hardware perspective, beyond the CPUs already mentioned, the server has 24 DIMM slots, three PCIe expansion slots, two OCP Gen3 mezzanine slots, and redundant 1200W 80 PLUS Platinum PSUs. For storage, GIGABYTE goes with SAS or SATA rather than putting NVMe in everything. Not everyone wants or needs NVMe, or the cost associated with it, and for those customers this server fits the bill.

Our particular build consists of two Intel Xeon Platinum 8280 CPUs, 384GB of memory, and a Memblaze PBlaze5 C926 add-in card NVMe SSD.

GIGABYTE R181-2A0 Specifications

| Specification | Details |
|---|---|
| Form Factor | 1U |
| Dimensions (WxHxD, mm) | 438 x 43.5 x 730 |
| Motherboard | MR91-FS0 |
| CPU | 2nd Generation Intel Xeon Scalable and Intel Xeon Scalable processors (Intel Xeon Platinum, Gold, Silver, and Bronze); CPU TDP up to 205W |
| Socket | 2 x LGA 3647 Socket P |
| Chipset | Intel C621 Express Chipset |
| Memory | 24 x DIMM slots; DDR4 memory supported only; 6-channel memory architecture; RDIMM modules up to 64GB supported; LRDIMM modules up to 128GB supported; supports Intel Optane DC Persistent Memory (DCPMM); 1.2V modules: 2933/2666/2400/2133 MHz |
| LAN | 2 x 1Gb/s LAN ports (Intel I350-AM2); 1 x 10/100/1000 management LAN |
| Storage | 10 x 2.5″ SATA/SAS hot-swappable HDD/SSD bays; default configuration supports 10 x SATA drives, or 2 x SATA drives plus 8 x SAS drives; a SAS card is required for SAS device support |
| SATA | 2 x 7-pin SATA III 6Gb/s with SATA DOM support (powered via pin 8 or an external cable) |
| SAS | Supported via add-on SAS card |
| RAID | Intel SATA RAID 0/1/10/5 |
| Expansion Slots | Riser card CRS1021: 2 x PCIe x8 slots (Gen3 x8), low profile, half-length; Riser card CRS1015: 1 x PCIe x16 slot (Gen3 x16), low profile, half-length; 2 x OCP mezzanine slots: PCIe Gen3 x16, Type1, P1, P2, P3, P4, K2, K3 |
| Internal I/O | 2 x power supply connectors; 4 x SlimSAS connectors; 2 x SATA 7-pin connectors; 2 x CPU fan headers; 1 x USB 3.0 header; 1 x TPM header; 1 x VROC connector; 1 x front panel header; 1 x HDD backplane board header; 1 x IPMB connector; 1 x Clear CMOS jumper; 1 x BIOS recovery jumper |
| Front I/O | 1 x USB 3.0; 1 x power button with LED; 1 x ID button with LED; 1 x reset button; 1 x NMI button; 2 x LAN activity LEDs; 1 x HDD activity LED; 1 x system status LED |
| Rear I/O | 2 x USB 3.0; 1 x VGA; 1 x COM (RJ45 type); 2 x RJ45; 1 x MLAN; 1 x ID button with LED |
| Backplane I/O | 10 x SATA/SAS ports, SATA III 6Gb/s or SAS 12Gb/s per port; 2 x U.2 ports (reserved), PCIe Gen3 x4 per port (reserved) |
| TPM | 1 x TPM header with LPC interface; optional TPM 2.0 kit: CTM000 |
| Power Supply | 2 x 1200W redundant PSUs, 80 PLUS Platinum; AC input: 100-240V~ / 12-7A, 50-60Hz; DC input: 240Vdc / 6A; DC output: max 1000W at 100-240V~ (+12V / 80.5A, +12Vsb / 3A), max 1200W at 200-240V~ or 240Vdc input (+12V / 97A, +12Vsb / 3A) |
| Weight | 13 kg |
| System Fans | 8 x 40x40x56mm (23,000 rpm) |
| Operating Properties | Operating temperature: 10°C to 35°C; operating humidity: 8-80% (non-condensing); non-operating temperature: -40°C to 60°C; non-operating humidity: 20-95% (non-condensing) |
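The PSU's DC output ratings can be sanity-checked by multiplying each rail's voltage by its current rating (P = V x I). A minimal Python sketch using only the +12V and +12Vsb figures from the power supply row; the helper name `rail_power_w` is illustrative:

```python
def rail_power_w(volts: float, amps: float) -> float:
    """Return the power a rail can deliver, P = V * I, in watts."""
    return volts * amps

# DC output ratings from the specification table: (volts, amps) per rail.
low_line = [(12.0, 80.5), (12.0, 3.0)]   # 100-240V~ input: +12V and +12Vsb
high_line = [(12.0, 97.0), (12.0, 3.0)]  # 200-240V~ or 240Vdc input

low_total = sum(rail_power_w(v, a) for v, a in low_line)
high_total = sum(rail_power_w(v, a) for v, a in high_line)

print(f"Low-line output:  {low_total:.0f} W (rated max 1000W)")
print(f"High-line output: {high_total:.0f} W (rated max 1200W)")
```

The rails add up to roughly 1002W and exactly 1200W, in line with the rated 1000W and 1200W maximums for each input range.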

GIGABYTE R181-2A0 Design and Build

As stated, the GIGABYTE R181-2A0 has a 1U form factor. Across the front of the server are ten 2.5” SATA/SAS drive bays. On the left are indicator lights, power button, ID button, Reset button, NMI button, and a USB 3.0 port.

The rear of the server has two PSUs on the left, a VGA port, two USB 3.0 ports, two RJ45 ports, a COM port, an MLAN port, an ID button, the three low profile expansion slots near the top, and the two OCP slots near the bottom.

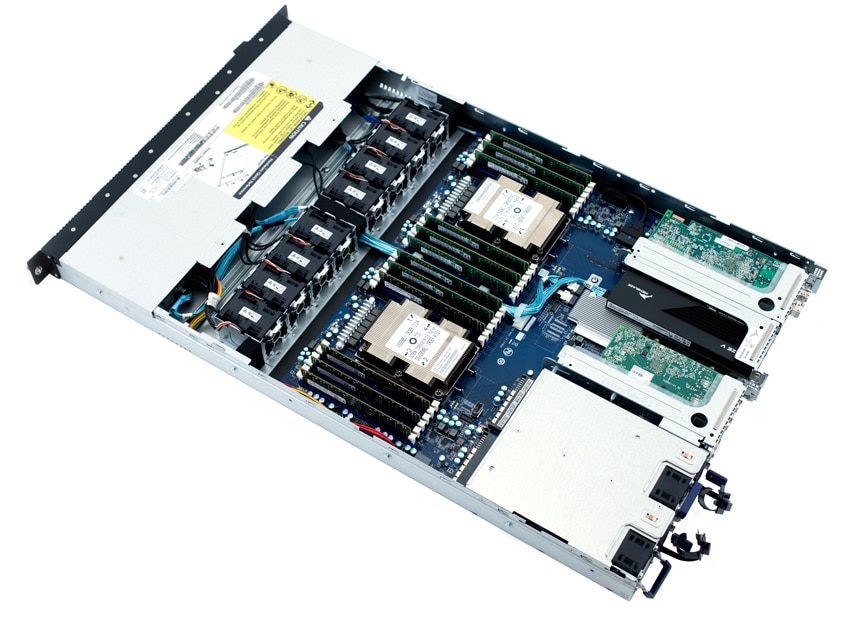

Opening up the server, we immediately see the CPUs and RAM in the middle of the chassis, which leaves the riser cards and expansion slots easy to access. We’ve installed a dual-port 16Gb FC HBA to connect to shared storage, a Mellanox ConnectX-4 dual-port 25GbE NIC, and our Memblaze PBlaze5 SSD.

Management

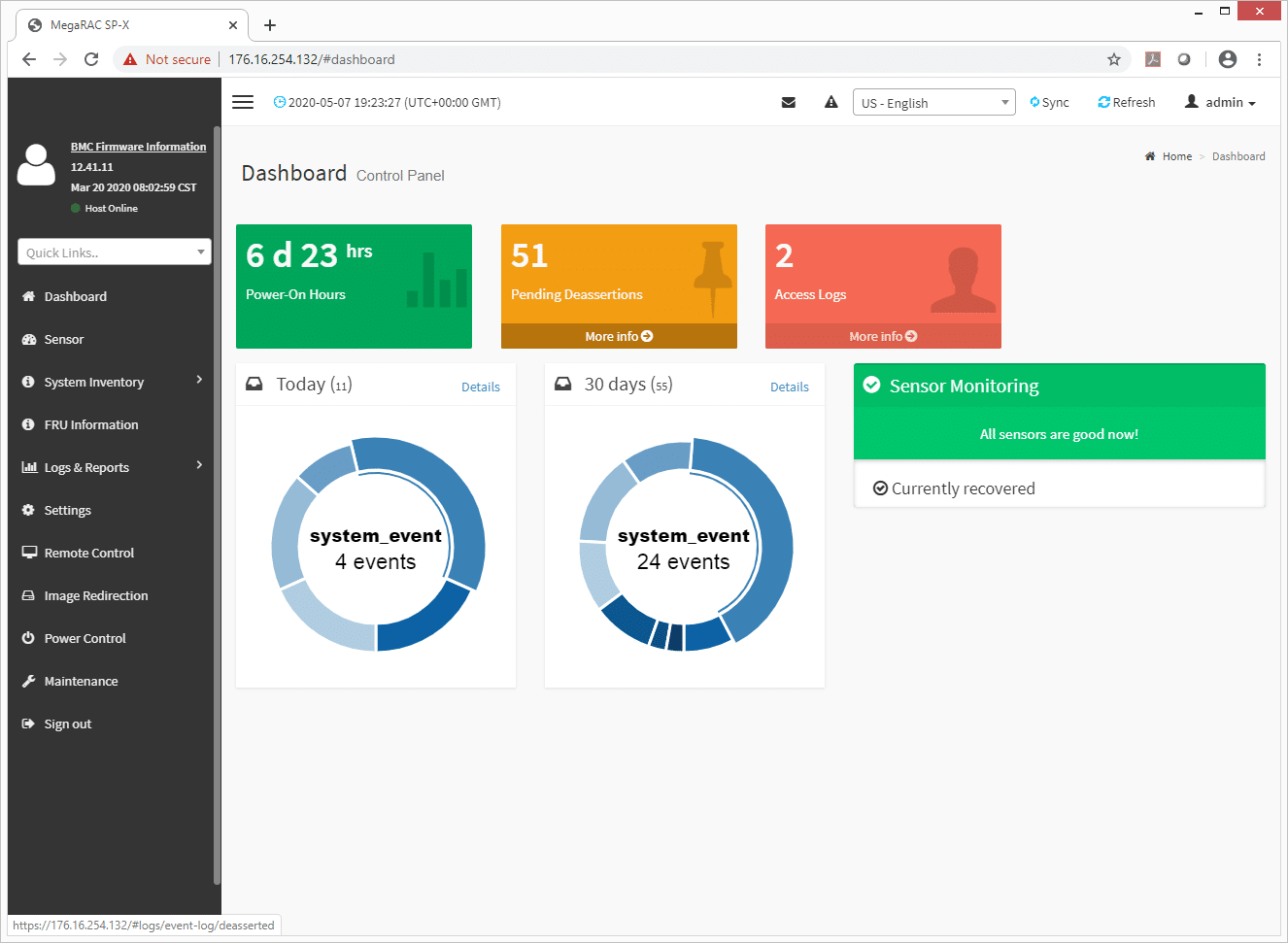

The GIGABYTE R181-2A0 has its own GSM remote management software but can also leverage the AMI MegaRAC SP-X platform for BMC server management. We will be using MegaRAC for this review. For a more in-depth look at AMI MegaRAC SP-X in a GIGABYTE server, check out our GIGABYTE R272-Z32 AMD EPYC Rome server review.

From the main management screen, one can view quick stats on the landing page and see several main tabs running down the left side, including: Dashboard, Sensor, System Inventory, FRU Information, Logs & Reports, Settings, Remote Control, Image Redirection, Power Control, and Maintenance. The first page is the dashboard. Here one can easily see the BMC uptime, pending deassertions, access logs and the number of open issues, sensor monitoring, and the drive slots along with how many events they’ve logged over the last 24 hours and the last 30 days.

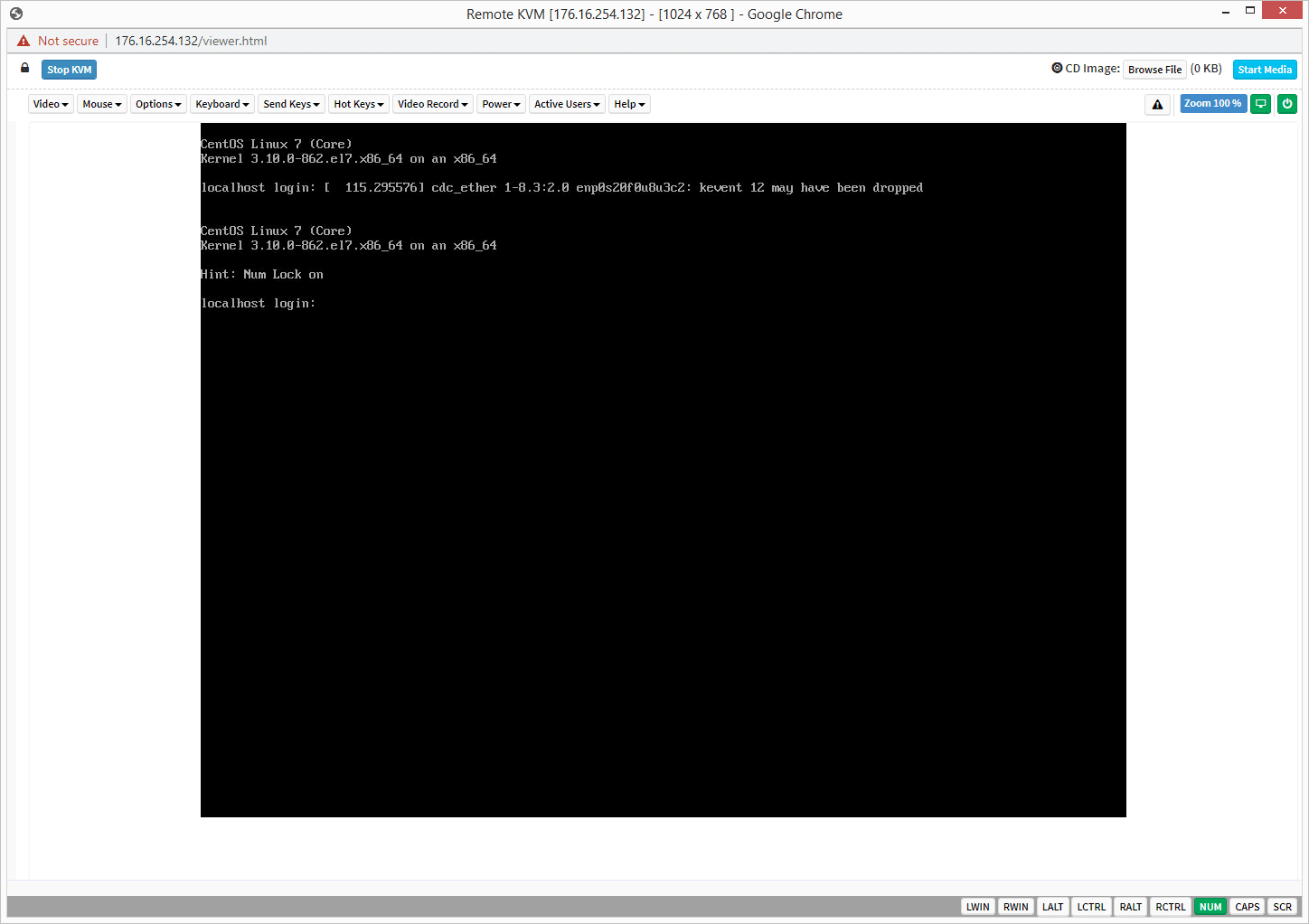

Once launched, the remote console gives users remote access to the server OS, which in our example shows a Linux loading screen. Remote console windows are an invaluable tool in a datacenter, giving you local control without having to haul over a crash cart with a monitor, keyboard, and mouse. Visible in the top right of the window is the CD image feature, which lets you mount ISOs from your local system so they are accessible on the server for loading software.

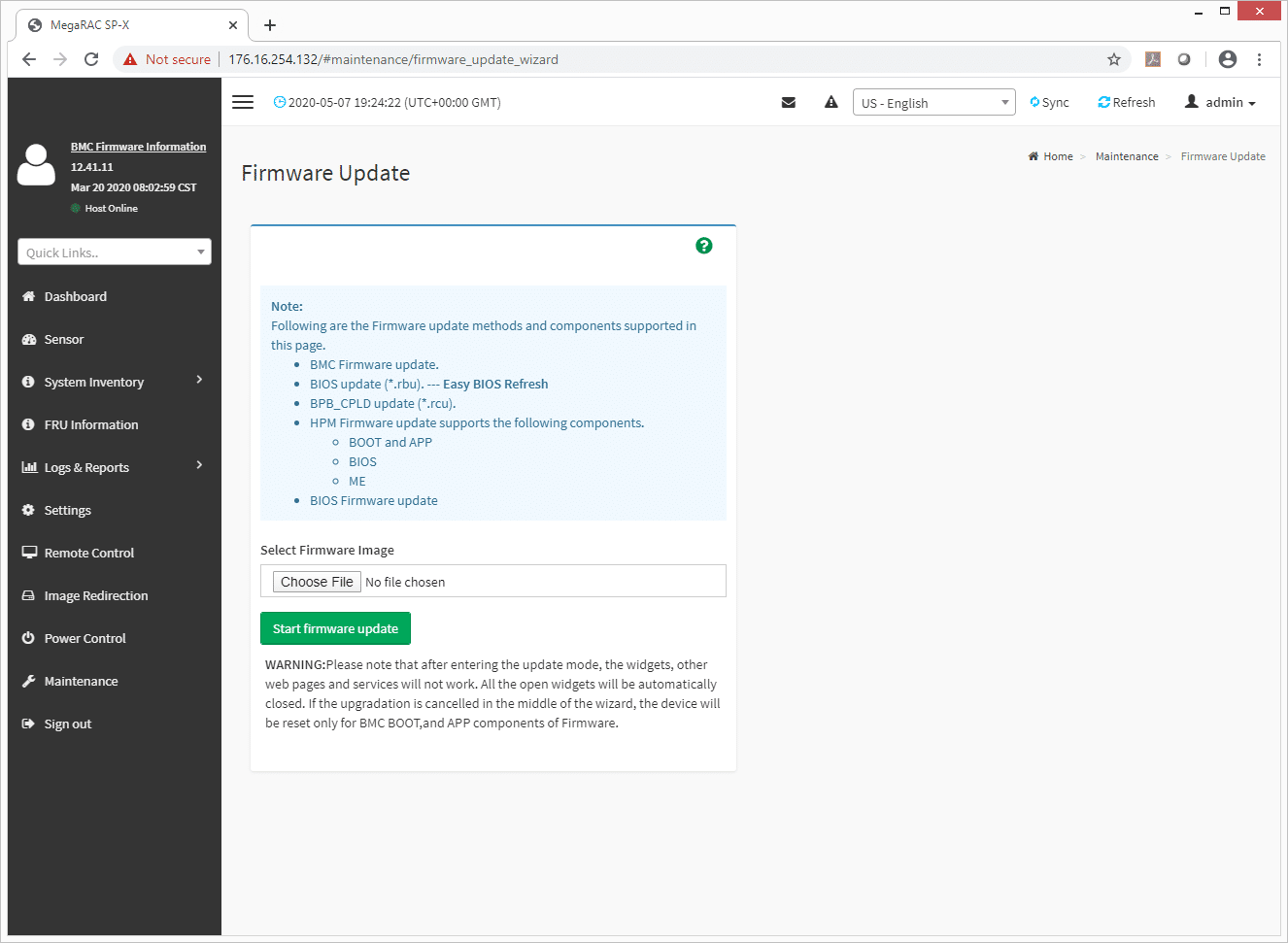

Through the Maintenance tab, users can find BIOS and firmware information.

GIGABYTE R181-2A0 Performance

GIGABYTE R181-2A0 Configuration:

- 2 x Intel Xeon Platinum 8280 CPUs (28-core, 2.7GHz)

- 12 x 32GB 2933MHz, 6 DIMMs per CPU

- 1 x 6.4TB Memblaze PBlaze5 C926 NVMe SSD

- VMware ESXi 6.7u3

- CentOS 7 (1908)
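This build populates 6 DIMMs per CPU, one on each of the six memory channels per socket. A short Python sketch of the resulting capacity and theoretical peak DDR4 bandwidth, assuming a 64-bit (8-byte) data path per channel and the 2933 MT/s rating; real sustained bandwidth will be lower than this ceiling:

```python
CHANNELS_PER_SOCKET = 6      # 6-channel memory architecture per CPU
SOCKETS = 2
DIMM_GB = 32                 # 12 x 32GB in this build
TRANSFER_RATE_MTS = 2933     # DDR4-2933, megatransfers per second
BYTES_PER_TRANSFER = 8       # assumed 64-bit data bus per channel (ECC bits excluded)

dimms = CHANNELS_PER_SOCKET * SOCKETS  # one DIMM per channel
capacity_gb = dimms * DIMM_GB

# Theoretical peak = channels * transfer rate * bytes per transfer (MB/s -> GB/s).
per_socket_gbs = CHANNELS_PER_SOCKET * TRANSFER_RATE_MTS * BYTES_PER_TRANSFER / 1000
total_gbs = per_socket_gbs * SOCKETS

print(f"{dimms} DIMMs, {capacity_gb} GB total")
print(f"Peak bandwidth: {per_socket_gbs:.1f} GB/s per socket, {total_gbs:.1f} GB/s total")
```

One DIMM per channel is the balanced layout here: every channel is populated, so each socket can use its full six-channel width.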

SQL Server Performance

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments.

Each SQL Server VM is configured with two vDisks: a 100GB volume for boot and a 500GB volume for the database and log files. From a system resource perspective, we configured each VM with 16 vCPUs and 64GB of DRAM and leveraged the LSI Logic SAS SCSI controller. While our Sysbench workloads saturate the platform in both storage I/O and capacity, the SQL test looks at latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs and is stressed by Dell’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across the server.

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

  - 2.5 hours preconditioning

  - 30 minutes sample period

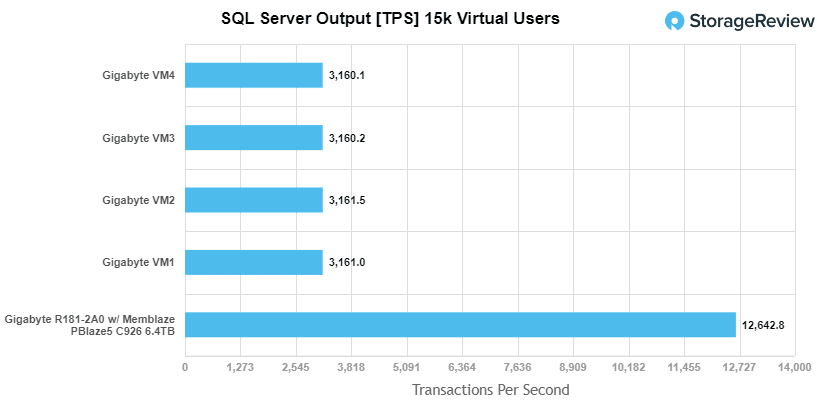

For our transactional SQL Server benchmark, the GIGABYTE had an aggregate score of 12,643.8 TPS with individual VMs ranging from 3,160.1 TPS to 3,161.5 TPS.
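Because the aggregate TPS is the sum of the per-VM results, the reported minimum and maximum per-VM scores bound how many VMs could have produced it. A small Python check (the helper `vm_count_bounds` is hypothetical, not part of our test harness) shows the SQL Server figures are consistent with the four databases described above:

```python
import math

def vm_count_bounds(aggregate: float, vm_min: float, vm_max: float) -> range:
    """Return every VM count n for which n * vm_min <= aggregate <= n * vm_max."""
    lowest = math.ceil(aggregate / vm_max)    # fewest VMs: all at the maximum
    highest = math.floor(aggregate / vm_min)  # most VMs: all at the minimum
    return range(lowest, highest + 1)

counts = vm_count_bounds(12_643.8, 3_160.1, 3_161.5)
print(list(counts))  # → [4]
print(f"Mean per-VM TPS: {12_643.8 / 4:.2f}")
```

Applied to the Sysbench results (11,096.4 TPS aggregate, 1,375 to 1,422.72 TPS per VM), the same check returns `[8]`.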

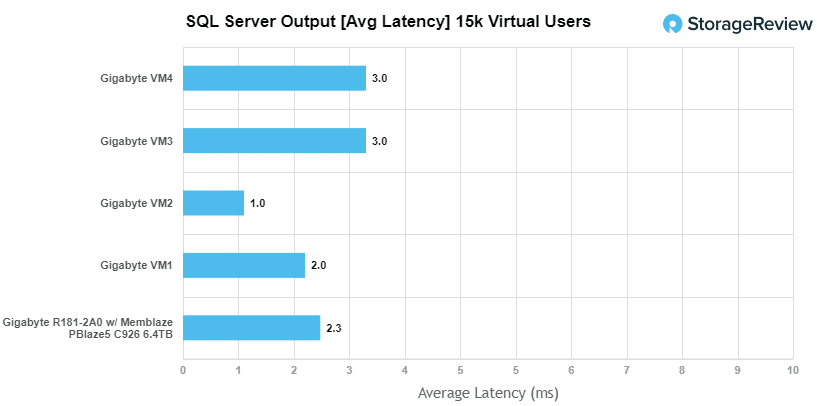

For SQL Server average latency the server had an aggregate score of 2.3ms with VMs ranging from 1ms to 3ms.

Sysbench MySQL Performance

Our next local-storage application benchmark consists of a Percona MySQL OLTP database measured via SysBench. This test measures average TPS (Transactions Per Second), average latency, and average 99th percentile latency.

Each Sysbench VM is configured with three vDisks: one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM and leveraged the LSI Logic SAS SCSI controller.

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

  - 2 hours preconditioning 32 threads

  - 1 hour 32 threads

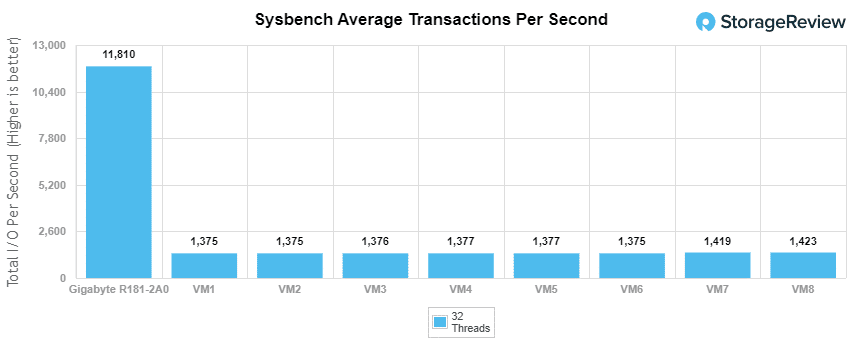

With Sysbench OLTP, the GIGABYTE R181-2A0 had an aggregate score of 11,096.4 TPS, with individual VMs running from 1,375 TPS to 1,422.72 TPS.

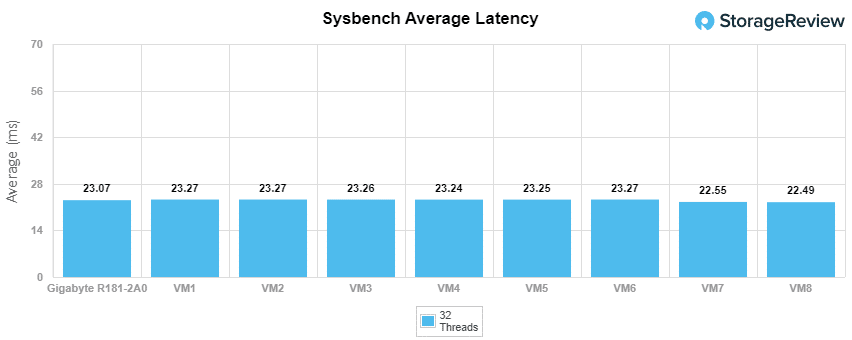

For Sysbench average latency the server had an aggregate score of 23.1ms with individual VMs running from 22.5ms to 23.3ms.
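Since each Sysbench VM runs a fixed 32 threads, Little's Law (average concurrency = throughput x average latency) ties per-VM TPS to average latency: latency is roughly threads / TPS. A minimal Python sketch, assuming a closed loop where each thread keeps exactly one transaction in flight with no think time, reproduces the reported per-VM latency range from the per-VM TPS range:

```python
THREADS_PER_VM = 32  # "Database Threads: 32" from the test configuration

def littles_law_latency_ms(threads: int, tps: float) -> float:
    """Average latency W = L / lambda, converted from seconds to milliseconds."""
    return threads / tps * 1000

for tps in (1_375.0, 1_422.72):
    latency = littles_law_latency_ms(THREADS_PER_VM, tps)
    print(f"{tps:,.2f} TPS -> {latency:.1f} ms")
```

The slowest VM (1,375 TPS) works out to about 23.3ms and the fastest (1,422.72 TPS) to about 22.5ms, matching the measured range.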

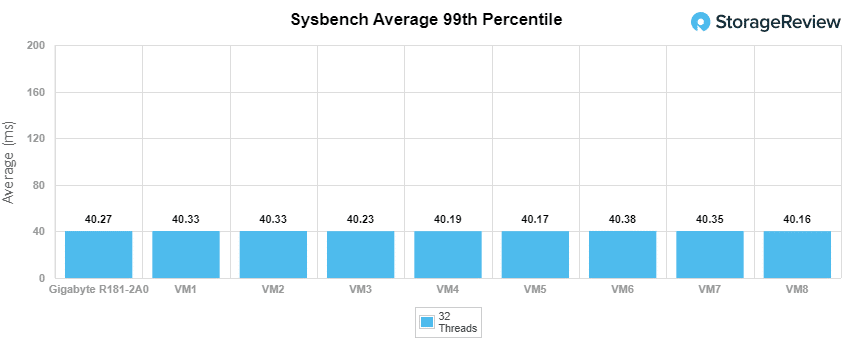

For our worst-case scenario latency (99th percentile) the GIGABYTE had an aggregate score of 40.3ms with individual VMs running from 40.2ms to 40.4ms.

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces

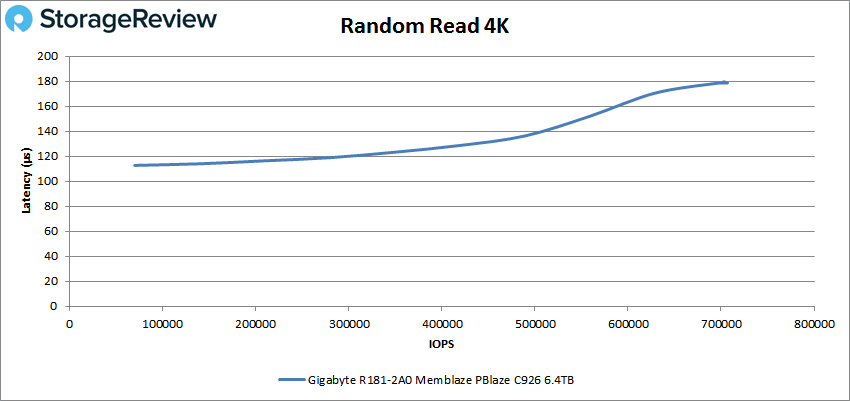

With 4K random read, the GIGABYTE R181-2A0 peaked at 706,664 IOPS with a latency of 178.6µs.

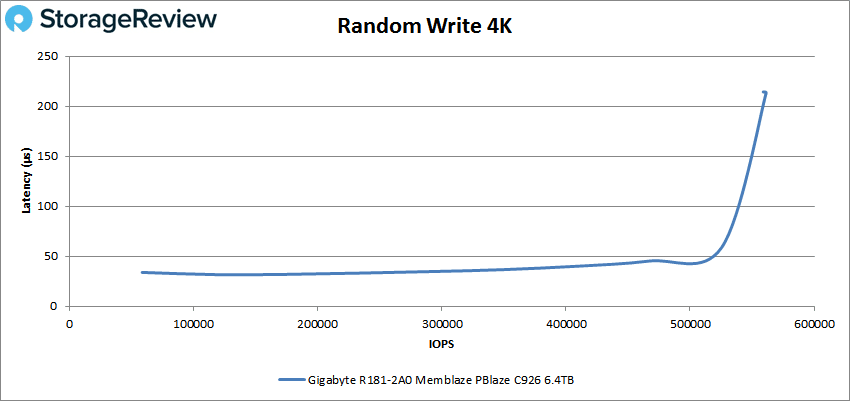

For 4K random write, the server started at 58,406 IOPS at only 33.9µs and stayed below 100µs until near its peak of 561,280 IOPS at 213.3µs.

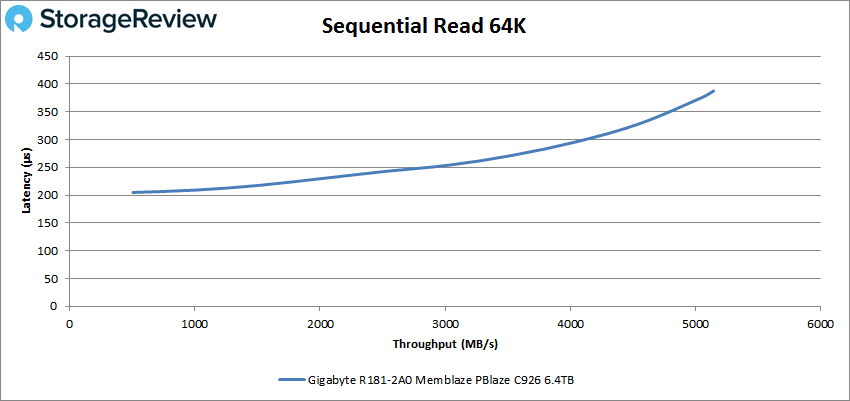

Next up are the sequential workloads, where we looked at 64K. For 64K read, the GIGABYTE peaked at 82,271 IOPS, or 5.1GB/s, at a latency of 387.4µs.

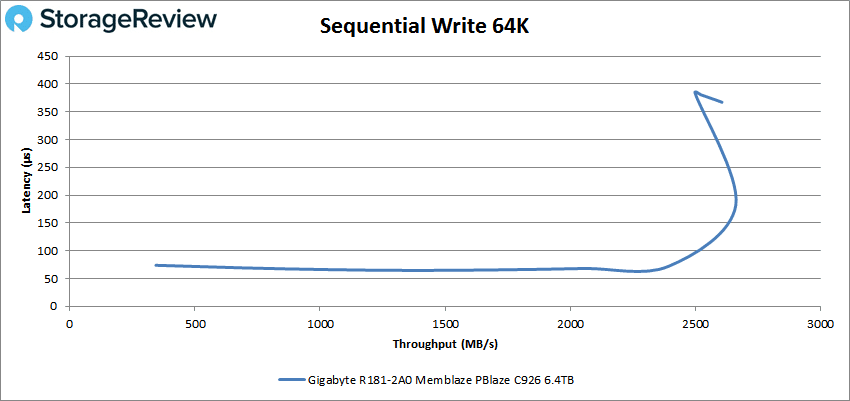

64K sequential writes saw the server hit about 43K IOPS, or roughly 2.7GB/s, at 182µs latency before dropping off some.
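Sequential throughput follows directly from IOPS x transfer size. A Python sketch, assuming 64K means 64 KiB (65,536 bytes) and that the GB/s figures come from vdBench-style MB/s with 1 MB = 1,048,576 bytes, then divided by 1,000. This convention is an assumption, but it reproduces both reported numbers:

```python
BLOCK_BYTES = 64 * 1024  # 64K transfer size, assumed to be 64 KiB
MIB = 1024 * 1024        # assumed MB/s unit (2^20 bytes)

def throughput_gbs(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Convert IOPS to GB/s as (MiB/s) / 1000, the assumed reporting convention."""
    mib_per_s = iops * block_bytes / MIB
    return mib_per_s / 1000

print(f"64K read:  {throughput_gbs(82_271):.1f} GB/s")  # 82,271 IOPS peak
print(f"64K write: {throughput_gbs(43_000):.1f} GB/s")  # about 43K IOPS
```

Using strictly decimal units instead (10^9 bytes) would give about 5.4GB/s and 2.8GB/s for the same IOPS, so the unit convention matters when comparing against other results.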

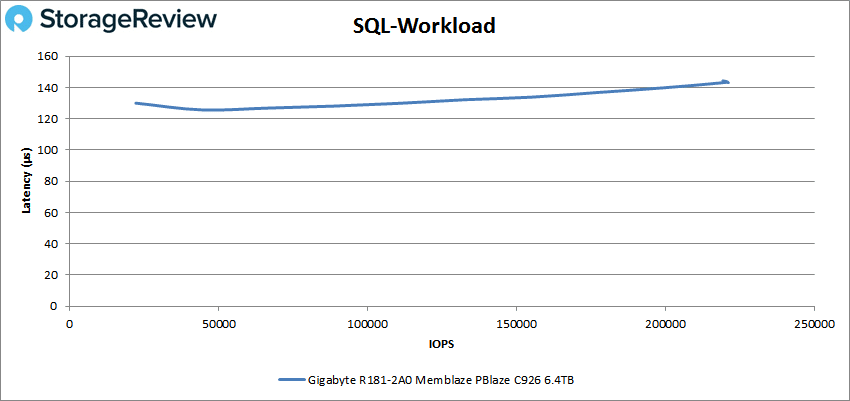

Our next set of tests are our SQL workloads: SQL, SQL 90-10, and SQL 80-20. Starting with SQL, the server peaked at 220,712 IOPS with a latency of 143µs.

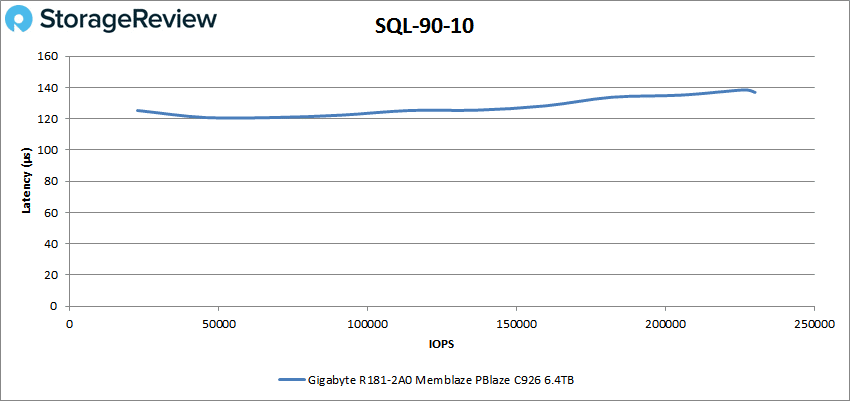

For SQL 90-10 we saw a peak performance of 230,152 IOPS at a latency of 137µs.

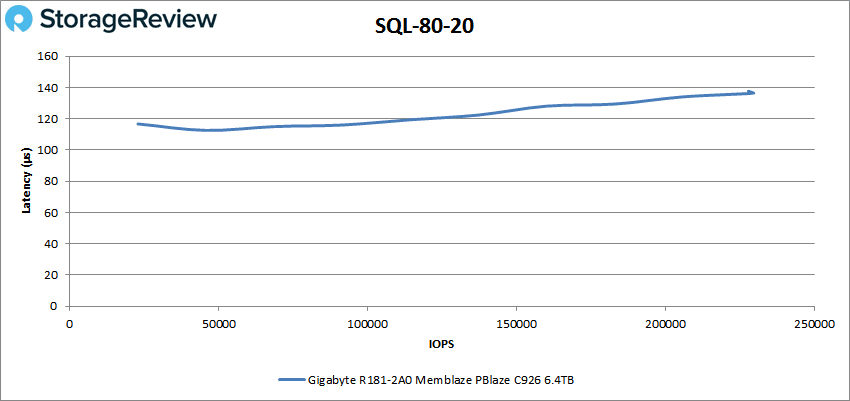

SQL 80-20 continued the strong performance with a peak of 229,724 IOPS with 136µs for latency.

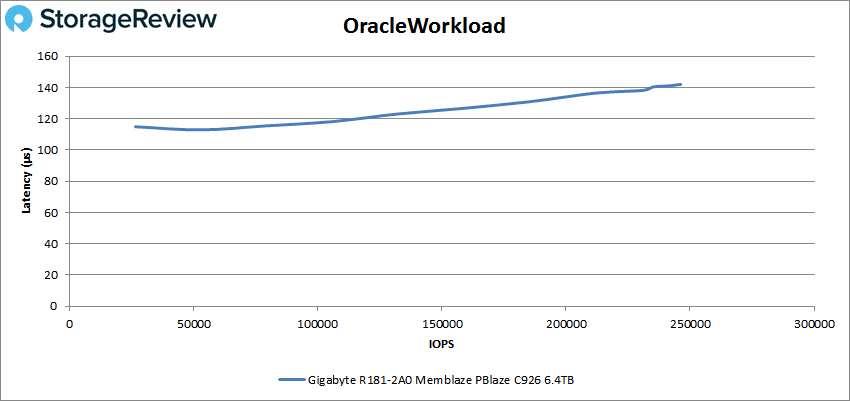

Next up are our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. Starting with Oracle, the server peaked at 246,191 IOPS with a latency of 142µs.

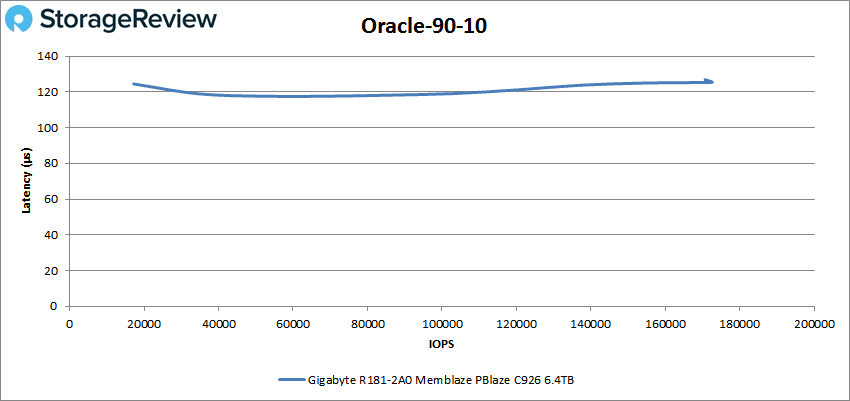

With Oracle 90-10 the GIGABYTE was able to hit 172,642 IOPS with latency at 125.5µs.

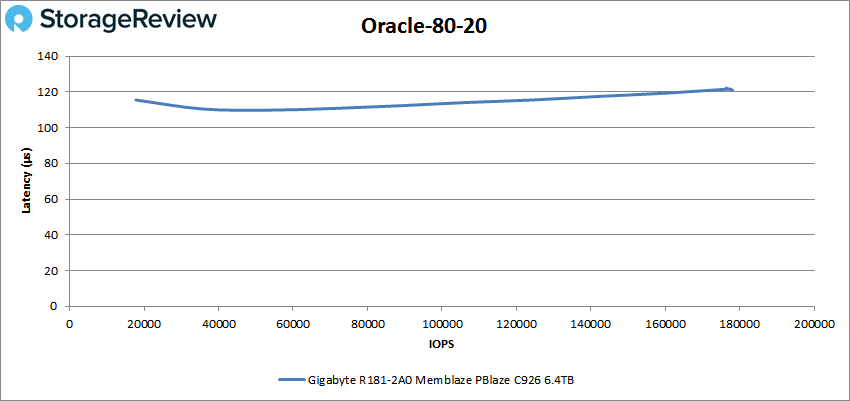

Oracle 80-20, the last Oracle benchmark, saw the server hit a peak of 178,108 IOPS at 121µs for latency.

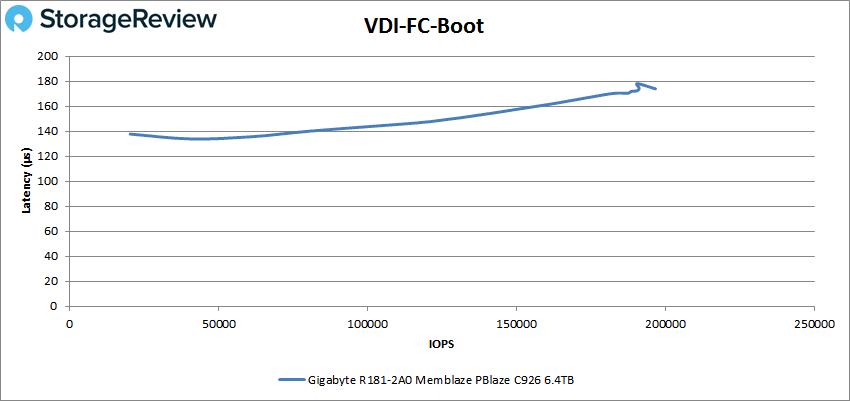

Next, we switched over to our VDI clone tests, Full and Linked. For VDI Full Clone (FC) Boot, the GIGABYTE R181-2A0 peaked at 196,719 IOPS with a latency of 174µs.

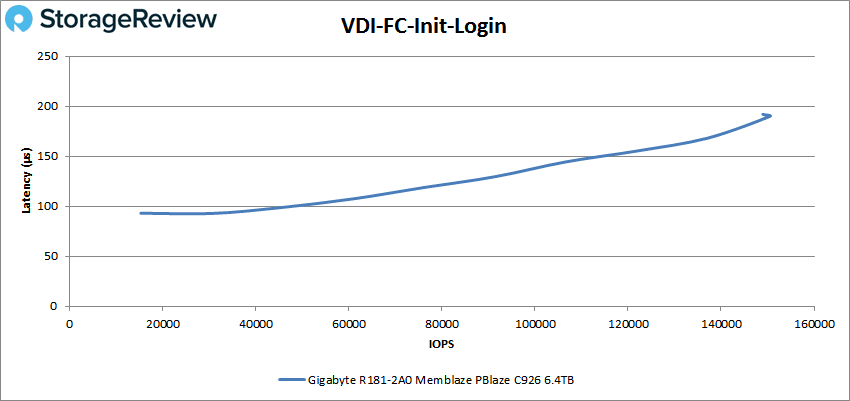

For VDI FC Initial Login the server peaked at 150,518 IOPS with 190µs for latency.

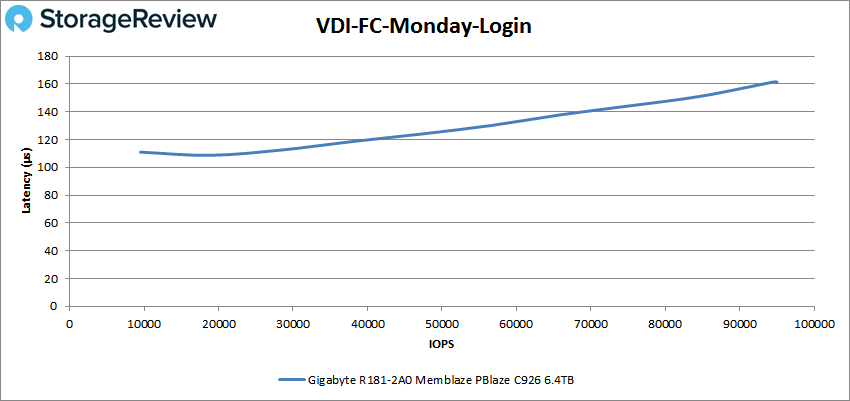

VDI FC Monday Login saw the server peak at 94,813 IOPS with a latency of 161.3µs.

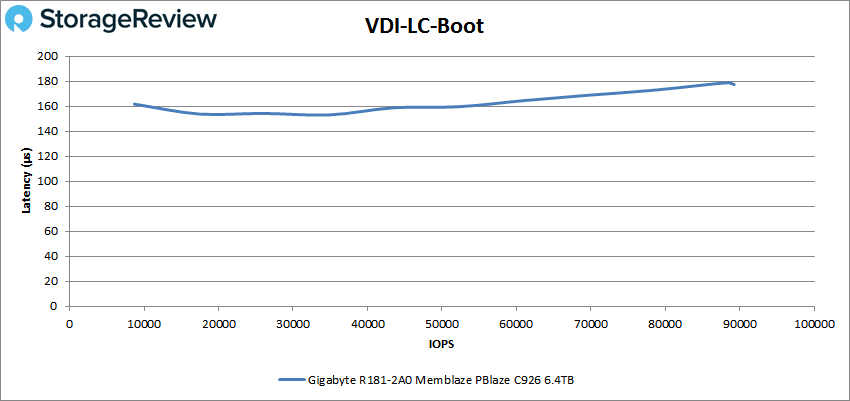

Switching to VDI Linked Clone (LC) Boot, the GIGABYTE was able to hit 89,269 IOPS with a latency of 177.4µs.

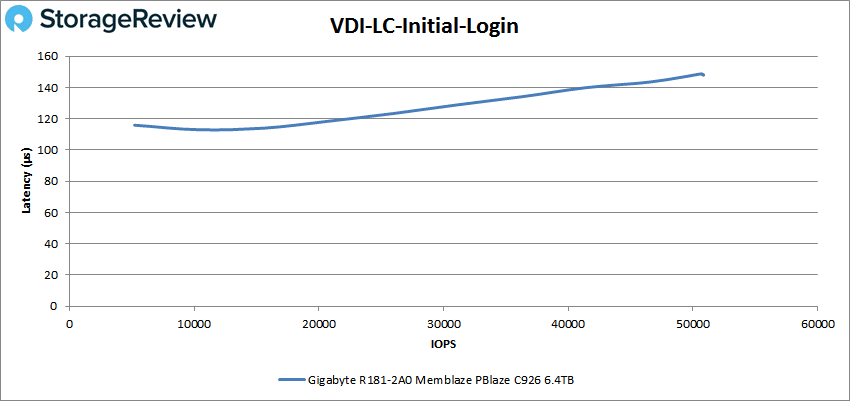

With VDI LC Initial Login the server hit 50,860 IOPS at 148µs.

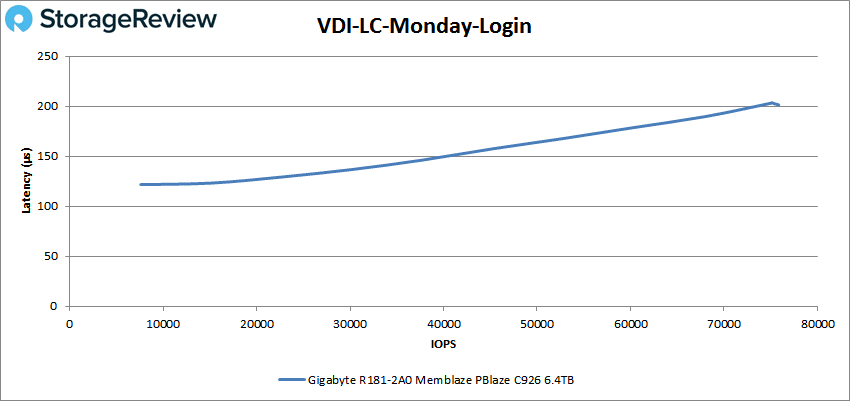

Finally, VDI LC Monday Login saw the GIGABYTE hit 75,850 IOPS at a latency of 201.3µs.

Conclusion

The GIGABYTE R181-2A0 is another server built around Intel’s second-generation Xeon Scalable processors and all the benefits those CPUs provide. Beyond being Intel Xeon Scalable-centric, this 1U server is very flexible. Though only 1U, the server can house two Intel Xeon Scalable CPUs, 24 DIMMs of RAM, and ten 2.5” drive bays for SATA or SAS drives, and it has three PCIe expansion slots and two OCP mezzanine slots. The server is aimed at being an application workhorse connected to shared storage, or perhaps HPC use cases.

For performance, we ran both our Application Workload Analysis and our VDBench tests. While the front drive bays don’t support NVMe, the expansion slots do, so we added an NVMe add-in card to maximize the server’s performance potential. In our SQL Server benchmark, the server had an aggregate score of 12,643.8 TPS and an average latency of 2.3ms. For Sysbench, we saw an aggregate score of 11,096.4 TPS, an average latency of 23.1ms, and a worst-case scenario latency of 40.3ms.

For VDBench, the small server continued to post fairly good numbers, with highlights being 707K IOPS for 4K read, 561K IOPS for 4K write, 5.1GB/s for 64K read, and 2.7GB/s for 64K write. In our SQL tests, the R181-2A0 was able to hit peaks of 221K IOPS, 230K IOPS in SQL 90-10, and 230K IOPS in SQL 80-20. For Oracle, the numbers were 246K IOPS, 173K IOPS in Oracle 90-10, and 178K IOPS in Oracle 80-20. In our VDI clone tests, the numbers began to fade somewhat but stayed above 100K IOPS in VDI FC Boot (197K IOPS) and VDI FC Initial Login (151K IOPS).

Those looking for plenty of flexibility in a compact form factor need look no further than the GIGABYTE R181-2A0.
