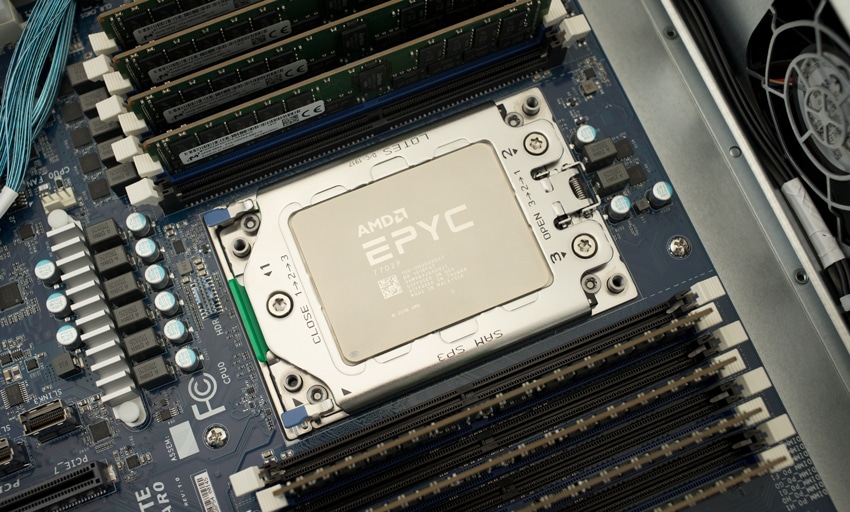

As AMD rolled out its new EPYC 7002 "Rome" CPU series today, several vendors announced servers that support the new technology, including GIGABYTE. In fact, GIGABYTE has released an entire series of rack servers that support EPYC Rome: the R-Series. The R-Series is a general-purpose server family with a balance of resources, offered in both 1U and 2U with a variety of storage media combinations. For this review we will be looking at the GIGABYTE R272-Z32, sporting 24 U.2 NVMe bays.

On the hardware side, the server is built on GIGABYTE's MZ32-AR0 motherboard for EPYC Rome. The motherboard fits a single AMD EPYC 7002 SoC and 16 DIMM slots for DDR4 memory. The server has 24 hot-swappable NVMe bays in front, plus two rear bays for SATA SSDs or HDDs. For expansion, the motherboard offers seven PCIe expansion slots and one Mezzanine connector, giving customers room to grow or add the accessories they need. As configured, however, the NVMe bays consume most of the PCIe slots, as well as the Mezzanine slot, to supply PCIe lanes to the front backplane. That leaves customers three PCIe slots for true expansion.

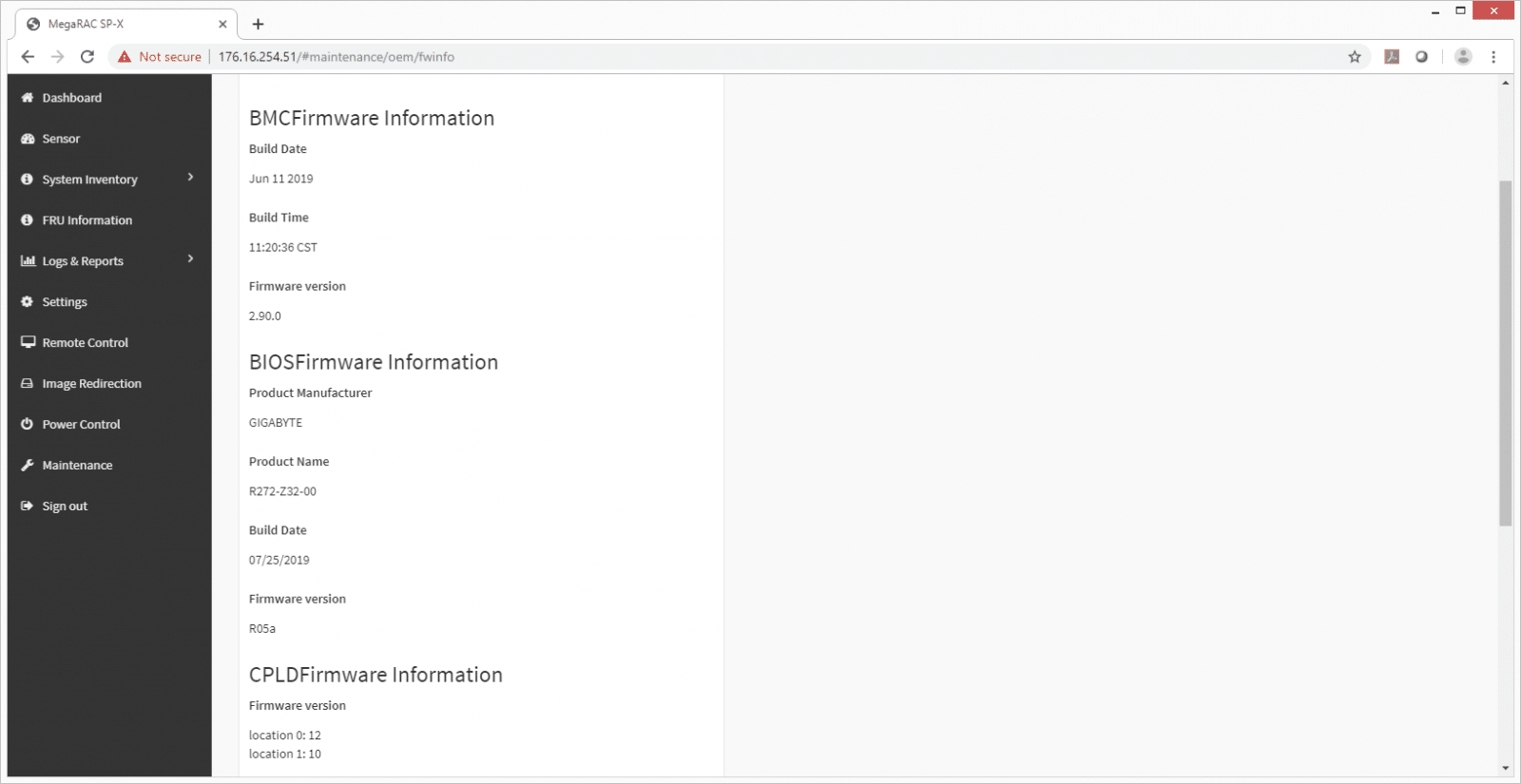

Like all GIGABYTE servers, the R272-Z32 uses GIGABYTE Server Management (GSM) for remote management. The server can also leverage the AMI MegaRAC SP-X platform for BMC server management. This intuitive, feature-rich, browser-based GUI includes several notable features, such as RESTful API support, HTML5-based iKVM, detailed FRU information, pre-event automatic video recording, and SAS/RAID controller monitoring.

For our build we used the AMD EPYC 7702P CPU, eight 32GB Micron DDR4-3200 modules (256GB total), and twelve 3.84TB Micron 9300 Pro SSDs.
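As a rough sanity check on this memory configuration, the sketch below computes the installed capacity and the theoretical peak bandwidth of DDR4-3200. It assumes, beyond what the review states, that the EPYC 7002 provides eight 64-bit DDR4 channels, so the 16 slots work out to two per channel and eight DIMMs place one in each channel. The results are theoretical ceilings, not measured figures.

```python
# Assumptions (not stated in the review): 8 DDR4 channels per EPYC 7002 socket,
# each 64 bits (8 bytes) wide, with the 8 DIMMs populated one per channel.
CHANNELS = 8
BYTES_PER_TRANSFER = 8  # one 64-bit channel moves 8 bytes per transfer

def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS) -> float:
    """Theoretical peak in GB/s (10^9 bytes/s); sustained throughput is lower."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9

dimms, dimm_gb = 8, 32
print(f"Installed capacity: {dimms * dimm_gb} GB")
print(f"Per channel at DDR4-3200: {peak_bandwidth_gbs(3200, 1):.1f} GB/s")
print(f"All channels at DDR4-3200: {peak_bandwidth_gbs(3200):.1f} GB/s")
```

Populating every channel before adding a second DIMM per channel is the usual way to expose the full channel count; the sketch simply multiplies it out.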

GIGABYTE R272-Z32 Server Key Specifications

| Specification | Details |
|---|---|
| CPU | AMD EPYC 7002 |
| Form factor | 2U |
| Motherboard | EATX MZ32-AR0 |
| Memory | 16 x DIMM slots |
| Drive bays (front) | 24 x 2.5" hot-swap U.2 NVMe SSD |
| Drive bays (rear) | 2 x 2.5" hot-swap HDD |
| Expansion slots | 7 x low-profile PCIe slots, 1 x mezzanine |
| Slot 7 | PCIe x16 slot @ Gen4 x16, s/w with 4 x Slim-SAS 4i from 4 x U.2 |
| Slot 6 | PCIe x16 slot @ Gen4 x16 |
| Slot 5 | PCIe x16 slot @ Gen4 x8 |
| Slot 4 | PCIe x16 slot @ Gen4 x16 |
| Slot 3 | PCIe x16 slot @ Gen4 x16 |
| Slot 2 | PCIe x8 slot @ Gen3 x8 |
| Slot 1 | PCIe x16 slot @ Gen3 x16 |
| Mezzanine | @ Gen3 x16 (Type 1: P1, P2, P3, P4; Type 2: P5 with NCSI support) |
| Backplane | U.2 HDD Backplane (CBP20O5+CEPM080x3) |
| I/O connectors (rear) | 1 x VGA, 1 x COM, 2 x 1G LAN, 1 x MLAN, 3 x USB 3.0, 1 x ID button |
| I/O connectors (internal) | 1 x COM, 1 x TPM, 1 x USB 3.0 (2 ports), 1 x USB 2.0 (2 ports) |
| Power supply | Redundant 1200W 80+ Platinum |
| System cooling | 4 x 8cm easy-swap counter-rotating fans |
| Dimensions | 87.5 x 438 x 660 mm |

GIGABYTE R272-Z32 Design and Build

Starting at the front, we will work our way through the inside to the back of the server and detail its features. The front of the server has 24 2.5-inch U.2 NVMe bays, two USB 3.0 ports, a power button, a recessed reset button, and an ID button. The ID button is useful in a datacenter because it lights an LED visible from both the front and back of the server. In a room with dozens of servers, the ID indicator helps you identify the machine you are working on.

On the inside we have 16 DDR4 slots and seven PCIe slots on a single-processor motherboard. Slots 3 through 7 are PCIe Gen4, which doubles per-lane bandwidth over the previous generation; Slots 1 and 2 and the mezzanine connector run at Gen3. Because each NVMe bay needs its own PCIe connection to the motherboard, our configuration includes five daughter boards to supply the NVMe bays with connectivity. For user customization there are three open PCIe slots, all low-profile. Of the three open slots, one is mechanically and electrically x8. The other two are mechanically x16; one is x8 electrically and the other x16 electrically. With the PCIe cabling in place, airflow to these cards could be limited, so they are better suited to networking cards, which have a lower LFM (linear feet per minute) airflow requirement than a GPU that needs additional cooling. Closer to the front of the server is a row of four chassis fans that are hot-swappable in the field.
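The lane arithmetic behind the daughter boards, and the Gen3 versus Gen4 difference, can be sketched as follows. This assumes each U.2 bay gets a dedicated x4 link and uses the nominal signaling rates (8 GT/s for Gen3, 16 GT/s for Gen4, both with 128b/130b encoding). It ignores packet and protocol overhead, so real throughput is somewhat lower than these numbers.

```python
# Assumptions: one x4 link per U.2 bay; nominal PCIe signaling rates;
# 128b/130b line encoding for both Gen3 and Gen4; protocol overhead ignored.
GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING = 128 / 130

def link_gbs(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s."""
    return GT_PER_S[gen] * ENCODING * lanes / 8  # bits per second -> bytes

def lanes_for_bays(bays: int, lanes_per_bay: int = 4) -> int:
    """PCIe lanes needed to give every NVMe bay its own link."""
    return bays * lanes_per_bay

needed = lanes_for_bays(24)
print(f"Lanes for 24 U.2 bays: {needed} ({needed // 16} x16 links)")
for gen in (3, 4):
    print(f"Gen{gen}: x4 = {link_gbs(gen, 4):.2f} GB/s, "
          f"x16 = {link_gbs(gen, 16):.2f} GB/s")
```

Ninety-six lanes is six full x16 links, which is why the front bays tie up most of the slot and mezzanine bandwidth in this configuration.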

Finally, the back of the server is fairly standard as servers go. There are three USB 3.0 ports, two 1GbE ports, one management port, an ID switch, a serial port, a VGA port, two SATA bays, and two 1200-watt power supplies. While the front NVMe bays hold expensive, high-performance storage, the rear SATA bays provide low-cost, high-capacity storage for boot drives. Matching the front is a corresponding ID button on the back. It's nice to see the serial port still hanging on in a next-generation platform for the legacy products that still rely on it.

GIGABYTE R272-Z32 Management

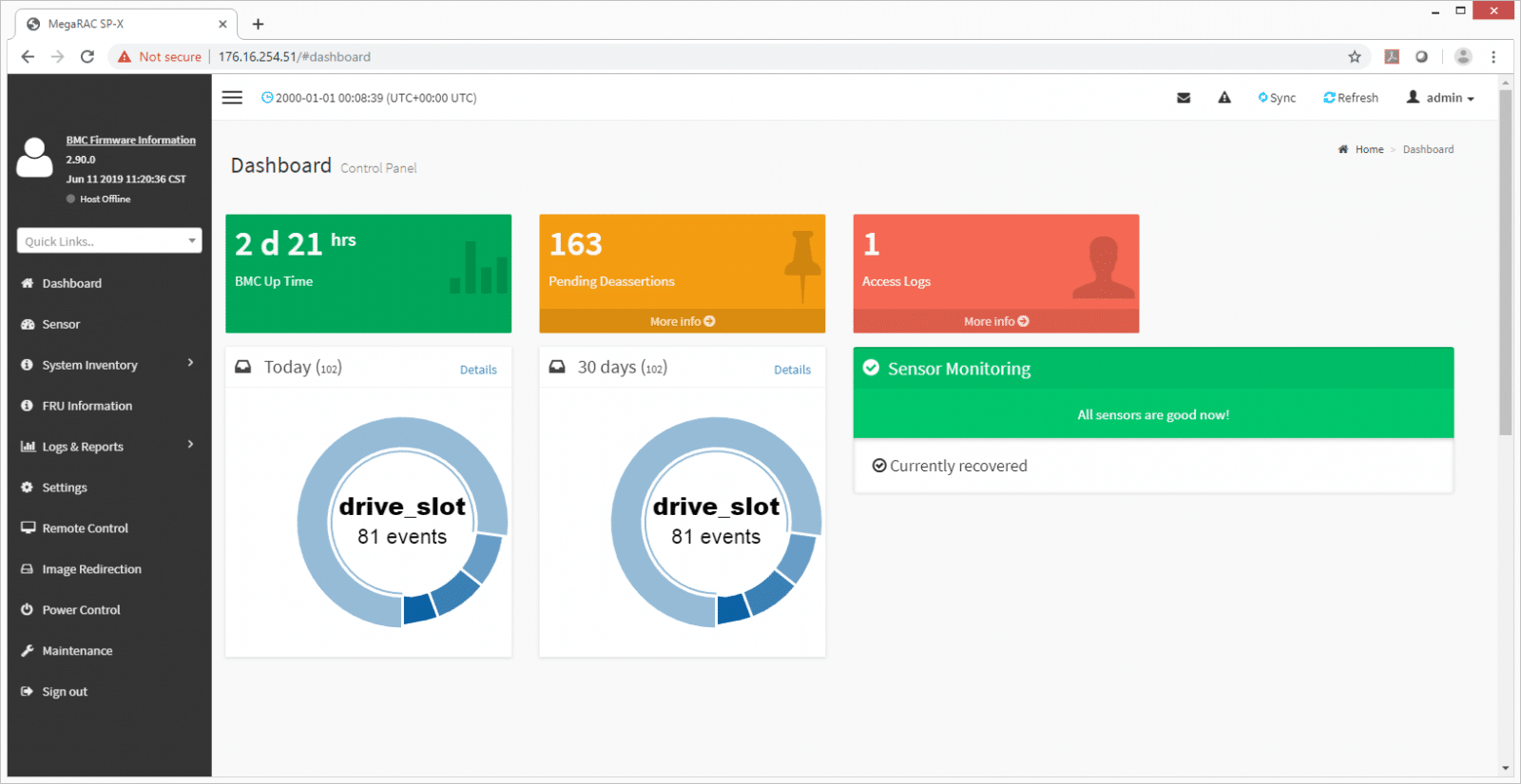

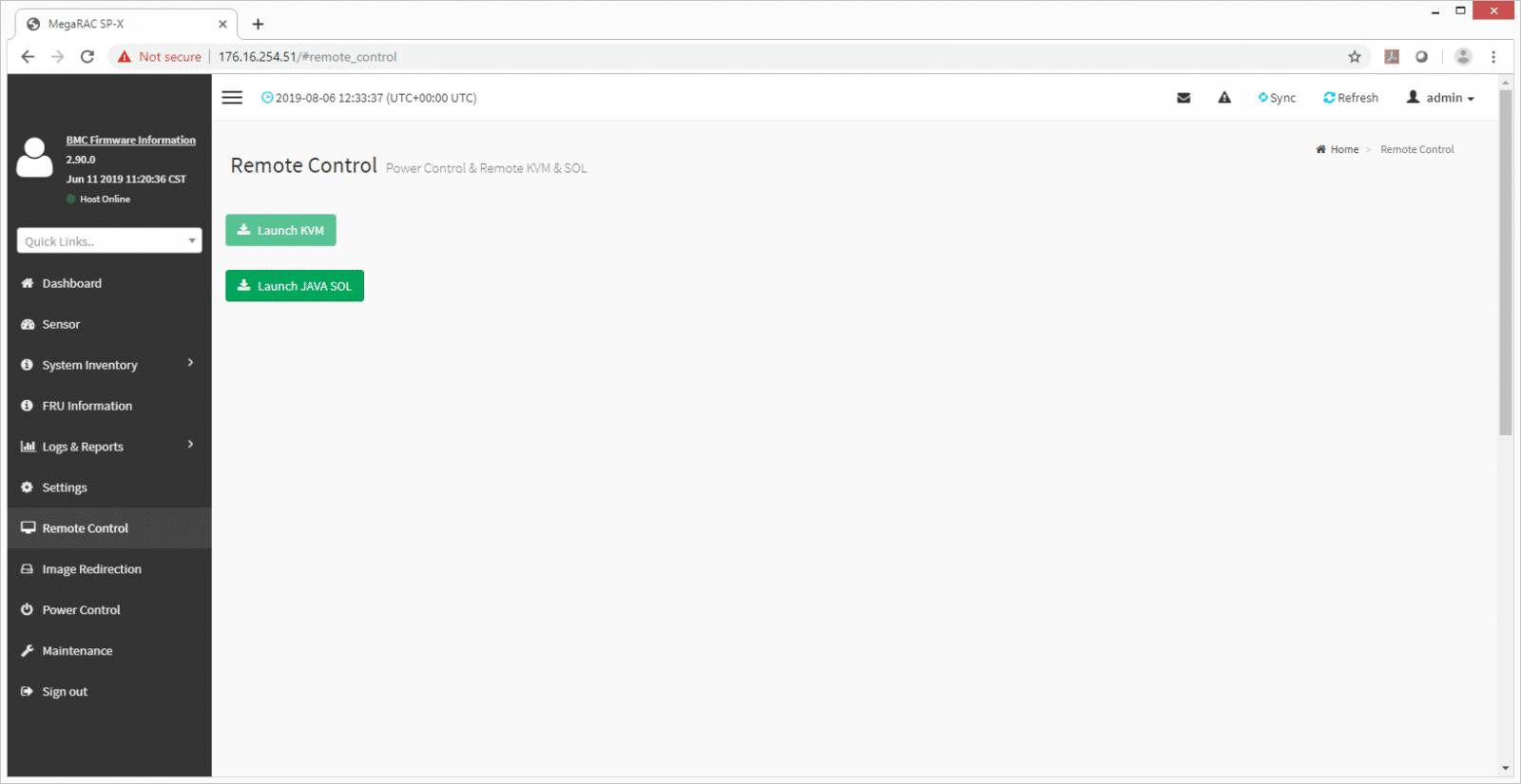

As stated, the GIGABYTE R272-Z32 has its own GSM remote management software but can also use the AMI MegaRAC SP-X platform for BMC server management. We will be using MegaRAC for this review, looking at two components of the interface: the management screen with its associated landing pages, and the remote console pop-up window for server OS management and loading software.

From the main management screen, one can view quick stats on the landing page, with the main tabs running down the left side: Dashboard, Sensor, System Inventory, FRU Information, Logs & Reports, Settings, Remote Control, Image Redirection, Power Control, and Maintenance. The first page is the Dashboard. Here one can easily see the BMC uptime, pending assertions, access logs and the number of open issues, sensor monitoring, and the drive slots along with how many events they have logged over the last 24 hours and the last 30 days.

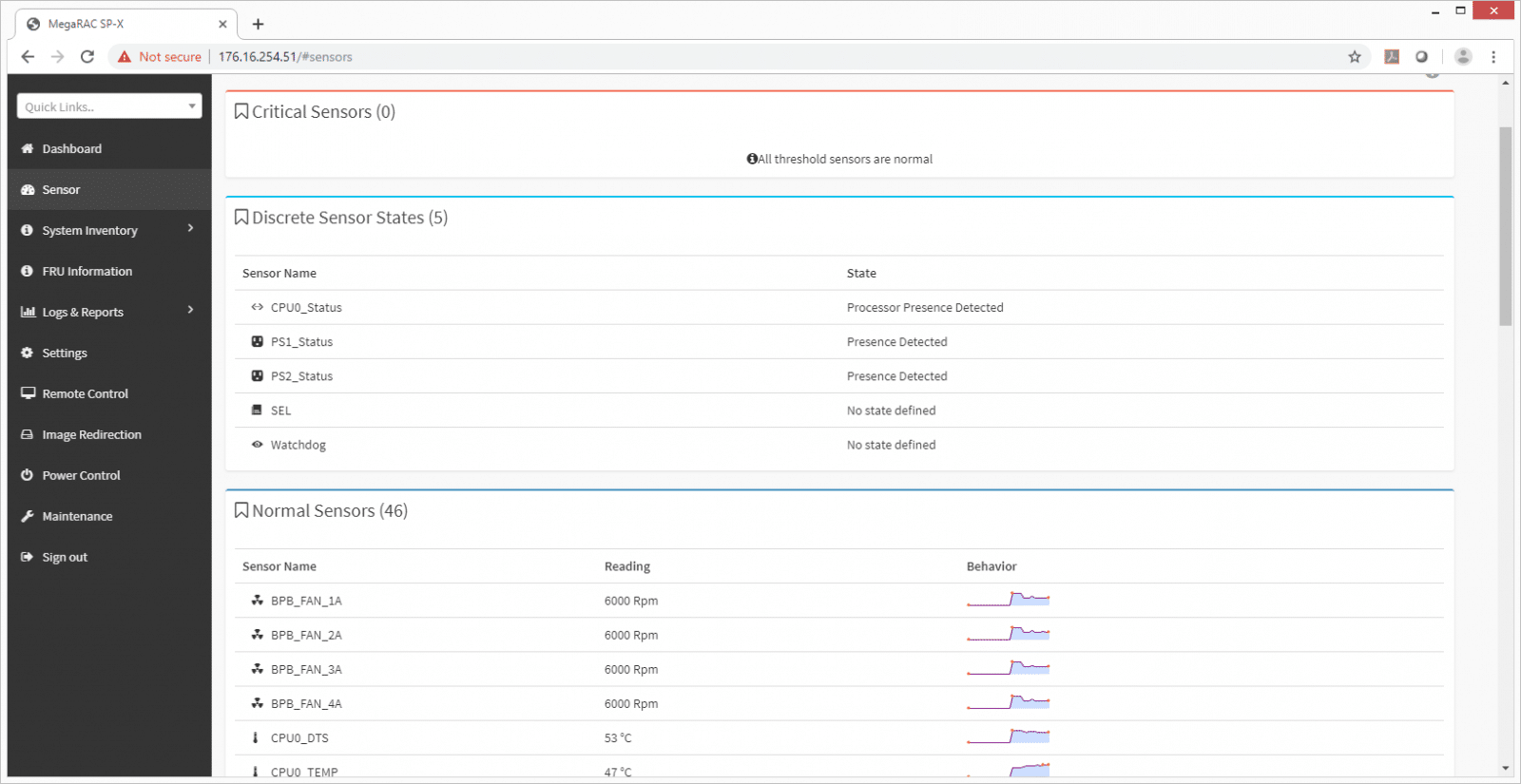

Clicking on Sensor, users can quickly see the discrete sensors and their current states. Users can also see the normal sensors along with their current readings and behavior (e.g., a fan's RPM and when it came on).
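The same sensor data is typically reachable programmatically through the RESTful API mentioned earlier. The sketch below is hypothetical: it assumes the BMC implements the DMTF Redfish standard and returns a Thermal resource (for example from a path like `/redfish/v1/Chassis/<id>/Thermal`), which the review does not confirm. It only parses a payload, so it runs without a live BMC; the fan names and readings in `sample` are invented for illustration.

```python
import json

# Hypothetical helper: assumes a Redfish-style Thermal payload from the BMC.
# The review only states that MegaRAC SP-X offers a RESTful API.
def fan_report(thermal_json: str, min_rpm: int = 1000) -> list[tuple[str, int, bool]]:
    """Return (name, rpm, ok) for each fan listed in a Thermal payload."""
    data = json.loads(thermal_json)
    report = []
    for fan in data.get("Fans", []):
        # Newer schema versions use Name/Reading; older ones FanName/ReadingRPM.
        name = fan.get("Name") or fan.get("FanName", "unknown")
        rpm = fan.get("Reading", fan.get("ReadingRPM")) or 0
        health = fan.get("Status", {}).get("Health", "OK")
        # A fan is flagged if it spins too slowly or the BMC reports bad health.
        report.append((name, rpm, rpm >= min_rpm and health == "OK"))
    return report

# Made-up sample payload standing in for a real BMC response.
sample = json.dumps({"Fans": [
    {"Name": "FAN_1", "Reading": 5400, "ReadingUnits": "RPM", "Status": {"Health": "OK"}},
    {"Name": "FAN_2", "Reading": 0, "ReadingUnits": "RPM", "Status": {"Health": "Critical"}},
]})

for name, rpm, ok in fan_report(sample):
    print(f"{name}: {rpm} RPM {'OK' if ok else 'CHECK'}")
```

Fetching the payload itself (with authentication) is left out, since resource paths and credentials vary by firmware.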

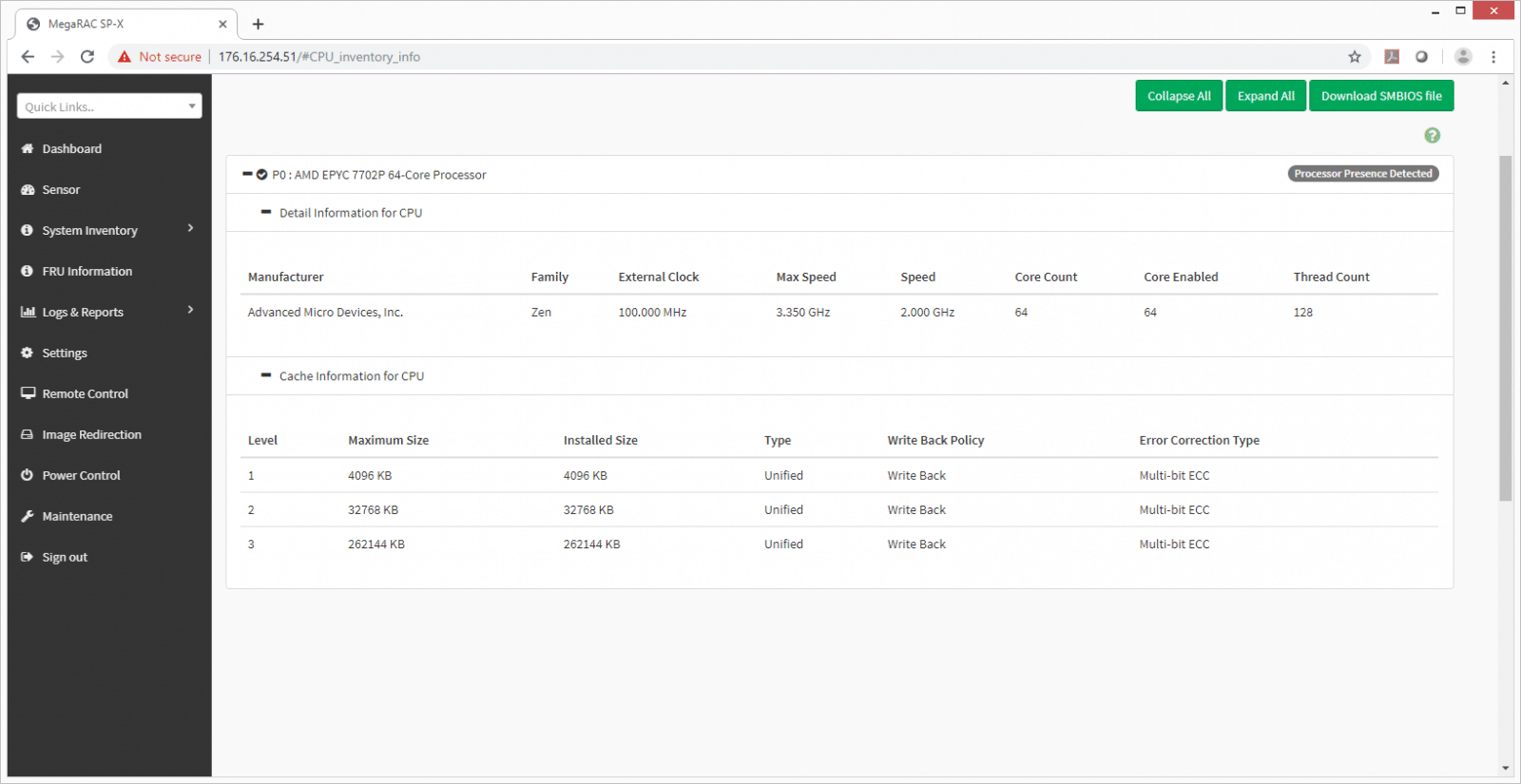

The System Inventory tab lets admins view the hardware installed in the server. Clicking on the CPU shows detailed information about the model (the AMD EPYC 7702P in this case), along with its cache information.

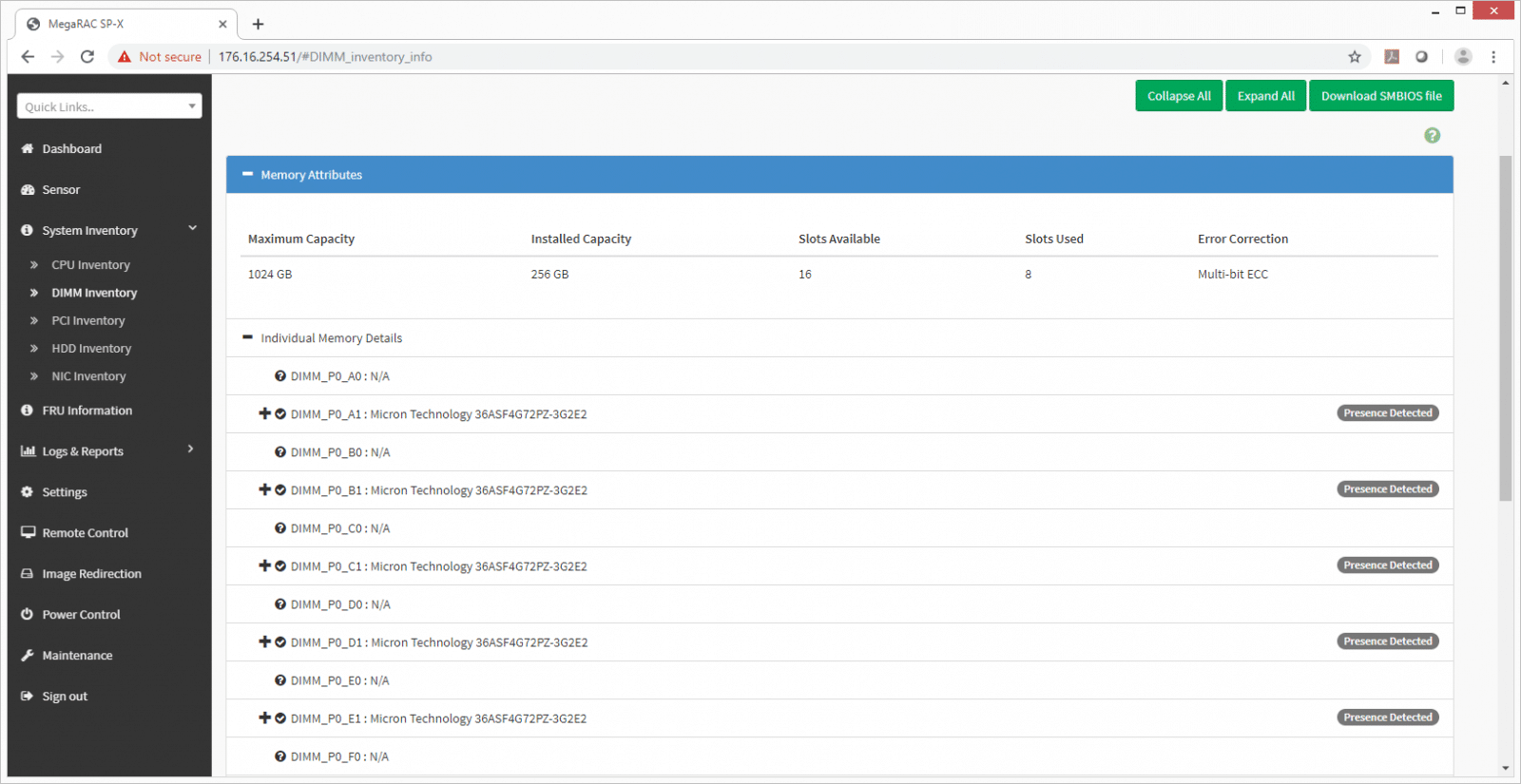

Much like the CPU, the DIMM inventory subtab gives detailed information on the RAM, including the maximum supported capacity, how much is installed and in which slots, whether it is ECC, and details on individual DIMMs.

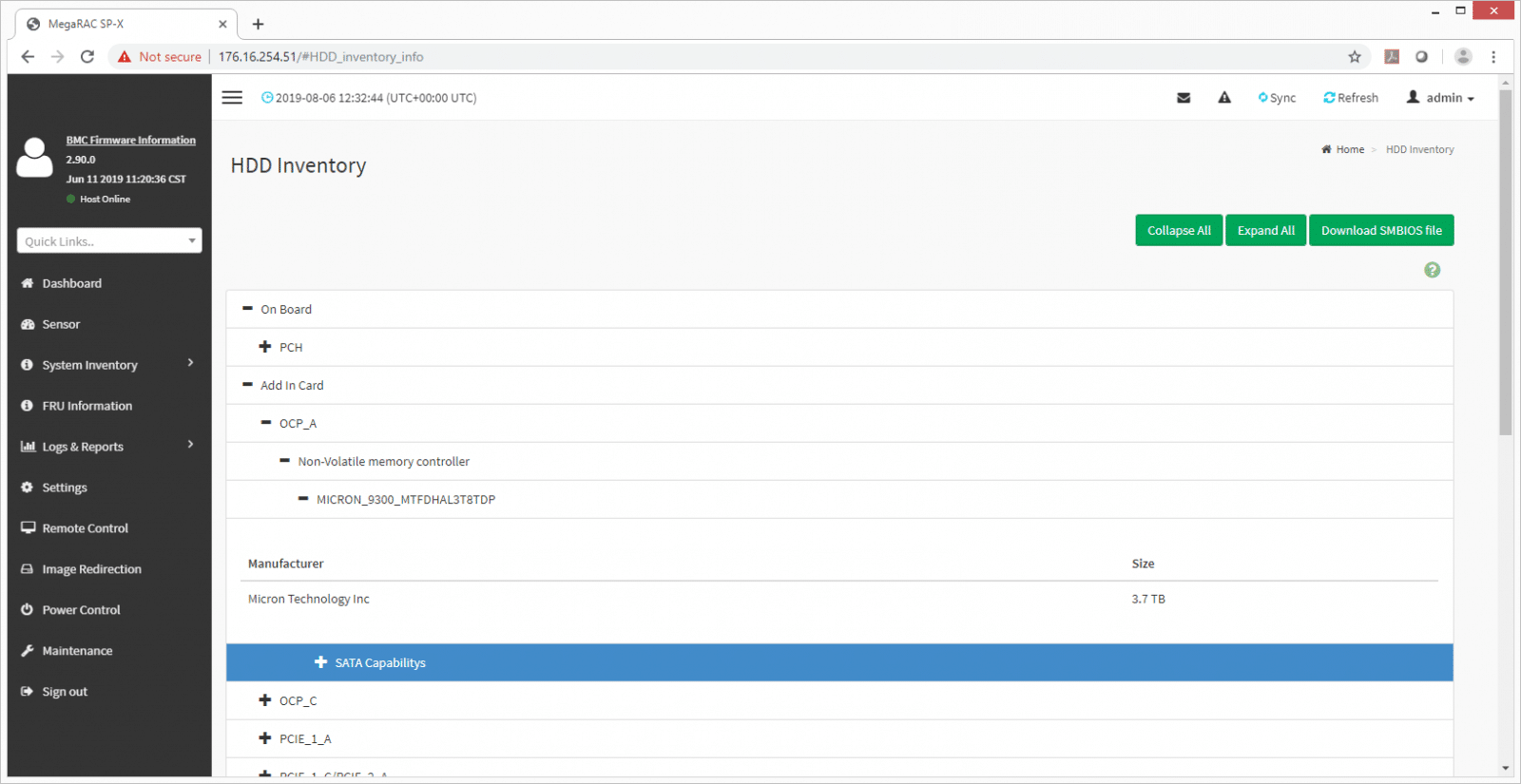

The HDD Inventory subtab is similar, giving information about the installed drives and the ability to drill down for more detail.

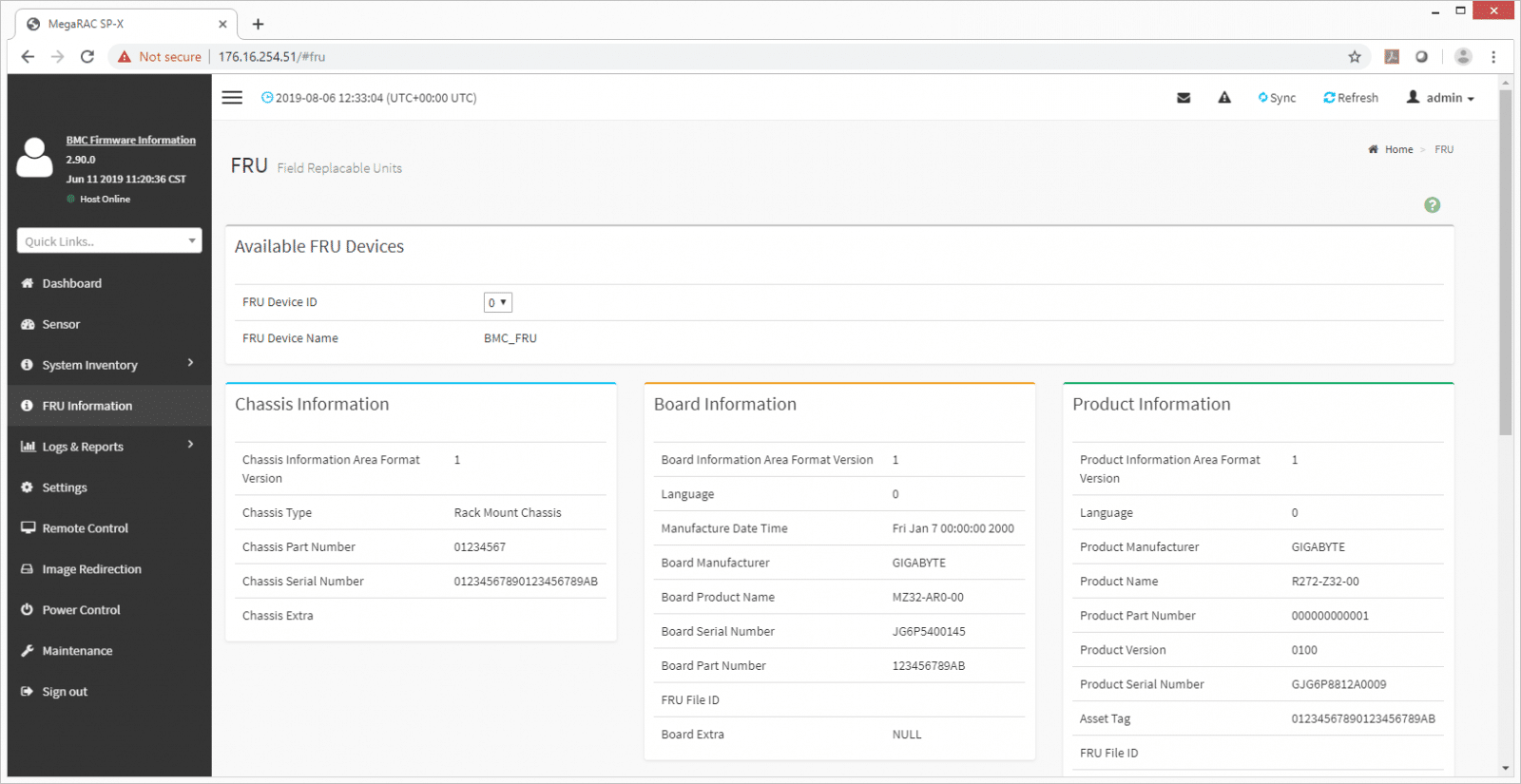

The next main tab is FRU (Field Replaceable Unit) Information. As the name implies, this tab gives information on FRUs; here we can see details on the chassis and the motherboard.

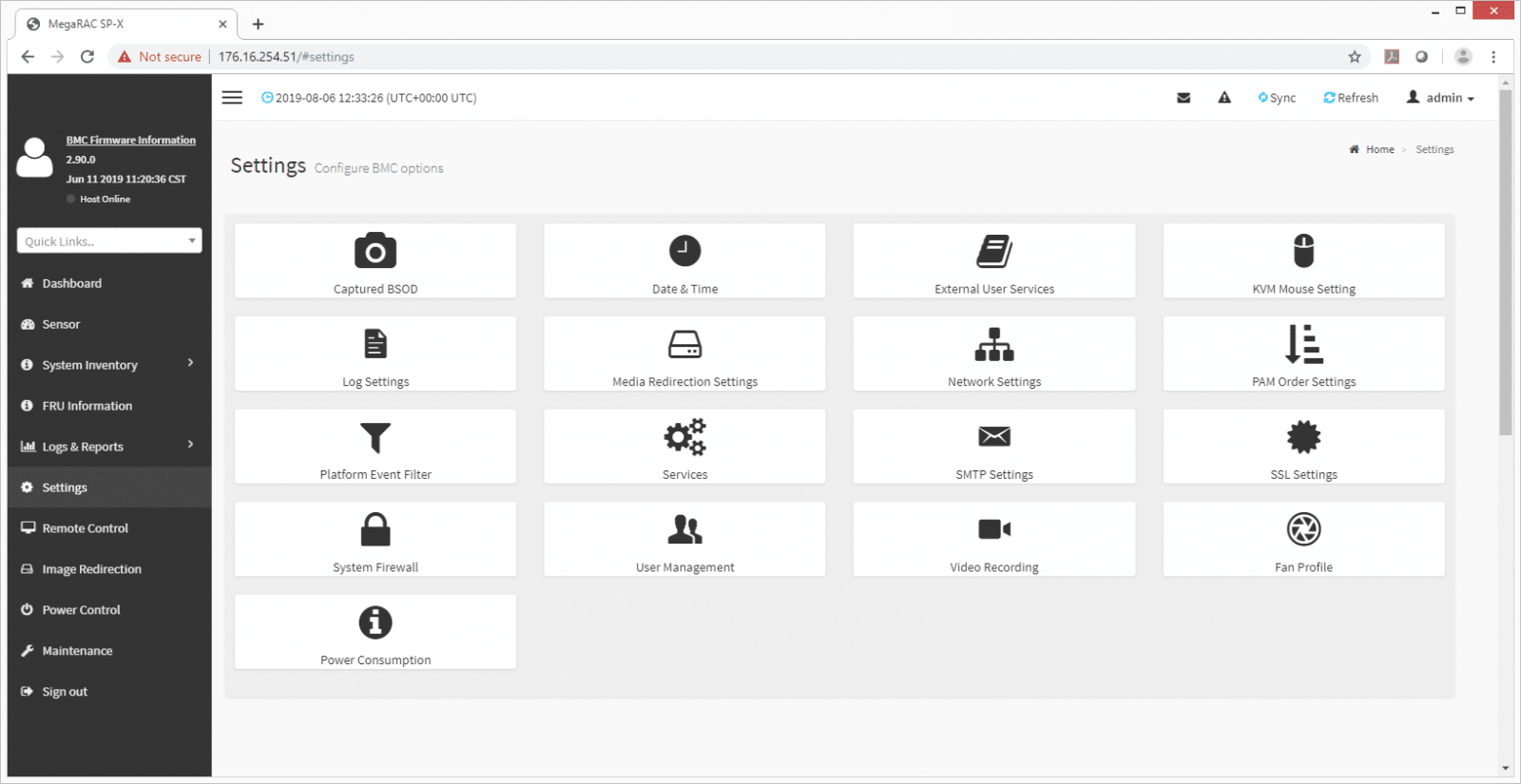

The Settings tab is fairly comprehensive. It gives admins access to all the settings they need and the ability to change them to suit their chosen workloads.

The next tab is Remote Control, where users can launch either a KVM or Java SOL (Serial over LAN). We launched the KVM.

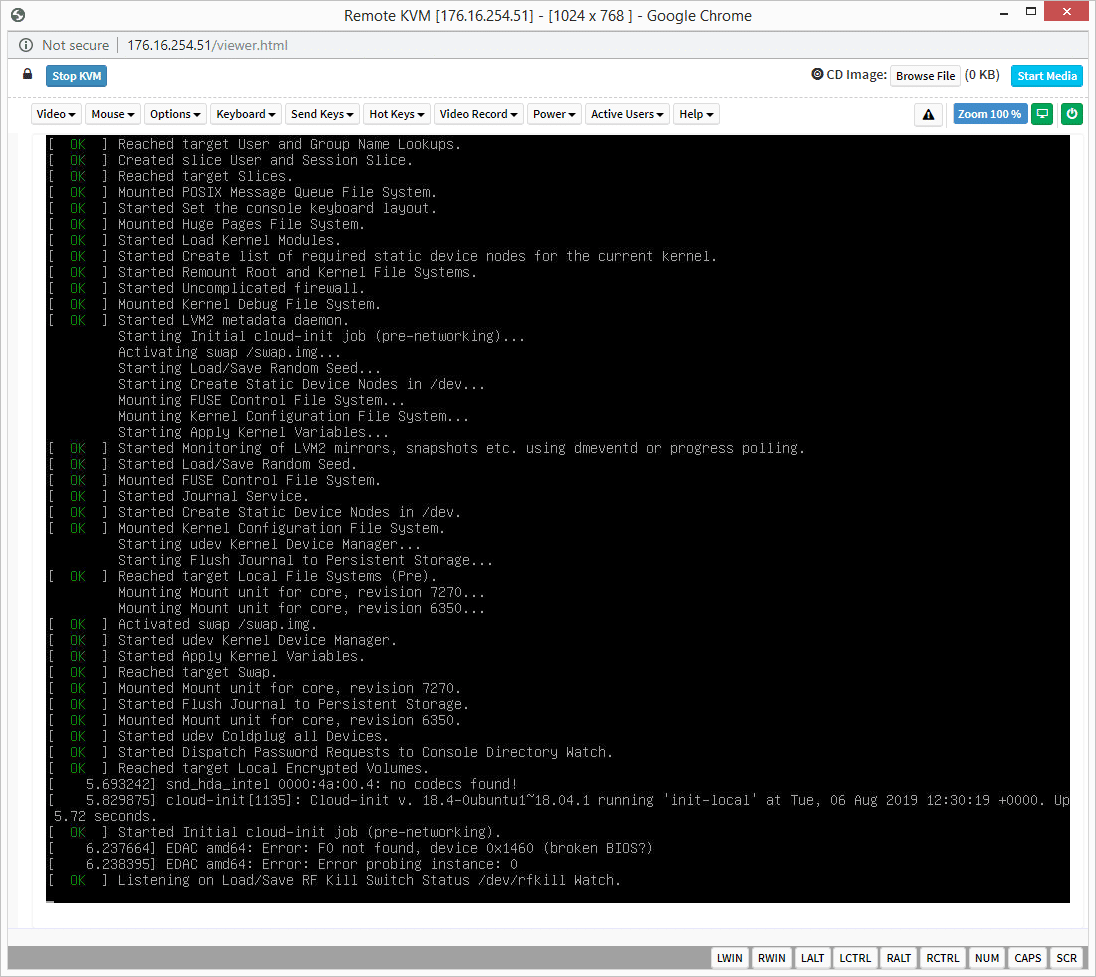

Once launched, the KVM gives users remote access to the server OS, shown here at a Linux loading screen. Remote console windows are invaluable in a datacenter, giving you local control without hauling over a crash cart with a monitor, keyboard, and mouse. In the top right of the window is the CD image feature, which lets you mount ISOs from your local system on the server for loading software.

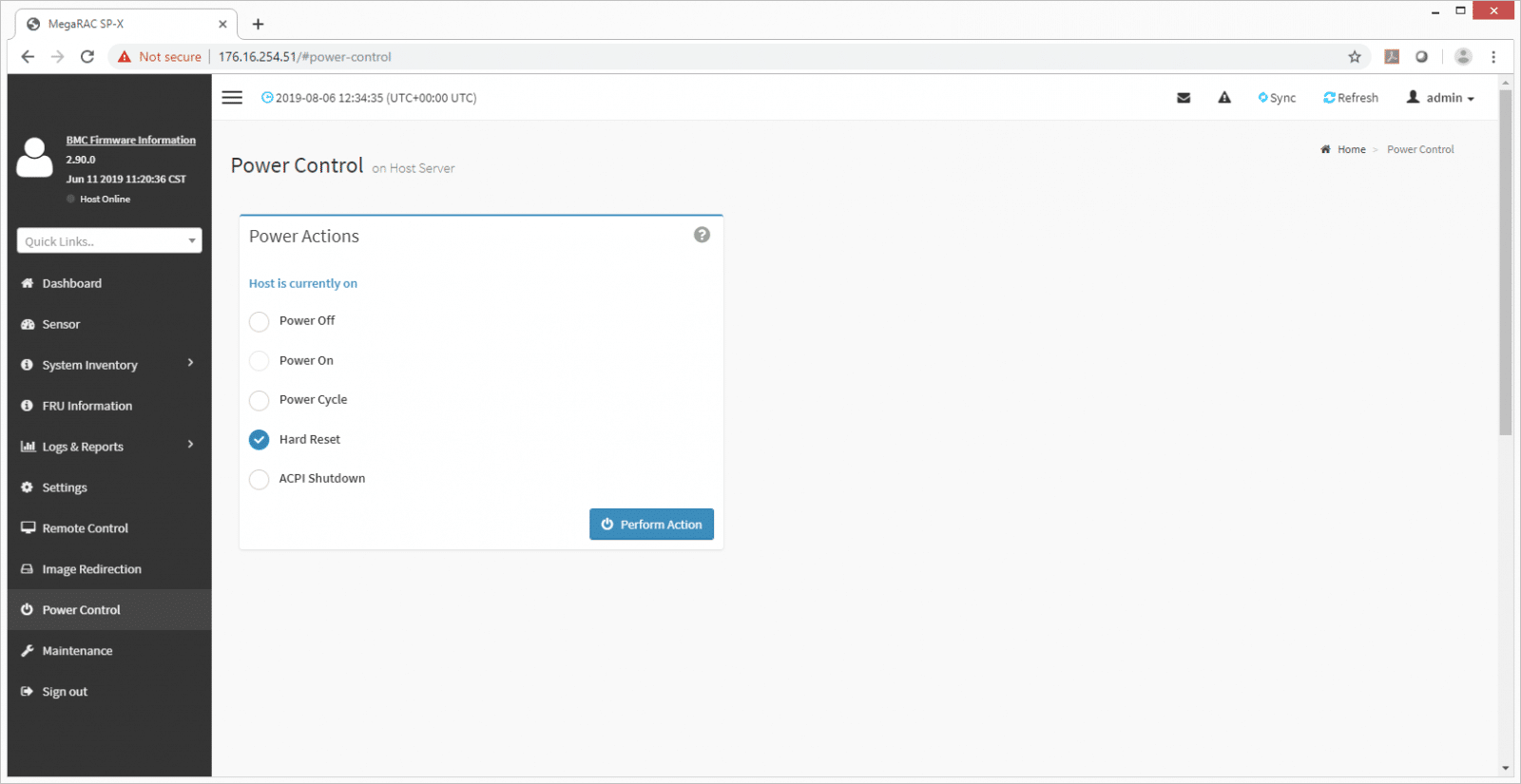

The Power Control tab gives a small list of power actions including Power Off, Power On, Power Cycle, Hard Reset, and ACPI Shutdown.
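For scripted power control, the same five actions have close equivalents in the standard `ipmitool chassis power` subcommands. The mapping below is a sketch assuming the BMC accepts IPMI over LAN (`-I lanplus`), which the review does not test; the host name and user are placeholders, and `-E` tells ipmitool to read the password from the `IPMI_PASSWORD` environment variable instead of the command line.

```python
# Hypothetical mapping from the MegaRAC Power Control actions to
# ipmitool "chassis power" subcommands; assumes IPMI over LAN is enabled.
POWER_ACTIONS = {
    "Power Off": "off",       # immediate power-off
    "Power On": "on",
    "Power Cycle": "cycle",   # power off, brief pause, power on
    "Hard Reset": "reset",    # hard reset of the chassis
    "ACPI Shutdown": "soft",  # ACPI request for a graceful OS shutdown
}

def ipmitool_cmd(action: str, host: str, user: str) -> list[str]:
    """Build an ipmitool argument list; -E takes the password from IPMI_PASSWORD."""
    sub = POWER_ACTIONS[action]
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E",
            "chassis", "power", sub]

# Placeholder host and user for illustration only.
print(" ".join(ipmitool_cmd("ACPI Shutdown", "bmc.example.com", "admin")))
```

Passing the returned list to `subprocess.run` would execute the command; here it is only printed.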

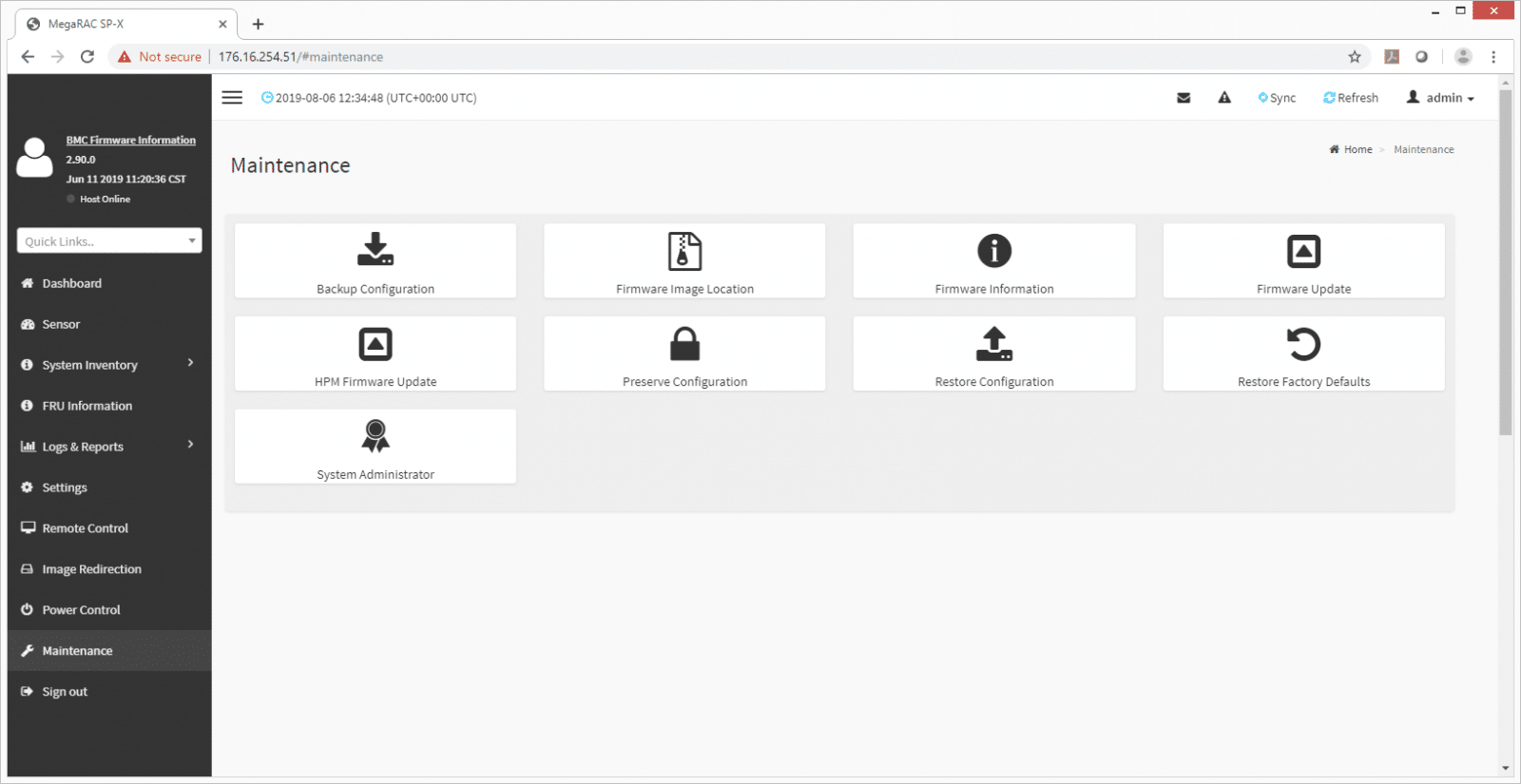

The Maintenance tab gives access to several tasks administrators may need to handle, including Backup Configuration, Firmware Image Location, Firmware Information, Firmware Update, HPM Firmware Update, Preserve Configuration, Restore Configuration, Restore Factory Defaults, and System Administrator.

The BIOS information can also be accessed through the Firmware information under the Maintenance tab.

GIGABYTE R272-Z32 Configuration and Performance

For our initial testing lineup we focused on synthetic benchmarks in a bare-metal Linux environment. We installed Ubuntu 18.04.2 and used vdbench to run our storage-driven benchmarks. With twelve Micron 9300 Pro 3.84TB SSDs loaded into the server, our focus was saturating the CPU with storage I/O. As full support for AMD EPYC Rome arrives in additional operating systems, primarily VMware vSphere (we are waiting on 6.7 U3 to go GA), we will expand the testing on this server platform.

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second. While not a perfect representation of actual workloads, synthetic tests do help baseline storage devices with a repeatability factor that makes apples-to-apples comparisons between competing solutions easy. These workloads offer a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests use the common vdbench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

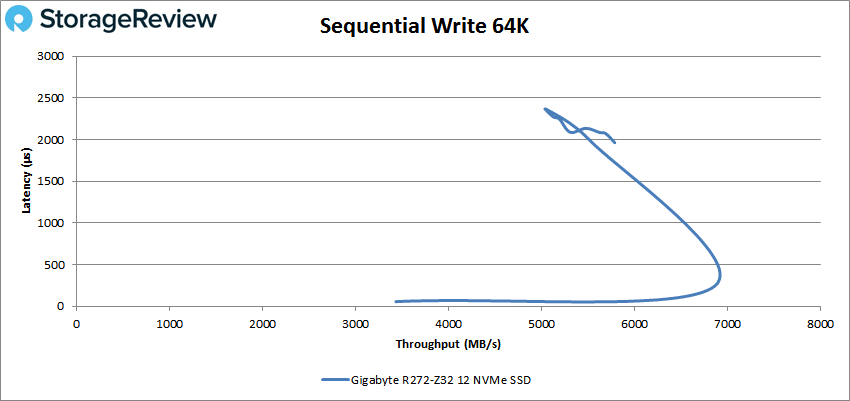

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

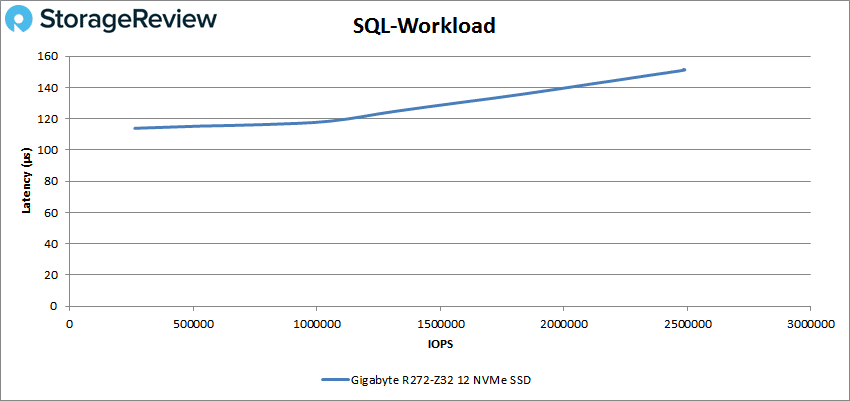

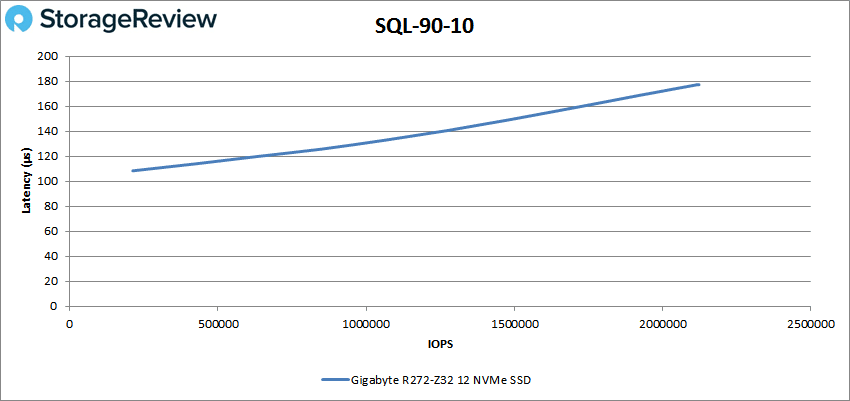

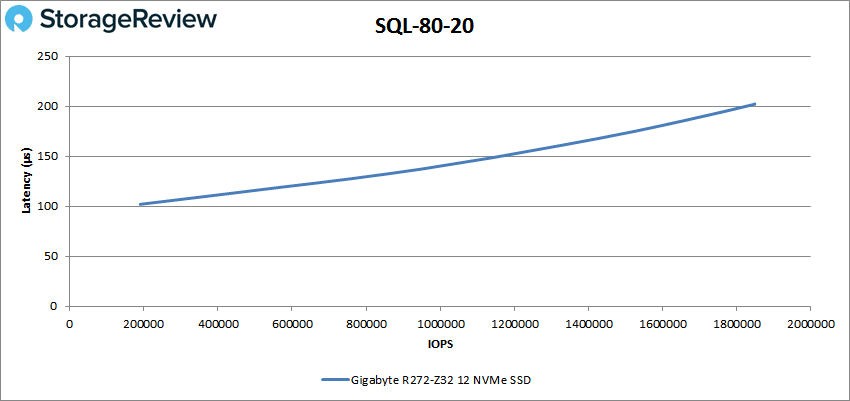

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
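To make the four-corner profiles concrete, the sketch below generates a vdbench-style parameter file for each one. The transfer sizes, read percentages, and thread counts come from the profile list; everything else is an assumption: the device paths, the `elapsed`/`interval` values, and the approach of stepping fixed `iorate` values from 10% to 120% of a previously measured maximum (a 0% rate is not a runnable step). It is not the harness used for this review, and the parameter syntax should be checked against the vdbench documentation.

```python
from dataclasses import dataclass

# Hypothetical generator; device paths, elapsed/interval, and max_iops are
# illustrative assumptions, not values from the review's test harness.
@dataclass
class Profile:
    name: str
    xfersize: str
    rdpct: int    # 100 = all reads, 0 = all writes
    seekpct: int  # 100 = random access, 0 = sequential
    threads: int

PROFILES = [
    Profile("rr4k", "4k", 100, 100, 128),
    Profile("rw4k", "4k", 0, 100, 64),
    Profile("sr64k", "64k", 100, 0, 16),
    Profile("sw64k", "64k", 0, 0, 8),
]

def iorate_steps(max_iops: int, step_pct: int = 10, top_pct: int = 120) -> list[int]:
    """Fixed I/O rates from step_pct% up to top_pct% of a measured maximum."""
    return [max_iops * p // 100 for p in range(step_pct, top_pct + 1, step_pct)]

def param_file(devices: list[str], p: Profile, max_iops: int) -> str:
    """Build storage (sd), workload (wd), and run (rd) definitions as text."""
    lines = [f"sd=sd{i},lun={dev},openflags=o_direct"
             for i, dev in enumerate(devices, 1)]
    lines.append(f"wd={p.name},sd=sd*,xfersize={p.xfersize},"
                 f"rdpct={p.rdpct},seekpct={p.seekpct}")
    rates = ",".join(str(r) for r in iorate_steps(max_iops))
    lines.append(f"rd=run_{p.name},wd={p.name},iorate=({rates}),"
                 f"elapsed=60,interval=1,threads={p.threads}")
    return "\n".join(lines)

devs = [f"/dev/nvme{i}n1" for i in range(12)]  # hypothetical device names
print(param_file(devs, PROFILES[0], max_iops=1_000_000))
```

Stepping fixed rates past the measured maximum is what produces the rising latency "knee" visible in the charts that follow.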

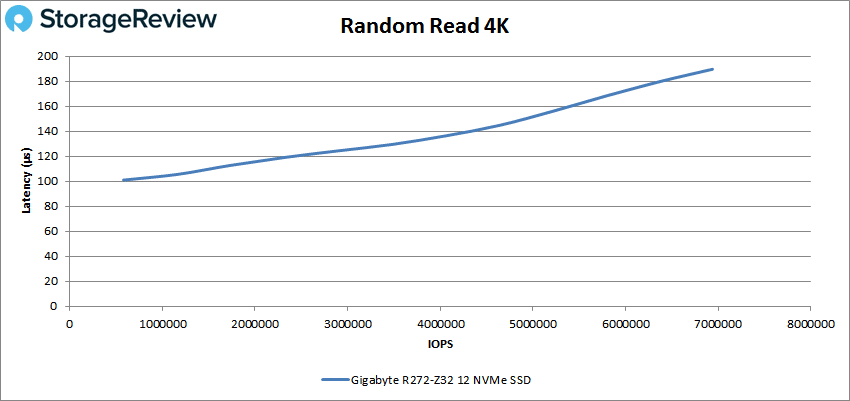

In random 4K read, the GIGABYTE R272-Z32 started with latency just over 100µs and peaked at 6,939,004 IOPS with a latency of 189.6µs.

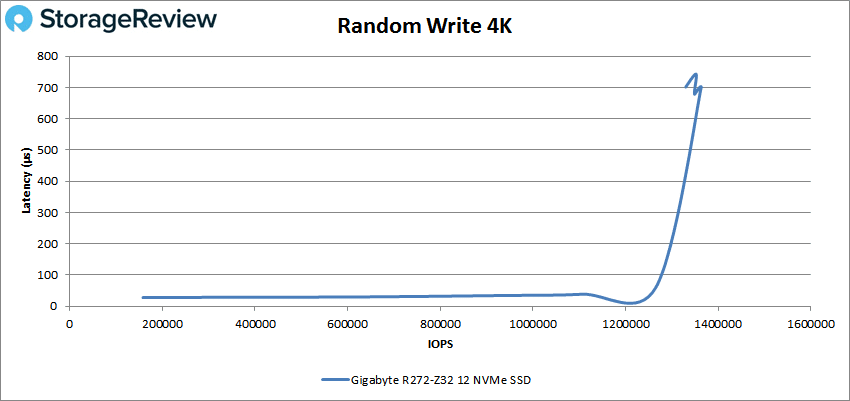

For random 4K write, the server started at 158,161 IOPS with only 28µs latency. It stayed under 100µs until about 1.27 million IOPS and peaked at 1,363,259 IOPS at a latency of 699.8µs.
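Little's law ties the IOPS and latency figures together: the average number of I/Os in flight equals throughput multiplied by mean latency. The sketch below applies it to the two 4K peaks, assuming the reported latency is the average latency at that data point; the result is an estimate of concurrency, not a measured queue depth.

```python
# Little's law: average outstanding I/Os = IOPS x mean latency (in seconds).
def outstanding_ios(iops: float, latency_us: float) -> float:
    return iops * latency_us / 1e6  # convert microseconds to seconds

# Peak data points reported above for the 4K random tests.
for label, iops, lat in [("4K random read peak", 6_939_004, 189.6),
                         ("4K random write peak", 1_363_259, 699.8)]:
    print(f"{label}: ~{outstanding_ios(iops, lat):,.0f} I/Os in flight")
```

Read this way, the write peak sustains fewer concurrent I/Os than the read peak while each one waits far longer, which is consistent with the steeper write latency curve.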

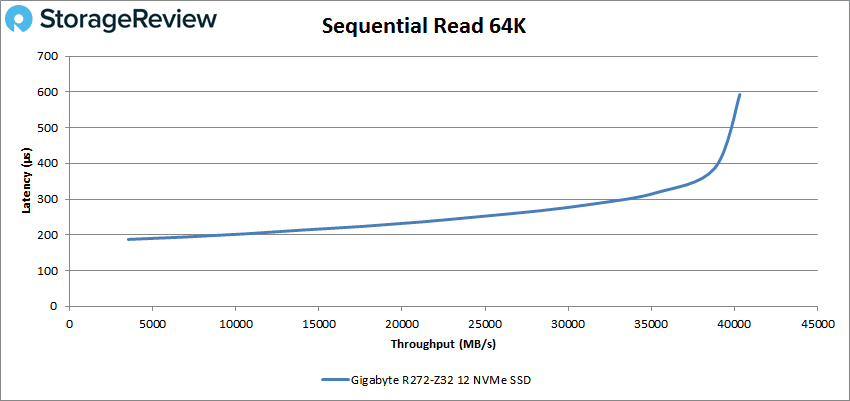

Switching over to sequential workloads, the server peaked at 645,240 IOPS, or 40.3GB/s, at a latency of 592.9µs in our 64K read.

In 64K write, the server peaked at about 110K IOPS or about 6.8GB/s at a latency of 246.1µs before dropping off significantly.
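Converting IOPS to bandwidth is a multiplication by the transfer size, but the unit convention matters. The sketch below assumes a 64K transfer means 64 KiB (65,536 bytes). Under that assumption, the 40.3GB/s and roughly 6.8GB/s figures appear to follow a binary convention (about 40,328 MiB/s and 6,875 MiB/s at 645,240 and 110K IOPS); expressed in decimal gigabytes, the same rates come to about 42.3 and 7.2 GB/s.

```python
KIB = 1024  # assumption: "64K" means 64 KiB per I/O

def throughput(iops: float, block_kib: int) -> tuple[float, float]:
    """Return (MiB/s, decimal GB/s) for an IOPS rate and a block size in KiB."""
    bytes_per_s = iops * block_kib * KIB
    return bytes_per_s / KIB**2, bytes_per_s / 1e9

# Sequential peaks reported above (the write figure is approximate).
for label, iops in [("64K read", 645_240), ("64K write", 110_000)]:
    mib, gb = throughput(iops, 64)
    print(f"{label}: {mib:,.0f} MiB/s ({gb:.1f} GB/s decimal)")
```

Keeping both units side by side avoids a roughly 5% discrepancy when comparing these results against vendor specifications quoted in decimal units.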

Our next set of tests covers our SQL workloads: SQL, SQL 90-10, and SQL 80-20. For SQL the server peaked at 2,489,862 IOPS with a latency of 151.2µs.

For SQL 90-10 the server had a peak performance of 2,123,201 IOPS with a latency of 177.2µs.

Our last SQL test, the 80-20, saw the server hit a peak performance of 1,849,018 IOPS with a latency of 202.1µs.

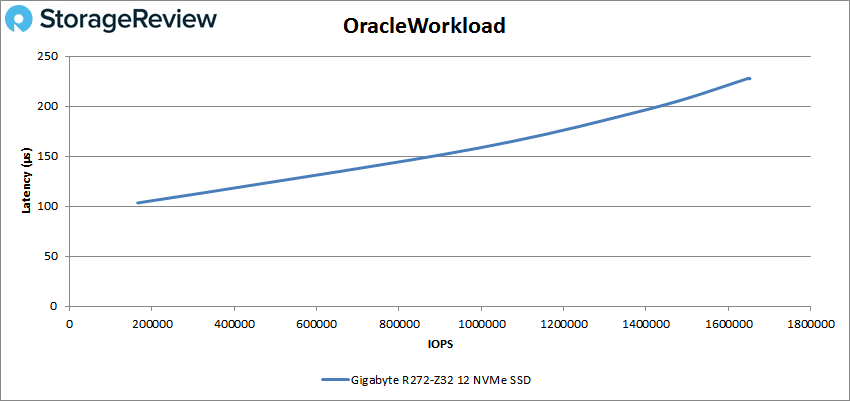

Next up are our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. With Oracle the GIGABYTE server peaked at 1,652,105 IOPS with a latency of 227.5µs.

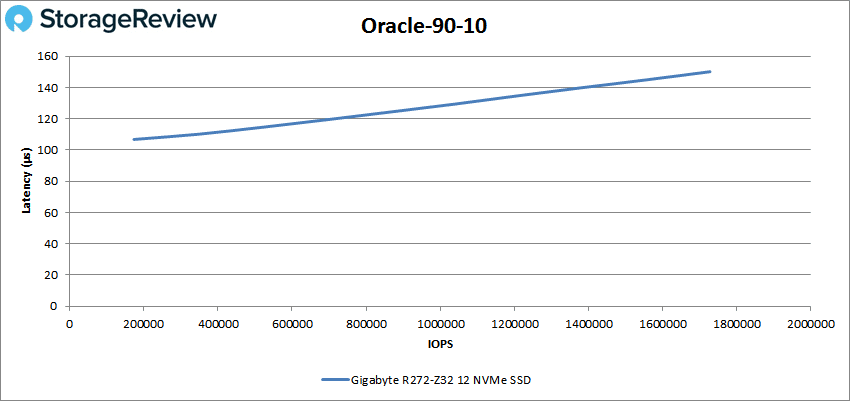

With Oracle 90-10 the server peaked at 1,727,168 IOPS at a latency of only 150.1µs.

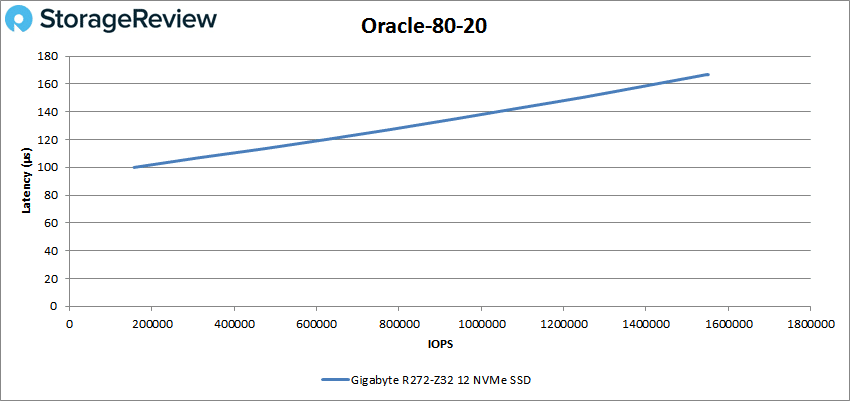

For Oracle 80-20 the server hit a peak of 1,551,361 IOPS at a latency of 166.8µs.

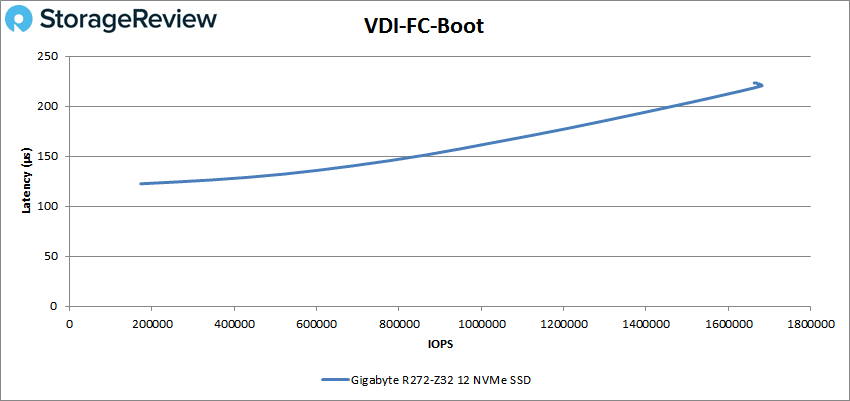

Next, we switched over to our VDI clone tests, Full Clone and Linked Clone. For VDI Full Clone (FC) Boot, the EPYC Rome-powered server had a peak performance of 1,680,812 IOPS at a latency of 220.4µs.

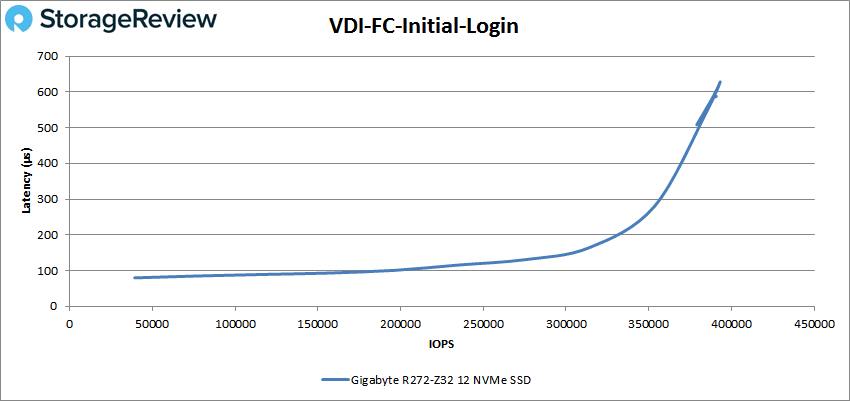

With VDI FC Initial Login the server started at 39,309 IOPS with a latency of 79.8µs. The server stayed under 100µs until about 200K IOPS and peaked at 393,139 IOPS at a latency of 627.3µs.

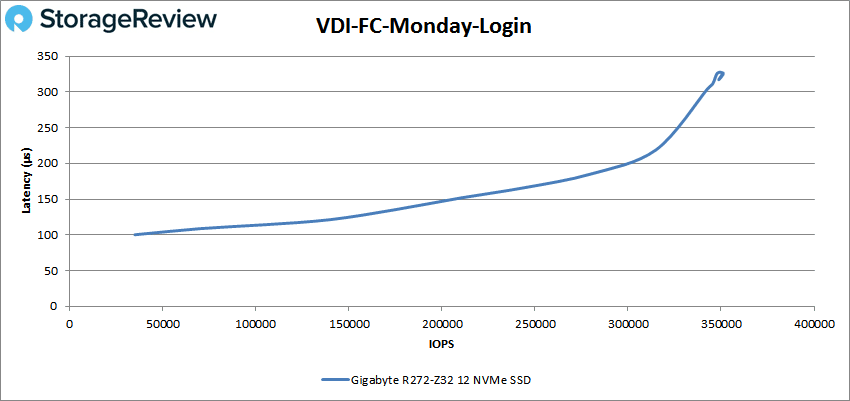

For VDI FC Monday Login the server peaked at 351,133 IOPS with a latency of 326.6µs.

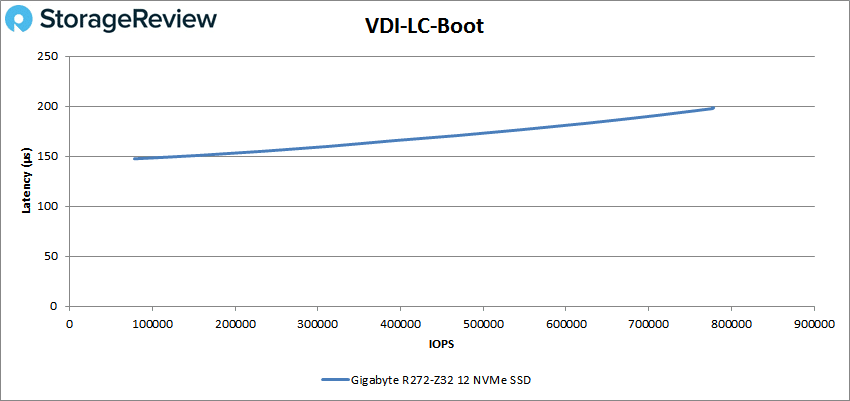

For VDI LC Boot the server peaked at 777,722 IOPS at a latency of 197.6µs.

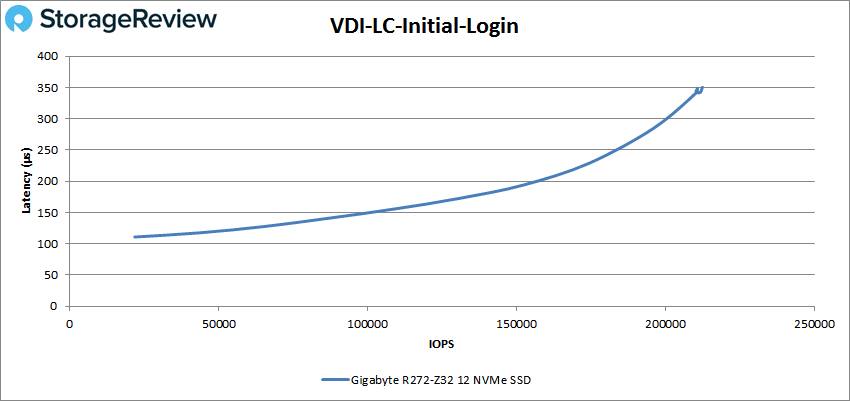

With VDI LC Initial Login the GIGABYTE server peaked at 211,720 IOPS at 341.9µs latency.

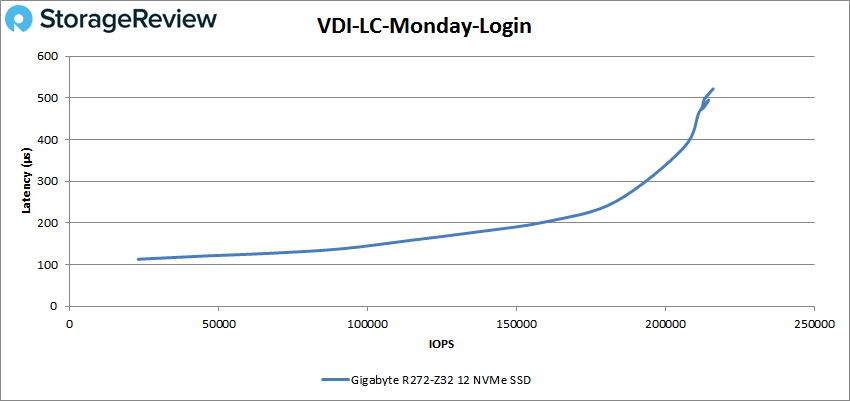

And finally with VDI LC Monday Login the EPYC Rome server had a peak performance of 216,084 IOPS with a latency of 521.9µs.

Conclusion

The new AMD EPYC 7002 CPUs are out, and the first server with the new processors (in our lab, anyway) is the GIGABYTE R272-Z32. This 2U general-purpose server uses the MZ32-AR0 motherboard, which supports a single EPYC Rome processor. The server has 16 DIMM slots, for a potential total of 1TB of DDR4-3200 RAM. The R272-Z32 has 24 hot-swap bays in the front for all-NVMe storage, with two bays in the rear for SATA SSDs or HDDs. If customers need to add PCIe devices (including Gen4 devices now), the motherboard has seven slots, although only three are open in this NVMe configuration. The server also supports AMI MegaRAC SP-X for BMC server management.

For testing we used the AMD EPYC 7702P CPU, 256GB of 3,200MHz Micron DDR4 RAM, and twelve 3.84TB Micron 9300 Pro SSDs. With that configuration, this little server really brought the thunder. Using just our VDBench workloads, we saw the server hit nearly 7 million IOPS in 4K read, nearly 1.4 million IOPS in 4K write, an astounding 40.3GB/s in 64K sequential read, and 6.8GB/s in 64K sequential write. Moving into our SQL workloads, the server continued to impress with 2.5 million IOPS, 2.1 million IOPS in SQL 90-10, and 1.85 million IOPS in SQL 80-20. In Oracle the server hit 1.65 million IOPS, 1.73 million IOPS in 90-10, and 1.55 million IOPS in 80-20. Even in our VDI clone tests the server broke a million IOPS in VDI FC Boot with 1.68 million. While latency was over 100µs for the most part, it only went over 1ms in the sequential 64K write test.

For a general-purpose server, AMD EPYC Rome turned the GIGABYTE R272-Z32 into a beast. While we had good equipment in the server, we weren't even close to maxing out its potential, with only 12 of its 24 NVMe bays populated. As the list of operating systems that support Rome continues to grow, we will be able to see just how well the new CPUs stack up across a plethora of workloads. These new processors, and the servers that support them, may usher in a level of data center performance we have yet to see, especially once you factor in the untapped potential of PCIe Gen4.
