The GIGABYTE R282-Z92 is a new 2U dual-socket server that leverages 2nd-generation AMD EPYC processors. With two EPYC CPUs installed, users can leverage up to 128 cores (64 per socket). The EPYC platform also brings PCIe 4.0 support, which opens up options for faster storage, FPGAs, and GPUs. The server comes with two open PCIe 4.0 expansion slots to support add-on cards.

Further building off of the new AMD EPYC processors, the GIGABYTE R282-Z92 supports up to 4TB of DDR4 memory at speeds up to 3200MHz. It reaches this through 16 DIMM slots per CPU with support for modules up to 128GB apiece. While the server supports NVMe storage via 24 hot-swappable bays across the front (not to mention two more bays in the back for SATA/SAS storage), the front bays don’t support PCIe 4.0.
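The core and memory ceilings quoted above follow from simple multiplication. The short sketch below only restates the figures from the text (sockets, cores per socket, DIMM slots per CPU, and maximum module size); the variable names are our own and purely illustrative.

```python
# Illustrative arithmetic only; every figure comes from the spec text above.
SOCKETS = 2
CORES_PER_SOCKET = 64
DIMMS_PER_SOCKET = 16
MAX_DIMM_GB = 128

total_cores = SOCKETS * CORES_PER_SOCKET      # cores across both CPUs
total_dimms = SOCKETS * DIMMS_PER_SOCKET      # DIMM slots across both CPUs
max_memory_gb = total_dimms * MAX_DIMM_GB     # fully populated with 128GB modules

print(f"{total_cores} cores, {total_dimms} DIMM slots, "
      f"{max_memory_gb} GB ({max_memory_gb / 1024:g} TB) maximum memory")
```

Fully populating all 32 slots with 128GB modules is what gets the platform to its stated memory ceiling; smaller modules scale the total down proportionally.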

Other notable hardware features include an onboard M.2 slot, with more M.2 capacity available through a riser card. The server comes with two 1GbE LAN ports but has plenty of room for faster networking, including an OCP card slot. Power is handled by two hot-swappable PSUs along with GIGABYTE’s intelligent power management features, which both make the server more efficient in terms of power usage and help retain power in the case of a failure.

The R282-Z92 leverages GIGABYTE’s own GSM for management. GSM comes with a VMware plugin that allows users to use vCenter for both remote monitoring and management.

GIGABYTE R282-Z92 Specifications

| Specification | Details |
| --- | --- |
| Dimensions (WxHxD, mm) | 2U, 438 x 87 x 730 mm |
| Motherboard | MZ92-FS0 |
| CPU | AMD EPYC 7002 series processor family; Dual processors, 7nm, Socket SP3; Up to 64-core, 128 threads per processor; TDP up to 225W, cTDP up to 240W; Fully support 280W; Compatible with AMD EPYC 7001 series processor family |
| Chipset | System on Chip |
| Memory | 32 x DIMM slots; DDR4 memory supported only; 8-Channel memory per processor architecture; RDIMM modules up to 128GB supported; LRDIMM modules up to 128GB supported; Memory speed: Up to 3200/2933 MHz |
| LAN | 2 x 1GbE LAN ports (1 x Intel I350-AM2); 1 x 10/100/1000 management LAN |
| Video | Integrated in Aspeed AST2500; 2D Video Graphic Adapter with PCIe bus interface; 1920×1200@60Hz 32bpp |
| Storage | Front side: 24 x 2.5″ NVMe hot-swappable HDD/SSD bays; Rear side: 2 x 2.5″ SATA/SAS hot-swappable HDD/SSD bays, from onboard SATA ports |
| Expansion Slots | Riser Card CRS2014: 1 x PCIe x16 slot (Gen4 x16), Occupied by CNV3024, 4 x NVMe HBA. Riser Card CRS2033: 1 x PCIe x16 slot (Gen4 x16), FHHL, Occupied by CNV3024, 4 x NVMe HBA; 1 x PCIe x8 slot (Gen4 x8), FHHL, Occupied by CNV3022, 2 x NVMe HBA; 1 x PCIe x8 slot (Gen4 x8), FHHL. Riser Card CRS2033: 1 x PCIe x16 slot (Gen4 x16), FHHL, Occupied by CNV3024, 4 x NVMe HBA; 1 x PCIe x8 slot (Gen4 x8), FHHL, Occupied by CNV3022, 2 x NVMe HBA; 1 x PCIe x8 slot (Gen4 x8), FHHL. 1 x OCP 3.0 mezzanine slot with PCIe Gen4 x16 bandwidth from CPU_0, Supported NCSI function, Occupied by CNVO134, 4 x NVMe HBA. 1 x OCP 2.0 mezzanine slot with PCIe Gen3 x8 bandwidth (Type1, P1, P2), Supported NCSI function, Occupied by CNVO022, 2 x NVMe HBA. 1 x M.2 slot: M-key; PCIe Gen3 x4; Supports NGFF-2242/2260/2280/22110 cards; CPU TDP is limited to 225W if using an M.2 device |
| Internal I/O | 1 x M.2 slot; 1 x USB 3.0 header; 1 x COM header; 1 x TPM header; 1 x Front panel header; 1 x HDD backplane board header; 1 x PMBus connector; 1 x IPMB connector; 1 x Clear CMOS jumper; 1 x BIOS recovery jumper |
| Front I/O | 2 x USB 3.0; 1 x Power button with LED; 1 x ID button with LED; 1 x Reset button; 1 x NMI button; 1 x System status LED; 1 x HDD activity LED; 2 x LAN activity LEDs |
| Rear I/O | 2 x USB 3.0; 1 x VGA; 2 x RJ45; 1 x MLAN; 1 x ID button with LED |
| Backplane I/O | Front side_CBP20O5: 24 x NVMe ports; Rear side_CBP2020: 2 x SATA/SAS ports; Speed and bandwidth: SATA 6Gb/s, SAS 12Gb/s or PCIe x4 per port |
| TPM | 1 x TPM header with SPI interface; Optional TPM2.0 kit: CTM010 |
| Power Supply | 2 x 1600W redundant PSUs; 80 PLUS Platinum. AC Input: 100-120V~/12A, 50-60Hz; 200-240V~/10.0A, 50-60Hz. DC Input: 240Vdc, 10A. DC Output: Max 1000W at 100-120V (+12V/81.5A, +12Vsb/2.5A); Max 1600W at 200-240V or 240Vdc Input (+12V/133A, +12Vsb/2.5A) |
| OS Compatibility | Windows Server 2016 (X2APIC/256T not supported); Windows Server 2019; Red Hat Enterprise Linux 7.6 (x64) or later; Red Hat Enterprise Linux 8.0 (x64) or later; SUSE Linux Enterprise Server 12 SP4 (x64) or later; SUSE Linux Enterprise Server 15 SP1 (x64) or later; Ubuntu 16.04.6 LTS (x64) or later; Ubuntu 18.04.3 LTS (x64) or later; Ubuntu 20.04 LTS (x64) or later; VMware ESXi 6.5 EP15 or later; VMware ESXi 6.7 Update3 or later; VMware ESXi 7.0 or later; Citrix Hypervisor 8.1.0 or later |
| Weight | Net Weight: 18.5 kg; Gross Weight: 25.5 kg |
| System Fans | 4 x 80x80x38mm (16,300rpm) |
| Operating Properties | Operating temperature: 10°C to 35°C; Operating humidity: 8%-80% (non-condensing); Non-operating temperature: -40°C to 60°C; Non-operating humidity: 20%-95% (non-condensing) |

GIGABYTE R282-Z92 Design and Build

The GIGABYTE R282-Z92 is a 2U server that has a very similar appearance to other GIGABYTE servers (metal frame, black drive bays with orange highlights, black front). Twenty-four 2.5” PCIe Gen3 bays take up most of the front of the device. On the left side are the power button, ID button, reset button, NMI button, and LEDs (System status, HDD activity, and LAN activity). On the right side are the two USB 3.0 ports.

On the rear, we see two more 2.5” hot-swap bays (for either SATA or SAS, HDD/SSD) in the upper left corner with the two PSUs beneath. Eight expansion slots take up the majority of the remaining space. Running across the bottom are two USB 3.0 ports, two 1GbE LAN ports, and one MLAN port.

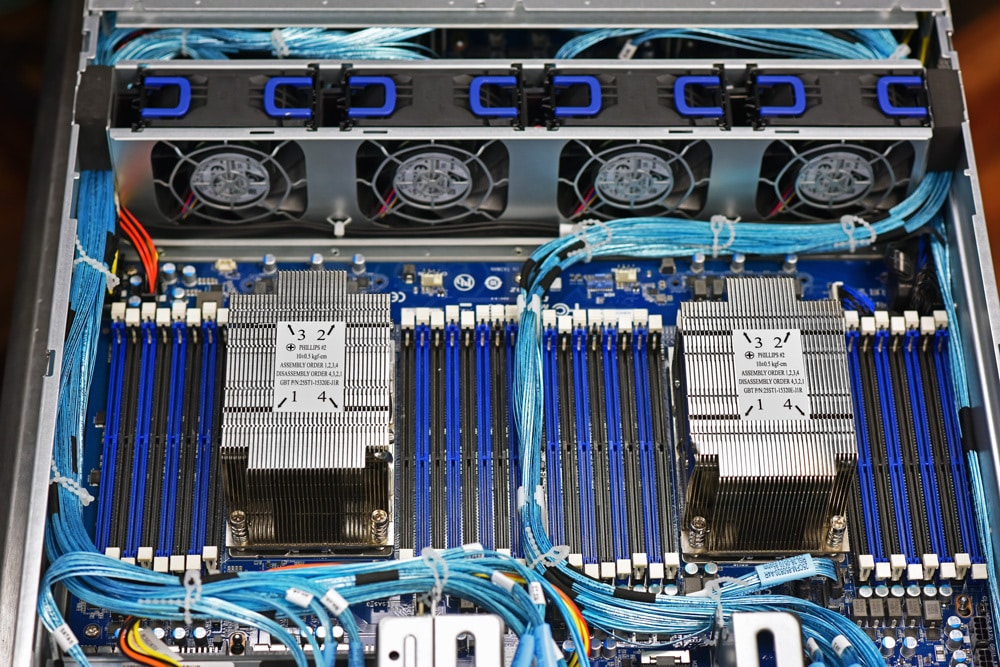

Popping off the top, we can see the fans near the front followed by the CPUs and the RAM slots. Near the back is the M.2 slot for boot duty.

GIGABYTE R282-Z92 Performance

GIGABYTE R282-Z92 Configuration:

- 2 x AMD EPYC 7702

- 512GB of memory, 256GB per CPU

- Performance Storage: 12 x Micron 9300 NVMe 3.84TB

- CentOS 7 (1908)

- ESXi 6.7u3

SQL Server Performance

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments.

Each SQL Server VM is configured with two vDisks: 100GB volume for boot and a 500GB volume for the database and log files. From a system resource perspective, we configured each VM with 16 vCPUs, 64GB of DRAM, and leveraged the LSI Logic SAS SCSI controller. While our Sysbench workloads tested previously saturated the platform in both storage I/O and capacity, the SQL test looks for latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs and is stressed by Dell’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across our servers.

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

  - 2.5 hours preconditioning

  - 30 minutes sample period

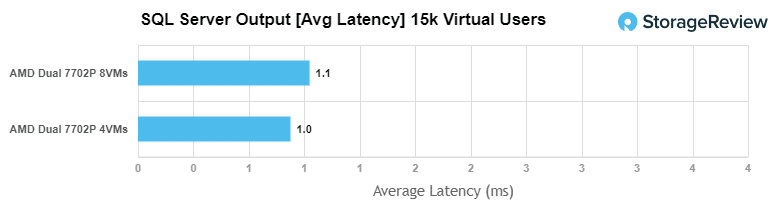

For our average latency SQL Server benchmark, we tested dual AMD EPYC 7702 CPUs with both 8VMs and 4VMs. With 8VMs we saw an average latency of 1.1ms, and with 4VMs we saw 1ms.
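To put the 4VM and 8VM runs in context, the sketch below adds up the per-VM resources described above and compares them with the host configuration listed for this review. It is only back-of-the-envelope arithmetic: the class and function names are hypothetical, the host thread count assumes two threads per core on each 64-core CPU, and hypervisor overhead and storage formatting are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    """Per-VM resources as listed in the SQL Server test description."""
    vcpus: int
    dram_gb: int
    disk_gb: int


@dataclass(frozen=True)
class HostSpec:
    """Host resources from the review configuration."""
    threads: int
    dram_gb: int
    raw_nvme_gb: int


# 16 vCPUs, 64GB DRAM, 100GB boot + 500GB database/log volumes per VM.
SQL_VM = VmSpec(vcpus=16, dram_gb=64, disk_gb=100 + 500)

HOST = HostSpec(
    threads=2 * 64 * 2,     # 2 sockets x 64 cores x 2 threads per core (assumed SMT on)
    dram_gb=512,
    raw_nvme_gb=12 * 3840,  # 12 x 3.84TB drives, decimal GB, raw capacity
)


def footprint(vm: VmSpec, count: int) -> dict:
    """Aggregate resources requested by `count` identical VMs."""
    return {
        "vcpus": vm.vcpus * count,
        "dram_gb": vm.dram_gb * count,
        "disk_gb": vm.disk_gb * count,
    }


for count in (4, 8):
    fp = footprint(SQL_VM, count)
    print(f"{count} VMs: {fp['vcpus']}/{HOST.threads} threads, "
          f"{fp['dram_gb']}/{HOST.dram_gb} GB DRAM, "
          f"{fp['disk_gb']}/{HOST.raw_nvme_gb} GB raw NVMe")
```

On these figures, the 8VM run requests half of the host's hardware threads and as much DRAM as is installed, while storage capacity is nowhere near a limiting factor.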

Sysbench MySQL Performance

Our first local-storage application benchmark consists of a Percona MySQL OLTP database measured via SysBench. This test measures average TPS (Transactions Per Second), average latency, and average 99th percentile latency as well.

Each Sysbench VM is configured with three vDisks: one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM, and leveraged the LSI Logic SAS SCSI controller.

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

  - 2 hours preconditioning 32 threads

  - 1 hour 32 threads

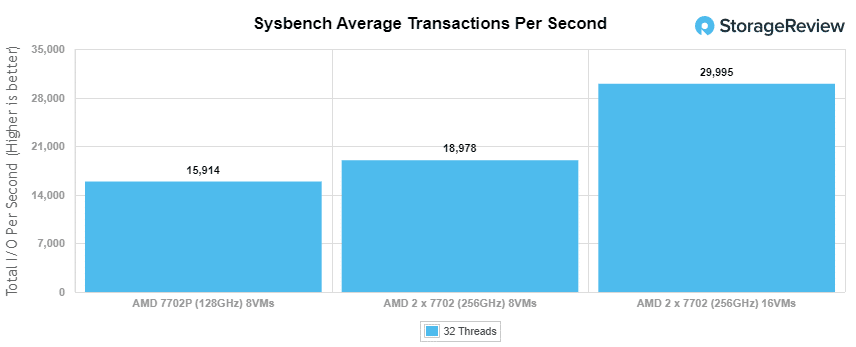

With the Sysbench OLTP, we tested a single 7702P with 8VMs and dual 7702s with both 8VMs and 16VMs. The single 7702P with 8VMs hit 15,914 TPS. The two 7702s hit 18,978 TPS with 8VMs and 29,995 TPS with 16VMs.
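The aggregate Sysbench figures are easier to compare once they are normalized per VM. The sketch below does that division and computes how much the aggregate grows when the dual-CPU configuration goes from 8VMs to 16VMs. The dictionary layout and function name are our own; the numbers are the ones reported above.

```python
# Aggregate Sysbench results from the text: (aggregate transactions/s, VM count).
RESULTS = {
    "1x 7702P, 8 VMs": (15_914, 8),
    "2x 7702, 8 VMs": (18_978, 8),
    "2x 7702, 16 VMs": (29_995, 16),
}


def per_vm(aggregate: float, vms: int) -> float:
    """Average throughput each VM contributes to the aggregate."""
    return aggregate / vms


for label, (aggregate, vms) in RESULTS.items():
    print(f"{label}: {per_vm(aggregate, vms):,.0f} per VM")

# Scaling factor when doubling the VM count on the dual-CPU configuration.
dual_8, _ = RESULTS["2x 7702, 8 VMs"]
dual_16, _ = RESULTS["2x 7702, 16 VMs"]
scaling = dual_16 / dual_8
print(f"8 -> 16 VMs on dual 7702: {scaling:.2f}x aggregate throughput")
```

Doubling the VM count raises the aggregate by roughly 1.6x rather than 2x, so each VM individually sees lower throughput at 16VMs even though the server as a whole does more work.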

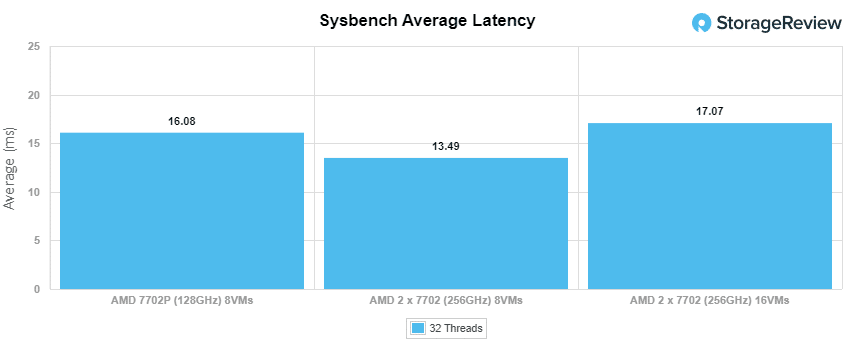

With Sysbench average latency, we saw 16.08ms with the single 7702P. The two 7702s hit 13.49ms with 8VMs and 17.07ms with 16VMs.

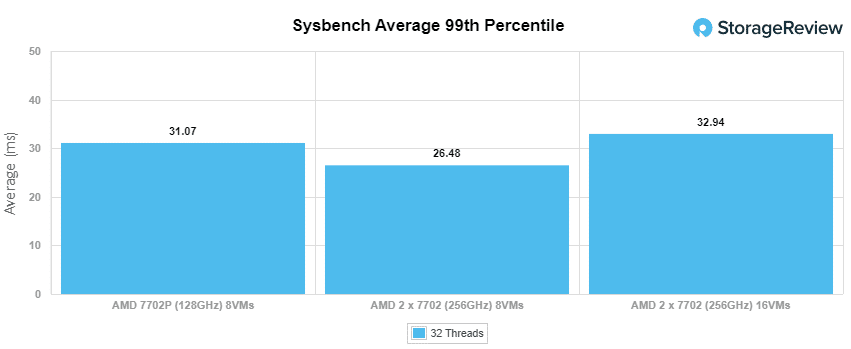

For our worst-case scenario latency (99th percentile), the single 7702P hit 31.07ms. The dual 7702s hit 26.48ms with 8VMs and 32.94ms with 16VMs.

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of different testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces

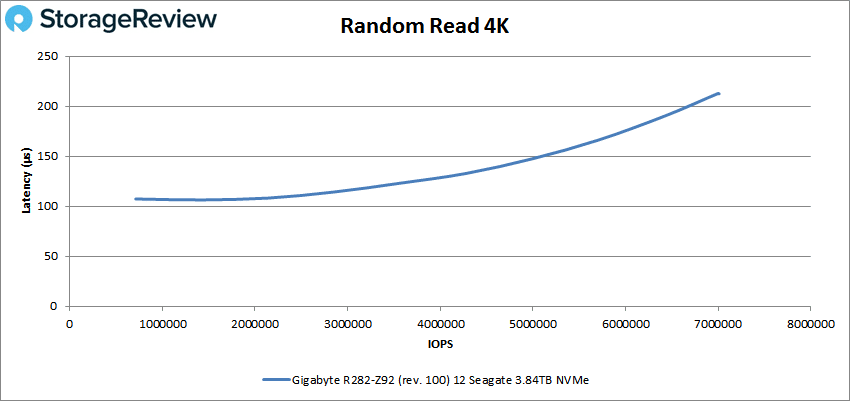

With random 4K read, the GIGABYTE R282-Z92 Server peaked at 7,005,724 IOPS with a latency of 213µs.

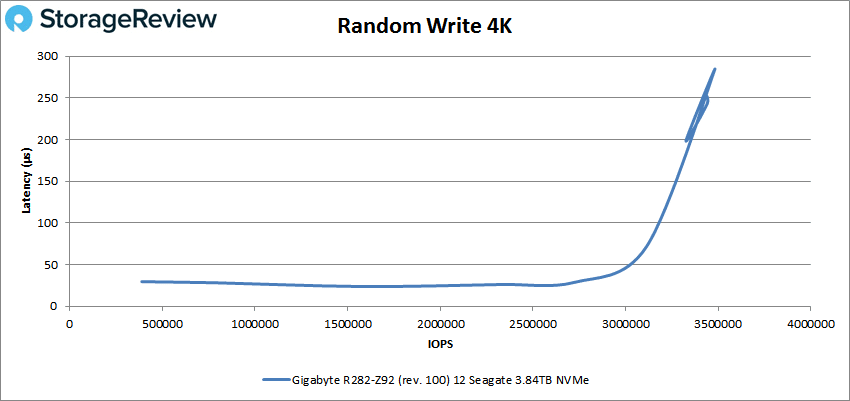

Random 4K write saw the server start at 389,144 IOPS at only 29.6µs for latency. It stayed under 100µs until it broke 3 million IOPS. It went on to peak at 3,478,209 IOPS with 282µs for latency.

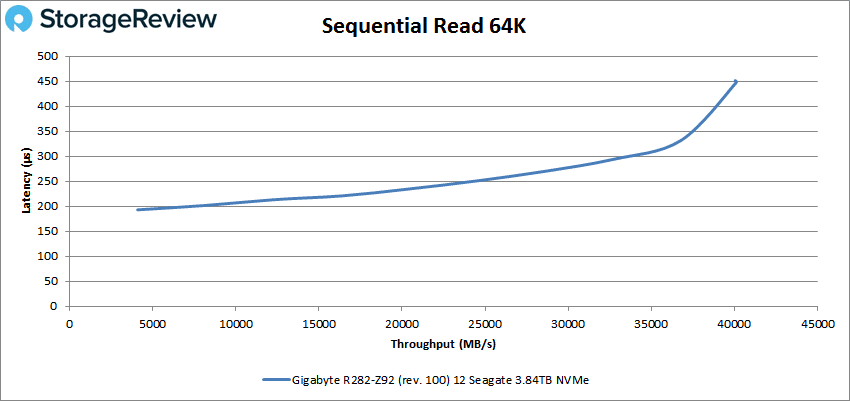

Next up are our sequential workloads, where we looked at 64K. For 64K read, the GIGABYTE server peaked at 640,344 IOPS, or 40GB/s, at a latency of 448µs.

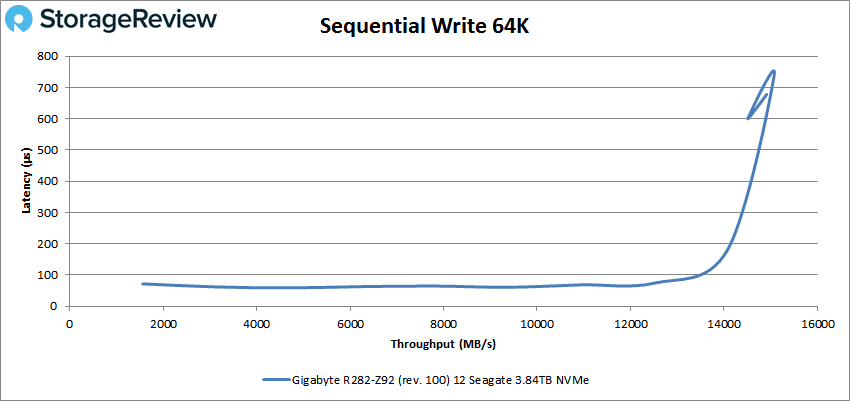

For 64K write, the server started at 65µs and stayed under 1ms until it neared its peak of about 255K IOPS, or 15.9GB/s, at 739µs, before dropping off some.
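The sequential results are quoted both as IOPS and as bandwidth, and the two are related by the transfer size. The sketch below shows the conversion for the 64K read and write peaks. It assumes "64K" means 64KiB (65,536 bytes), which the text does not state, and prints the result in both decimal GB/s and binary GiB/s because the reported figures are rounded and the unit convention is not specified.

```python
KIB = 1024
GIB = 1024 ** 3


def throughput_bytes_per_s(iops: float, block_bytes: int) -> float:
    """Bandwidth implied by an IOPS figure at a fixed transfer size."""
    return iops * block_bytes


BLOCK = 64 * KIB  # assumption: "64K" is 64KiB

peaks = {
    "64K read": 640_344,   # peak IOPS reported above
    "64K write": 255_000,  # "about 255K IOPS" reported above
}

for name, iops in peaks.items():
    bps = throughput_bytes_per_s(iops, BLOCK)
    print(f"{name}: {bps / 1e9:.1f} GB/s (decimal), {bps / GIB:.1f} GiB/s (binary)")
```

Both conversions land close to the rounded bandwidth figures quoted in the text, with the exact value depending on which unit convention the charting tool used.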

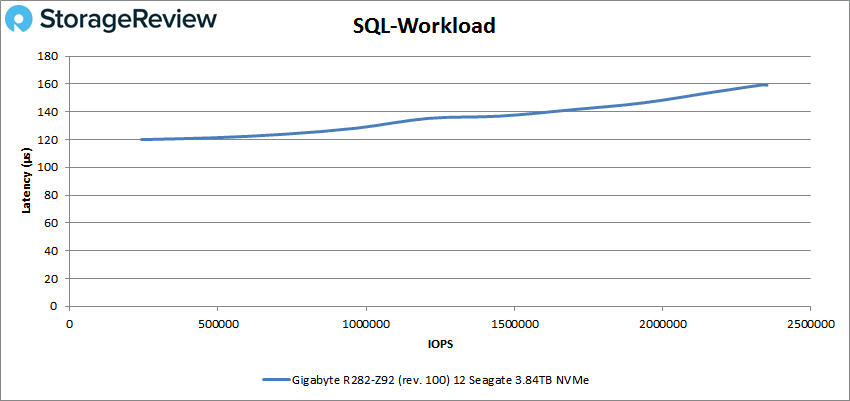

Our next set of tests is our SQL workloads: SQL, SQL 90-10, and SQL 80-20. Starting with SQL, the server peaked at 2,352,525 IOPS at a latency of 159.2µs.

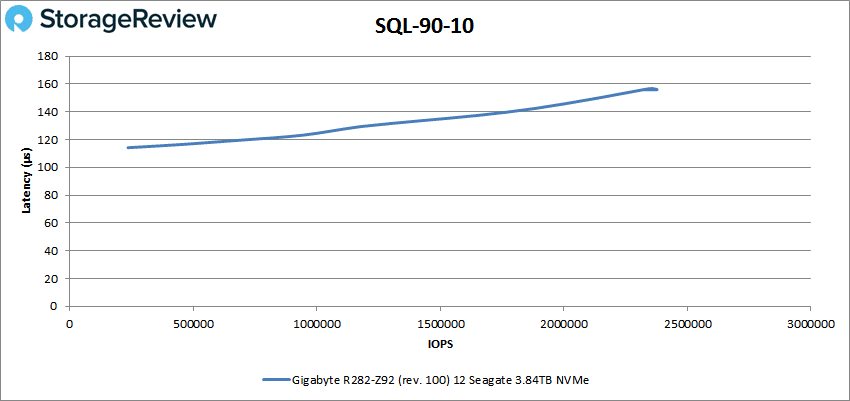

For SQL 90-10 the server peaked at 2,377,576 IOPS at 156µs for latency.

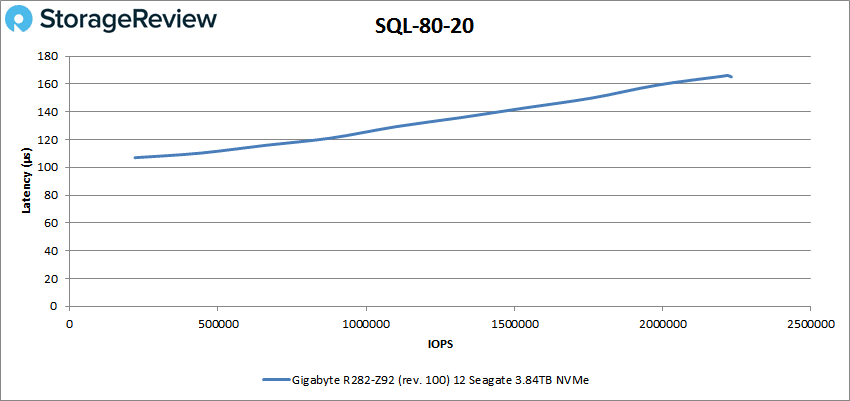

SQL 80-20 saw a peak performance of 2,231,986 IOPS with a latency of 165µs.

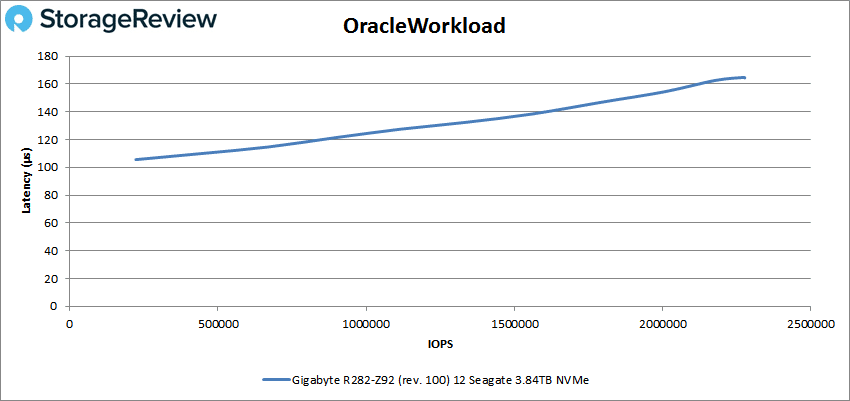

Next up are our Oracle workloads: Oracle, Oracle 90-10, and Oracle 80-20. Starting with Oracle, the GIGABYTE R282-Z92 Server hit a peak of 2,277,224 IOPS at a latency of 164.4µs.

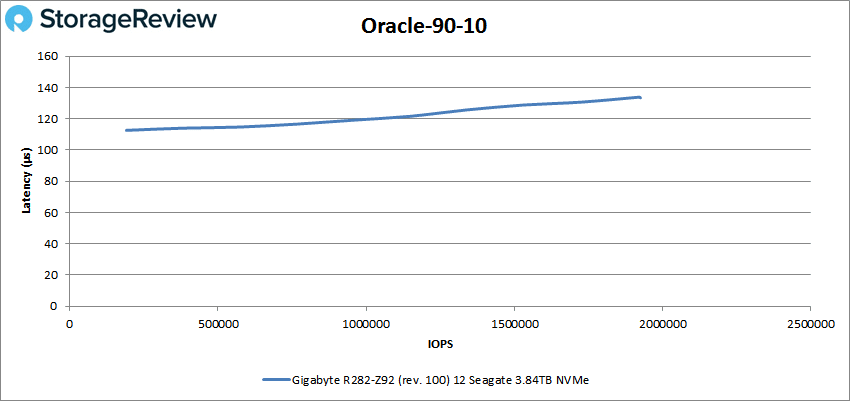

With Oracle 90-10, the server peaked at 1,925,440 IOPS with a latency of 133.4µs, about 20µs higher than where it started.

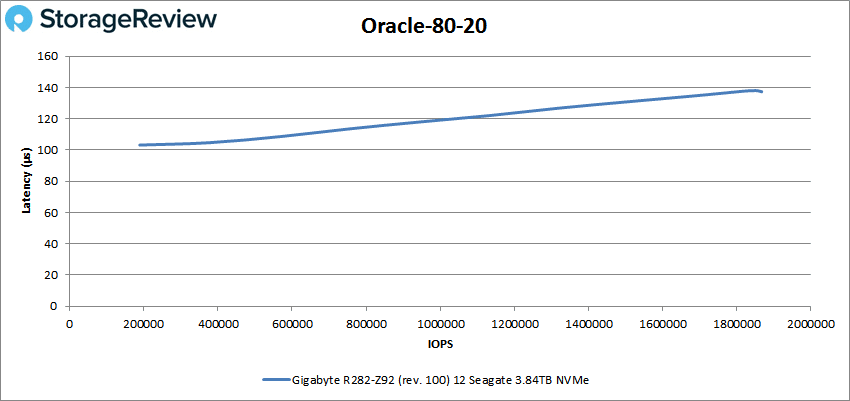

Oracle 80-20 gave the server a peak performance of 1,867,576 IOPS at a latency of 137.3µs.

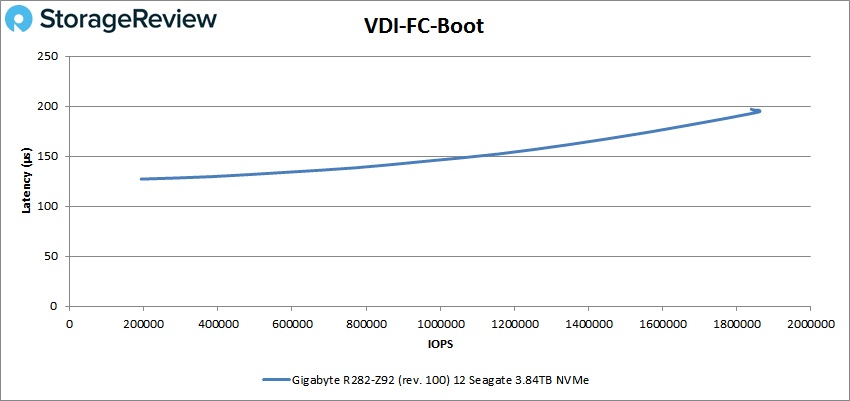

Next, we switched over to our VDI clone tests, Full and Linked. For VDI Full Clone (FC) Boot, the GIGABYTE server hit a peak of 1,862,923 IOPS with a latency of 195µs.

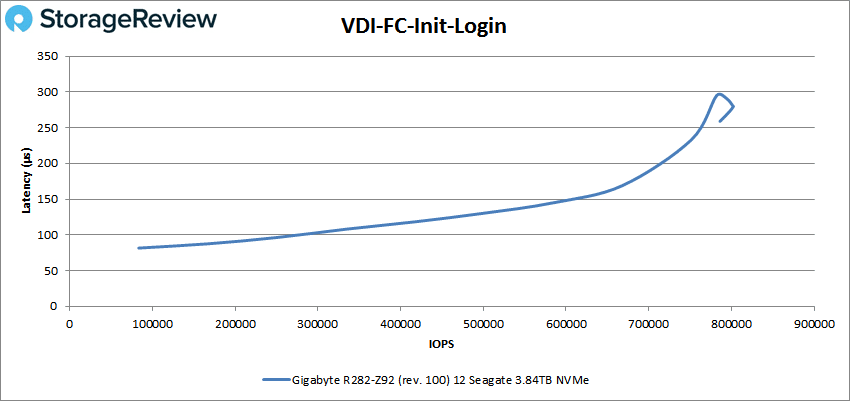

VDI FC Initial Login saw a peak of about 802,373 IOPS at 280µs before dropping off a bit.

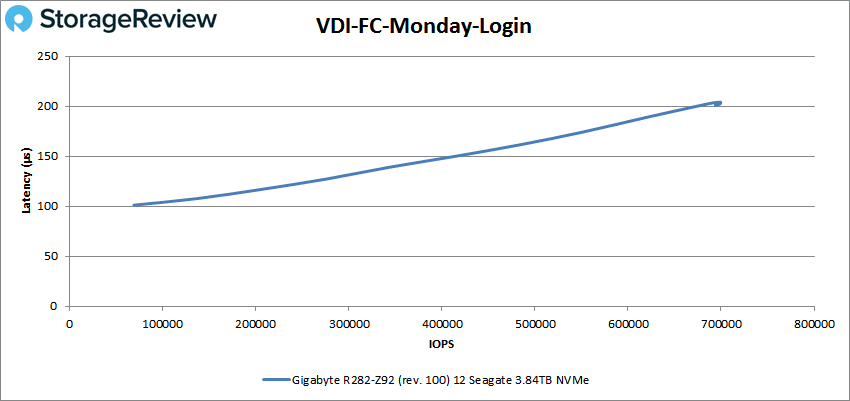

Next up is VDI FC Monday Login giving us a peak of 699,134 IOPS with a latency of 201µs.

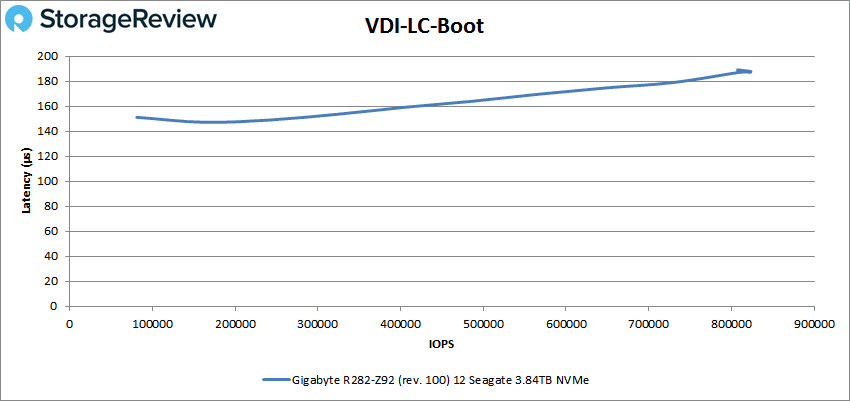

Switching to VDI Linked Clone (LC) Boot, the GIGABYTE hit a peak of 823,245 IOPS and a latency of 187.2µs.

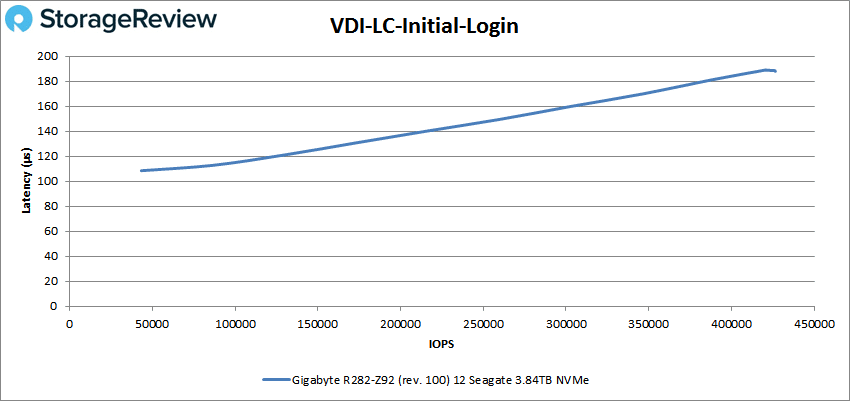

For VDI LC Initial Login the server peaked at 426,528 IOPS with a latency of 188.1µs.

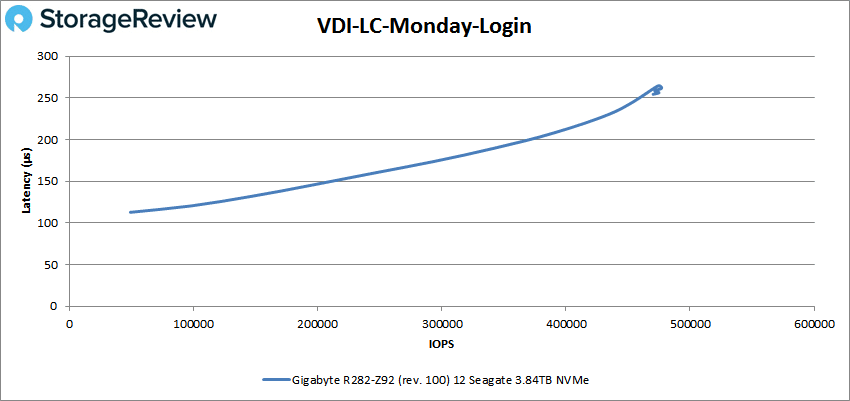

Finally, VDI LC Monday Login had a peak of 476,985 IOPS with a latency of 261.5µs.

Conclusion

The GIGABYTE R282-Z92 is a dual-socket, 2U server that leverages the second-generation AMD EPYC processors and most of the benefits that come with them. Outfitting the server with two 64-core CPUs gives users up to 128 cores total. On top of that, one can leverage up to 4TB of DDR4 RAM with speeds up to 3200MHz (16 DIMMs per CPU for a total of 32, with support for up to 128GB per slot). The EPYC platform lends PCIe 4.0 support for new storage options, FPGAs, GPUs, and OCP 3.0. For capacity storage, the server has 24 2.5” NVMe Gen3 bays across the front, two 2.5” SAS/SATA bays on the back, and an onboard M.2 slot. In terms of expansion, our configuration leaves two PCIe Gen4 slots open, along with the OCP slot. The rest of the PCIe slots are used for the adapter cards that support the NVMe drive bays in front.

For our Application Workload Analysis, we looked at SQL Server average latency and our normal Sysbench tests. For SQL Server, we ran dual AMD EPYC 7702 CPUs with both 8VMs and 4VMs, seeing 1.1ms and 1ms respectively. With Sysbench, we ran a single 7702P with 8VMs and dual 7702s with both 8VMs and 16VMs. In transactional performance, the single 7702P hit 16K TPS, the dual 7702s with 8VMs hit 19K TPS, and the dual 7702s with 16VMs hit 30K TPS. Sysbench average latency saw the single 7702P hit 16.1ms, the dual 7702s with 8VMs hit 13.5ms, and the dual 7702s with 16VMs hit 17.1ms. Sysbench worst-case scenario latency saw the single 7702P hit 31.1ms, the dual 7702s with 8VMs hit 26.5ms, and the dual 7702s with 16VMs hit 32.9ms.

On our VDBench workloads, the GIGABYTE R282-Z92 really impressed. While it didn’t always have sub-millisecond latency, the peak numbers were consistently high. Highlights include 7 million IOPS for 4K read, over 3.4 million IOPS for 4K write, 40GB/s for 64K read, and 15.9GB/s for 64K write. With SQL, we saw 2.4 million IOPS for SQL and SQL 90-10, and 2.2 million IOPS for SQL 80-20. With Oracle, the server hit 2.3 million IOPS, 1.9 million for Oracle 90-10, and 1.9 million for Oracle 80-20. For our VDI clone tests, we saw Full Clone results of 1.9 million IOPS for Boot, 802K IOPS for Initial Login, and 699K IOPS for Monday Login. For Linked Clone, we saw 823K IOPS for Boot, 427K IOPS for Initial Login, and 477K IOPS for Monday Login.

Overall, the GIGABYTE R282-Z92 is a robust platform with impressive storage density, leveraging 24 bays in the front, two in the back, and an onboard M.2 slot for boot. There are two letdowns, primarily from a storage perspective. First, GIGABYTE uses up five of the expansion slots for NVMe cards, which limits expansion options. Second, the front bays don’t support PCIe Gen4. There are still two Gen4 slots open in the back, which allows for a little bit of I/O, storage, or GPU expansion if needed. There aren’t a lot of Gen4 SSD options yet, but Gen4 is the future and a worthy investment, so we’d be happier to see it supported here. Then again, if you really need the latest SSDs, you’re not considering this particular server anyway. That said, the system screams as-is.

What we have in this platform is an amazing amount of computational power in 2U. We saw a really nice performance profile, with just 12 NVMe SSDs, and we only capped CPU in a single workload, meaning there’s more to give. Even so, we hit some nice hero numbers of 7 million 4K IOPS and 40GB/s 64K read. AMD has done a really great job with their second-generation EPYC CPUs and this platform does well to harness those 128 cores.
