Hewlett Packard Enterprise’s (HPE) Apollo is a family of role-based converged-infrastructure compute and storage solutions at the high-performance, high-density end of the enterprise spectrum. Apollo 4500 series servers use a 4U chassis supporting up to 68 3.5″ HDDs, with up to three internal ProLiant Gen9 servers powered by Xeon E5-2600 v3 or v4 processors, each with up to 1,024GB of DDR4 memory, up to four PCIe slots, and FlexibleLOM. From a storage perspective, a fully populated 68-drive chassis can hold more than 500TB with 8TB disks, or just under 700TB with 10TB models. The systems are purpose-built for rapidly expanding big data, analytics, and HPC storage workloads.

Apollo 4500 is descended from HPE’s SL4540 and is oriented toward object storage and enterprise analytics. The Apollo family offers a wide range of configurations, and Apollo 4530 servers like those in our lab dedicate more chassis space to compute resources. Thanks to that compute density, a full 42U rack holds ten 4530 chassis, or 30 servers, and each server accepts up to two Intel Xeon E5-2600 v4 series processors with 6 to 22 cores and TDPs of up to 145W.

HPE already offers a wide range of SDS solutions that most enterprises are familiar with. The Apollo approach, though, is fundamentally different from how most enterprises think about SDS deployments, which are often perceived as the domain of primary storage. The Apollo platform takes a capacity-centric approach to SDS, even with the server-dense 4530 chassis. This gives software partners a hardened yet distinctive platform built specifically for high-capacity, scale-out workloads. For its part, the Apollo 4530 is engineered to fill the analytics compute niche in the Apollo family, and its three-server chassis creates opportunities for scalability and parallelism within a relatively small amount of rack space. For example, Hadoop, which keeps three copies of each data block by default, benefits from being able to process those copies on separate nodes simultaneously. NoSQL-based analytics is another target for this configuration.
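To put the three-copy point in concrete terms, here is a minimal Python sketch of HDFS storage arithmetic. It assumes the Hadoop 2.x defaults of a 128 MiB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); a given cluster may override either, and which DataNodes actually receive each replica is decided by the NameNode's placement policy, not by this code. The function name `hdfs_footprint` is our own.

```python
# Hadoop 2.x defaults (assumed): dfs.blocksize = 128 MiB, dfs.replication = 3.
# A cluster may override both in hdfs-site.xml.
BLOCK_SIZE_BYTES = 128 * 1024 * 1024
REPLICATION = 3


def hdfs_footprint(file_size_bytes: int,
                   block_size: int = BLOCK_SIZE_BYTES,
                   replication: int = REPLICATION) -> tuple:
    """Return (blocks, block replicas, raw bytes consumed) for one file."""
    # Integer ceiling division: a partial final block still counts as a block.
    blocks = (file_size_bytes + block_size - 1) // block_size
    replicas = blocks * replication
    # The final block only occupies the bytes it actually holds on disk,
    # so raw usage is simply the file size times the replication factor.
    raw_bytes = file_size_bytes * replication
    return blocks, replicas, raw_bytes


if __name__ == "__main__":
    one_tib = 1024 ** 4
    blocks, replicas, raw = hdfs_footprint(one_tib)
    print(blocks, replicas, raw // one_tib)  # 8192 24576 3
```

For a 1TiB file, this works out to 8,192 blocks, 24,576 block replicas, and 3TiB of raw disk, which is why replica count weighs so heavily in sizing a Hadoop cluster.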

To get some idea of the physical differences between models in the Apollo line, the 4510 has a single node, the 4520 has two nodes, and the 4530 has three nodes. Beyond the number of nodes visible from the outside, the internal layout of each Apollo model differs to match the number of nodes the chassis supports. The 4510 offers 60 internal drive bays attached to one node, the 4520 has 46 drive bays (23 per node), and the 4530 has 45 drive bays (15 per node). So for peak storage capacity, the 4510 fits the most drives, while the three-node 4530 offers the most failover resiliency and simultaneous access. All of the hard drives drop in through the top. The 4520 has expander modules for shared storage between its nodes, while the 4530 has three self-contained, non-shared servers with 15 drives each. The 4510 supports more controller options and more drives overall than the 4520 or 4530, maximizing disk density and making it the best choice for lowering the cost of scale-out object storage deployments. The 4510 can also be configured with an add-on that provides 8 drive bays beyond the 60 inside.
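The bay counts above translate into raw capacity as follows. This sketch simply multiplies the drive bays described in this review by drive size, giving raw TB before any RAID, replication, or filesystem overhead. The `4510+8` key is our own shorthand for the 4510 with its 8-bay add-on, and the function name is hypothetical.

```python
# Drive-bay counts per model, as described in this review.
# "4510+8" is shorthand for the 4510 with the 8-bay add-on.
MODELS = {
    "4510": {"nodes": 1, "bays": 60},
    "4510+8": {"nodes": 1, "bays": 68},
    "4520": {"nodes": 2, "bays": 46},
    "4530": {"nodes": 3, "bays": 45},
}


def raw_capacity_tb(model: str, drive_tb: int) -> dict:
    """Raw chassis and per-node capacity in TB (no RAID or replication)."""
    spec = MODELS[model]
    bays_per_node = spec["bays"] // spec["nodes"]  # bays split evenly per node
    return {
        "bays_per_node": bays_per_node,
        "chassis_tb": spec["bays"] * drive_tb,
        "per_node_tb": bays_per_node * drive_tb,
    }


if __name__ == "__main__":
    for name in MODELS:
        for size in (8, 10):
            print(name, f"{size}TB drives:", raw_capacity_tb(name, size))
```

With 10TB drives, the 68-bay 4510 configuration reaches 680TB per chassis, while the 4530 delivers 450TB split evenly into 150TB per node.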

All Apollo 4000 systems incorporate an HPE Smart Array card with integrated FIPS 140-2 secure encryption that is transparent to the operating system. Data on any drive can be encrypted, and keys can be managed locally or via a key management system for environments and applications that require it. Apollo also supports a deep portfolio of management utilities, highlighted by iLO for the integrated ProLiant servers and the Cluster Management Utility (CMU) for provisioning, managing, and monitoring the cluster.

HPE Apollo 4530 Specifications

- Form factor: 4U shared infrastructure chassis

- Server: Up to 3 servers per chassis

- Storage type: Up to 15 LFF hot-plug SAS/SATA/SSD per server; Up to 45 drives per chassis

- Storage capacity: Up to 150TB per server (15 x 10TB LFF HDDs); Up to 4.5PB per 42U rack (30 servers with 10TB HDDs)

- Storage controller: HPE Dynamic Smart Array b140i; Integrated HPE Smart Array P244br/HPE H244br controllers; plus additional Smart Array or Smart HBA controller options

- Processor family: Intel Xeon E5-2600 v3 or v4 Series

- Processor number: One or two per server

- Processor cores available: 6/8/10/12/14/16/18/20/22

- Processor frequency: 1.6GHz to 2.6GHz

- Memory: HPE SmartMemory; 16 DIMM slots; Up to 1,024GB DDR4 memory at up to 2,400 MHz

- Networking: 2 x 1 Gb Ethernet plus FlexibleLOM and PCIe options

- Expansion slots: Up to four PCIe Slots + FlexibleLOM support

- Management: HPE iLO 4

- Recommended for Management at scale: HPE Apollo Platform Manager; HPE Insight Cluster Management Utility

- System fans: Five hot-plug fan modules (redundant)
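The per-server and per-rack capacity figures in the list above can be reproduced with a few lines of arithmetic. This is a sketch under simple assumptions: ten 4U chassis in a 42U rack (the leftover 2U is ignored here, though in practice it often goes to switching), three servers per chassis, 15 LFF drives per server, and decimal units (1PB = 1,000TB) as drive vendors use. The constant and function names are illustrative.

```python
# Assumptions taken from the spec list: 4U chassis, 3 servers per chassis,
# 15 LFF drives per server. Units are decimal (1PB = 1,000TB).
RACK_UNITS = 42
CHASSIS_UNITS = 4
SERVERS_PER_CHASSIS = 3
DRIVES_PER_SERVER = 15


def rack_totals(drive_tb: int = 10) -> dict:
    """Return chassis, server, and raw capacity totals for one full rack."""
    chassis = RACK_UNITS // CHASSIS_UNITS        # 10 chassis; 2U left unused here
    servers = chassis * SERVERS_PER_CHASSIS      # 30 servers
    per_server_tb = DRIVES_PER_SERVER * drive_tb
    return {
        "chassis": chassis,
        "servers": servers,
        "per_server_tb": per_server_tb,
        "rack_pb": servers * per_server_tb / 1000,
    }


print(rack_totals())  # {'chassis': 10, 'servers': 30, 'per_server_tb': 150, 'rack_pb': 4.5}
```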

Build and Design

The Apollo 4530 system features a 4U chassis designed for maximum density. The Apollo 4530 incorporates three ProLiant Gen9 servers into this chassis, and each server can be outfitted with up to 15 hot-plug SAS or SATA drives. The densest Apollo 4530 storage configuration available uses 10TB LFF drives to achieve a total capacity of 4.5PB in a 42U rack full of 4530 systems.

The front of the system provides access to the three internal server nodes. Up to two SFF drives per server node can be accessed from the front, and each server features the expected range of LED indicators. All of the major components of an Apollo 4530 system support hot plug maintenance, including the server nodes as well as their drives and power supplies.

The rear of the Apollo 4530 provides access to each server node’s I/O modules and PCIe slots across the top. The bottom left of the chassis incorporates the HPE-APM rack management port, an iLO4 port, and an HPE Tools Service port. Bays for up to four power supplies round out the remaining space on the rear of the chassis.

Management

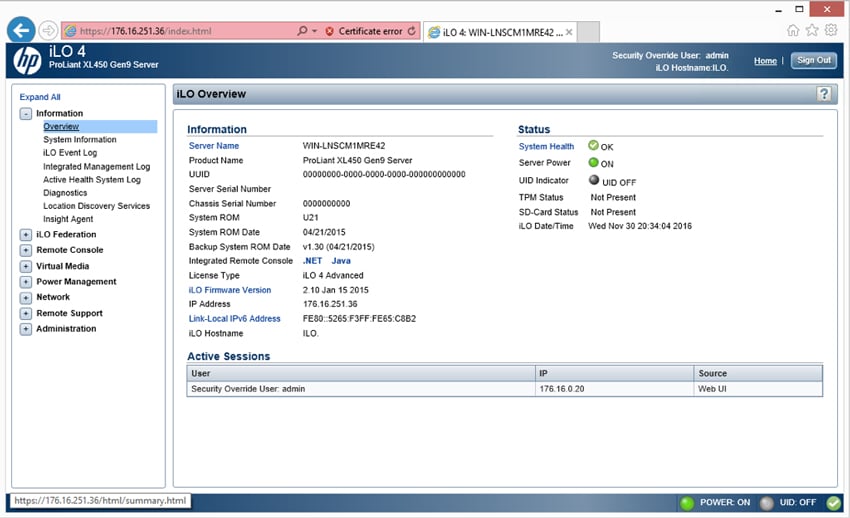

Apollo 4500 systems use HPE’s Integrated Lights-Out 4 (iLO4) for agentless monitoring, management, and fault resolution. iLO4 incorporates a remote console, power control, virtual media management, federation discovery and health, and a RESTful API. A deeper review of iLO4 and the ProLiant Gen9 management experience can be found in our HPE iLO4 review and the HPE ProLiant DL360 Gen9 review.
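As a rough illustration of the RESTful API, the sketch below reads basic system health from an iLO4 management port using only the Python standard library. It assumes iLO4 firmware recent enough to expose the DMTF Redfish service (2.30 or later, to our understanding) and the `/redfish/v1/Systems/1/` path used for a single ProLiant system. `PowerState`, `Status.Health`, `ProcessorSummary`, and `MemorySummary` are standard Redfish ComputerSystem properties rather than anything Apollo-specific; the function names, host name, and credentials are our own placeholders.

```python
import base64
import json
import ssl
import urllib.request


def fetch_system(host: str, user: str, password: str, verify_tls: bool = True) -> dict:
    """GET the Redfish ComputerSystem resource from an iLO management port."""
    url = f"https://{host}/redfish/v1/Systems/1/"  # assumed single-system path
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    ctx = ssl.create_default_context()
    if not verify_tls:
        # iLO ships with a self-signed certificate; only skip checks on a trusted lab network.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def summarize(system: dict) -> str:
    """Condense standard Redfish ComputerSystem fields into one line."""
    health = system.get("Status", {}).get("Health", "Unknown")
    cpus = system.get("ProcessorSummary", {}).get("Count", "?")
    mem = system.get("MemorySummary", {}).get("TotalSystemMemoryGiB", "?")
    return (f"{system.get('Model', '?')}: power={system.get('PowerState', '?')}, "
            f"health={health}, cpus={cpus}, mem={mem}GiB")
```

On a lab network with iLO's default self-signed certificate, a call might look like `print(summarize(fetch_system("ilo-node1.example.lab", "admin", "password", verify_tls=False)))`. In production, installing a trusted certificate and leaving verification on is the better choice.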

iLO4 provides three remote console options: a .Net interface, a Java interface, or a mobile app. The core iLO4 interface uses a navigational tree along the left side.

iLO Federation Groups facilitate the monitoring and management of groups of servers, including firmware upgrades and the installation of license keys. iLO is offered with three licensing schemes for Gen9 servers like those used in the Apollo 4530. An “iLO Standard” license offers remote management through the Integrated Remote Console (IRC), as well as virtual media, and provides core iLO federation functionality. The “iLO Advanced” license includes full remote functionality and all iLO Federation features, such as group firmware updates, group virtual media, group power control, group power capping, and group license activation. There is also an “iLO Scale-Out” license oriented toward scale-out deployments.

As Gen9 platforms, Apollo 4500 systems can use HPE’s Insight Control management software. HPE Insight Control automates deployment and migration, and provides integration tools for VM management offerings from VMware, Microsoft, Citrix, and Xen.

The HPE Active Health System (AHS) logs health, configuration, and other telemetry from iLO4, system ROM, the Agentless Management Service, network interface cards, and other components. It can be used to automatically send telemetry to HPE’s Insight Online direct connect or Insight Remote Support 7.x programs.

The iLO mobile app for iOS and Android can interact with the iLO processor to provide access to system status, logs, scripting, and virtual media. HPE’s RESTful Interface Tool can script configuration for rapid deployment of ProLiant servers running heterogeneous operating systems, and Gen9 servers like those in the Apollo 4500 support management via HPE Virtual Connect Enterprise Manager 7.4.

HPE OneView

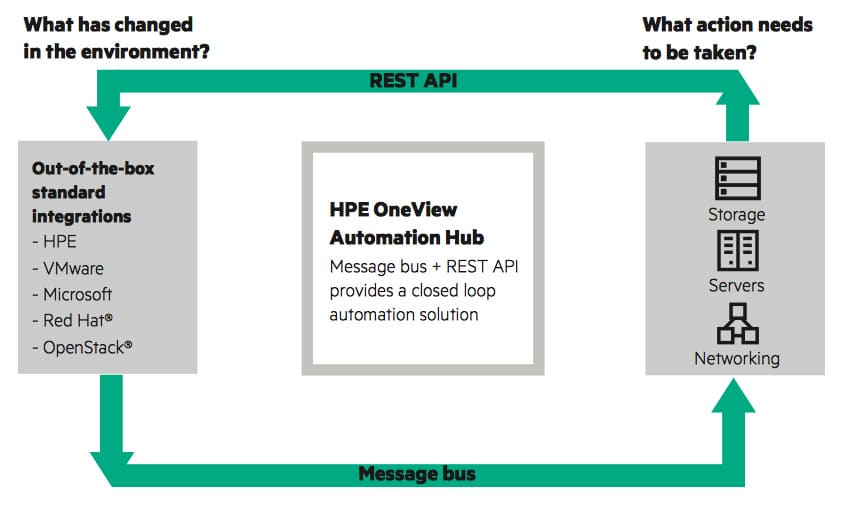

Another management option for Apollo is HPE OneView, an infrastructure automation engine with built-in software intelligence. The idea behind OneView is to simplify provisioning and lifecycle management across compute, storage, and fabric through automation. According to HPE, infrastructure can be configured, monitored, updated, and repurposed with a single line of code, freeing IT staff to address other changing needs. OneView is aimed more at general enterprise environments and can scale up to 640 servers.

IT can deploy infrastructure faster by using the templates included in OneView. These templates let users model settings in software, such as RAID configuration, BIOS settings, firmware baseline, network uplinks and downlinks, and SAN storage volumes and zoning. OneView also aids deployment by automating driver and firmware updates at scale: admins can apply minor updates with no downtime, and major updates with reduced downtime. OneView can also take hardware failures reported by iLO and log them with HPE support, which speeds resolution of the issue.
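The template idea is easiest to see in miniature. The following Python sketch is a hypothetical model, not OneView's REST schema or SDK: a single template captures RAID level, BIOS settings, firmware baseline, and networks once, and identical per-server profiles are stamped out from it. All class, field, and server names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileTemplate:
    """Hypothetical stand-in for a OneView server profile template."""
    name: str
    raid_level: str
    bios_settings: dict = field(default_factory=dict)
    firmware_baseline: str = ""
    networks: tuple = ()


def profiles_from_template(template: ProfileTemplate, servers: list) -> list:
    """Stamp out one profile per server; only the name and target differ."""
    return [
        {
            "name": f"{template.name}-{server}",
            "server": server,
            "template": template.name,
            "raid_level": template.raid_level,
            # Copy mutable settings so editing one profile cannot alter another.
            "bios_settings": dict(template.bios_settings),
            "firmware_baseline": template.firmware_baseline,
            "networks": list(template.networks),
        }
        for server in servers
    ]
```

Changing the template and regenerating the profiles is, conceptually, how one edit can ripple consistently across many servers.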

HPE Insight Cluster Management Utility

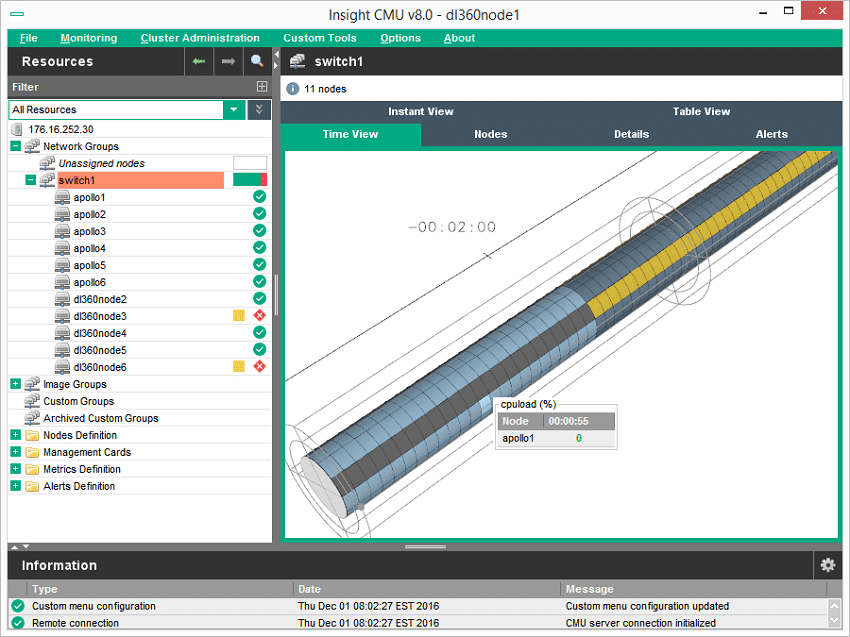

Another management option, depending on users’ needs, is HPE Insight Cluster Management Utility (CMU). CMU is designed for cluster environments and provides monitoring, node metrics, and remote management. It is well suited to organizations that must manage hundreds to thousands of nodes. CMU supports Red Hat Linux, SUSE Linux Enterprise Server (SLES), and multiple community Linux distributions, and offers both a CLI and a GUI for monitoring and management. CMU is geared primarily toward HPC and Big Data and can scale up to 10,000 servers in a cluster configuration.

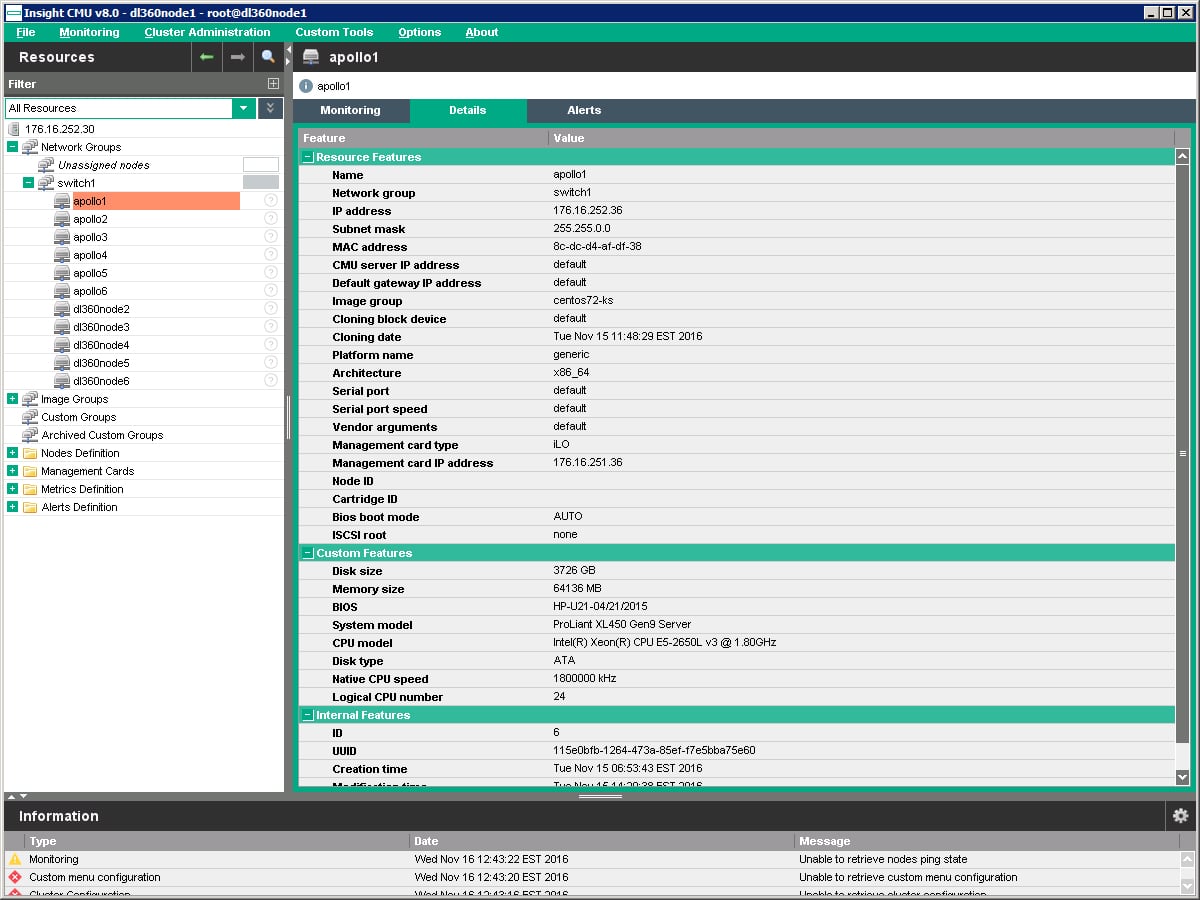

With the GUI, we can go into the monitoring section under details and select one of the Apollos in our cluster. This view exposes a wide range of features, including resource features, custom features, and internal features.

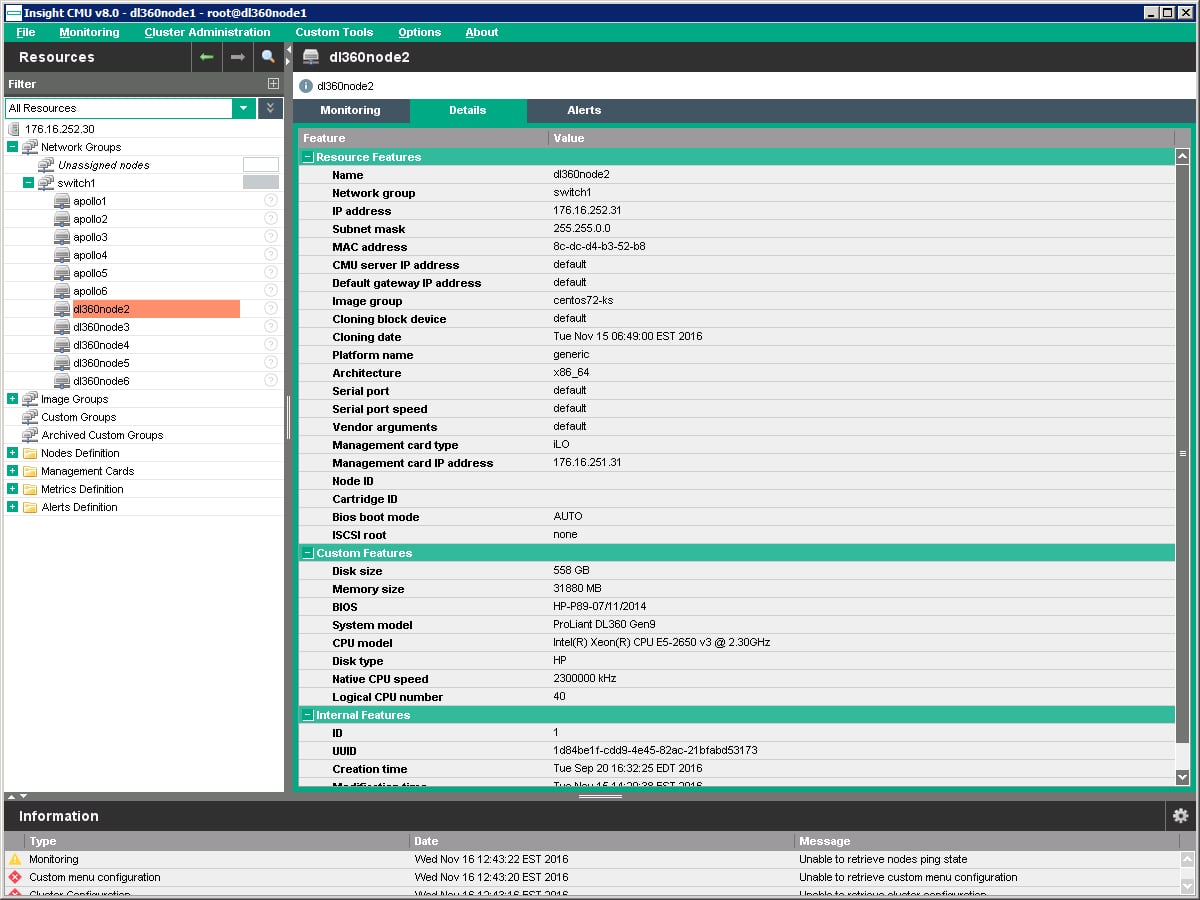

We can also drill down into individual nodes for a closer look at their features.

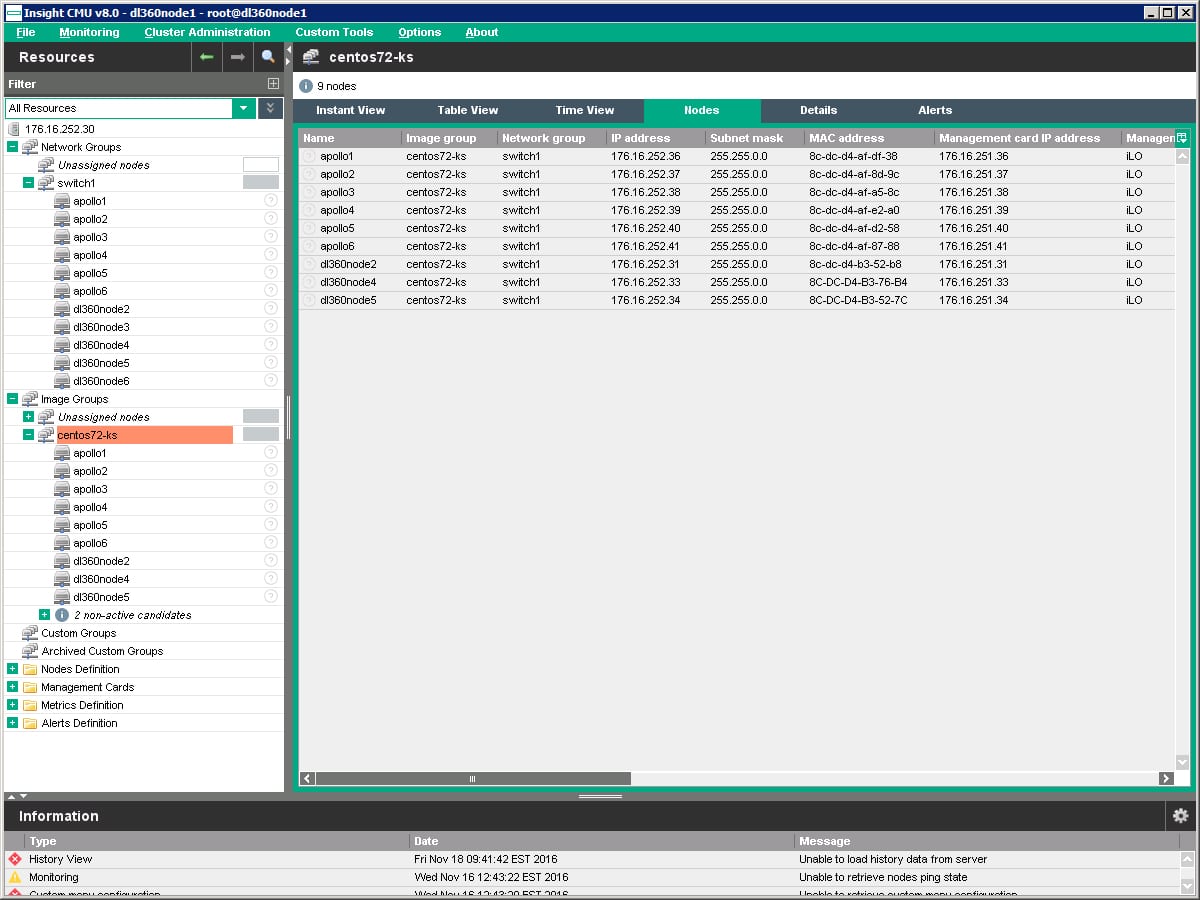

We were able to look at a specific image group to see which nodes belong to that group, and from which appliances.

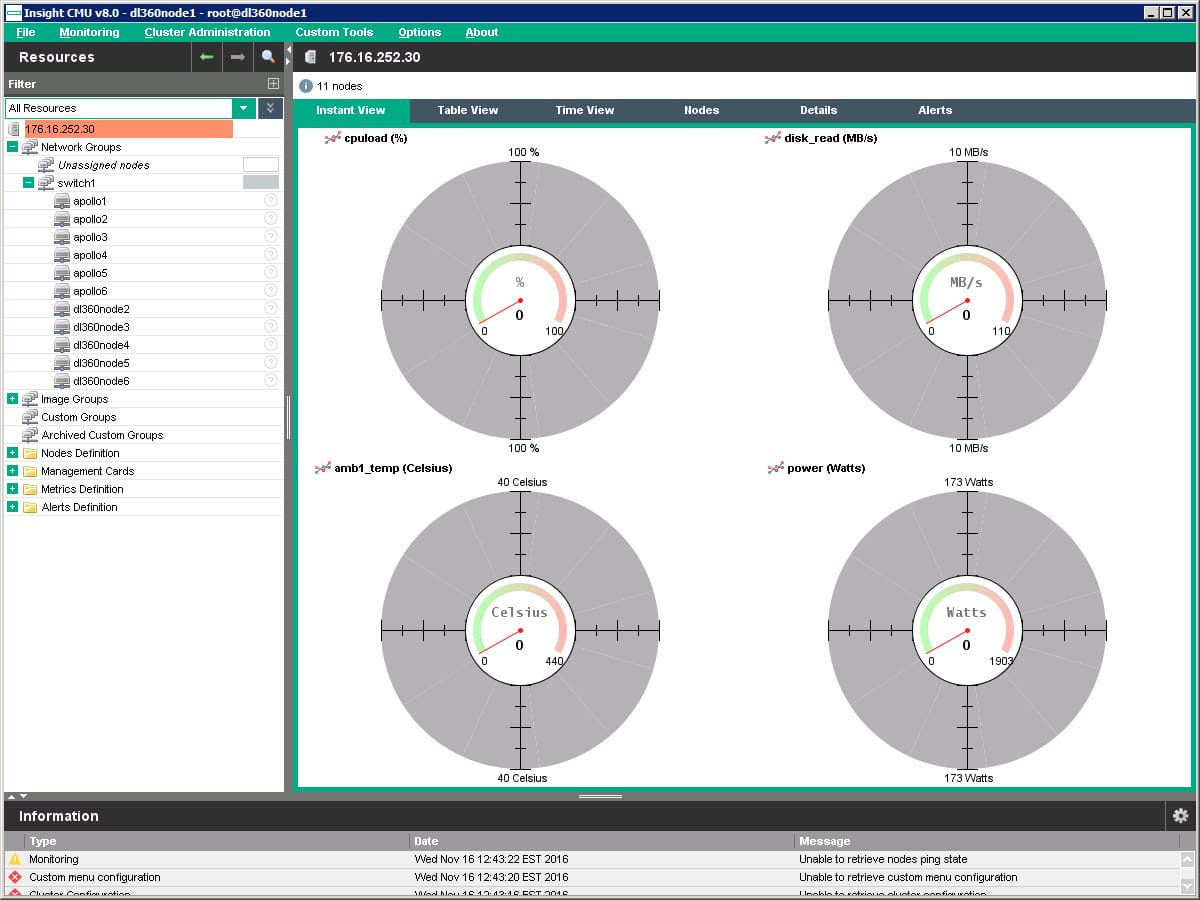

With Instant View, we can drill in and see specific cluster metrics such as CPU load percentage, disk read speed, temperature, and power usage. The cluster was powered down when we captured the screenshot.
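CMU’s own collectors and GUI do this work for you, but the roll-up behind a cluster-wide view is simple to sketch. The snippet below is a hypothetical illustration, not CMU’s API: given per-node samples keyed by metric name (the metric names are our own), it reports the minimum, mean, and maximum across nodes, skipping nodes that did not report a given metric.

```python
from statistics import mean


def cluster_view(samples: dict) -> dict:
    """Aggregate {node: {metric: value}} into {metric: {min, mean, max}}."""
    # Collect every metric name reported by at least one node.
    metrics = sorted({metric for node in samples.values() for metric in node})
    view = {}
    for metric in metrics:
        # Skip nodes that did not report this metric (e.g. a powered-off node).
        values = [node[metric] for node in samples.values() if metric in node]
        view[metric] = {"min": min(values), "mean": mean(values), "max": max(values)}
    return view
```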

HPE Apollo Use Cases

Given the hardware’s unique form factor, HPE has partnered with several software companies to develop best practices and deployment guides for a range of use cases. We’ve highlighted a few of them below to give a better picture of how Apollo can be leveraged.

Scality RING and the Apollo 4530

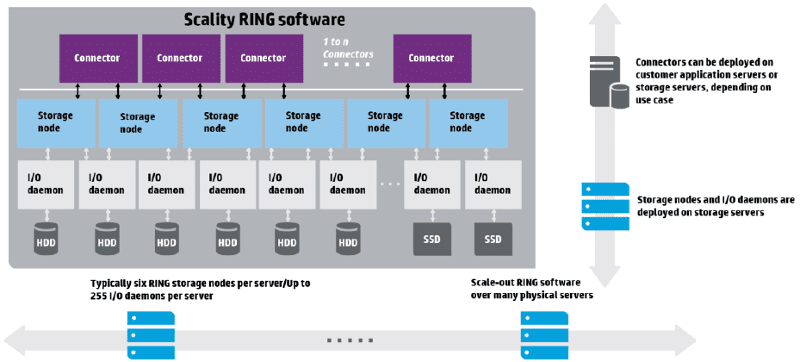

HPE describes the Apollo 4500 series as the building blocks for enterprise-scale object storage. Given that philosophy, we are particularly interested in considering the Apollo 4530 in light of HPE Scalable Object Storage with Scality RING. Scality RING can be deployed in a minimum configuration of a single six-node cluster and can scale to thousands of server nodes at petabyte scale.

In the storage layer, RING provides abstraction for the underlying hardware, as well as services for data access, data protection, and management. The middle layer of the RING architecture is a distributed virtual file system with data protection mechanisms and management services that support RING’s distributed key-value object store. At the top layer, RING provides application data services via a layer of “connector servers.” These can be physical gateways or VMs running directly on the storage nodes.
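To illustrate how a distributed key-value store can spread objects across servers without a central lookup table, here is a generic consistent-hashing ring in Python. It is a textbook technique in the same family as ring-style peer-to-peer stores, not Scality’s actual key format, placement, or data protection logic. The six node names mirror the minimum cluster size mentioned above, and the SHA-1 hash and 64 virtual nodes per server are arbitrary assumptions.

```python
import bisect
import hashlib


class HashRing:
    """Generic consistent-hashing ring. A key belongs to the first node
    position clockwise from the key's hash; replicas go to the next
    distinct nodes further around the ring."""

    def __init__(self, nodes: list, vnodes: int = 64):
        # Each physical node appears at `vnodes` positions to even out load.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._positions = [pos for pos, _ in self._ring]
        self._node_count = len(set(nodes))

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha1(value.encode()).digest(), "big")

    def nodes_for(self, key: str, replicas: int = 3) -> list:
        """Return `replicas` distinct nodes responsible for `key`."""
        if replicas > self._node_count:
            raise ValueError("more replicas requested than nodes in the ring")
        start = bisect.bisect_right(self._positions, self._hash(key))
        chosen = []
        for offset in range(len(self._ring)):
            _, node = self._ring[(start + offset) % len(self._ring)]  # wrap around
            if node not in chosen:
                chosen.append(node)
                if len(chosen) == replicas:
                    break
        return chosen


ring = HashRing([f"node{i}" for i in range(1, 7)])
print(ring.nodes_for("bucket/object-42"))
```

Adding a seventh node reassigns only a fraction of the keys rather than reshuffling everything, which is the property that lets ring-based stores grow incrementally.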

Scality refers to RING’s web GUI as the supervisor, which provides a dashboard as well as submenus to manage the various layers of the RING architecture. The supervisor communicates with the “sagentd” management agent installed on each server. A scriptable console interface named RingSH is also available for management and monitoring. RING provides an SNMP-compliant MIB to integrate with third-party management tools and manage alerts.

Because RING does not require a custom Linux kernel, the system’s scaling and upgrade choices are hardware agnostic, limited only by the Linux distribution itself. Another reason RING is making inroads with the likes of HPE and Dell is that it includes tools that provide storage services for legacy systems and protocols, so data from legacy applications can be consolidated onto new storage infrastructure.

Block Storage Reference Architectures: Ceph

HPE also provides reference architectures for object storage solutions powered by Ceph. HPE’s use case for Ceph is offloading cold data from existing storage infrastructure. Ceph can scale to the hundred-petabyte range and can be configured to provide low-performance block storage in addition to its object store. HPE recommends Apollo 4530 nodes as low-performance or ephemeral block storage hosts, and the Apollo 4510 as object storage hosts. Ceph can be put to work in a variety of ways, and we recently reviewed SUSE Enterprise Storage, also running on Apollo servers.
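Replica count drives how much raw capacity is actually usable. Ceph pools are either replicated (three copies by default) or erasure-coded with k data chunks and m coding chunks per object. The sketch below compares the two; the 4+2 profile is an example, not an HPE recommendation, and the result ignores Ceph’s near-full thresholds and filesystem overhead, so real usable space will be lower. The function name is our own.

```python
def usable_tb(raw_tb: float, scheme: str, *, size: int = 3, k: int = 4, m: int = 2) -> float:
    """Usable capacity before filesystem overhead and free-space headroom."""
    if scheme == "replicated":
        return raw_tb / size          # every object is stored `size` times
    if scheme == "erasure":
        return raw_tb * k / (k + m)   # k data chunks plus m coding chunks per object
    raise ValueError(f"unknown scheme: {scheme}")


print(usable_tb(450, "replicated"))  # 150.0
print(usable_tb(450, "erasure"))     # 300.0
```

For 450TB of raw disk, three-way replication leaves 150TB usable and a 4+2 erasure-code profile leaves 300TB. The trade-off is placement: with Ceph’s default host-level failure domain, a size-3 replicated pool needs at least three OSD hosts, and a 4+2 profile needs at least six.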

Hadoop Reference Architectures: Cloudera and Hortonworks

HPE provides reference architectures for two Hadoop solutions: Cloudera and Hortonworks. Both reference architectures are verified for dense, single-rack deployments as well as for larger deployments.

HPE verified this configuration with Cloudera 5.3 and Cloudera Manager 5, but HPE and Cloudera also support the use of other CDH 5.x releases with Apollo 4000 hardware. Customers using Cloudera at scale can also make use of Cloudera Enterprise, a subscription-based offering for cluster provisioning and management including environments which require measurable SLAs or chargebacks. The Apollo 4000 reference architecture for Hortonworks is based on Hortonworks Data Platform 2.2, and is also valid for HDP 2.x releases.

HPE also brings some of its own software to the table for these Hadoop reference architectures. HPE Vertica provides analytic processing for structured data such as call detail records, financial tick streams and parsed weblog data. Vertica can be used on an as-needed basis with these two Hadoop distributions to handle high-speed loads and ad-hoc queries over relational data.

IDOL for Hadoop uses HPE’s IDOL 10 analytics platform to provide unstructured storage functionality within Hadoop. For example, IDOL KeyView extracts text, metadata, and security information from data in order to identify sensitive fields, such as Social Security and credit card numbers, and prevent sensitive information from unintentionally being stored in Hadoop.
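The general technique behind spotting card numbers is well known, even though KeyView’s own implementation is not described here. The Python sketch below is our illustration, not KeyView’s method: it finds 13-to-16-digit runs, keeps only those that pass the Luhn checksum used by payment card numbers, and flags strings shaped like US Social Security numbers. Real scanners add issuer-prefix checks, context rules, and many more formats; the pattern and function names are hypothetical.

```python
import re

# Illustrative patterns only: 13-16 digits with optional space/dash separators,
# and SSN-shaped strings (AAA-GG-SSSS).
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 13:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9  # same as summing the two digits of the product
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> dict:
    """Return candidate card numbers (Luhn-checked) and SSN-shaped strings."""
    cards = [m.group() for m in CARD_RE.finditer(text) if luhn_valid(m.group())]
    return {"card_numbers": cards, "ssns": SSN_RE.findall(text)}
```

Running `find_sensitive` on a record before it is written to HDFS, and masking or rejecting any hits, is one way to approximate the "keep it out of Hadoop" goal described above.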

Conclusion

The HPE Apollo 4500 Series is a remarkable platform geared specifically to popular large-scale software platforms, including the likes of Cloudera, Scality, and Ceph. At its core, the Apollo 4500 combines the benefits of JBOD storage with local compute resources. What makes the 4500 Series unique, though, is that while other vendors might offer a single server with vast storage resources or an HA JBOD with two servers, HPE covers that range and takes it a step further with the 4510 (one node), 4520 (two nodes), and 4530 (three nodes) models. This gives object storage vendors exactly what they want, depending on how they configure their platforms. The design comes down to object-store replica requirements: users build around one, two, or three replicas depending on the level of storage resiliency they need.

The Apollo 4530 we reviewed combines three ProLiant Gen9 servers in one chassis, with 15 3.5″ HDDs allocated to each node. This is a convenient and industry-leading configuration for parallelism and an impressive advancement in compute density for HPE’s enterprise lineup. Fitting three nodes with up to 450TB of storage into a single chassis is an impressive bit of hardware engineering, but more importantly, it lets the system draw on the range of management and integration solutions available to current Gen9 ProLiant servers. These include the iLO4 agentless management environment, OneView for more typical enterprise server management, and HPE’s Insight Cluster Management Utility for managing servers at scale in HPC and Big Data environments with node counts up to 10,000. This combination of management software makes the Apollo platform easy to deploy and one of the most robust solutions available.

Software-defined storage isn’t just for mainstream enterprise application workloads. As HPE has shown with Apollo, and the 4530 specifically, unique hardware engineering can unlock tremendous potential on the software side when it comes to handling massive data sets. As more organizations bring HPC and Big Data into the enterprise, traditional infrastructure is unlikely to be the best way to bring these initiatives to fruition. More importantly, for these new programs to be successful, purpose-built hardware that is “enterprise-grade” will be required. Whitebox cost effectiveness is great in some places, but for the business HPE is targeting, the need for the hardware to fall in line with existing server management and support protocols is critical. Whether that’s iLO, OneView, CMU, or a combination of these tools coupled with on-site hardware support, the Apollo 4530 is a great solution for bringing data mining and analytics projects in-house on a platform that’s been hardened for these specific tasks.
