The Inspur NF5266M6 is a 2U dual-socket rackmount server designed for high-density storage, supporting up to 26 3.5-inch drives—24 in the front with a unique tray-load system and an additional 2 in the back. It’s not lacking for performance, thanks to Intel’s “Ice Lake” Xeon processors, and offers ample expansion with up to seven PCIe slots, including OCP 3.0. Overall, we found the NF5266M6 a versatile storage server that checks the boxes that buyers in this segment require.

Inspur NF5266M6 Specifications

The NF5266M6 is one of Inspur’s six offerings in its rackmount M6 server family. In addition to 24 3.5-inch hot-swappable front bays, it also supports a variety of drive options around the back, including a bank of E1.S ruler SSDs. Its seven PCIe slots include up to two OCP 3.0 slots. This server supports two third-generation Intel “Ice Lake” Xeon Scalable CPUs and 16 DIMM slots (eight per CPU) for up to 2TB of RAM.

The NF5266M6’s competition includes the HPE Apollo 4200 Gen10, which also offers 24 hot-swappable 3.5-inch bays and 16 DIMM slots. For the most part, there are few systems that offer this much 3.5″ density within a 2U form factor.

For system management, the NF5266M6 runs Intelligent Management System BMC (ISBMC4) with IPMI 2.0 and Redfish 1.8 support.
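As a rough illustration of what Redfish support makes possible, the sketch below builds an authenticated request for the standard `/redfish/v1/Systems` collection and extracts the member paths from a response. The BMC address, credentials, and sample payload are hypothetical placeholders rather than values from our test unit, and we have not verified which resources ISBMC4 actually exposes.

```python
import base64
import json
import urllib.request


def build_request(host, path, user, password):
    """Build an HTTPS GET request with HTTP Basic auth for a Redfish path."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


def member_paths(collection):
    """Return the @odata.id of each member in a Redfish collection document."""
    return [m["@odata.id"] for m in collection.get("Members", [])]


# Hypothetical BMC address and credentials (assumptions for illustration only).
req = build_request("192.0.2.10", "/redfish/v1/Systems", "admin", "changeme")

# Sample payload shaped like a Redfish collection. Against a real BMC this
# would come from urllib.request.urlopen(req) with site-appropriate TLS settings.
sample = json.loads('{"Members": [{"@odata.id": "/redfish/v1/Systems/1"}]}')

print(req.full_url)
print(member_paths(sample))
```

Nothing here touches the network until `urlopen` is called, so the request can be inspected before it is sent to a production BMC.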

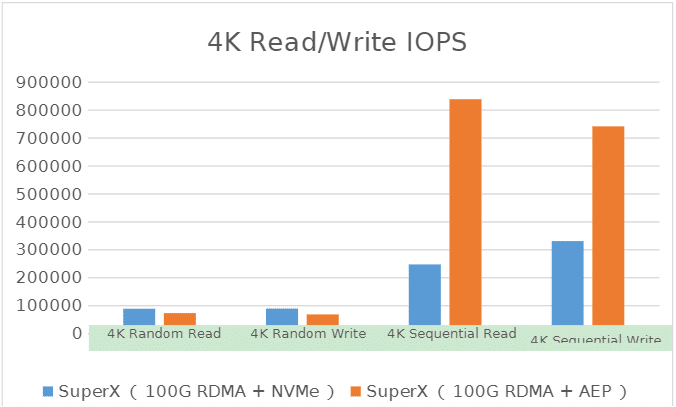

Inspur also offers its SuperX storage in hyper-converged infrastructure (HCI) via Inspur Rail. Multiple NF5266M6 servers can work together for superior performance. In Inspur’s testing, it configured three NF5266M6s with two Intel Xeon Gold 6330 CPUs, 128GB of RAM, two NVMe caching drives, and one 4TB SAS storage drive, and connected them through a 100Gbps TCP network. Performance in that scenario is up to 830,000 IOPS in 4K sequential read and 740,000 IOPS in 4K sequential write, according to Inspur.

The NF5266M6’s full specifications are as follows:

| Model | NF5266M6 |
| --- | --- |
| Form Factor | 2U rackmount server |
| Processor | |
| Memory | 16 x memory slots |
| Storage | Front: up to 24 × 3.5-inch SAS/SATA SSDs (hot-plug). Rear: |
| I/O Scalable Slot | Up to 7 × PCIe slots, including up to 2 × OCP 3.0 slots |
| Network | Up to 2 × OCP 3.0 NICs at 1/10/25/40/100/200Gb/s; standard 1/10/25/40/100/200Gb NICs |
| Interface | 2 x rear USB3.0 + 1 x rear VGA + 1 x COM port |
| System Fan | N+1 redundancy and hot-pluggable |
| PSU | 1+1 redundant Platinum power supplies; high-voltage DC and Titanium power supplies |
| System Management | An independent 1000Mbps NIC dedicated to IPMI remote management |
| OS | Microsoft Windows Server, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, CentOS, etc. |
| Dimension (W x H x D) | |
| Weight | 45kg (99.2 lbs) |
| Working Temperature | 5°C-40°C. 41°F-113°F. |

Inspur NF5266M6 Build and Design

The NF5266M6 has normal 2U rackmount measurements of 19 by 3.4 by 35 inches (including mounting ears). The front of the server has a different look than you’d expect, however, as no individual drive bays are visible. Instead, the 24 hot-swappable front bays are uniquely divided into three trays of eight drives. (See our demonstration on TikTok.)

The drives sit in caddies with a unique handle that allows you to simply lift them out, almost like a mini-shopping basket. (We also demonstrated this on TikTok.)

This server can be configured in numerous ways. There are up to seven PCIe slots, which include five slimline x8. Two 3.5-inch or up to eight 2.5-inch drives can be installed around back. As seen below, our configuration has four 2.5-inch drives, two SATA and two NVMe. Interestingly, Inspur also offers an option with eight E1.S NVMe SSDs. Pairing that much flash with the HDDs inside opens a lot of doors; SDS players will love having access to so much flash and capacity in one box.

An OCP 3.0 module is optional; it supports up to 100Gbps NICs. Intel Persistent Memory 200 series modules are also supported. The rear port selection includes a VGA video-out (which uses an Aspeed 2600 controller), two USB 3.0, and COM (Serial).

The NF5266M6 supports two Intel Ice Lake Xeon CPUs. We’re testing it with two 205-watt chips, though up to 225-watt chips are supported. This server’s cooling comes from six 6038 or 6056 mid-chassis fans, which you can see here.

The airflow is well-positioned to pass through the CPU heatsinks and surrounding memory DIMMs. Each CPU gets eight DIMMs. The 1+1 redundant power supplies, visible at the bottom right, are either 1300W or 1600W and carry 80 Plus Platinum (94% efficiency) ratings. 80 Plus Titanium power supplies (96% efficiency) are also supported.

Here you can see the motherboard’s twin M.2 slots in our configuration. These are nice to have, as some servers omit them and force you to use a drive bay or PCIe slot for boot storage.

Inspur NF5266M6 Performance

We are testing the NF5266M6 configured as follows:

- (2) Intel Xeon Platinum 8352Y (32-core/64-thread, 48MB cache, 205-watt TDP)

- 256GB DDR4-3200 ECC (16 x 16GB RDIMM)

- (2) 7.68TB Solidigm P5510 SSDs (for SSD testing)

- (24) 8TB Western Digital HDDs (for HDD testing)

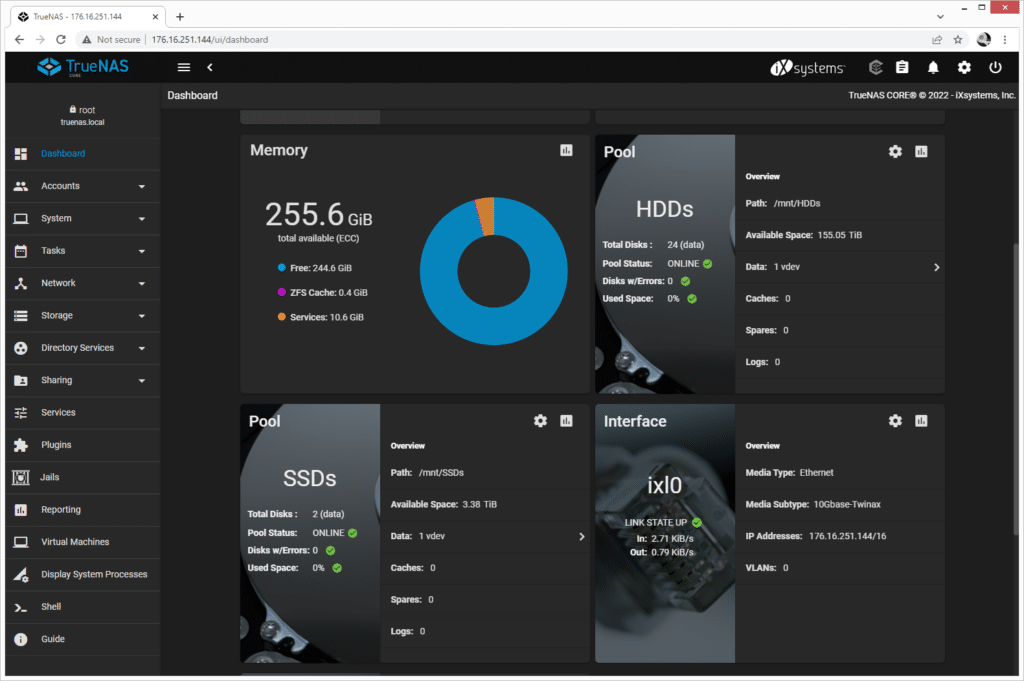

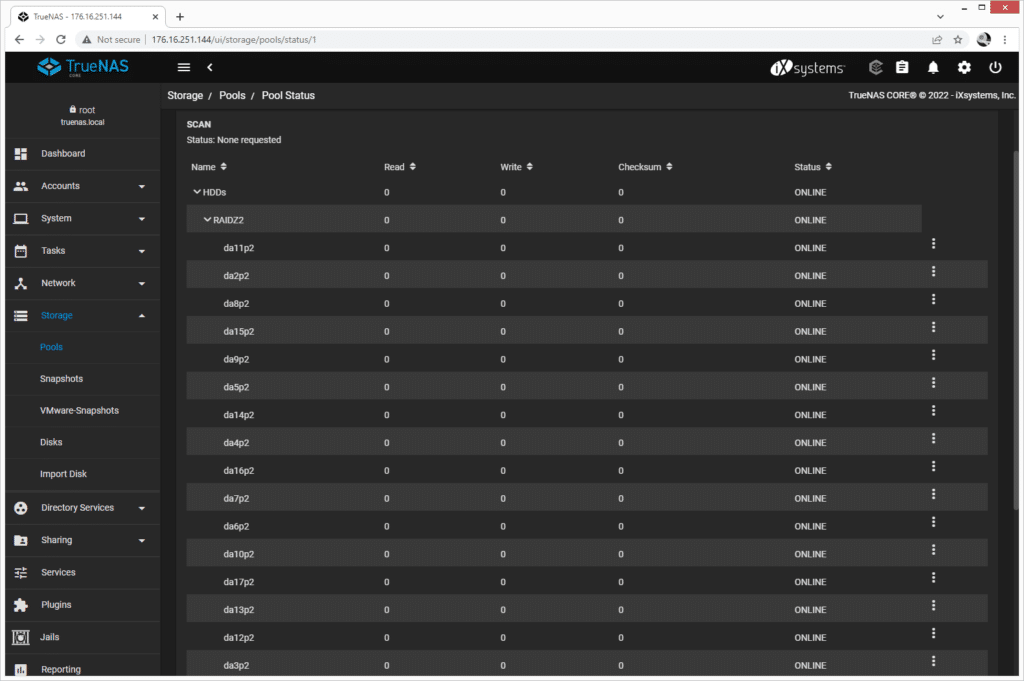

Most of our testing will be done with the SSDs. We focused on synthetic workloads based on the configuration supplied. That said, with the hard drives, we did install TrueNAS CORE on the NF5266M6 just to demonstrate that it works. Configured in RAIDZ2, our 24-drive setup started and ran without a hitch. If you were to leverage 20TB hard drives, you could get nearly half a petabyte of storage out of this platform, with room for NVMe storage for cache or a flash tier.
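To put the capacity math in concrete terms, here is a back-of-the-envelope sketch. It assumes decimal terabytes and ignores ZFS metadata, padding, and free-space headroom, so real usable figures will be lower. The vdev layouts are assumptions for illustration, not the exact pool layout we used.

```python
def raw_tb(drives, tb_per_drive):
    """Raw capacity in decimal terabytes, before any redundancy."""
    return drives * tb_per_drive


def raidz2_usable_tb(drives, tb_per_drive, vdevs=1):
    """Rough usable capacity: every RAIDZ2 vdev gives up two drives to parity."""
    if vdevs < 1 or drives % vdevs != 0:
        raise ValueError("drives must divide evenly across vdevs")
    per_vdev = drives // vdevs
    if per_vdev <= 2:
        raise ValueError("a RAIDZ2 vdev needs more than two drives")
    return vdevs * (per_vdev - 2) * tb_per_drive


print(raw_tb(24, 20))                     # front bays filled with 20TB drives
print(raidz2_usable_tb(24, 8, vdevs=1))   # 8TB drives, one 24-wide vdev (assumed)
print(raidz2_usable_tb(24, 20, vdevs=2))  # 20TB drives, two 12-wide vdevs (assumed)
```

With 20TB drives the front bays alone come to 480TB raw, which is where the "nearly half a petabyte" figure comes from.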

VDBench Workload Analysis

When it comes to benchmarking storage devices, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparison between competing solutions.

These workloads offer a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 128 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 32 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 16 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
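For readers who want to reason about these profiles programmatically, the four-corners entries above can be written down as plain data. This is only an illustrative sketch and not our actual vdBench configuration: the `Profile` fields, the baseline rate, and the 10% step size of the I/O rate sweep are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    block_kib: int   # transfer size in KiB
    read_pct: int    # 100 = all reads, 0 = all writes
    threads: int
    random: bool     # True for random access, False for sequential


PROFILES = [
    Profile("4K Random Read", 4, 100, 128, True),
    Profile("4K Random Write", 4, 0, 128, True),
    Profile("64K Sequential Read", 64, 100, 32, False),
    Profile("64K Sequential Write", 64, 0, 16, False),
]


def target_rates(baseline_iops, start_pct=10, stop_pct=120, step_pct=10):
    """Target I/O rates as percentages of a baseline (step values are assumed)."""
    return [baseline_iops * pct // 100
            for pct in range(start_pct, stop_pct + 1, step_pct)]


# Hypothetical baseline of 100,000 IOPS, swept up to 120% of that figure.
rates = target_rates(100_000)
print(rates[0], rates[-1], len(rates))
```

Pushing the requested rate past 100% of the baseline is what lets the charts show how latency behaves once the drives are saturated.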

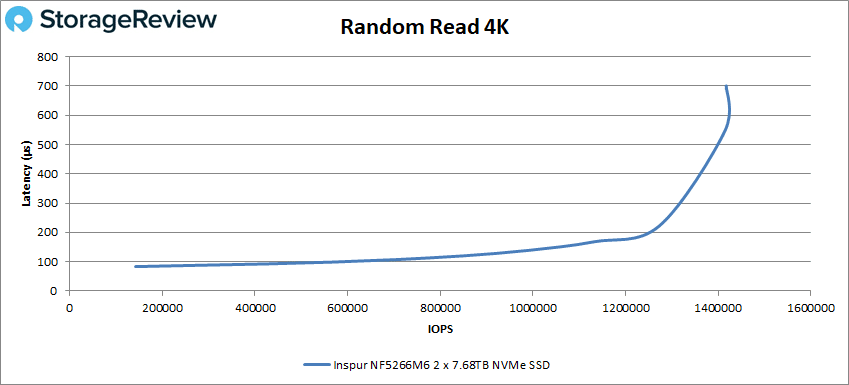

Starting with 4K random read, the NF5266M6 performed reasonably well, staying under 200µs latency until about 1,200,000 IOPS; it ended the test at 1,416,752 IOPS with a latency of 688µs.

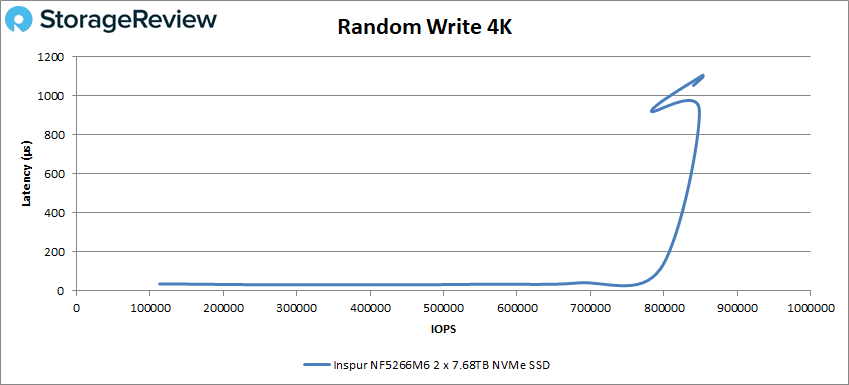

The NF5266M6 fared similarly in random write 4K, where latency was sub-50µs until about 700,000 IOPS, when it skyrocketed to end the test at 1,054µs with 840,663 IOPS.
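To compare these 4K results with the sequential numbers that follow, it can help to express IOPS as bandwidth. The sketch below assumes "4K" means 4,096-byte transfers and reports decimal megabytes per second; both are assumptions about units rather than something the charts state.

```python
def iops_to_mb_per_s(iops, block_bytes=4096):
    """Convert an IOPS figure to decimal MB/s for a fixed transfer size."""
    return iops * block_bytes / 1_000_000


read_mb_s = iops_to_mb_per_s(1_416_752)   # peak 4K random read
write_mb_s = iops_to_mb_per_s(840_663)    # end-of-test 4K random write

print(f"4K random read:  {read_mb_s:.1f} MB/s")
print(f"4K random write: {write_mb_s:.1f} MB/s")
```

Under those assumptions, the random read peak works out to roughly 5.8GB/s and the random write result to roughly 3.4GB/s.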

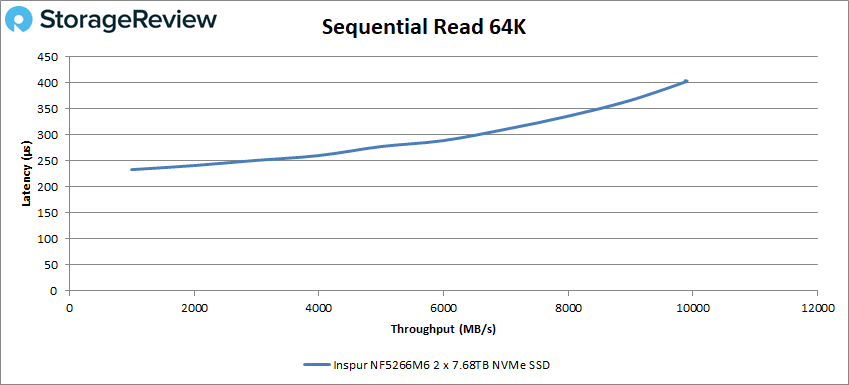

Next up is 64K sequential testing. Starting with read performance, the NF5266M6 ended at 9,882MB/s at 404µs latency, a respectable showing given we’re only leveraging two NVMe drives.

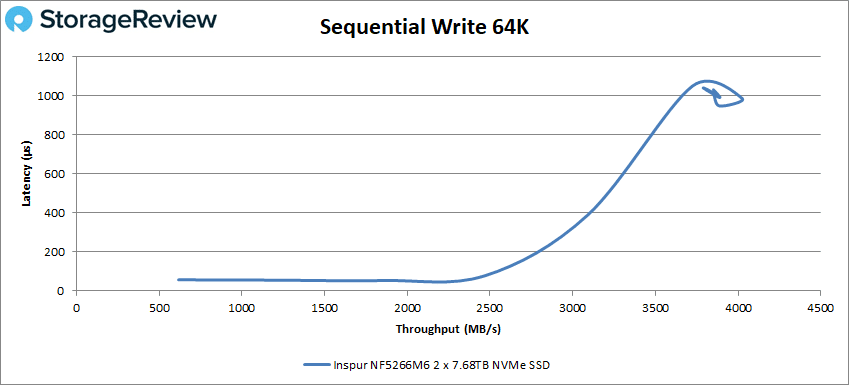

In sequential write 64K, we saw the NF5266M6 slightly unstable at the top end; its best showing was 3,888MB/s at 950µs, ending the test slightly worse than that at 3,852MB/s with 1,033µs latency.

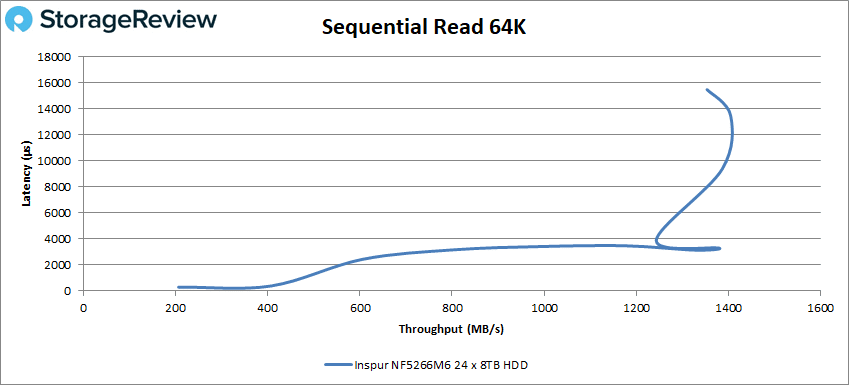

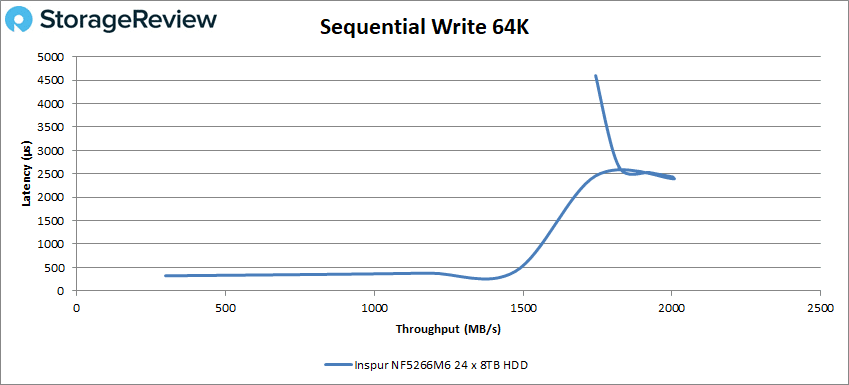

We’ll also look at the 64K sequential testing with hard drives, focusing on peak performance only. In 64K sequential read, the NF5266M6 achieved 1,403MB/s.

Last in the HDD testing is 64K sequential write, where the NF5266M6 peaked at 2,007MB/s.
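Dividing the aggregate 64K sequential figures by the drive count gives a rough sense of what each device contributes. This is a simplification: it assumes the load spreads evenly across drives and ignores parity and pool overhead. It also takes the 2,007MB/s HDD result as the sequential write figure.

```python
def per_drive(total_mb_s, drives):
    """Average throughput per drive, assuming an even spread of the load."""
    return total_mb_s / drives


# (aggregate MB/s, drive count) taken from the results in this review.
results = {
    "SSD 64K sequential read": (9882, 2),
    "SSD 64K sequential write": (3888, 2),
    "HDD 64K sequential read": (1403, 24),
    "HDD 64K sequential write": (2007, 24),
}

for name, (total, drives) in results.items():
    print(f"{name}: {per_drive(total, drives):.1f} MB/s per drive")
```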

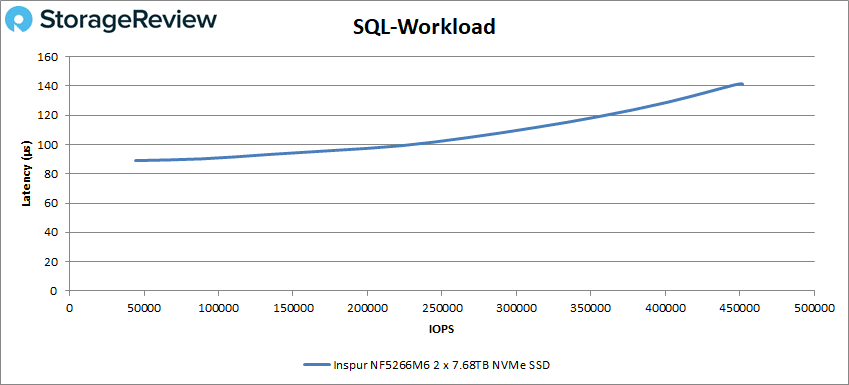

Switching back to SSD-based testing, our SQL workloads follow; these include SQL Workload, SQL 90-10, and SQL 80-20. The NF5266M6 began SQL workload with 89µs latency and 44,592 IOPS. Latency gradually increased, finishing the test at 141µs and 451,681 IOPS.

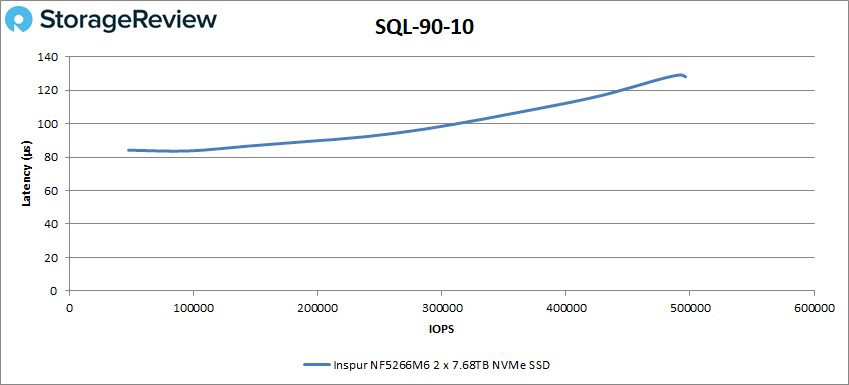

Latencies were lower in SQL 90-10, starting at 84µs with 47,578 IOPS and peaking at 128µs with 496,215 IOPS.

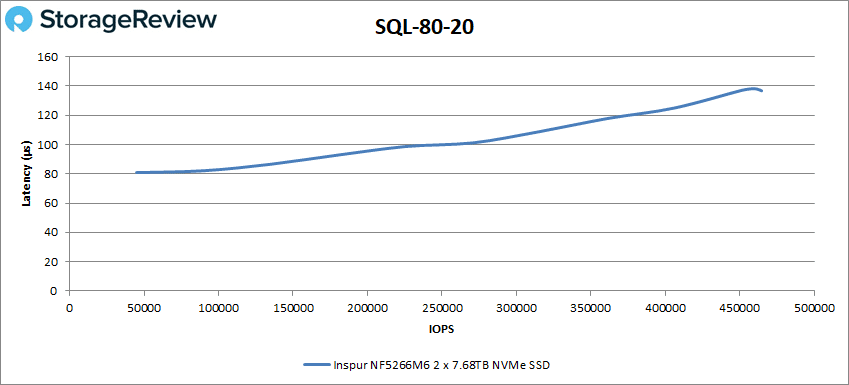

The NF5266M6’s performance was similar in our last SQL test, SQL 80-20, where it started with a low latency of 81µs with 45,195 IOPS and ended at 137µs with 464,677 IOPS.

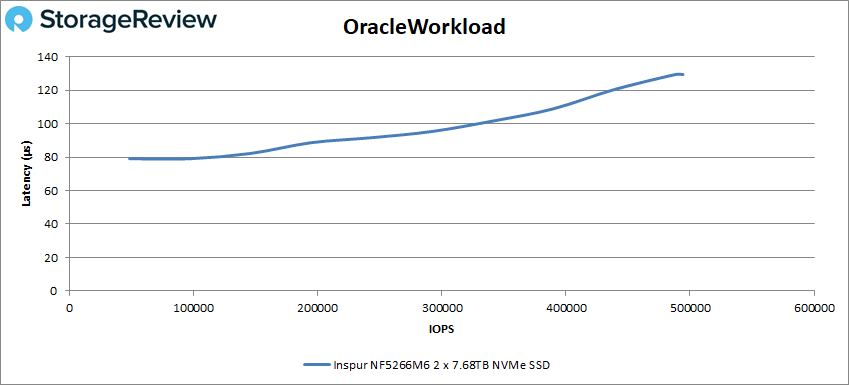

Continuing on to our Oracle workloads, the NF5266M6 behaved much as it did in the SQL tests, with latencies starting low and gradually increasing with no surprises. In our first test, Oracle workload, we saw a starting latency of 79µs with 48,677 IOPS and a finish at 129µs with 491,131 IOPS.

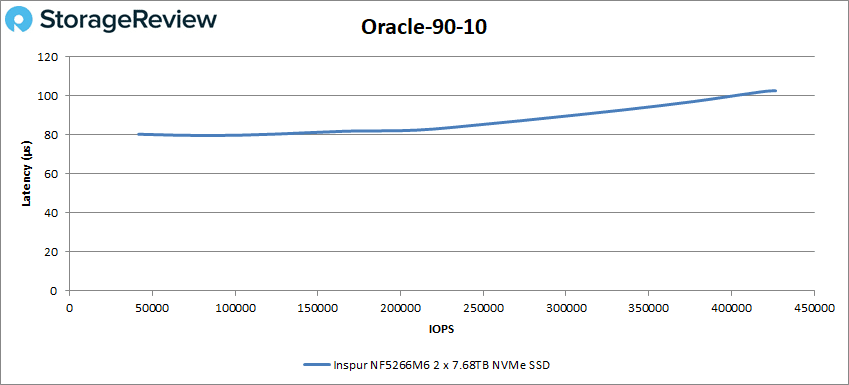

In Oracle 90-10, the NF5266M6 was quite stable under 85µs through about 200,000 IOPS. It finished the test at 103µs and 426,744 IOPS.

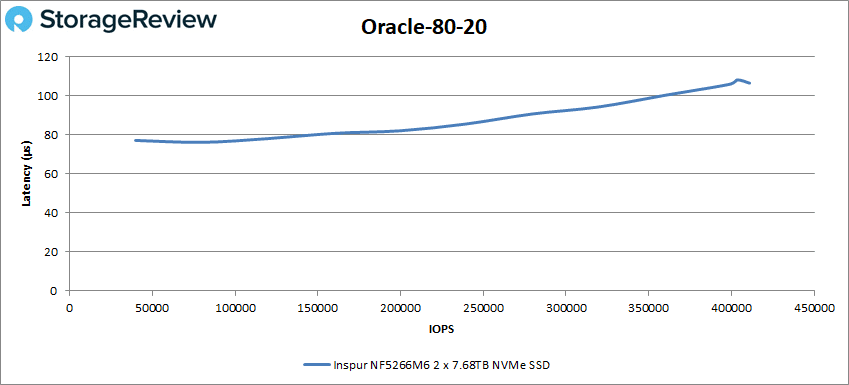

Latencies were slightly less stable in Oracle 80-20, though they started lower, at 77µs with 40,018 IOPS. The peak result was 106µs with 410,738 IOPS.

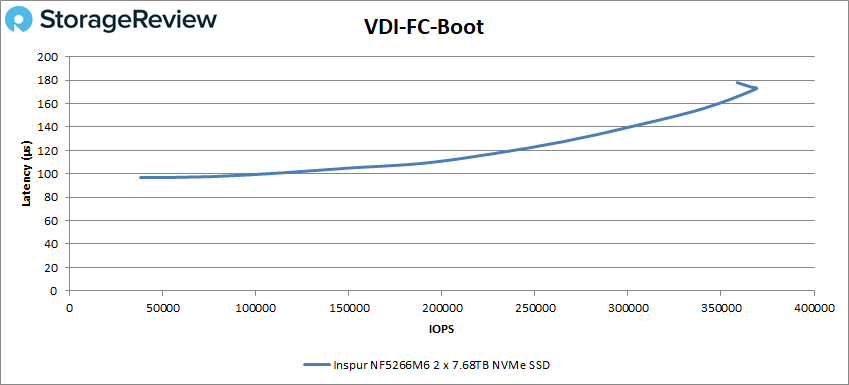

Our final test series is VDI clone testing, of which we run Full and Linked. Starting with VDI Full Clone (FC) boot, the NF5266M6 performed predictably until the end of the test. It achieved 368,948 IOPS at 173µs before regressing to 358,679 IOPS at 178µs to finish the test.

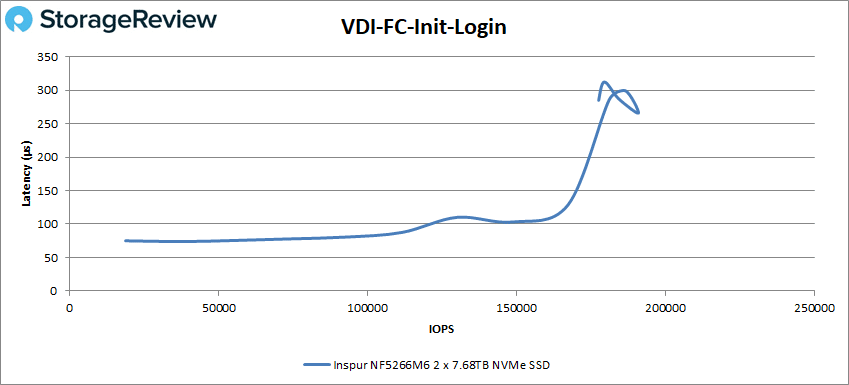

Next is VDI FC Initial Login, where the NF5266M6 started stable but showed instability past 150,000 IOPS where latencies spiked. It finished the test with 177,721 IOPS with 285µs latency.

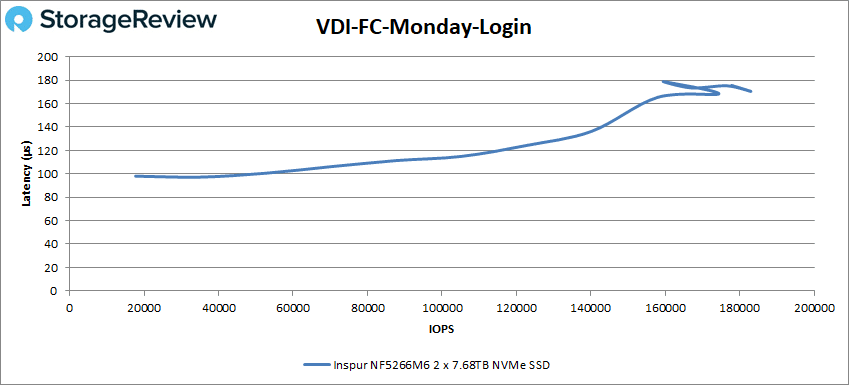

We also saw spikes in VDI FC Monday Login towards the end, though not too bad. The last result was 177,877 IOPS at 176µs latency.

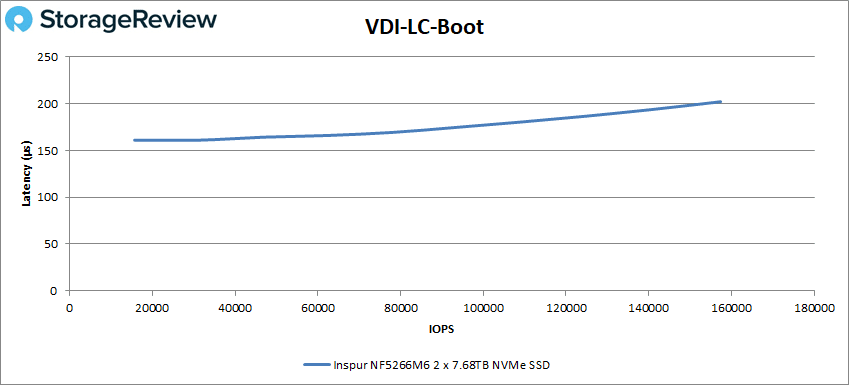

Finally, we look at VDI Linked Clone (LC) testing. Here in the boot test, the NF5266M6 showed stable performance and reasonable latencies, starting at 15,601 IOPS at 161µs latency and ending at 157,448 IOPS at 203µs.

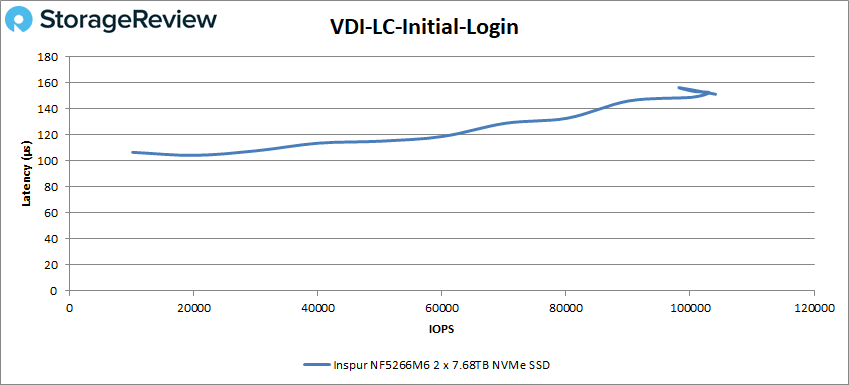

The NF5266M6 showed some minor instability at the end of VDI LC Initial Login, ending at 104,049 IOPS at 151µs latency.

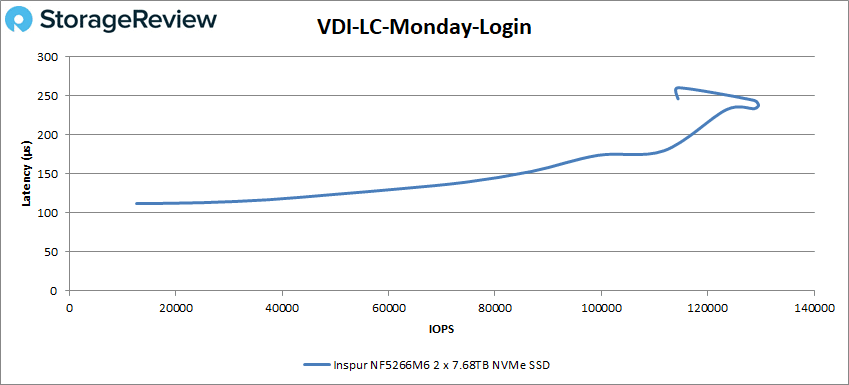

Last is VDI LC Monday Login. Here, the NF5266M6 exhibited instability similar to what it showed in VDI FC Monday Login. Its best showing was 129,641 IOPS at 238µs before regressing to finish the test at 114,476 IOPS at 246µs.

Conclusion

The Inspur NF5266M6 is a compelling high-density storage platform. This 2U two-socket server is based on Intel’s third-generation Xeon Scalable processors (Ice Lake) for good performance. Its memory ceiling is constrained by its 16 DIMM slots, so though this server can be used for other workloads, high-density storage is its strength. Other servers in Inspur’s M6 family are more suitable for application workloads, with higher memory ceilings and NVMe drives for tiering/caching.

Highlights for the NF5266M6 include its unique tray-load drive approach, which makes swapping out multiple drives a breeze. It can also be configured with an additional two 3.5-inch bays or four or eight 2.5-inch bays, depending on how many PCIe slots you need (there are seven, including up to two OCP 3.0). We also like that the NF5266M6 offers two onboard M.2 slots so you don’t have to give up PCIe expansion or NVMe drives for boot volumes.

Performance highlights from our testing include 1,416,752 IOPS in random read 4K, 451,681 IOPS in SQL workload, and 491,131 IOPS in Oracle workload. When equipped with two 7.68TB Solidigm P5510 SSDs, we saw 9,882MB/s in sequential read 64K and 3,888MB/s in sequential write 64K, whereas with 24 8TB hard drives we saw 1,403MB/s and 2,007MB/s, respectively.

Overall, the Inspur NF5266M6 is a capable high-density storage server with as many bays as you’d expect from a much larger server in just 2U, good expansion, wide configuration options, and stable performance for its intended applications. Toss in some NVMe in the back for flash acceleration or a large flash volume with eight E1.S SSDs and this Inspur M6 has tremendous potential.
