The Mangstor NX6325 NVMe over Fabrics (NVMf) shared storage array offers up to 21.6TB of flash storage in an HPE ProLiant DL380 Gen9 2U server. Like the NX6320 we reviewed earlier this year, the NX6325 aggregates Mangstor’s add-in card (AIC) NVMe drives and shares them out over the network. This lets latency-sensitive applications take advantage of NVMe storage without needing an AIC inside every server. When paired with the Mellanox SN2700 100GbE switch and ConnectX-4 adapters (Ethernet or InfiniBand), the NX6325 offers reported read performance of 12GB/s and write performance of 9GB/s from a single array.

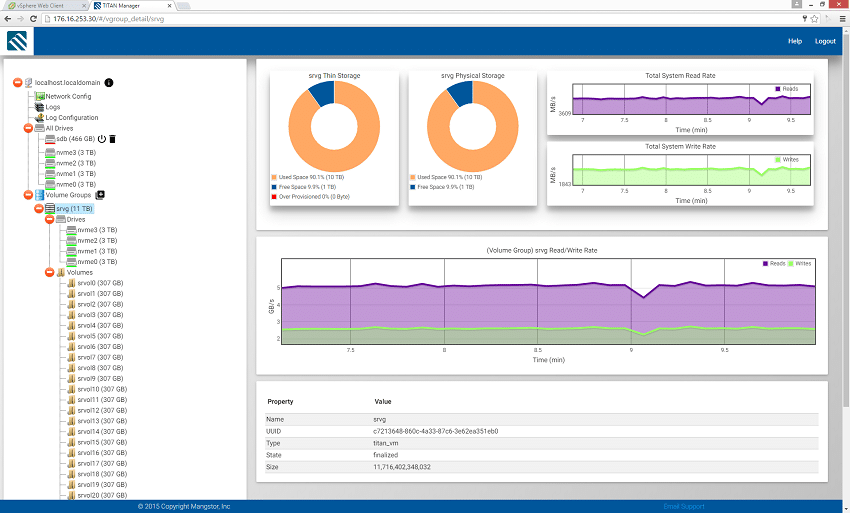

Orchestrating the storage is Mangstor’s TITAN software. We saw an early version of TITAN in the NX6320; Mangstor made the software generally available earlier this month. At its core, TITAN tightly integrates the NVMe SSDs with Remote Direct Memory Access (RDMA) network interface cards (NICs) while making efficient use of the HPE server’s capabilities. TITAN lets IT managers dynamically provision flash, either locally or remotely, with centralized management. It also provides storage caching and cluster management, along with a REST API and GUI tools that make it easier to deploy and manage large clusters of NVMf storage.

Mangstor offers two capacity options configured for either Ethernet or InfiniBand. Buyers may choose from a 10.8TB system configured with four 2.7TB drives or a 21.6TB system with four 5.4TB drives. Our review system is the 10.8TB Ethernet configuration.

Mangstor NX6325 Specifications

- Flash Storage Arrays
  - Ethernet
    - NX6325R-016TF-2P100GQSF, 10.8TB (4 x 2.7TB SSD)
    - NX6325R-032TF-2P100GQSF, 21.6TB (4 x 5.4TB SSD)
  - InfiniBand
    - NX6325I-016TF-2P100GQSF, 10.8TB (4 x 2.7TB SSD)
    - NX6325I-032TF-2P100GQSF, 21.6TB (4 x 5.4TB SSD)

- Performance (Ethernet)
  - Bandwidth Rd/Wr (GB/s): 12.0 / 9.0
  - Throughput Rd/Wr (4K) (IOPS): 3.0M / 2.25M

- Power
  - Typical: 450W
  - Maximum peak: 800W

- I/O Connectivity
  - 2x100Gb/s QSFP Ethernet
  - 2x100Gb/s QSFP InfiniBand

- Read/Write Latency: 110µs / 30µs

- Appliance Attributes
  - 2U Rack Mount (HxWxD): 3.44 x 17.54 x 26.75 in (8.73 x 44.55 x 67.94 cm)
  - Supply Voltage: 100 to 240 VAC, 50/60 Hz; 800W redundant power supply
  - Heat dissipation: 3207 BTU/hr (at 100 VAC), 3071 BTU/hr (at 200 VAC), 3112 BTU/hr (at 240 VAC)
  - Maximum inrush current: 30A (duration 10ms)
  - Approximate weight: 51.5 lb (23.6 kg) fully populated

- Environmentals
  - Standard Operating Support: 10° to 35°C (50° to 95°F) at sea level, with an altitude derating of 1.0°C per 305 m (1.8°F per 1,000 ft) above sea level, up to a maximum of 3050 m (10,000 ft)
  - Humidity: Non-operating 5 to 95% relative humidity (Rh), 38.7°C (101.7°F) maximum wet bulb temperature, non-condensing

- Fabric Protocol Support: RDMA over Converged Ethernet (RoCE), InfiniBand, iWARP

- Client OS Support: RHEL, SLES, CentOS, Ubuntu, Windows, VMware ESXi 6.0 (VMDirectPath)

- Operational Management: CLI, RESTful API, OpenStack Cinder

- Array & SSD Warranty: Hardware 5 years; Base software 90 days

- Software Warranty: Covered under 1-yr, 3-yr, 5-yr Gold or Platinum Level Service and Support Options
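The headline figures on the spec sheet can be cross-checked with a little arithmetic. The Python sketch below assumes that “4K” means 4,096-byte I/Os and that GB/s means decimal gigabytes per second; neither is stated on the spec sheet. It converts the 4K IOPS ratings into bandwidth, compares them with the raw line rate of the two 100Gb/s ports (ignoring protocol overhead), and applies the stated altitude derating to the 35°C operating ceiling.

```python
# Back-of-the-envelope checks on the NX6325 spec sheet.
# Assumptions (not stated by Mangstor): "4K" = 4,096-byte I/Os,
# GB/s = 10**9 bytes per second, and line rate ignores protocol overhead.

IO_SIZE_BYTES = 4096   # assumed size of one "4K" I/O
PORT_GBITS = 100       # per-port rate from the I/O Connectivity entry
PORTS = 2


def iops_to_gbytes_per_s(iops: float, io_size: int = IO_SIZE_BYTES) -> float:
    """Bandwidth implied by an IOPS rating at a fixed I/O size."""
    return iops * io_size / 1e9


def raw_link_gbytes_per_s(ports: int = PORTS, gbits: float = PORT_GBITS) -> float:
    """Combined raw line rate of all ports, converted from Gb/s to GB/s."""
    return ports * gbits / 8


def max_operating_temp_c(altitude_m: float) -> float:
    """35 C ceiling derated by 1.0 C for every 305 m above sea level."""
    if not 0 <= altitude_m <= 3050:
        raise ValueError("the spec covers sea level up to 3050 m")
    return 35.0 - altitude_m / 305.0


if __name__ == "__main__":
    print(f"3.0M 4K read IOPS   -> {iops_to_gbytes_per_s(3.0e6):.1f} GB/s")
    print(f"2.25M 4K write IOPS -> {iops_to_gbytes_per_s(2.25e6):.1f} GB/s")
    print(f"2 x 100Gb/s raw     -> {raw_link_gbytes_per_s():.1f} GB/s")
    print(f"Max ambient at 3050 m: {max_operating_temp_c(3050):.1f} C")
```

Under these assumptions, the IOPS ratings work out to about 12.3GB/s and 9.2GB/s, in line with the 12.0/9.0GB/s bandwidth figures, and both sit at roughly half of the 25GB/s raw capacity of the two ports. At the 3050 m altitude limit, the derated ceiling drops to 25°C.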

Build and Design

In the previously reviewed NX6320 array, Mangstor used a Dell R730-based platform. This time around, with the NX6325, they’re using an HPE ProLiant DL380 Gen9. While on the surface the move appears “like for like,” the change matters for customers who want to deploy 100Gb Ethernet or InfiniBand and push the storage to its full potential. The Dell R730 offers seven PCIe 3.0 slots: four full-height x16 and three half-height x8. The HPE DL380 Gen9 has just six PCIe 3.0 expansion slots, but with the appropriate PCIe riser kit, users can configure all six as full-height x16 slots.

That difference matters because Mangstor’s cards are full-height PCIe SSDs and the 100GbE Mellanox ConnectX-4 NICs require x16 slots. In the Dell configuration, users would have to drop one PCIe SSD to fit the 100GbE card, while the HPE configuration can hold four PCIe SSDs and two 100GbE NICs at the same time. This is less about which server is better overall than about which one works better with this rather unusual card combination. Mangstor points out that for customers using 40Gb Ethernet or 56Gb InfiniBand, the NX6320 and NX6325 deliver equivalent features and performance. Since we’re testing 100Gb Ethernet in this review, the NX6325 is the right choice.
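The slot arithmetic can be written out as a small Python check. It takes the slot counts above at face value and assumes, as the comparison does, that each PCIe SSD and each 100GbE NIC occupies its own full-height x16 slot; the `Chassis` class and `max_ssds` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Chassis:
    name: str
    fh_x16_slots: int  # full-height x16 slots usable by both card types


def max_ssds(chassis: Chassis, nics: int) -> int:
    """PCIe SSDs that still fit once the 100GbE NICs have taken their slots.

    Assumes every SSD and every NIC needs its own full-height x16 slot.
    """
    if nics < 0:
        raise ValueError("nics must be non-negative")
    return max(chassis.fh_x16_slots - nics, 0)


dell_r730 = Chassis("Dell R730", fh_x16_slots=4)
hpe_dl380 = Chassis("HPE DL380 Gen9 (with riser kit)", fh_x16_slots=6)

if __name__ == "__main__":
    for chassis in (dell_r730, hpe_dl380):
        for nics in (1, 2):
            print(f"{chassis.name}: {nics} NIC(s) -> room for {max_ssds(chassis, nics)} SSD(s)")
```

With one 100GbE NIC, the R730 has room for three SSDs rather than four, while the DL380 Gen9 keeps all four SSDs even with two NICs installed.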

Sysbench Performance

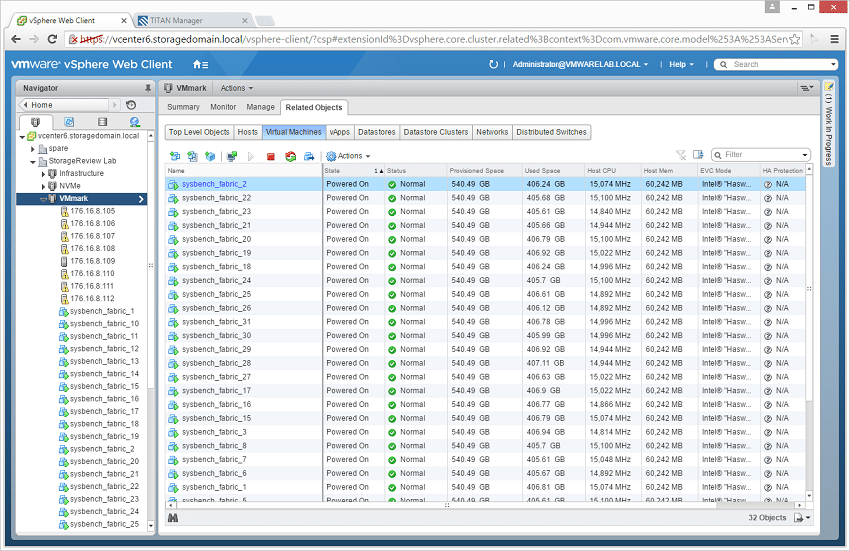

Each Sysbench VM is configured with three vDisks: one for boot (~92GB), one with the pre-built database (~447GB), and a third for the database under test (307GB). The LUNs are an even split of 90% of the usable storage (~11TB), sized to comfortably fit the 253GB database. From a system-resource perspective, we configured each VM with 16 vCPUs and 60GB of DRAM, using the LSI Logic SAS SCSI controller. The load-generation systems are Dell R730 servers; we use between four and eight of them in this review, scaling the server count with each group of four VMs.
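The LUN sizing works out roughly as follows. This sketch assumes decimal units (1TB = 1,000GB) and the full 32-VM load; because usable capacity is rounded to 11TB, the result lands near, but not exactly on, the 307GB quoted for each test vDisk.

```python
USABLE_GB = 11_000         # "usable storage (11TB)", decimal units assumed
FRACTION_ALLOCATED = 0.90  # 90% of usable capacity split across the LUNs
DB_SIZE_GB = 253           # size of the Sysbench database under test


def lun_size_gb(vm_count: int) -> float:
    """Even split of the allocated capacity, one LUN per VM."""
    if vm_count <= 0:
        raise ValueError("vm_count must be positive")
    return USABLE_GB * FRACTION_ALLOCATED / vm_count


if __name__ == "__main__":
    size = lun_size_gb(32)
    print(f"LUN per VM at 32 VMs: {size:.1f} GB")
    print(f"Database fills {DB_SIZE_GB / size:.0%} of each LUN")
```

That gives about 309GB per LUN, so the 253GB database occupies a little over 80% of each one.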

Dell PowerEdge R730 8-node Cluster Specifications

- Dell PowerEdge R730 Servers (x8)
  - CPUs: Sixteen Intel Xeon E5-2690 v3 2.6GHz (12C/24T) (cluster total)
  - Memory: 128 x 16GB DDR4 RDIMM (cluster total)
  - Mellanox ConnectX-4 Lx
  - VMware ESXi 6.0

Our previous review of the Mangstor NX6320 used Mellanox ConnectX-3 cards and OFED ESXi 6.0 drivers, with one card assigned to each VM, which limited our scale to the number of NICs on hand. With the NX6325, Mellanox ConnectX-4 Lx cards and the latest SR-IOV drivers let us share virtual adapters among up to four VMs per card. With one card allocated per server, this configuration allowed us to scale testing up to 32 VMs in total.
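The per-server budget at the full 32-VM load can be tallied from the figures above. This Python sketch assumes the cluster’s sixteen CPUs and 128 DIMMs are split evenly across the eight R730s (two 12-core/24-thread CPUs and sixteen 16GB RDIMMs per server) and that each server hosts four VMs behind its single ConnectX-4 Lx.

```python
import math

VMS_PER_HOST = 4       # one ConnectX-4 Lx per server, shared by up to 4 VMs via SR-IOV
VCPUS_PER_VM = 16
RAM_GB_PER_VM = 60
HOST_THREADS = 2 * 24  # assumed: two 12C/24T Xeon E5-2690 v3 per server
HOST_RAM_GB = 16 * 16  # assumed: sixteen 16GB RDIMMs per server


def hosts_needed(vm_count: int) -> int:
    """Servers required if each one can carry at most VMS_PER_HOST VMs."""
    return math.ceil(vm_count / VMS_PER_HOST)


def per_host_ratios() -> tuple:
    """(vCPU-to-hardware-thread ratio, fraction of host RAM) for a fully packed server."""
    vcpus = VMS_PER_HOST * VCPUS_PER_VM
    ram_gb = VMS_PER_HOST * RAM_GB_PER_VM
    return vcpus / HOST_THREADS, ram_gb / HOST_RAM_GB


if __name__ == "__main__":
    cpu_ratio, ram_fraction = per_host_ratios()
    print(f"Servers for 32 VMs: {hosts_needed(32)}")
    print(f"vCPU:thread ratio per server: {cpu_ratio:.2f}")
    print(f"Host RAM committed to VMs: {ram_fraction:.0%}")
```

Under those assumptions, each server carries 64 vCPUs on 48 hardware threads (about 1.33:1) and commits 240GB of its 256GB to the guests, which leaves little headroom on the load generators at 32 VMs.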

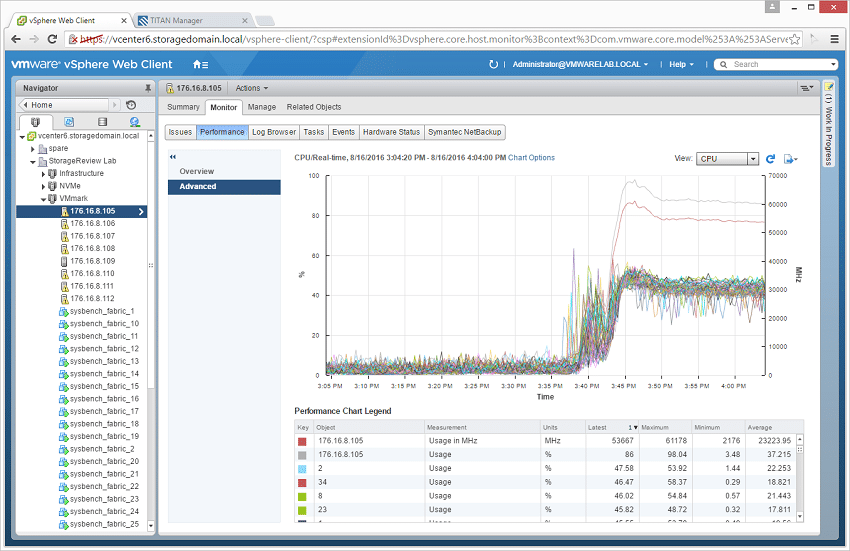

Under a full load of 32VMs, we saw each server level off at roughly 86% CPU utilization.

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Storage Footprint: 1TB, 800GB used

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Table Size: 10,000,000 rows

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours
  - 2 hours preconditioning, 32 threads
  - 1 hour test run, 32 threads
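The dataset size helps explain why this workload stresses storage rather than memory. Reading the 10,000,000 figure as rows per table (the usual Sysbench parameter) and taking the 253GB database size quoted earlier, a quick calculation shows how little of the data can sit in the 24GB buffer. Treating the whole buffer as cache for table data is a simplifying assumption.

```python
TABLES = 100
ROWS_PER_TABLE = 10_000_000  # assumed meaning of the "10,000,000" size setting
DB_SIZE_GB = 253             # on-disk database size, decimal GB
BUFFER_GB = 24               # RAM buffer per VM

total_rows = TABLES * ROWS_PER_TABLE
bytes_per_row = DB_SIZE_GB * 1e9 / total_rows   # includes index and page overhead
cached_fraction = BUFFER_GB / DB_SIZE_GB        # upper bound on data held in RAM

if __name__ == "__main__":
    print(f"Rows: {total_rows:,}")
    print(f"On-disk bytes per row: {bytes_per_row:.0f}")
    print(f"At most {cached_fraction:.1%} of the database fits in the buffer")
```

With roughly 1 billion rows at about 253 bytes each on disk, under a tenth of the database can be cached, so most transactions depend on the array’s random I/O performance.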

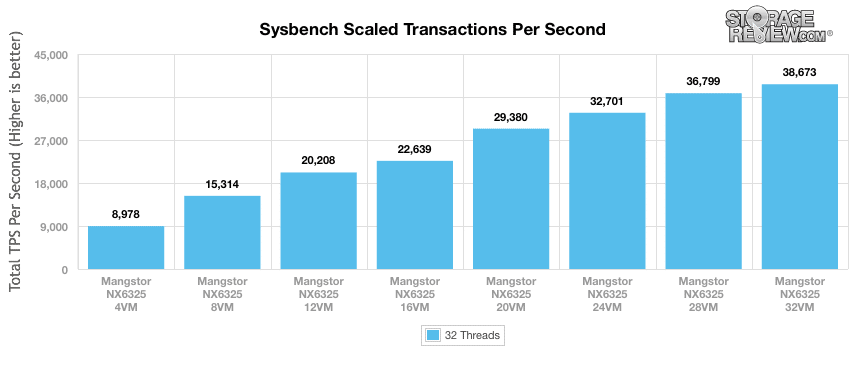

In our Sysbench OLTP test, we took the array from a light load of 4VMs up to an all-out saturation point with 32VMs. The Mangstor NX6325 set some impressive records. For starters, it delivered the fastest Sysbench performance we’ve seen from anything other than locally attached flash, more than doubling the best result from any shared storage we’ve tested. The NX6325 topped out at 38,673 TPS, while the previous record holder for a modern flash shared-storage solution came in at “only” 17,316 TPS.

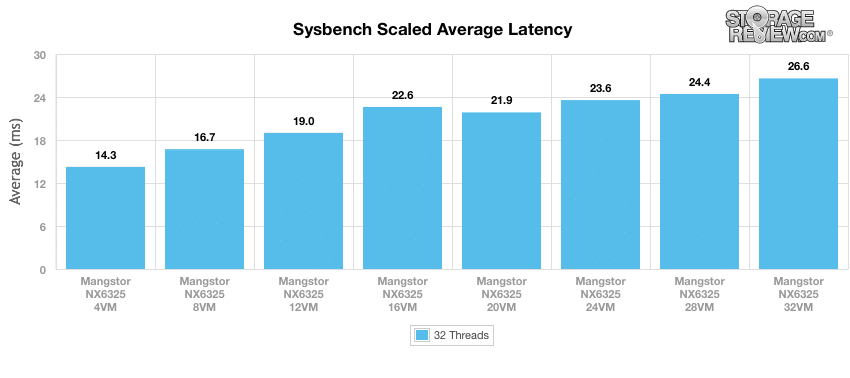

Looking at latency, the NX6325 coped very well under load, keeping average transactional latency between 14.3ms at 4VMs and 26.6ms at 32VMs. So while the load increased 8x, latency didn’t even double.
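Little’s law offers a useful cross-check on these figures. Sysbench runs a closed loop, so each of the 32 threads per VM has roughly one transaction in flight at a time, and throughput should then be about concurrency divided by average latency. The sketch below is our own check rather than part of the test methodology, and it assumes the reported latency is a per-transaction average.

```python
THREADS_PER_VM = 32


def littles_law_tps(vms: int, avg_latency_ms: float) -> float:
    """Throughput implied by Little's law: X = N / R, with N threads in flight."""
    in_flight = vms * THREADS_PER_VM
    return in_flight / (avg_latency_ms / 1000.0)


def implied_throughput_gain(vms_lo, lat_lo_ms, vms_hi, lat_hi_ms) -> float:
    """Ratio of Little's-law throughput between two load points."""
    return littles_law_tps(vms_hi, lat_hi_ms) / littles_law_tps(vms_lo, lat_lo_ms)


if __name__ == "__main__":
    predicted = littles_law_tps(32, 26.6)
    measured = 38_673
    gap = abs(predicted - measured) / measured
    print(f"32 VMs: predicted {predicted:,.0f} TPS, measured {measured:,} TPS ({gap:.1%} apart)")
    print(f"4 -> 32 VMs implied throughput gain: {implied_throughput_gain(4, 14.3, 32, 26.6):.1f}x")
```

The prediction lands within about half a percent of the measured 38,673 TPS, and an 8x increase in concurrency paired with a 1.86x rise in latency implies roughly a 4.3x gain in throughput between the 4VM and 32VM points.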

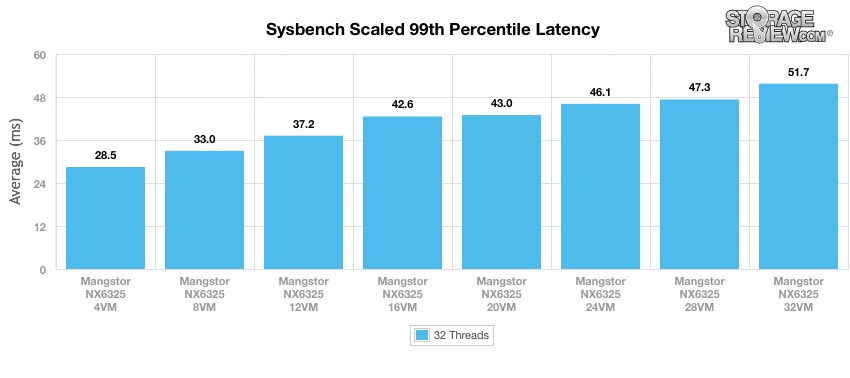

Looking at tail latency through our 99th percentile figures, the Mangstor NX6325 held up well under load. Values rose gradually as the overall load increased, with no dramatic spikes.
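For readers less familiar with tail-latency metrics, the 99th percentile is the latency that 99% of transactions meet or beat. A minimal Python sketch using the nearest-rank definition is shown below; Sysbench computes its percentiles internally and may bin latencies differently, so treat this as an illustration of the metric rather than of the tool’s implementation. The sample latencies are hypothetical.

```python
import math
from statistics import mean


def percentile_nearest_rank(samples, pct: float) -> float:
    """Smallest sample such that at least pct% of all samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical latencies (ms): mostly fast, with a couple of slow outliers.
    latencies = [12.0] * 98 + [40.0, 55.0]
    print(f"mean = {mean(latencies):.2f} ms")
    print(f"p99  = {percentile_nearest_rank(latencies, 99):.1f} ms")
```

The two outliers barely move the mean (12.71ms) but set the p99 at 40ms, which is why the 99th percentile is a better gauge of how consistently an array behaves as load climbs.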

Conclusion

The Mangstor NX6325 NVMe over Fabrics (NVMf) shared storage array is one of the first commercially available systems that takes very fast NVMe storage out of the application servers and puts it in a box that an entire cluster of servers can use. The NX6325 we reviewed offers 10.8TB of NVMe storage in an HPE ProLiant DL380 Gen9, over a fabric consisting of a Mellanox SN2700 100GbE switch and ConnectX-4 Lx adapter cards. The result is an absolutely scorching package, the fastest shared storage ever to hit our lab. To be fair, it isn’t directly comparable with other fast systems we’ve seen (such as more traditional all-flash SANs), so a few things need to be made clear.

NVMf is still a very young concept in terms of execution. Mangstor is well along the path, and for extremely latency-sensitive applications the results are phenomenal. A few things still need to mature before this approach can displace SAN technology everywhere, but for key workloads it’s a fantastic option. Unlike some NVMf solutions that rely on customized hardware, Mangstor uses standard Ethernet or InfiniBand for the fabric, which makes it more broadly compatible. It’s also important to note that the way this array presents storage offers no protection against a PCIe SSD failure: the drives are configured in RAID0 for peak performance and capacity. Some applications can cope with a system like this, but for traditional workloads, it must be stressed that this array is not a “one size fits all” solution.
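RAID0 trades resilience for speed and capacity: data is striped across all four SSDs, so usable capacity is the sum of the drives, but losing any one drive loses the array. The Python sketch below models that layout. The 128KB stripe unit and the 99% per-drive survival probability are hypothetical values chosen for illustration, not Mangstor specifications, and drive failures are assumed to be independent.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Raid0:
    drives: int
    drive_tb: float
    stripe_kb: int = 128  # hypothetical stripe unit size

    def capacity_tb(self) -> float:
        """Striping adds no redundancy, so every drive's capacity is usable."""
        return self.drives * self.drive_tb

    def locate(self, offset_kb: int) -> Tuple[int, int]:
        """Map a logical offset (KB) to (drive index, offset on that drive in KB)."""
        stripe, within = divmod(offset_kb, self.stripe_kb)
        drive = stripe % self.drives   # stripes rotate round-robin across drives
        row = stripe // self.drives    # full stripe rows preceding this one
        return drive, row * self.stripe_kb + within

    def survival_probability(self, per_drive: float) -> float:
        """The array survives only if every drive survives."""
        return per_drive ** self.drives


if __name__ == "__main__":
    array = Raid0(drives=4, drive_tb=2.7)
    print(f"Capacity: {array.capacity_tb():.1f} TB")
    print(f"Offset 517KB lives on (drive, offset): {array.locate(517)}")
    print(f"Survival if each drive is 99% reliable: {array.survival_probability(0.99):.4f}")
```

With four drives, a hypothetical 99% per-drive survival rate leaves the array at about 96%, which illustrates why this configuration suits applications that can tolerate or work around the loss of a drive.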

Our Sysbench OLTP results were extraordinary. At 4VMs, aggregate TPS was already higher than what most arrays deliver in total, or even what locally attached NVMe flash may offer. As we added VMs, aggregate performance continued to climb until it outpaced the nearest flash shared-storage solution by more than a factor of two. The main downside for our environment right now is driver support, which prevents the array from being used as a VMware shared datastore. This creates challenges such as VM migration (VMs are tied to a virtual network device) and guest OS support, which currently centers on Linux. However, for users who can work around these limitations, the Mangstor NX6325 opens the door to levels of performance that few, if any, competing solutions can match.

Pros

- Takes all the performance potential of NVMe and shares it over a high-speed network

- Fastest shared storage solution we’ve tested to date

- Not tied to customized hardware for the network fabric

Cons

- Requires a good deal of manual configuration

The Bottom Line

The Mangstor NX6325 NVMe Over Fabrics (NVMf) shared storage array offers exceptionally fast NVMe flash performance over a shared Ethernet fabric.
