Almost two years ago, we completed a review of a Viking Enterprise Solutions (VES) storage server with 24 NVMe bays and twin compute nodes in a 2U chassis. VES is a major OEM, creating some of the most innovative storage server systems on the market. Recently, we had the opportunity to get hands-on with a version of their storage server tuned for single-ported NVMe drives. Naturally, we took 24 Solidigm P5316 30.72TB QLC SSDs, dropped them in the server, and stood back to see what almost 750TB of raw flash can do.

Beyond their OEM work, VES also sells to a variety of HPC and hyperscale customers. This matters because organizations with massive data footprints configure storage differently than the traditional enterprise does, and that changes how we should evaluate storage server performance.

Many of the workloads these servers target are what we consider modern analytics and AI applications, where performance is critical and data availability a little less so. As such, these configurations do not look like a traditional SAN, where data services and resiliency are the primary focus. In this example, we’re configuring for optimal performance within the VES storage server rather than adding I/O cards and using the server as shared storage.

This configuration nuance is important. We’re assigning 12 of the P5316 SSDs to each AMD EPYC compute node in the back of the system. Each node addresses its drives as JBOD, relying on application-level resiliency for data availability. While we didn’t use GPUs for this report, it’s quite reasonable to configure those nodes with something like an NVIDIA A2 for analytics or inferencing workloads.

Before we dive too far into the server and storage configuration, though, let’s look into the key hardware components that are part of this work.

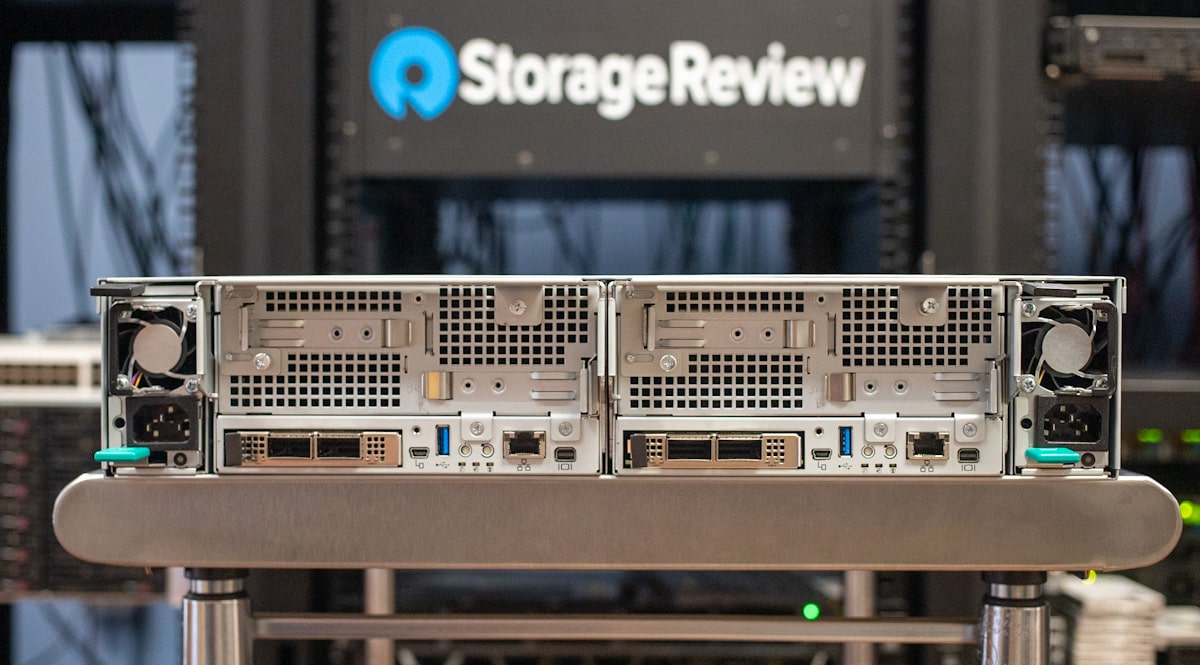

Viking Enterprise Solutions VSS2249P Storage Server

For this work, we turned to VES for a server powerful enough to get the most out of the 24 Solidigm P5316 30.72TB SSDs in the front. This isn’t a trivial requirement; single-ported drives perform best in a system that delivers four PCIe Gen4 lanes from one of the AMD server nodes directly to each drive. Direct access gives each SSD its highest performance, whereas routing traffic through an internal expander can limit bandwidth. Additionally, this system is designed for single-ported SSDs such as the Solidigm P5316, unlike the Viking Enterprise Solutions server in our previous review, which was designed for dual-ported SSDs.
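To put the direct-attach design in perspective, here is a rough PCIe lane budget for one node. This is a back-of-the-envelope sketch, not a VES wiring diagram: it assumes the single-socket EPYC Rome CPU exposes its standard 128 PCIe Gen4 lanes and takes the slot widths from the VSS2249P spec table. How the platform allocates the remaining lanes (boot SSD, BMC, and so on) is an assumption we haven’t verified.

```python
# Rough PCIe lane budget for one VSS2249P node (back-of-the-envelope sketch).
# Assumption: the single-socket AMD EPYC Rome CPU exposes 128 PCIe Gen4 lanes;
# slot widths come from the VSS2249P spec table. Actual platform wiring is not verified.
EPYC_ROME_LANES = 128

lane_consumers = {
    "12 x U.2 NVMe SSDs (x4 each)": 12 * 4,
    "2 x PCIe Gen4 x16 slots": 2 * 16,
    "1 x OCP 3.0 x16 slot": 1 * 16,
}

used = sum(lane_consumers.values())
for name, lanes in lane_consumers.items():
    print(f"{name:<30} {lanes:>3} lanes")
print(f"{'Total':<30} {used:>3} of {EPYC_ROME_LANES} lanes")
# Whatever is left must also cover the M.2 boot SSD and other platform devices.
print(f"{'Headroom':<30} {EPYC_ROME_LANES - used:>3} lanes")
```

Because the 48 drive lanes fit comfortably within the CPU’s budget under these assumptions, no drive has to share upstream bandwidth through a switch.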

Viking Enterprise Solutions VSS2249P Highlights

The Viking Enterprise Solutions VSS2249P is a 2U dual-node storage server featuring 24 bays for single-port U.2 PCIe Gen4 drives. More specifically, each server node (or module) supports 12 single-port, hot-pluggable NVMe 2.5-inch U.2 (SFF-8639) SSDs, each connected via four PCIe Gen4 lanes, making this a performance-driven server. That makes it well suited to use cases where I/O bottlenecks can be an issue, such as edge-computing storage, analytics, machine learning, AI, OLTP databases, high-frequency trading, modeling, simulation, scientific research, and other high-performance workloads.

VES is a leading storage and server development company specializing in large-scale solutions for high-performance and cloud-computing enterprise OEM customers. Thanks to this broad customer portfolio, VES has extensive experience leveraging emerging technologies in its designs, which can give its customers a competitive advantage. We expect much of the same from the VSS2249P.

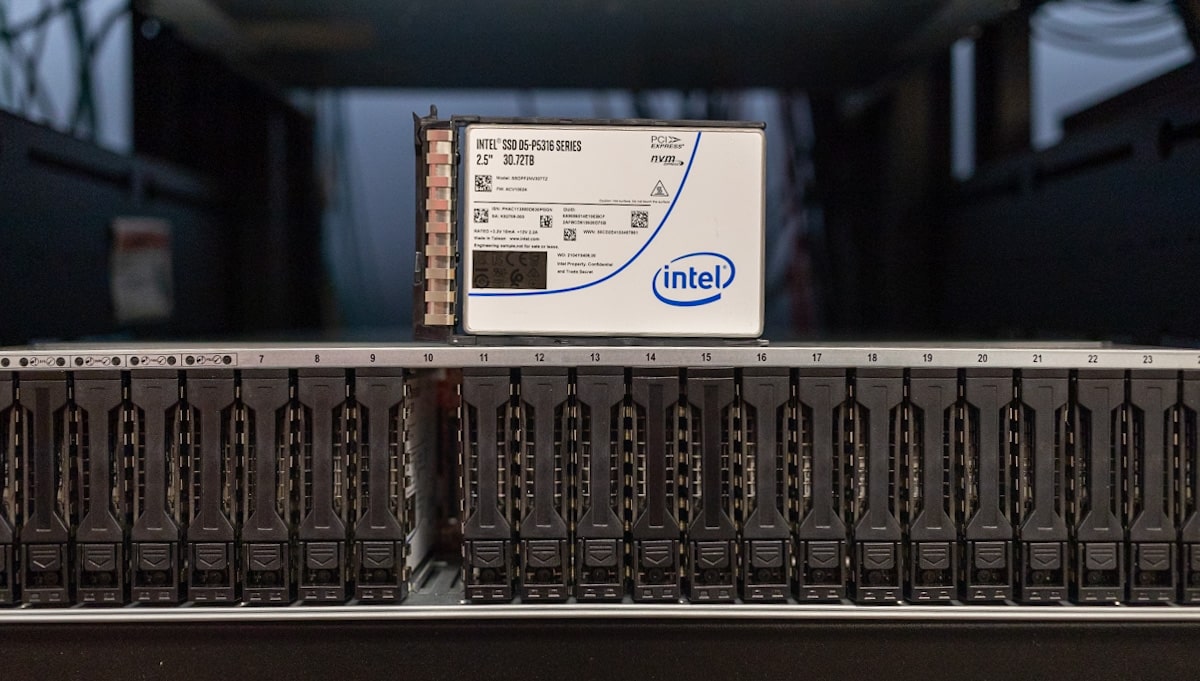

Solidigm D5-P5316

We’ve had this server in our lab before, but this time we’ve populated it with 30.72TB Solidigm D5-P5316 PCIe Gen4 NVMe SSDs in the U.2 15mm form factor, which adds up to almost three-quarters of a petabyte of raw storage. That density enables large-scale deployments, particularly in the hyperscale space. The D5-P5316 is built on 144-layer QLC NAND, which drives down cost while still enabling high capacities and solid performance.
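The "almost three-quarters of a petabyte" figure is simple multiplication. The sketch below spells it out in the decimal units drive vendors use and, as an added convenience, converts the total to binary units (TiB), since many operating-system tools report capacity that way.

```python
# Raw capacity of the tested configuration, in decimal units as drive vendors quote them.
DRIVE_TB = 30.72          # Solidigm D5-P5316 capacity point used in this review
DRIVES_PER_NODE = 12
NODES = 2

per_node_tb = DRIVE_TB * DRIVES_PER_NODE
total_tb = per_node_tb * NODES
total_tib = total_tb * 1e12 / 2**40   # binary units, as many OS tools report

print(f"Per node:       {per_node_tb:.2f} TB")
print(f"Per 2U chassis: {total_tb:.2f} TB raw ({total_tib:.0f} TiB)")
```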

The D5-P5316 is rated for up to 7GB/s in sequential reads, while the 30.72TB model offers slightly faster sequential writes than the smaller capacity, at 3.6GB/s. In 4K random reads, Solidigm rates the drive at 800,000 IOPS across all capacities. The drive also carries a 0.41 drive writes per day (DWPD) rating, a 5-year warranty, and a range of security features, including AES-256 hardware encryption, NVMe sanitize, and firmware measurement.
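As a reference point for the test results, the sketch below sums the per-drive spec-sheet figures across 24 drives and translates the DWPD rating into data written. Treat these as naive aggregates of "up to" numbers rather than guaranteed system ceilings. The endurance line assumes DWPD means full-drive writes per day sustained over the 5-year warranty; Solidigm’s datasheet may quote a different petabytes-written figure depending on the workload used for the rating.

```python
# Naive spec-sheet aggregates for 24 drives, using the per-drive figures quoted above.
DRIVES = 24
SEQ_READ_GBPS = 7.0          # "up to" sequential read, per drive
SEQ_WRITE_GBPS = 3.6         # 30.72TB model sequential write, per drive
RAND_4K_READ_IOPS = 800_000  # per drive
DWPD = 0.41
CAPACITY_TB = 30.72
WARRANTY_YEARS = 5

print(f"Sequential read sum:  {DRIVES * SEQ_READ_GBPS:.1f} GB/s")
print(f"Sequential write sum: {DRIVES * SEQ_WRITE_GBPS:.1f} GB/s")
print(f"4K random read sum:   {DRIVES * RAND_4K_READ_IOPS / 1e6:.1f}M IOPS")

# Assumption: DWPD = full-drive writes per day, sustained over the warranty period.
tb_per_day = DWPD * CAPACITY_TB
lifetime_pb = tb_per_day * 365 * WARRANTY_YEARS / 1000
print(f"Per drive: {tb_per_day:.1f} TB/day, ~{lifetime_pb:.1f} PB over {WARRANTY_YEARS} years")
```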

These drives are ideal for environments that need to optimize and accelerate storage for data center workloads such as Content Delivery Networks (CDN), Hyper-Converged Infrastructure (HCI), and Big Data.

Overall, we found that Solidigm has created a drive that strikes an outstanding balance between capacity, performance, and cost, which makes it a natural fit for the VSS2249P.

Viking Enterprise Solutions VSS2249P Components and Build

The two server modules inside the VSS2249P enclosure are hot-swappable, and each is equipped with an AMD EPYC Rome CPU, two x16 PCIe Gen4 slots, one OCP NIC 3.0 slot that supports PCIe Gen4 add-in cards, and up to 8 DIMMs. At 3.43 inches (H) x 17.2 inches (W) x 27.44 inches (D), the VSS2249P is also spec’d to fit in an industry-standard 19-inch, 1.0-meter rack, allowing it to be deployed in a wide range of environments.

Each node in our configuration includes an AMD EPYC 7402P CPU, which features 24 cores, 48 threads, a base clock of 2.8GHz (max boost of 3.35GHz), and 128MB of L3 cache. Each node is also outfitted with 64GB of DDR4 RAM (8 x 8GB) and a 250GB M.2 boot SSD.

The VSS2249P is designed as a cable-free system. The drive plane provides connectivity for power, data, and management, as well as for the PSUs. The system fans are also part of the server sled assembly (connected to the drive plane via the fan board) and are powered and controlled by the drive plane. For easy access, the fans are removed through the top cover. All SSDs plug directly into the mid-plane. This makes servicing the VSS2249P seamless, while the lack of cables improves airflow and keeps the server nodes cooler.

Viking VSS2249P Specifications

| Component | Specification |
|---|---|
| Enclosure | 2 Node, Single Port Drives |
| Gen4 PCIe Slots | Two x16 HH/HL, One x16 Gen4 OCP v3 |
| NTB | N/A |
| Server Canister | Single CPU, 8 DDR4 DIMM Slots |
| Firmware | |
| CPU | AMD EPYC (Rome or Milan) |
| Management Network | 1GbE management port |
| Servers | 2 hot-swappable server modules |
| Memory | Up to 8 DDR4 DIMMs per node |
| External Interfaces | 1 USB, 1 DisplayPort, 1Gb IPMI, 1 MicroUSB console port |
| AC Power | |
| Hot-swappable Components | |
| Operating Environment | |
| Non-Operating Environment | |
| 2U Enclosure Dimensions and Weight | 3.43 in (H) x 17.2 in (W) x 27.44 in (D) |

24 x Solidigm D5-P5316 Performance Tests

Most of us think of QLC flash as a lower-performance alternative to TLC SSDs, but that view captures only one side of the equation. Small-block random write performance may be lower because of architectural decisions such as coarse indirection, but sequential write and large-block random write performance are very competitive and very close to those of entry-level TLC data center SSDs.

Compared with TLC-based flash on the market, QLC write speeds are lower, but read performance remains highly competitive. Our focus in this review was to put 24 Solidigm P5316 30.72TB SSDs inside a 2-node server and see how far we could push them with plenty of compute behind them.

The last time we looked at a similar Viking Enterprise Solutions system, it was built to share 24 SSDs across two nodes, with each node having multi-path access to every SSD. The VSS2249P uses similar nodes on the backend, but 12 SSDs are directly connected to one node and the remaining 12 to the other. This gives each SSD a full four lanes of PCIe Gen4 back to the node it is connected to.

We installed Ubuntu 20.04 on each node and used FIO to saturate all 24 Solidigm P5316 SSDs simultaneously. Each SSD was first filled completely with a sequential fill and then partitioned so the workload footprint covered 5% of the drive surface. We focused on QLC-optimized block sizes, which overlap with those used for traditional flash media. The main difference comes down to minimizing writes smaller than 64K, which force read-modify-write operations on the drive’s coarse 64K indirection unit, a known pain point of QLC flash. With that said, we measured the following workloads:

- 1MB Sequential

- 64K Sequential

- 64K Random

- 64K Random 70R/30W

- 64K Random 90R/10W

- 4K Random Read
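The workload list above maps naturally onto fio job files. The sketch below is hypothetical: the review doesn’t publish its fio parameters, so the libaio engine, queue depth, runtime, and the per-drive partition names `/dev/nvme{N}n1p1` are all assumptions. It generates one job section per drive so each of a node’s 12 SSDs gets its own queue, with `group_reporting` rolling the results up into a single node-level figure.

```python
# Hypothetical sketch: generate fio job files for the workloads listed above.
# Assumptions (not from the review): libaio engine, iodepth=32, 300 s time-based
# runs, and one 5% partition per drive named /dev/nvme{N}n1p1 on each node.
# WARNING: write and mixed workloads overwrite whatever is on those partitions.

# label: (fio rw mode, block size, rwmixread percentage or None)
WORKLOADS = {
    "seq-1m-read": ("read", "1m", None),
    "seq-1m-write": ("write", "1m", None),
    "seq-64k-read": ("read", "64k", None),
    "seq-64k-write": ("write", "64k", None),
    "rand-64k-read": ("randread", "64k", None),
    "rand-64k-write": ("randwrite", "64k", None),
    "rand-64k-70r30w": ("randrw", "64k", 70),
    "rand-64k-90r10w": ("randrw", "64k", 90),
    "rand-4k-read": ("randread", "4k", None),
}

DEVICES = [f"/dev/nvme{i}n1p1" for i in range(12)]  # hypothetical: 12 drives per node


def fio_jobfile(rw, bs, devices, rwmixread=None, iodepth=32, runtime_s=300):
    """Return fio job-file text: shared [global] options plus one job per device."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        "time_based",
        f"runtime={runtime_s}",
        "group_reporting",
    ]
    if rwmixread is not None:
        lines.append(f"rwmixread={rwmixread}")
    for dev in devices:
        # One job section per drive so every SSD gets its own queue.
        lines += ["", f"[{dev.rsplit('/', 1)[-1]}]", f"filename={dev}"]
    return "\n".join(lines) + "\n"


rw, bs, mix = WORKLOADS["rand-64k-70r30w"]
print(fio_jobfile(rw, bs, DEVICES, rwmixread=mix))
```

Saving the printed text to a file such as `rand-64k-70r30w.fio` and running `fio rand-64k-70r30w.fio` on both nodes at once would approximate a whole-chassis run; the actual queue depths and job counts used in this review may differ.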

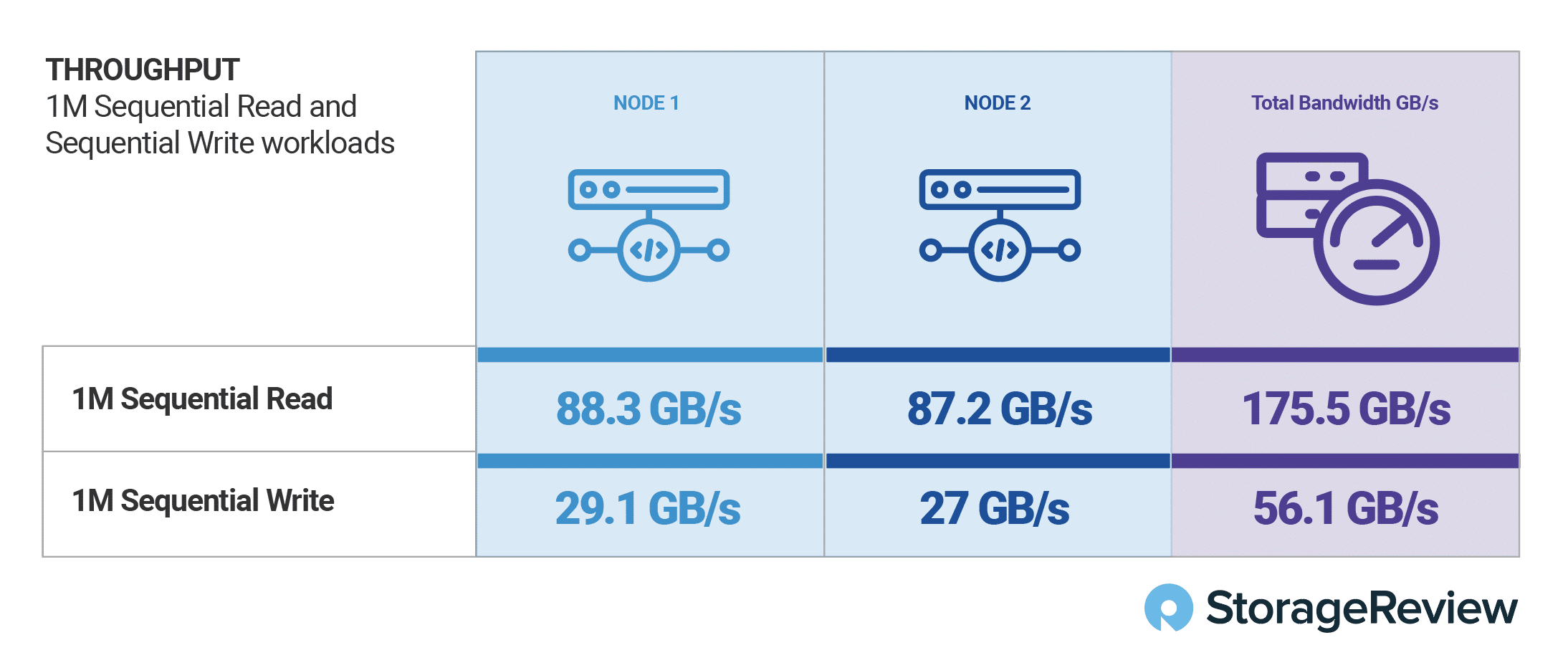

In our first test, with a 1MB sequential transfer size, we measured an incredible 175.5GB/s of read bandwidth across the 24 P5316 SSDs. This works out to just over 7.3GB/s per SSD. With a 1MB sequential write workload, throughput measured 56.1GB/s, or 2.34GB/s per SSD.
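The per-drive figures come from dividing the aggregate by 24. The sketch below also compares them with the raw signaling rate of a PCIe Gen4 x4 link (16 GT/s per lane with 128b/130b encoding). That ceiling is computed before packet and protocol overhead, so the usable maximum is somewhat lower than the number shown; it is meant only to illustrate how close reads come to filling each U.2 bay.

```python
# Per-drive share of the aggregate 1MB sequential results, compared with an x4 Gen4 link.
DRIVES = 24
# PCIe Gen4: 16 GT/s per lane, 128b/130b encoding. This ignores TLP/DLLP protocol
# overhead, so real-world link ceilings are somewhat lower.
LANE_GBPS = 16e9 * 128 / 130 / 8 / 1e9   # ~1.97 GB/s per lane
X4_LINK_GBPS = 4 * LANE_GBPS             # ~7.88 GB/s per U.2 bay

results = {"1M seq read": 175.5, "1M seq write": 56.1}   # GB/s, measured in this review
for name, aggregate in results.items():
    per_drive = aggregate / DRIVES
    print(f"{name}: {per_drive:.2f} GB/s per drive ({per_drive / X4_LINK_GBPS:.0%} of x4 link)")
```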

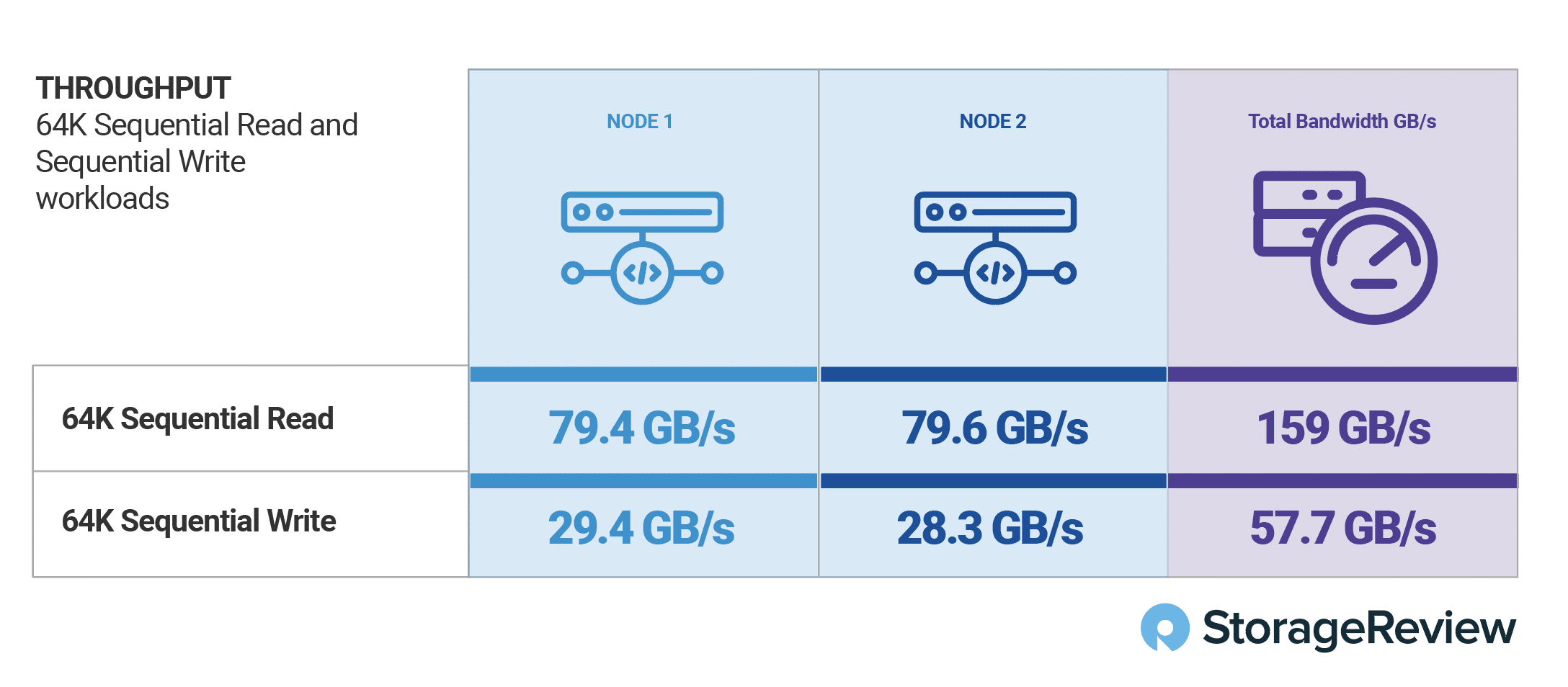

Decreasing the block size to 64K, the Solidigm P5316 SSDs delivered 159GB/s of read bandwidth, or over 6.62GB/s per SSD. The write workload measured 57.7GB/s, or 2.40GB/s per SSD.

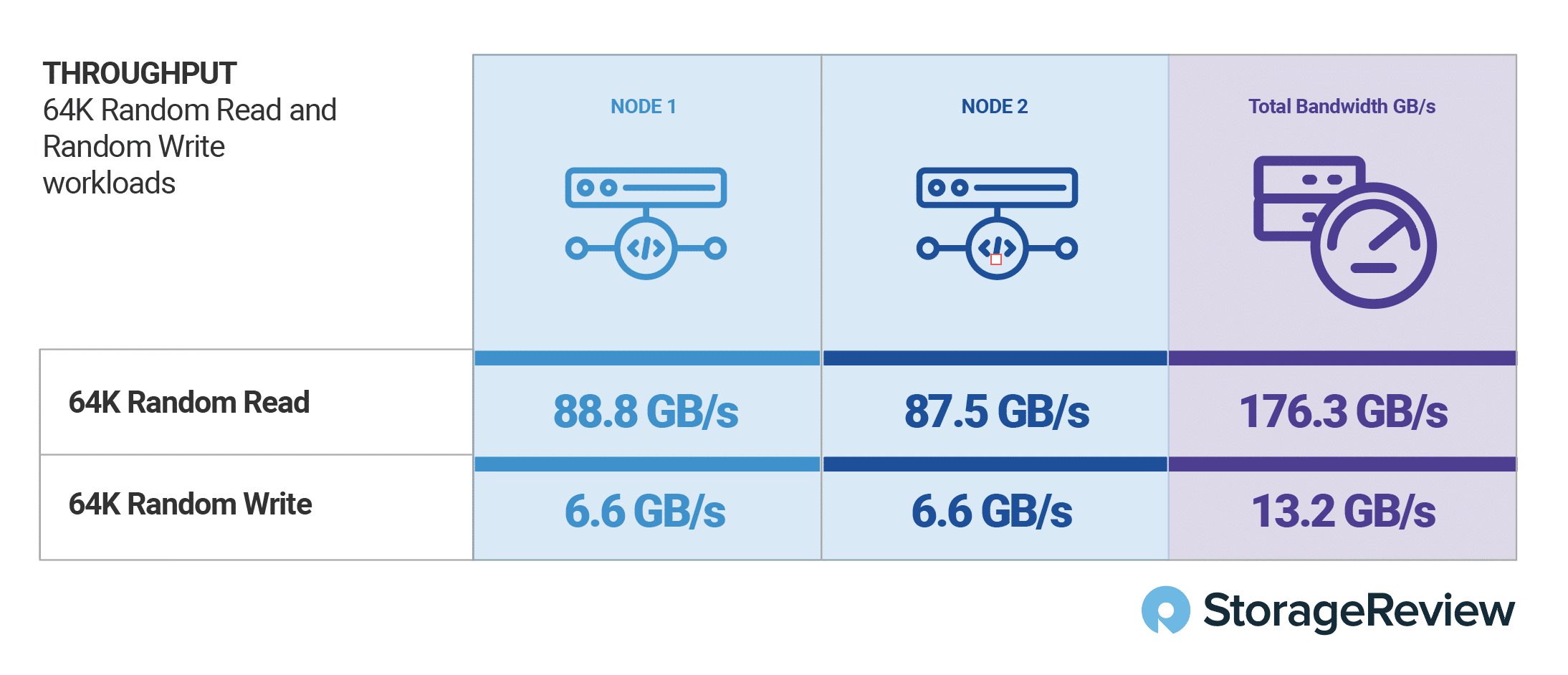

Since not all workloads are sequential, we moved to a more demanding 64K random working set, which puts QLC SSDs in one of their most stressful situations. Random reads posted the highest bandwidth of any test in this review, an insane 176.3GB/s. Switching from reads to writes, though, is where the P5316 SSDs saw the most stress, measuring 13.2GB/s, or 550MB/s per drive. This lines up with the spec sheet figures for this workload but does show where these SSDs reach their limit.

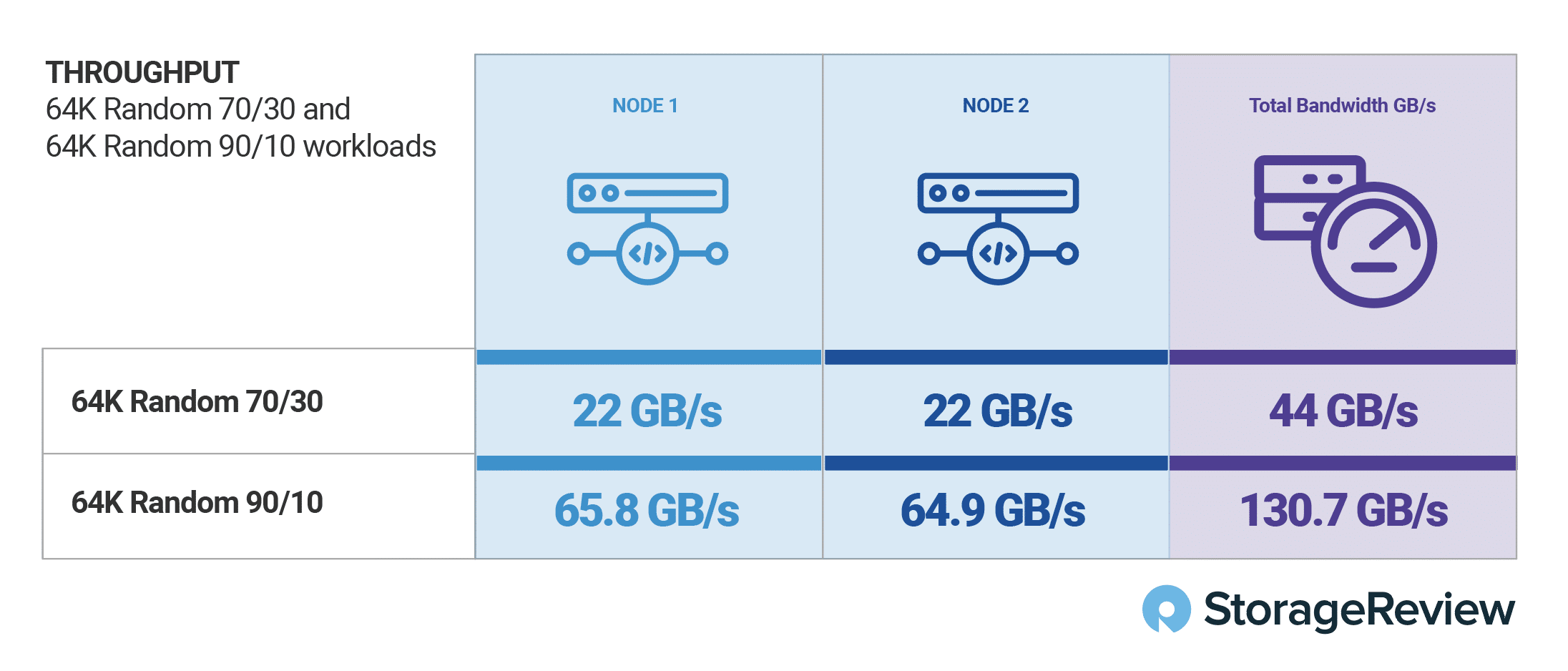

Knowing that 64K random read offered the highest drive performance and 64K random write the lowest, we looked at mixed workloads to see how performance changes as the read/write balance shifts. With a 70% read 64K random workload, the drive group measured 44GB/s. When we tweaked that further to 90% read, bandwidth shot up to 130.7GB/s. This drives home the point that QLC SSDs deployed in the right situations can be powerhouse drives, although they aren’t designed to replace TLC SSDs in all situations.

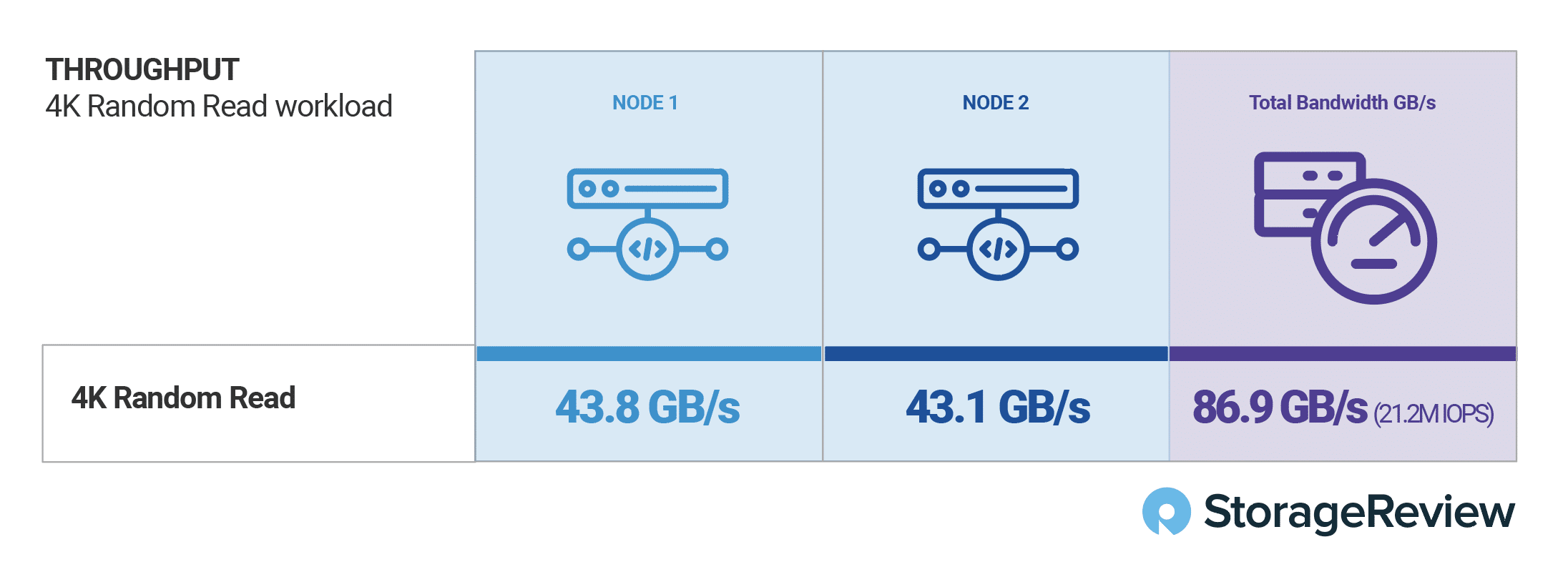

Wrapping up the testing, we looked at a peak throughput test focused on 4K random read performance. We skipped 4K writes because these drives use a coarse 64K indirection unit and won’t deliver their best performance at 4K. In 4K random read, we measured nearly 87GB/s of traffic, or 21.2 million IOPS. That is an impressive figure, closely aligned with TLC SSD offerings on the market.
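Two pieces of arithmetic sit behind this paragraph. First, IOPS and bandwidth are linked by the block size; assuming decimal GB/s and 4 KiB blocks, 21.2M IOPS works out to about 86.8GB/s, and the small gap from the 86.9GB/s figure comes from rounding the IOPS number. Second, the sketch models why sub-64K writes are costly. It is a deliberately simplified worst case, not Solidigm’s firmware behavior: it assumes every write is aligned to an indirection unit (IU) and that the drive must rewrite each whole 64K IU it touches, ignoring any buffering or write coalescing the controller may do.

```python
# 1) IOPS <-> bandwidth for the 4K random-read result (decimal GB/s, 4 KiB blocks).
BLOCK_4K = 4096
iops = 21.2e6
print(f"{iops / 1e6:.1f}M IOPS x 4 KiB = {iops * BLOCK_4K / 1e9:.1f} GB/s")

# 2) Simplified worst-case model of writes against a 64K indirection unit (IU).
IU_BYTES = 64 * 1024


def naive_write_amplification(write_bytes: int, iu_bytes: int = IU_BYTES) -> float:
    """Bytes rewritten per host byte if every IU the write touches is fully
    rewritten (read-modify-write). Assumes IU-aligned writes and no controller
    buffering or coalescing, so real drives can do considerably better."""
    ius_touched = -(-write_bytes // iu_bytes)  # ceiling division
    return ius_touched * iu_bytes / write_bytes


for size_kib in (4, 16, 64, 128):
    wa = naive_write_amplification(size_kib * 1024)
    print(f"{size_kib:>4} KiB write -> up to {wa:.0f}x")
```

Under this model a 4K write costs up to 16 times its size while 64K and larger writes cost nothing extra, which is why the testing favored 64K-and-up write block sizes.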

Final Thoughts

We’ve done extensive work with Solidigm’s QLC SSDs in the past, but this is by far the most significant work we’ve done with them to date, jamming nearly 750TB of storage into a 2U server. We wanted to see how the drives perform in a configuration where applications like analytics and inferencing can take advantage of modern platform design. While the general feeling toward QLC is that it’s only good for value or archive projects, that couldn’t be further from the truth.

Looking at performance, we can see the P5316 SSDs in the VES VSS2249P storage server were able to post amazing results. Large-block sequential performance is server-saturating, with each SSD nearly maxing out its Gen4 U.2 bay in read performance. We measured 175.5GB/s in 1M read, which worked out to 7.3GB/s per SSD.

Random-read performance was also great, topping out at 176.3GB/s with a 64K block size. But don’t sleep on the write performance; the drives did very well in large-block workloads. 64K sequential write measured 57.7GB/s, while 64K random write tapered down to 13.2GB/s. Read-heavy mixed workloads performed quite well, measuring 44GB/s in 64K 70/30 and just under 131GB/s in 64K 90/10. Finally, in small-block random read, we measured an amazing 86.9GB/s, or 21.2M IOPS, in our 4K workload.

In the past, we’ve tested the dual-node HA version of this Viking Enterprise Solutions server with dual-ported TLC SSDs. While the comparison isn’t exactly apples-to-apples, some interesting trend lines show that these QLC SSDs stand up very well against TLC solutions.

Both drive sets delivered an immense amount of bandwidth, with the TLC SSDs measuring 125GB/s and the Solidigm P5316 QLC SSDs measuring 159GB/s in 64K sequential read. Write performance was also close, with the TLC SSDs measuring 63.2GB/s in 64K sequential write compared to 57.7GB/s for the P5316s.
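Expressed as relative differences, the two 64K sequential results look like this. The sketch only restates the numbers from the paragraph above; as noted, the platforms and drive counts behind them aren’t identical, so the percentages describe these two test runs rather than QLC versus TLC in general.

```python
# Relative difference between the two systems in 64K sequential tests (GB/s, from this review).
# Caveat: different platforms and drive sets, so this is not an apples-to-apples comparison.
tlc = {"read": 125.0, "write": 63.2}
qlc = {"read": 159.0, "write": 57.7}

for op in tlc:
    delta = qlc[op] / tlc[op] - 1
    print(f"64K sequential {op}: QLC {qlc[op]} GB/s vs TLC {tlc[op]} GB/s ({delta:+.1%})")
```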

This data isn’t meant to suggest that QLC is a complete replacement for TLC in all applications; TLC still holds a big advantage as the write percentage and the need for endurance increase. For many use cases, though, QLC SSDs are ready for deployment and can often be faster than TLC competitors, especially when the workload is not heavily write-intensive.

Further, if you need a blend of capacity and performance, QLC data center SSDs win hands down; this is a unique combination that QLC, and in the future PLC, SSDs are well positioned to serve. Given that we posted over 175GB/s in this VES storage server across nearly three-quarters of a petabyte of storage in 2U, the rack efficiency looks compelling.
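One way to frame rack efficiency is density per rack unit. The sketch below uses this review’s raw capacity and measured 1MB sequential read, then extrapolates capacity to a hypothetical 42U rack filled only with these 2U systems. That rack layout is an assumption for illustration; real deployments reserve space for switches, PDUs, and cable management, so the rack-level figure is an upper bound.

```python
# Density per rack unit from this review's numbers, plus a hypothetical full rack.
# Assumption: a 42U rack holding only these 2U systems (no switches, PDUs, or
# cable management), which overstates what a real deployment would fit.
SYSTEM_U = 2
RAW_TB_PER_SYSTEM = 24 * 30.72      # ~737 TB raw per chassis
SEQ_READ_GBPS_PER_SYSTEM = 175.5    # measured 1M sequential read

print(f"Raw capacity per U:    {RAW_TB_PER_SYSTEM / SYSTEM_U:.1f} TB")
print(f"Sequential read per U: {SEQ_READ_GBPS_PER_SYSTEM / SYSTEM_U:.2f} GB/s")

RACK_U = 42
systems = RACK_U // SYSTEM_U
print(f"{systems} systems in {RACK_U}U: {systems * RAW_TB_PER_SYSTEM / 1000:.1f} PB raw")
```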

Solidigm sponsors this report. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
