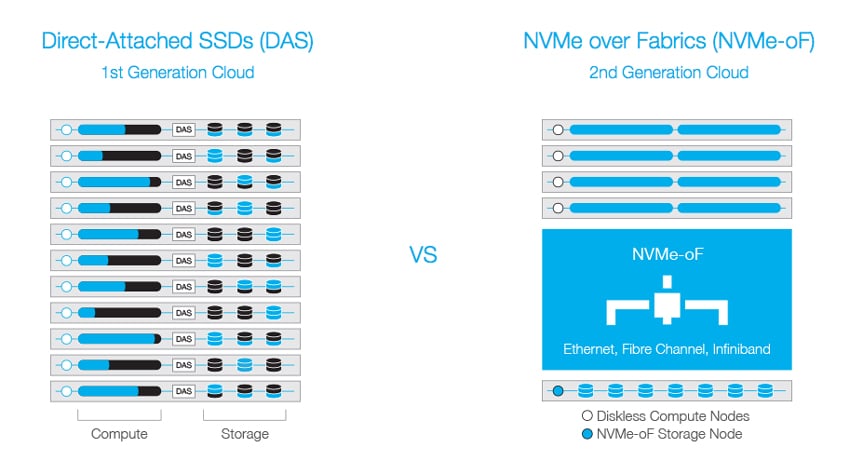

At the Open Compute Project this year, Toshiba announced the release of KumoScale, its new software built around NVMe over Fabrics (NVMe-oF). KumoScale is designed to extend the performance benefits of direct-attached NVMe drives across the data center network through disaggregation, abstraction, and management integration. The software makes already high-performing NVMe SSDs available over the network by allowing diskless compute nodes (with only a boot drive) to access this flash storage over high-speed fabric connectivity. This type of connection brings networked storage to near-peak performance.

While this software can be used on any standard x86 system platform, for our review we are leveraging the Newisys NSS-1160G-2N dual node server. The Newisys NSS-1160G-2N platform is optimized for the hyper-scale service model, with hot-swappable NVMe drives, balanced throughput from network to drives, cold aisle FRU-based servicing, redundant power and cooling, and other key scale-out data center requirements. Our server presents storage via two 100G Mellanox cards with 8x Toshiba NVMe SSDs on one node; the second node is used for management purposes. The load generation will come from a single Dell PowerEdge R740xd that is directly connected to the Newisys over dual 100G Mellanox ConnectX-5 NICs. The Newisys can hold up to 16 NVMe drives and dual Xeon server boards in its compact 1U form factor and is optimized for lowest latency and highest performance with direct-attach drives, though the server is a bit longer than what we are used to in our racks.

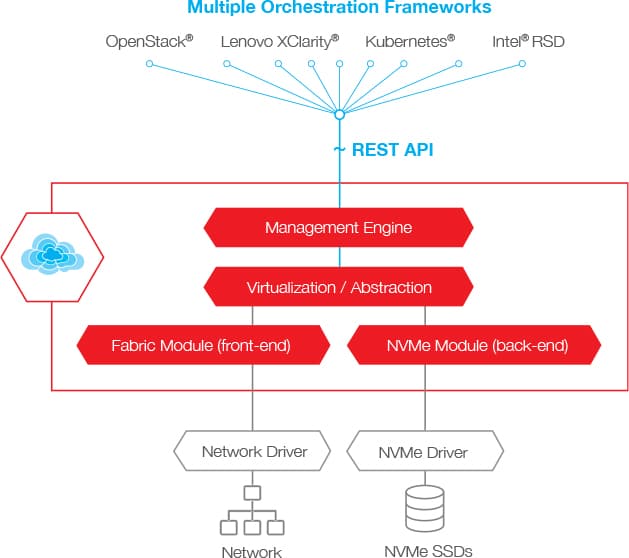

KumoScale holds several other benefits over traditional direct-attached SSDs. By leveraging NVMe-oF, users need fewer nodes to reach even higher compute power and storage. Having fewer nodes means that they can be managed more easily and at lower cost. Part of the reduction in costs comes from the elimination of stranded storage and compute power. KumoScale uses RESTful APIs to integrate with multiple orchestration frameworks; most interestingly, it works with Kubernetes. This will allow those that leverage Kubernetes for container storage to do so at much higher performance, with just the right amount of provisioned storage. Aside from Kubernetes, KumoScale also works with OpenStack, Lenovo XClarity, and Intel RSD.

Management

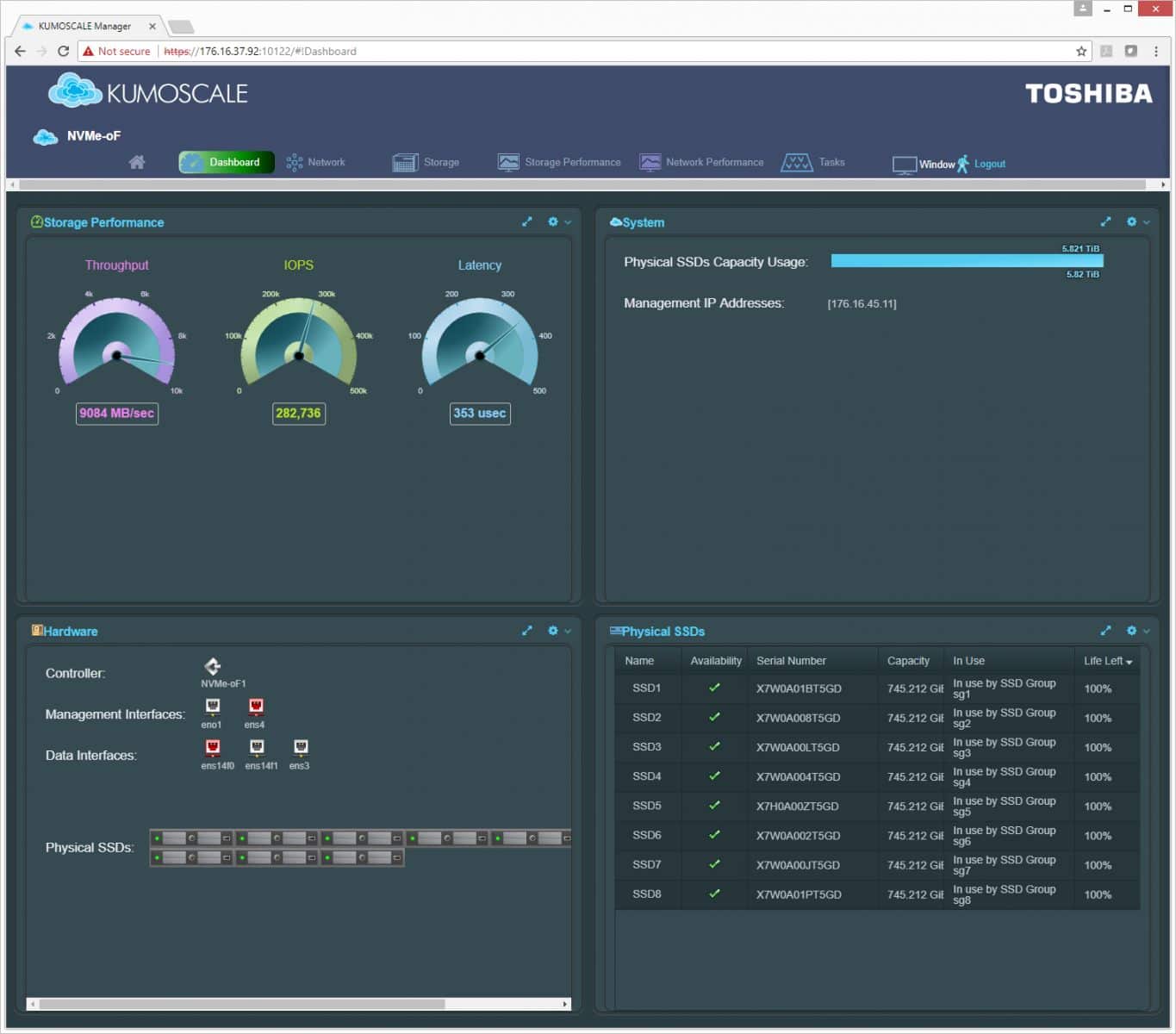

As a bit of a standout, KumoScale comes with a fairly lean and intuitive GUI. Typically, this type of solution is controlled through the CLI (and in fact, several aspects still will be). On the dashboard tab, users can easily see the storage performance, system capacity, and hardware status, and can drill down a bit into individual SSD status.

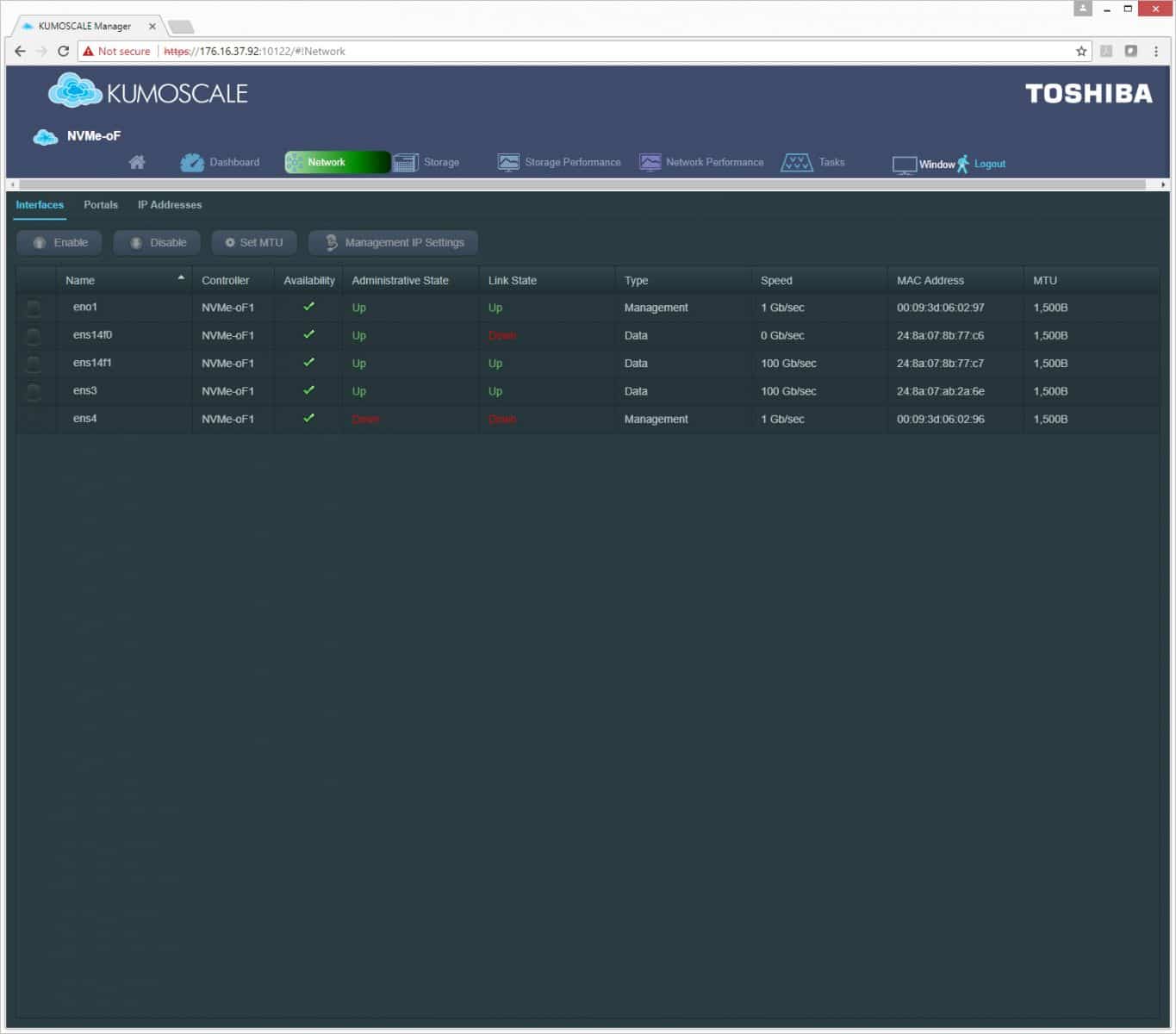

The next tab over is the network tab that shows the availability and link status of the controller(s), along with the type, speed, MAC address, and MTU.

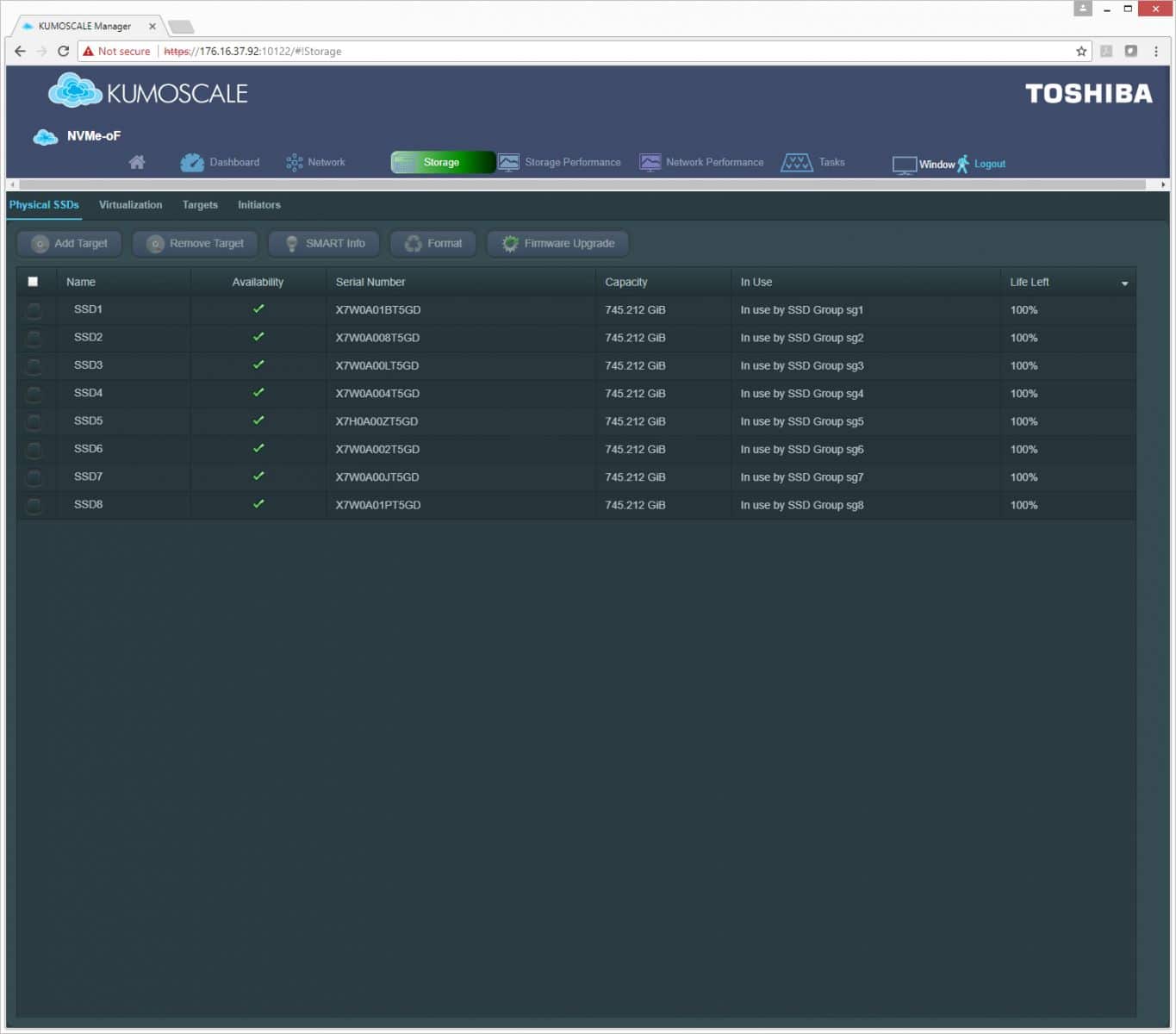

The storage tab breaks down into four sub-tabs. The first sub-tab is the physical SSDs. Here users can see the drives by their name, whether or not they are available, their serial numbers, their capacity, their group usage and the percentage of life left.

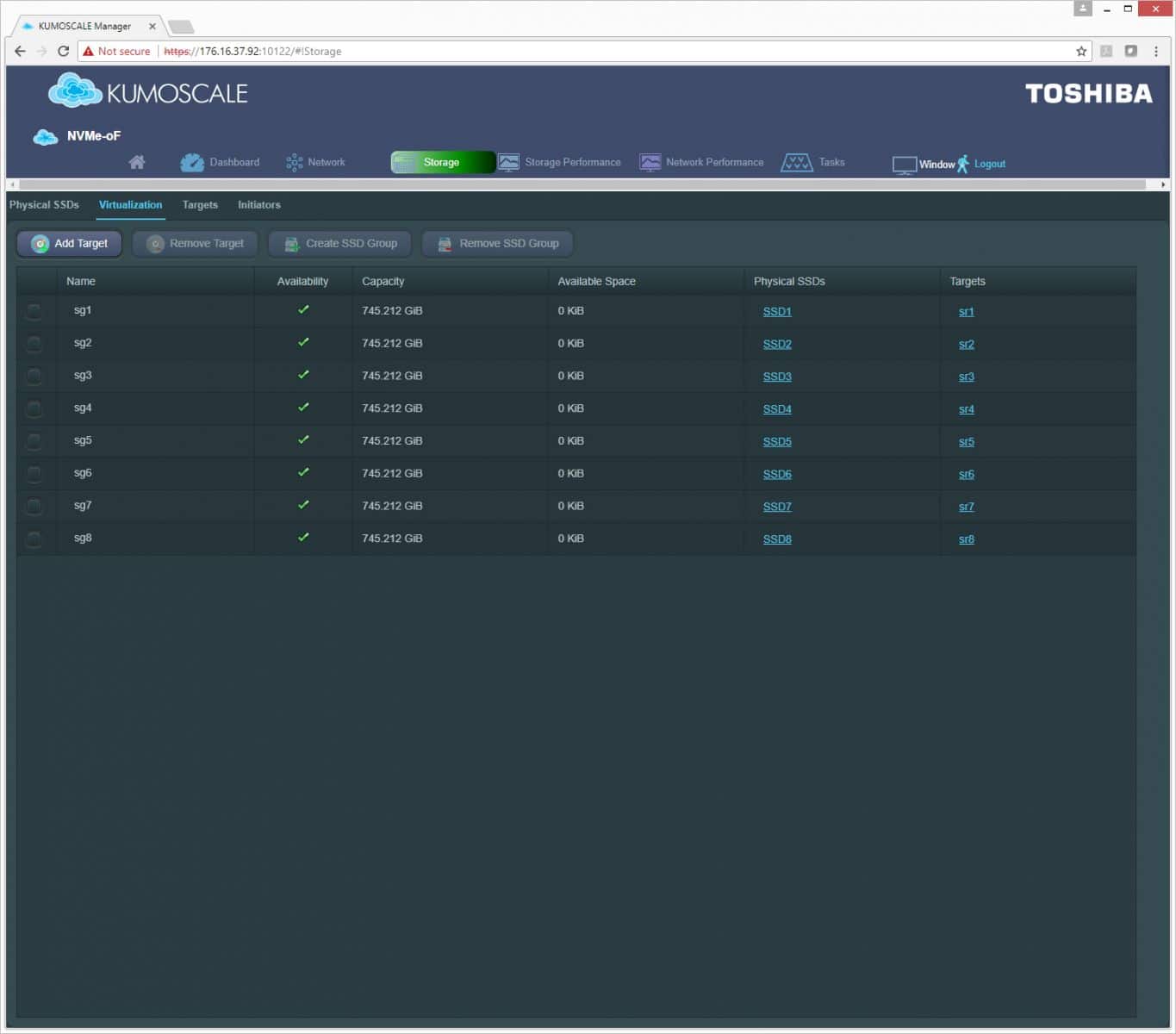

The next sub-tab is virtualized storage groups. This sub-tab is similar to the one above, listing name, availability, and capacity, as well as available space, the physical SSD each group is virtualized from, and its target.

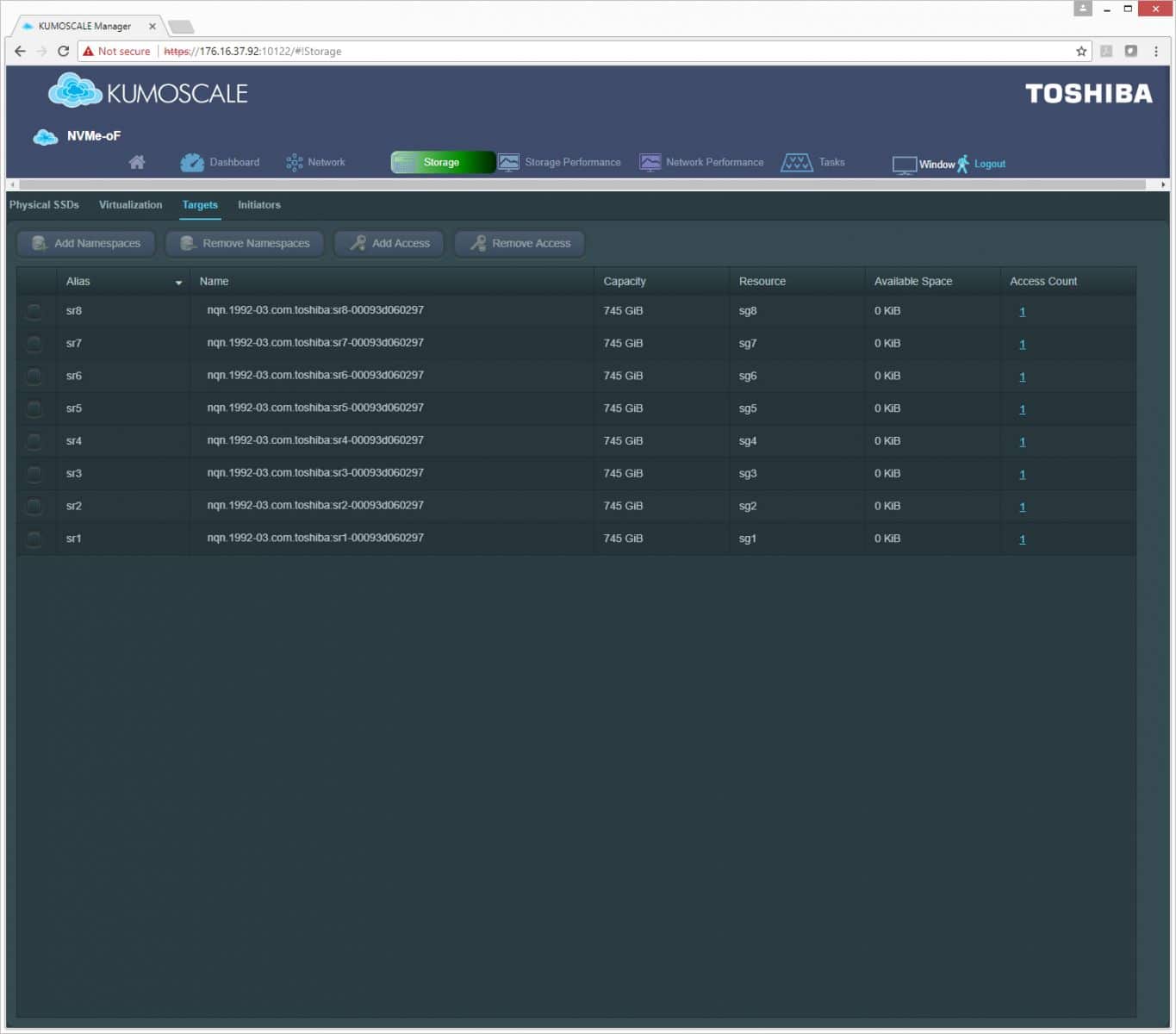

The next sub-tab, Targets, expands on the targets above and shows the virtualized storage exposed to the host, including group volumes.

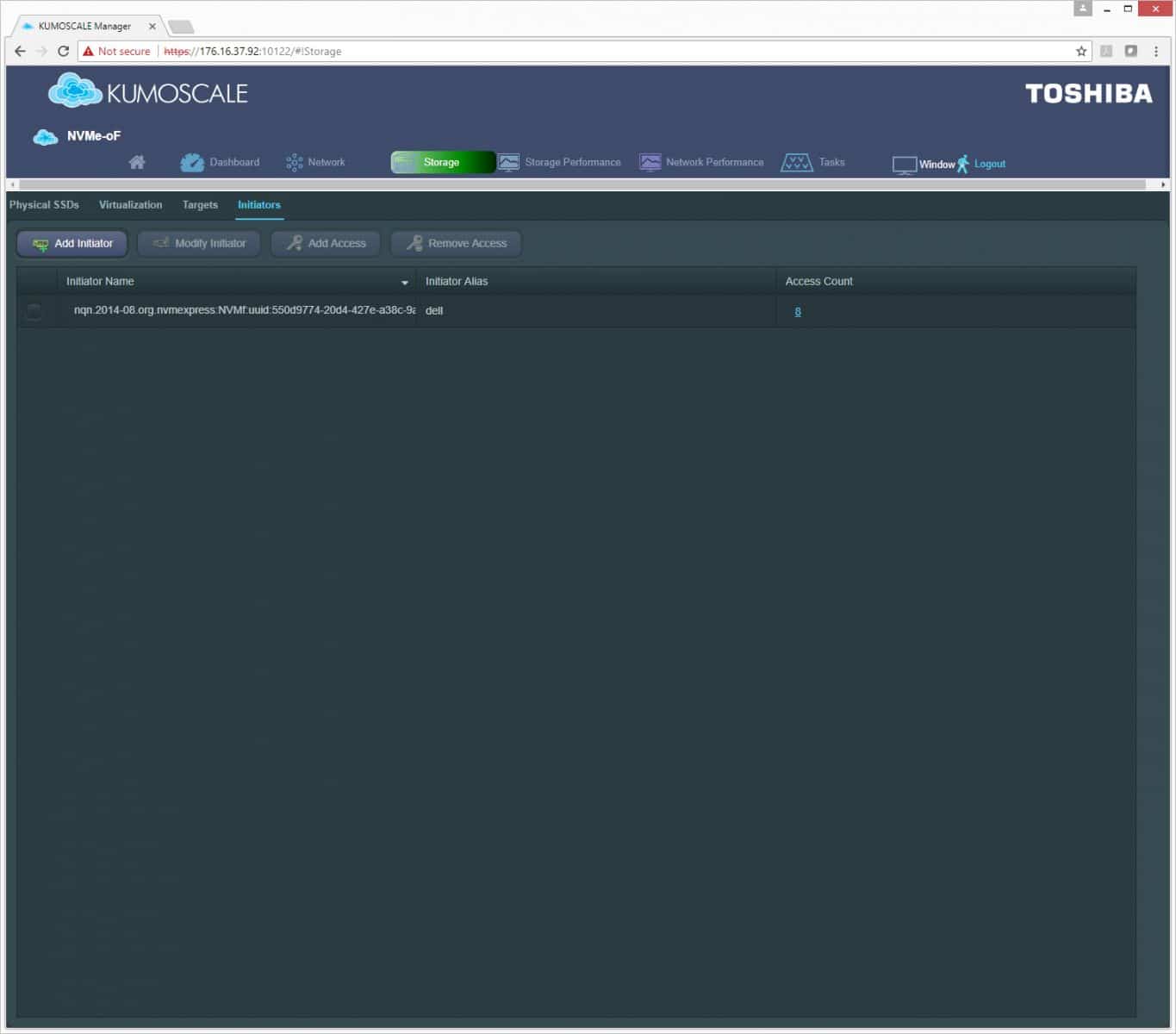

The final sub-tab under storage is the initiators tab. This tab gives each initiator's name, alias (in this case Dell), and access count. The user can grant access control (ACL) for each target-initiator pair.
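For context on what an initiator does from the host side, here is a minimal sketch of how a Linux host might attach to an NVMe-oF target using the standard `nvme-cli` tool. It only builds the command and does not run it. The RDMA transport, the address, and the NQN are illustrative assumptions and are not taken from this review or from KumoScale documentation; 4420 is the conventional NVMe-oF service port.

```python
import shlex


def nvme_connect_command(traddr, nqn, transport="rdma", trsvcid="4420"):
    """Build (but do not execute) an nvme-cli 'connect' invocation.

    All argument values used below are hypothetical placeholders.
    """
    return [
        "nvme", "connect",
        "--transport", transport,   # assumption: RDMA fabric
        "--traddr", traddr,         # target IP address
        "--trsvcid", str(trsvcid),  # target service port
        "--nqn", nqn,               # NVMe Qualified Name of the target
    ]


# Hypothetical target address (documentation range) and NQN.
cmd = nvme_connect_command("192.0.2.10", "nqn.2014-08.com.example:target-1")
print(shlex.join(cmd))
```

Once connected, the remote namespace appears on the host as an ordinary NVMe block device, which is why the target-initiator ACL matters: it decides which hosts are allowed to attach.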

The next main tab is Storage Performance. Here users can see a read-out of throughput, IOPS, and latency for a given time frame.

And finally, we come to network performance, which also gives users a breakdown of performance metrics, bandwidth and packets for a given time.

Performance

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of testing profiles, from "four corners" tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices. On the array side, we use our cluster of Dell PowerEdge R740xd servers.

Profiles:

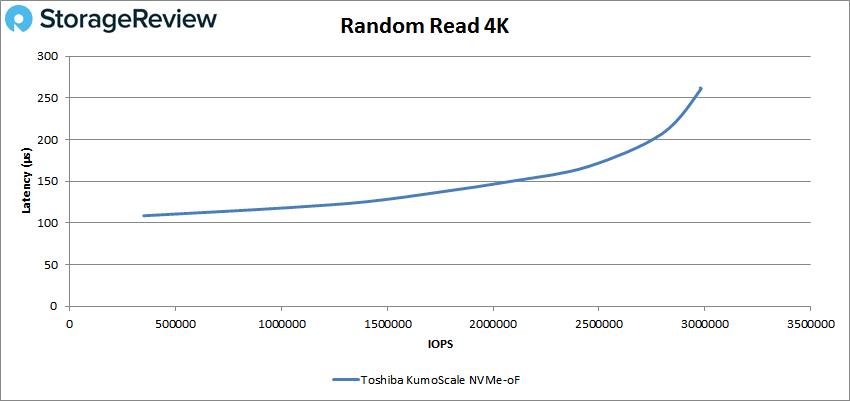

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

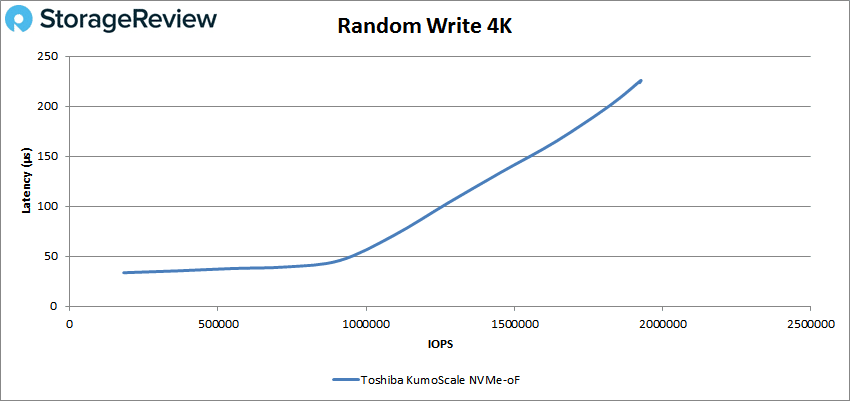

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

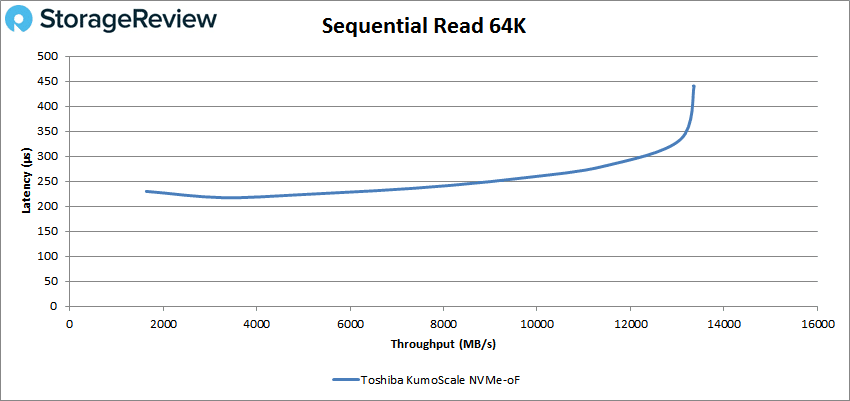

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

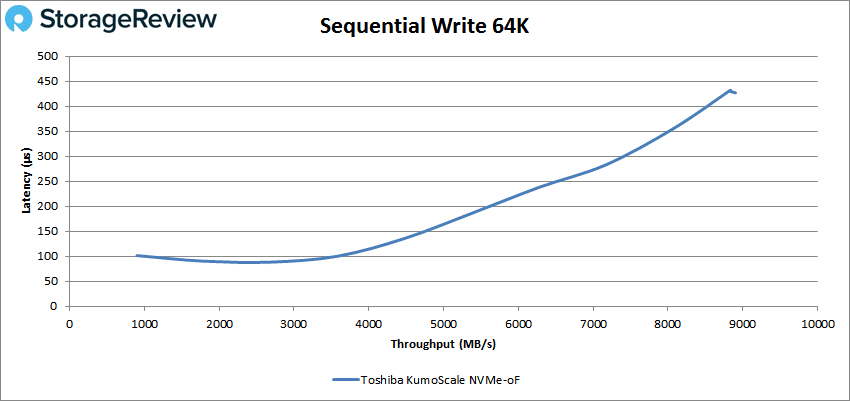

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
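The four-corner profiles listed above can be written down as plain data, which makes the sweep from low load to 120% easier to picture. This is only a sketch: the `Profile` type, the 10% step size, and the idea of scaling from a measured baseline rate are assumptions for illustration, not a description of the actual test scripts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    block_size_kib: int
    read_pct: int
    random: bool
    threads: int


# Values taken from the profile list in this review.
FOUR_CORNERS = [
    Profile("4K Random Read", 4, 100, True, 128),
    Profile("4K Random Write", 4, 0, True, 64),
    Profile("64K Sequential Read", 64, 100, False, 16),
    Profile("64K Sequential Write", 64, 0, False, 8),
]


def sweep_targets(baseline_iops, step_pct=10, top_pct=120):
    """Return (percent, target IOPS) pairs up to top_pct of a baseline.

    step_pct is an assumed granularity; the review only states 0-120%.
    """
    return [
        (pct, baseline_iops * pct // 100)
        for pct in range(step_pct, top_pct + 1, step_pct)
    ]


# Hypothetical baseline of 1,000,000 IOPS, for illustration only.
for pct, target in sweep_targets(1_000_000):
    print(f"{pct:>3}% -> {target:,} IOPS")
```

Each step pairs a throttled I/O rate with the latency observed at that rate, which is how the latency-versus-IOPS curves in reviews like this one are usually built.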

In 4K peak read performance, the Newisys with KumoScale (referred to as "the storage node" for the rest of this review as it is the only device being looked at) had sub-millisecond performance throughout the test, peaking at 2,981,084 IOPS with a latency of 260μs.

In 4K peak write performance, the storage node peaked at 1,926,637 IOPS with a latency of 226μs.

Switching to 64K peak read, the storage node had a peak performance of 213,765 IOPS or 13.36GB/s with a latency of 441μs.

For 64K sequential peak write, the storage node hit 141,454 IOPS or 8.83GB/s with a latency of 432μs.
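The relationship between the 64K IOPS and bandwidth figures above is simple arithmetic: throughput is IOPS multiplied by transfer size. The sketch below assumes that 64K means 64 KiB and that the GB/s figures are MiB/s divided by 1000. That unit convention is inferred from the numbers, not stated in the review.

```python
def throughput_gb_per_s(iops, block_kib):
    """Convert IOPS at a given block size to GB/s.

    Assumption: block size is in KiB, and 1 "GB" here means 1000 MiB.
    """
    mib_per_s = iops * block_kib / 1024  # KiB/s -> MiB/s
    return mib_per_s / 1000              # MiB/s -> "GB"/s (assumed convention)


read_gbps = throughput_gb_per_s(213_765, 64)
write_gbps = throughput_gb_per_s(141_454, 64)
print(f"64K read:  {read_gbps:.2f} GB/s")
print(f"64K write: {write_gbps:.2f} GB/s")
```

Under that assumption the read figure reproduces the reported 13.36GB/s, and the write figure lands within 0.01GB/s of the reported 8.83GB/s. A small gap like that is plausible if the IOPS and bandwidth peaks were not sampled at exactly the same instant.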

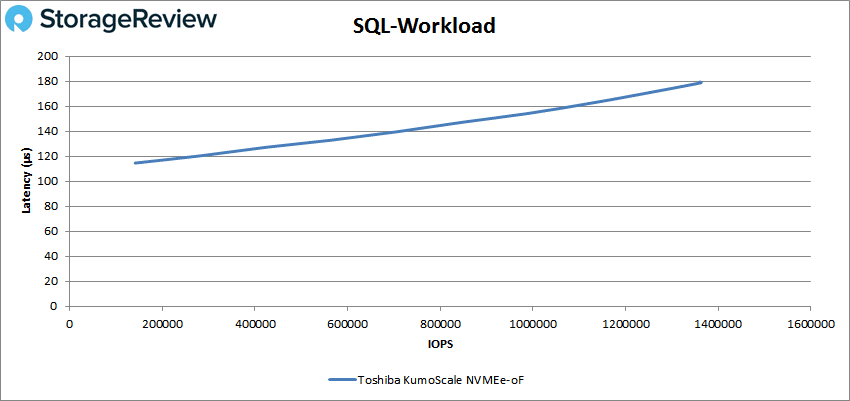

In our SQL workload, the storage node peaked at 1,361,815 IOPS with a latency of 179μs.

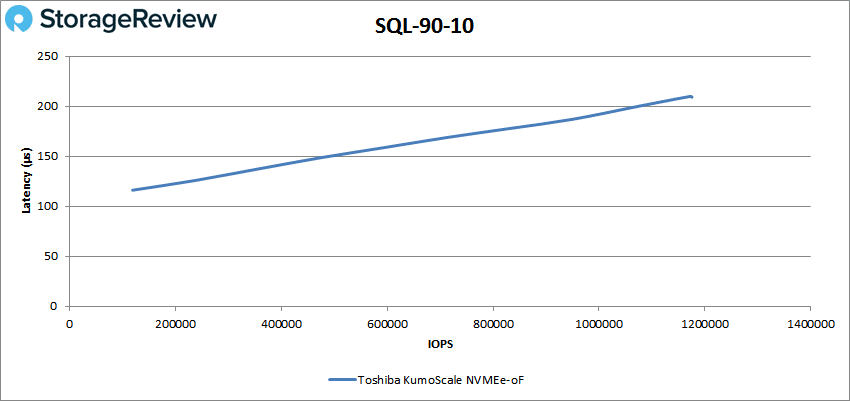

In the SQL 90-10 benchmark, we saw peak performance of 1,171,467 IOPS at a latency of only 210μs.

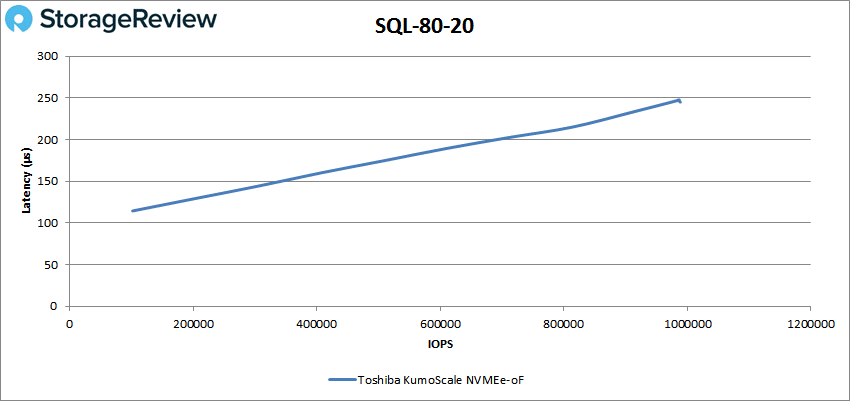

The SQL 80-20 benchmark showed the storage node hitting a peak performance of 987,015 IOPS with a latency of 248μs.

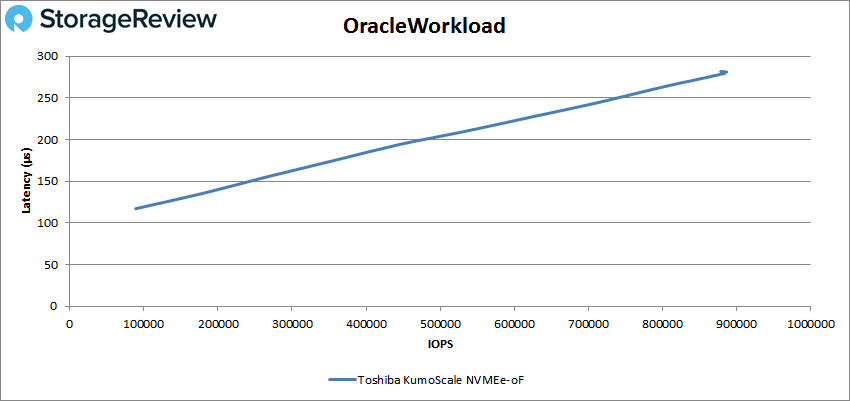

With the Oracle Workload, the storage node had a peak performance of 883,894 IOPS with a latency of 280μs.

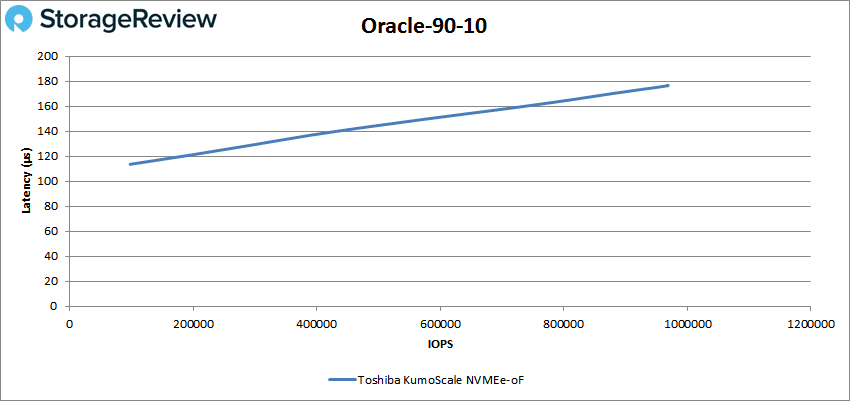

The Oracle 90-10 showed peak performance of 967,507 IOPS with a latency of 176μs.

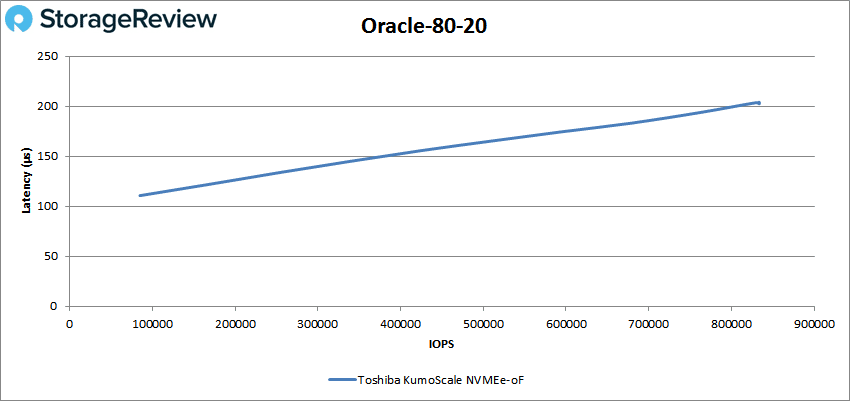

In the Oracle 80-20, the storage node was able to hit 829,765 IOPS with 204μs latency.

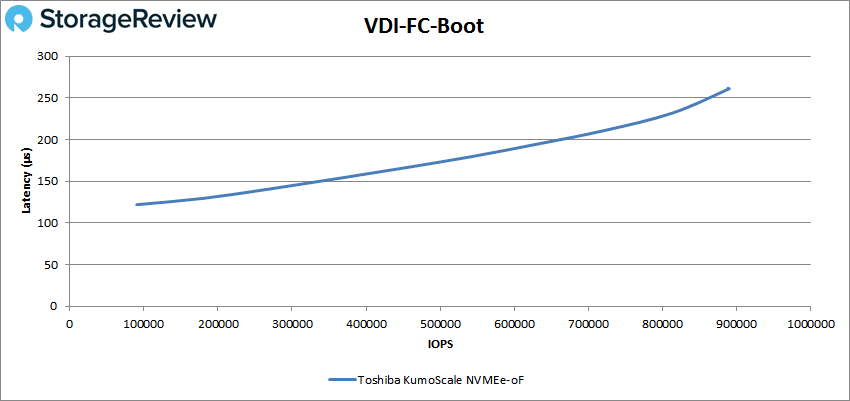

Next, we switched over to our VDI clone tests, Full and Linked. For VDI Full Clone Boot, the storage node peaked at 889,591 IOPS with a latency of 261μs.

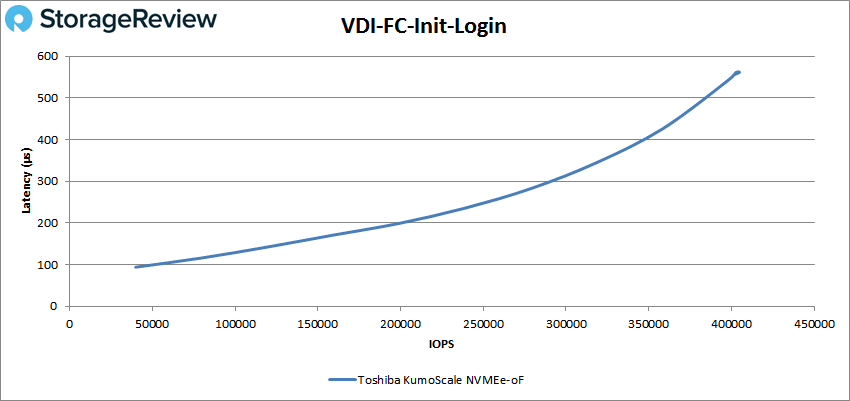

The VDI Full Clone initial login saw the storage node hit a peak of 402,840 IOPS with a latency of 562μs.

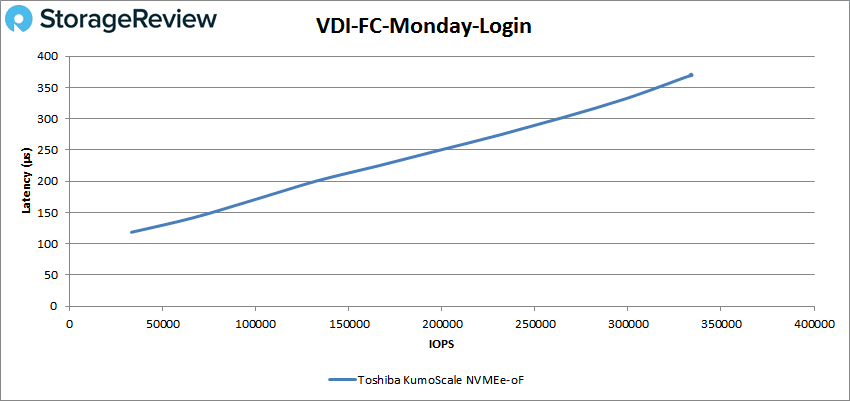

The VDI Full Clone Monday login showed a peak performance of 331,351 IOPS and a latency of 369μs.

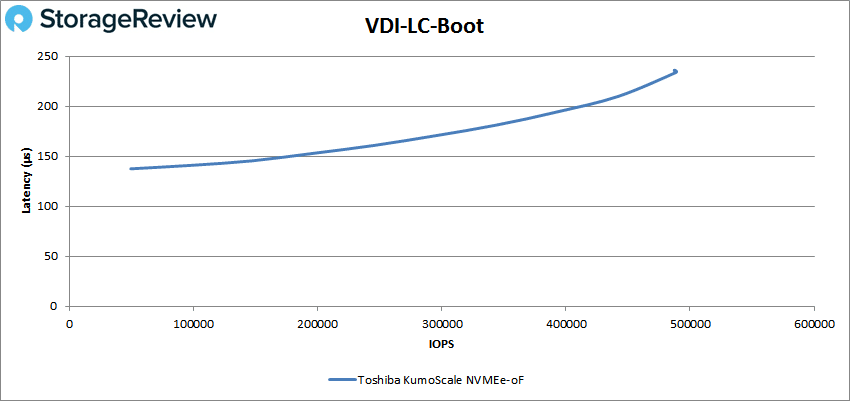

Moving over to VDI Linked Clone, the boot test showed a peak performance of 488,484 IOPS and a latency of 234μs.

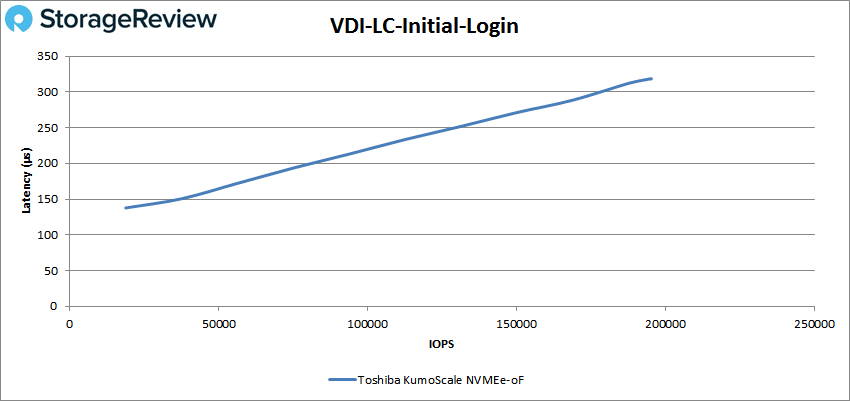

In the Linked Clone VDI profile measuring Initial Login performance, the storage node peaked at 194,781 IOPS with a latency of 318μs.

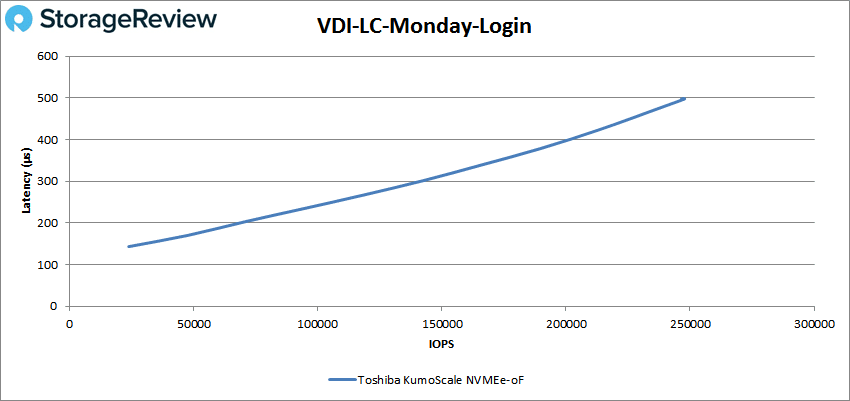

In our last profile, we look at VDI Linked Clone Monday Login performance. Here the storage node peaked at 247,806 IOPS with latency of 498μs.
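To make the peak figures above easier to compare, they can be collected into one table and queried. The sketch below uses only the numbers reported in this review; the dictionary layout and variable names are illustrative.

```python
# Peak results from this review: workload -> (peak IOPS, latency in microseconds)
RESULTS = {
    "4K random read": (2_981_084, 260),
    "4K random write": (1_926_637, 226),
    "64K sequential read": (213_765, 441),
    "64K sequential write": (141_454, 432),
    "SQL": (1_361_815, 179),
    "SQL 90-10": (1_171_467, 210),
    "SQL 80-20": (987_015, 248),
    "Oracle": (883_894, 280),
    "Oracle 90-10": (967_507, 176),
    "Oracle 80-20": (829_765, 204),
    "VDI Full Clone boot": (889_591, 261),
    "VDI Full Clone initial login": (402_840, 562),
    "VDI Full Clone Monday login": (331_351, 369),
    "VDI Linked Clone boot": (488_484, 234),
    "VDI Linked Clone initial login": (194_781, 318),
    "VDI Linked Clone Monday login": (247_806, 498),
}

# Workload with the highest latency at peak.
worst = max(RESULTS, key=lambda name: RESULTS[name][1])
worst_latency_us = RESULTS[worst][1]

# Workloads that exceeded one million IOPS.
million_plus = [name for name, (iops, _) in RESULTS.items() if iops >= 1_000_000]

print(f"Highest latency: {worst} at {worst_latency_us}μs")
print("Over 1M IOPS:", ", ".join(million_plus))
```

Every workload stays below 1,000μs at peak, with the VDI Full Clone initial login the highest at 562μs, and four workloads exceed one million IOPS.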

Conclusion

Designed to maximize the performance of block storage, KumoScale software pools NVMe SSDs together to deliver the right amount of capacity and IOPS that can be shared by thousands of job instances over NVMe-oF. This gives cloud users more flexibility, scalability, and efficiency. While KumoScale can be used in several different hardware options to create the storage node (Toshiba recommends Intel Xeon CPU E5-2690 v4 @2.30GHz or equivalent and 64GB of DRAM), we used the Newisys NSS-1160G-2N dual node server. Not only will NVMe-oF bring storage to near-peak performance, KumoScale also works with multiple orchestration frameworks including Kubernetes, OpenStack, Lenovo XClarity and Intel RSD.

The Newisys system powered by Toshiba KumoScale can certainly bring the thunder in terms of performance. Nowhere did the storage node come close to breaking 1ms; the highest latency was 562μs in the VDI Full Clone initial login. Some highlights include nearly 3 million IOPS in 4K read, nearly 2 million in 4K write, more than 1.3 million IOPS in the SQL workload, more than 1.1 million IOPS in the SQL 90-10, and nearly 1 million in the SQL 80-20. For 64K sequential performance, the storage node hit 13.36GB/s read and 8.83GB/s write.

While there is no question performance is astronomical, putting KumoScale in context really makes it shine. Latency and performance are dramatically better through this platform than through other, non-NVMe-oF platforms. Latency is closer to that of local storage, which is exactly what the NVMe-oF protocol strives for and what the applications these systems are aimed at require. Performance at scale is what should really matter, though. We looked at the performance of 8 SSDs in one storage node, whereas production systems would have multiple storage nodes, each with its own storage pools. Performance in that intended scenario blows traditional storage array metrics out of the water with ease, making KumoScale a game changer when it comes to NVMe-oF arrays. Toshiba has done extremely well in delivering performance efficiencies with KumoScale, and it even has a GUI for evaluation and development. Paired with the Newisys chassis, this solution will surely find success in large data centers that can make use of the throughput and latency benefits Toshiba KumoScale software offers.
