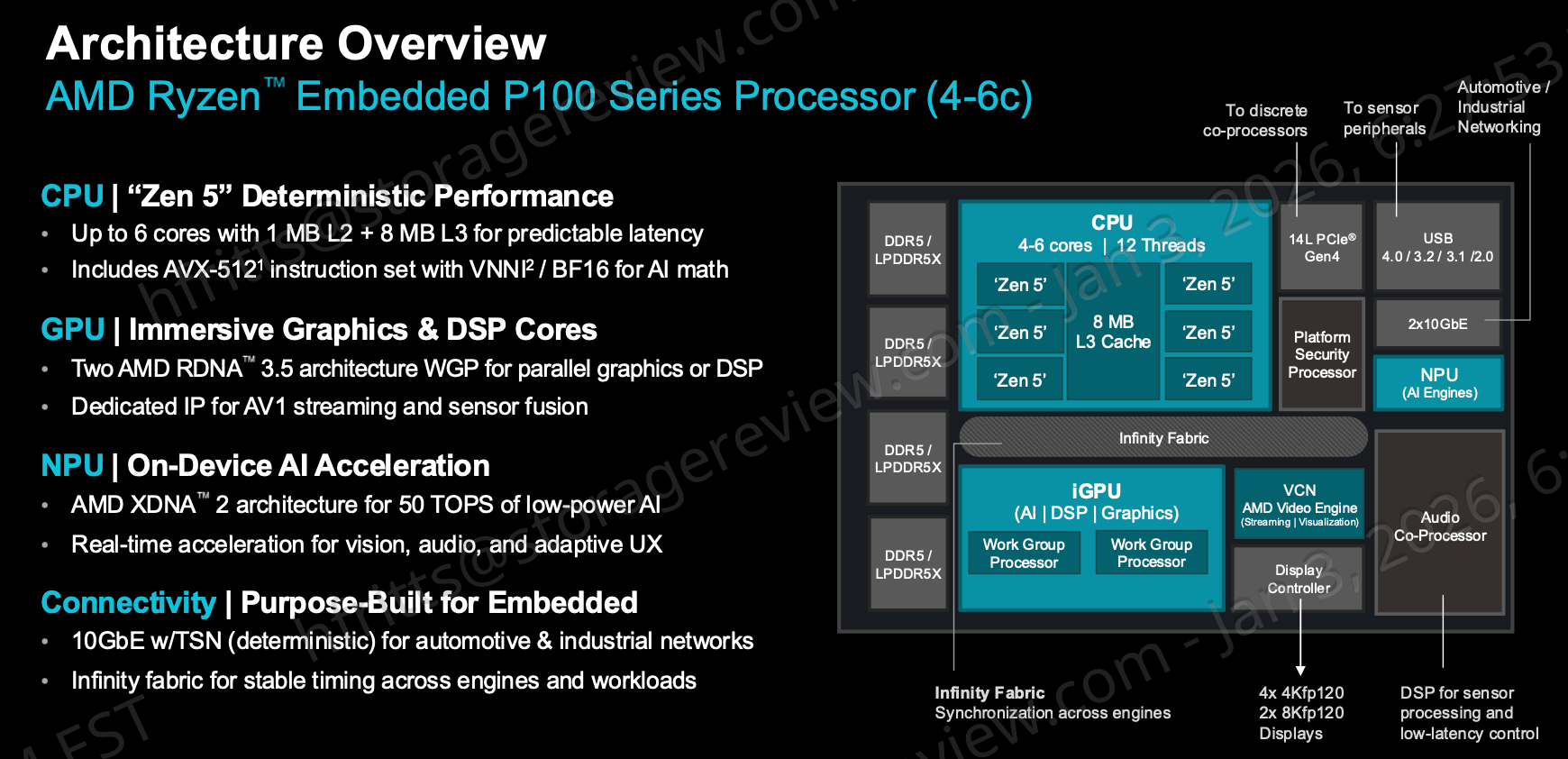

AMD has introduced its new Ryzen AI Embedded Processor lineup. This portfolio targets AI workloads at the edge for automotive, industrial automation, and emerging physical AI platforms, including humanoid robotics. It comprises the Ryzen AI Embedded P100 Series, which launches first, and the forthcoming X100 Series. These processors combine Zen 5 CPU cores, RDNA 3.5 graphics, and an XDNA 2 NPU in a compact BGA package.

Integrating the CPU, GPU, and NPU into a single SoC aims to provide energy-efficient, low-latency AI inference. This design is suited to embedded systems where power, thermal headroom, and board space are limited.

Platform Overview

Ryzen AI Embedded models use AMD’s latest Zen 5 CPU architecture for scalable x86 performance and consistent control. They pair this with an RDNA 3.5 GPU for real-time visualization and graphics. An integrated XDNA 2 NPU delivers up to 50 TOPS of dedicated AI acceleration, freeing the CPU and GPU from inference workloads.

AMD is positioning the portfolio for:

- Automotive digital cockpits and HMI systems

- Smart healthcare and industrial automation

- Physical AI and autonomous systems, such as robotics

Integrating the CPU, GPU, and NPU into a single device lets OEMs and Tier 1s simplify system design compared to multi-chip setups while still raising AI and graphics performance for in-vehicle or industrial applications.

Ryzen AI Embedded P100 Series

The first products to ship are the Ryzen AI Embedded P100 Series. They are available in 4- and 6-core configurations and are optimized for in-vehicle experiences and industrial HMI and control.

Key features include:

- 4-6 Zen 5 cores with boost clocks up to 4.5 GHz

- Up to a 2.2x improvement in single-thread and multi-thread performance compared to previous Ryzen Embedded platforms, based on AMD’s internal SPECrate2017_int_base estimates

- RDNA 3.5 GPU providing up to 35% better rendering performance than the previous generation in AMD’s GFXBench Vulkan testing

- XDNA 2 NPU with up to 50 TOPS for on-device AI inference

The P100 GPU supports up to four 4K displays or two 8K displays at 120 fps, meeting the demands of multi-screen digital cockpits and advanced industrial visualization. A hardware video codec engine enables high-fidelity, low-latency streaming and playback without consuming CPU resources.
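For a rough sense of the display load, the aggregate pixel rate of each maximum configuration can be computed directly. The sketch below assumes that 4K means 3840 x 2160 and 8K means 7680 x 4320 (the article does not state exact resolutions), and it ignores blanking intervals and any link compression.

```python
# Assumed resolutions; the source only says "4K" and "8K".
RESOLUTIONS = {"4K": (3840, 2160), "8K": (7680, 4320)}


def pixel_rate(kind: str, displays: int, fps: int = 120) -> int:
    """Active pixels per second summed over all displays (no blanking)."""
    width, height = RESOLUTIONS[kind]
    return width * height * displays * fps


four_4k = pixel_rate("4K", displays=4)
two_8k = pixel_rate("8K", displays=2)
print(f"4 x 4K @ 120 fps: {four_4k / 1e9:.2f} Gpixel/s")
print(f"2 x 8K @ 120 fps: {two_8k / 1e9:.2f} Gpixel/s")
```

Under these assumptions, the two-display 8K configuration drives twice as many pixels per second as the four-display 4K configuration.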

P100 SKUs operate within a nominal TDP range of 15-54 W, support a maximum operating junction temperature of 105 °C, and use a 25 mm x 40 mm BGA package. This combination is designed for harsh, space-limited edge applications, including fanless or semi-ruggedized designs.

AMD is offering both industrial-temperature and automotive-grade variants:

- Industrial SKUs rated from 0 °C to 105 °C or -40 °C to 105 °C

- Automotive-grade SKUs, such as P122a and P132a, with -40 °C to 105 °C operation and AEC-Q100 qualification, along with up to 10 years of longevity support

AI and Model Support

The XDNA 2 NPU is rated at up to 50 TOPS and supports a wide range of AI models at the edge. These include:

- Vision transformers for advanced perception

- Compact LLMs for on-device language and intent understanding

- CNNs and related architectures for detection, classification, and tracking

This support enables in-vehicle and industrial experiences that combine voice, gesture, and environmental context locally, rather than relying solely on cloud-based inference.

AMD claims the P100 Series delivers up to 3x the AI TOPS of its Ryzen Embedded 8000 Series. This is significant for customers upgrading existing designs to achieve higher AI performance per watt and per board.
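The arithmetic behind these figures can be sketched in a few lines. The first function derives the baseline implied by an "up to 3x" claim; the second gives an upper bound on inference rate from a TOPS rating. The per-inference operation count and the utilization factor are hypothetical placeholders, not figures from AMD, and real throughput depends on memory bandwidth, precision, and the compiler.

```python
def implied_baseline_tops(new_tops: float, ratio: float) -> float:
    """Baseline rating implied when new_tops is `ratio` times the old part."""
    return new_tops / ratio


def max_inferences_per_second(tops: float, ops_per_inference: float,
                              utilization: float) -> float:
    """Upper bound on inferences/s for a given sustained utilization."""
    return tops * 1e12 * utilization / ops_per_inference


# 50 TOPS at "up to 3x" implies a prior-generation NPU of about 16.7 TOPS or more.
print(f"implied baseline: {implied_baseline_tops(50, 3):.1f} TOPS")

# Hypothetical model: 10 billion operations per inference, 30% utilization.
rate = max_inferences_per_second(50, ops_per_inference=10e9, utilization=0.3)
print(f"upper bound: {rate:.0f} inferences/s")
```

Because the claim is "up to" 3x, the implied baseline is a lower bound rather than an exact rating.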

I/O, Memory, and System Integration

For networking and connectivity, the P100 Series offers features suitable for connected edge systems:

- Up to two 10 GbE ports with TSN support for time-sensitive industrial and automotive networking

- Support for DDR5 (up to 5600 MT/s) and LPDDR5X (up to 8000 MT/s or 7500 MT/s with RAS features) with ECC

- USB4 (up to 2 ports on select SKUs), plus additional USB 3.x and USB 2.0 connectivity

These capabilities are meant to support multi-domain in-vehicle architectures, industrial controllers with high-speed deterministic Ethernet, and AI-enabled edge gateways.
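The quoted transfer rates translate into theoretical peak bandwidth once a bus width is chosen. The sketch below assumes a 128-bit memory interface, which the article does not state, so the resulting numbers are illustrative only.

```python
def peak_bandwidth_gbs(mt_per_s: int, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s times bytes per transfer.

    bus_width_bits defaults to 128, an assumption not given in the source.
    """
    return mt_per_s * bus_width_bits / 8 / 1000


for label, rate in [("DDR5-5600", 5600),
                    ("LPDDR5X-7500", 7500),
                    ("LPDDR5X-8000", 8000)]:
    print(f"{label}: {peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

Sustained bandwidth will be lower than these peaks, and the gap matters for NPU and GPU workloads that stream large tensors or frame buffers.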

Software Stack and Virtualization

Ryzen AI Embedded comes with a unified, open software stack covering CPU, GPU, and NPU:

- Optimized CPU libraries for Zen 5

- Open-standard GPU APIs for RDNA 3.5

- Native XDNA AI runtime accessible via Ryzen AI Software

The platform uses an open-source Xen-based virtualization framework to manage multiple OS domains on the same SoC. AMD points out typical configurations like:

- Yocto or Ubuntu Linux for HMI and application logic

- FreeRTOS for complex real-time control domains

- Android or Windows for broader application ecosystems

These domains operate concurrently in isolated partitions, using an ASIL-B-capable architecture. For automotive and industrial OEMs, this setup aims to simplify safety-critical integration, reduce non-recurring engineering costs, and shorten time to production.
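One way to reason about such a layout before touching any hypervisor configuration is to check that a planned core assignment is disjoint. The sketch below is a planning aid only: the domain names and the core split are hypothetical, and it does not use or represent Xen's own configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Domain:
    name: str         # hypothetical domain label
    guest_os: str     # one of the guest types named in the article
    cores: frozenset  # physical core indices reserved for this domain


def validate_plan(domains, total_cores):
    """Return a list of problems; an empty list means every domain has
    at least one core and no core is claimed twice."""
    problems = []
    owner = {}
    for domain in domains:
        if not domain.cores:
            problems.append(f"{domain.name}: no cores assigned")
        for core in sorted(domain.cores):
            if not 0 <= core < total_cores:
                problems.append(f"{domain.name}: core {core} out of range")
            elif core in owner:
                problems.append(
                    f"core {core} claimed by {owner[core]} and {domain.name}")
            else:
                owner[core] = domain.name
    return problems


# Hypothetical split for a 6-core part.
plan = [
    Domain("hmi", "Ubuntu Linux", frozenset({0, 1, 2})),
    Domain("control", "FreeRTOS", frozenset({3})),
    Domain("apps", "Android", frozenset({4, 5})),
]
print(validate_plan(plan, total_cores=6) or "plan is disjoint")
```

Dedicating cores to the real-time domain is a common way to limit interference from other guests, though the actual isolation guarantees depend on the hypervisor and platform configuration.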

Additional roadmap points include:

- Higher-core-count P100 SKUs with 8–12 cores, targeting industrial automation and more compute-dense edge platforms, are expected to start sampling in the first quarter of next year.

- X100 Series processors, featuring up to 16 CPU cores and greater AI performance for demanding physical AI and autonomous systems, are set to sample in the first half of next year.

Specifications

AMD Ryzen AI Embedded P100 Series (4-6 cores). P121I and P132I are industrial-temperature parts; P122a and P132a are automotive grade.

| Category | Specification | P121 | P132 | P121I | P132I | P122a | P132a |
|---|---|---|---|---|---|---|---|
| CPU | “Zen 5” CPU Cores | 4 | 6 | 4 | 6 | 4 | 6 |
| | Max Frequency | Up to 4.4 GHz | Up to 4.5 GHz | Up to 4.4 GHz | Up to 4.5 GHz | Up to 3.65 GHz | Up to 3.65 GHz |
| | L3 Shared Cache | 8 MB | 8 MB | 8 MB | 8 MB | 8 MB | 8 MB |
| GPU | Work Group Processors | 1 | 2 | 1 | 2 | 2 | 2 |
| | 4K120 / 8Kp120 Displays | 4 / 2 | 4 / 2 | 4 / 2 | 4 / 2 | 4 / 2 | 4 / 2 |
| | GPU Max Frequency | 2.7 GHz | 2.8 GHz | 2.7 GHz | 2.8 GHz | 2.0 GHz | 2.4 GHz |
| NPU | TOPS | 30 | 50 | 30 | 50 | 30 | 50 |
| I/O | 10GE Ports w/ TSN | 2 | 2 | 2 | 2 | 2 | 2 |
| | LPDDR5X (ECC) | 7500 MT/s | 8000 MT/s | 7500 MT/s | 8000 MT/s | 7500 MT/s w/ RAS | 7500 MT/s w/ RAS |
| Power & Thermal | Nominal TDP | 28 W | 28 W | 28 W | 28 W | 28 W | 45 W |
| | Nominal TDP Range | 15 – 54 W | 15 – 54 W | 15 – 54 W | 15 – 54 W | 15 – 30 W | 25 – 45 W |
| | Junction Temperature | 0 to 105°C | 0 to 105°C | -40 to 105°C | -40 to 105°C | -40 to 105°C | -40 to 105°C |

Specifications listed once for the series or for groups of SKUs:

| Category | Specification | Value |
|---|---|---|
| I/O | DDR5 (ECC) | 5600 MT/s |
| | USB 4.0 | 2x USB4 on select SKUs; N/A on others |
| | Other USB | 1x USB 3.2, 1x USB 3.1, 3x USB 2, 1x USB 2 (Secure BIOS) |
| Package & Reliability | Package | 25 mm x 40 mm |
| | Longevity / Grade | 2.5 Years (standard); Up to 10 Years (extended); AEC-Q100 (automotive grade) |
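The per-model figures lend themselves to a small lookup for shortlisting parts against design constraints. The sketch below encodes a subset of the table values (cores, NPU TOPS, lower junction-temperature limit, nominal TDP); the `Sku` type and `shortlist` helper are illustrative names, not part of any AMD tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    model: str
    cores: int
    npu_tops: int
    tj_min_c: int       # lower junction-temperature limit, in °C
    nominal_tdp_w: int


# Values copied from the specification table.
SKUS = [
    Sku("P121", 4, 30, 0, 28),
    Sku("P132", 6, 50, 0, 28),
    Sku("P121I", 4, 30, -40, 28),
    Sku("P132I", 6, 50, -40, 28),
    Sku("P122a", 4, 30, -40, 28),
    Sku("P132a", 6, 50, -40, 45),
]


def shortlist(min_tops: int, coldest_c: int, max_nominal_tdp_w: int):
    """Models meeting an NPU floor, a cold-start limit, and a TDP ceiling."""
    return [s.model for s in SKUS
            if s.npu_tops >= min_tops
            and s.tj_min_c <= coldest_c
            and s.nominal_tdp_w <= max_nominal_tdp_w]


print(shortlist(min_tops=50, coldest_c=-40, max_nominal_tdp_w=30))
```

Raising the TDP ceiling to 45 W in the same query would also admit the P132a, which is the only listed part with a 45 W nominal TDP.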

Showcasing Next-Gen Automotive Compute and ADAS Platforms

AMD is also showcasing a wide range of automotive technologies that integrate driver-assistance systems, digital cockpit features, and cloud-based development for software-defined vehicles. The demonstrations, held in the Las Vegas Convention Center West Hall, illustrate how Versal AI Edge, Ryzen Embedded, EPYC Embedded, and Radeon Pro GPUs can work together in scalable automotive architectures.

The showcase is organized around three main themes: ADAS and perception, in-vehicle infotainment and digital cockpit, and cloud-native automotive development. Several demos highlight the AMD Versal AI Edge Series, including the second generation, as the key compute platform for multi-sensor perception and ADAS.

MultiVision Camera Perception With StradVision

In partnership with StradVision, AMD is demonstrating a multi-camera perception system based on the Versal AI Edge Series Gen 2. StradVision’s AI perception software runs on the Versal AI Edge architecture, combining scalar processing, adaptive logic, and AI engines. The demo focuses on multi-camera fusion, object detection, and tracking for higher-level ADAS and automated driving functions, with an emphasis on real-time performance.

Vision-Based Highway Driver Assistance

Another demo uses the first-generation Versal AI Edge to perform a vision-focused driver-assistance task. This system performs closed-loop vehicle control in a virtual driving environment, detecting lanes and objects in real time. It serves as a model for camera-first ADAS systems that aim to cut reliance on expensive sensor packages while maintaining strong perception.

360-Degree Surround View Visualization

A surround-view solution on Versal AI Edge Gen 2 demonstrates low-latency stitching of multiple camera feeds into a clear 360-degree view. By integrating image processing and rendering on a single device, the platform targets applications such as automated parking, low-speed maneuvering assistance, and improved situational awareness. The focus is on low latency and high image quality, suitable for production parking and surround-view ECUs.

Autoware Front Camera Perception

AMD is also presenting an Autoware 2.0-based front-camera perception pipeline on the Versal AI Edge Gen 2. This open-source stack demonstrates object detection under challenging weather conditions and serves as a scalable reference for ADAS developers and system integrators. The combination of Versal AI Edge and Autoware aims to speed up evaluation and prototyping of critical safety cases.

Heterogeneous VirtIO for Automotive Compute

To meet the needs of new zonal and centralized compute structures, AMD is showcasing a heterogeneous VirtIO setup in which a Ryzen Embedded processor pairs with a first-generation Versal AI Edge device. The demo uses standard VirtIO to virtualize and manage workloads across CPU and accelerator domains. This approach targets future E/E architectures, allowing different SoCs to share virtualized I/O and services while handling mixed-criticality tasks.

Beyond LiDAR With Zynq UltraScale+ MPSoC

For LiDAR-focused ADAS systems, AMD is introducing Beyond’s long-range forward ADAS LiDAR sensor, designed around the AMD Zynq UltraScale+ MPSoC. In this setup, the Zynq UltraScale+ manages point-cloud processing, calibration, and RX/TX control. The aim is to integrate signal processing, real-time control, and higher-level perception on a single programmable SoC, reducing latency and BOM complexity in LiDAR-based ADAS modules.

IVI and Digital Cockpit: Ryzen Embedded and Versal in a Unified Stack

For in-vehicle infotainment, AMD is presenting a unified platform for digital cockpit, ADAS visualization, and virtualized software domains.

Vehicle Experience Platform

The Vehicle Experience Platform demonstration combines next-generation Ryzen Embedded processors with first-generation Versal AI Edge devices. Ryzen Embedded is the primary host for digital cockpit tasks, while Versal AI Edge enhances perception, sensor fusion, or other AI functions. The platform serves as a single-stack reference that supports seamless multi-display cockpit graphics, smart workload distribution between CPU and accelerator, and flexible customization for brand-specific experiences.

Digital Cockpit With Virtualized Workloads

Another demo shows a virtualized digital cockpit running on next-generation Ryzen Embedded CPUs with a leading automotive OS and hypervisor stack. The system consolidates cluster, infotainment, and possibly ADAS visualization on a single chip while keeping the domains isolated from one another. For OEMs and Tier 1s, this architecture aims to reduce the number of hardware components and simplify software management across different vehicle trims.

Virtualized Android Automotive on Xen

AMD is also presenting a virtualized Android Automotive setup running on next-generation Ryzen Embedded processors with a first-generation Versal AI Edge. This platform uses the Xen hypervisor to separate infotainment, cluster, and connected services into distinct virtual machines. This arrangement shows how Android Automotive can operate alongside other real-time or safety-critical domains on the same hardware, leveraging Versal for demanding compute or AI-driven features.

Cloud-Native Automotive Development: EPYC Embedded and Radeon Pro

Looking beyond in-vehicle systems, AMD is addressing the trend of cloud-native automotive software development with a virtual platform demo.

A cloud-native workflow demonstrates how AMD EPYC Embedded CPUs and Radeon Pro GPUs support virtual platform setup, validation, and extensive software testing. Developers can execute complete software stacks, including OS, middleware, and applications, in virtualized environments well before physical ECUs are available. The combination of EPYC Embedded and Radeon Pro seeks to accelerate CI/CD pipelines, regression testing, and hardware-in-the-loop validation as they move to the cloud.

Availability

Ryzen AI Embedded P100 processors with 4-6 cores are now available to early-access customers. Tools and documentation are ready, and production shipments are anticipated in the second quarter. P100 Series processors with 8–12 cores, which target industrial automation applications, are expected to begin sampling in the first quarter. Sampling of the X100 Series with up to 16 cores is expected to start in the first half.
