At AWS re:Invent, NVIDIA and Amazon Web Services announced an enhanced partnership focused on interconnect technology, cloud infrastructure, open models, and physical AI. This collaboration deepens NVIDIA’s integration with the AWS stack, covering custom silicon, networking, AI software, and robotics simulation. The focus is on production-scale AI and requirements for sovereign cloud.

NVLink Fusion for AWS Custom Silicon and Next-Generation AI Infrastructure

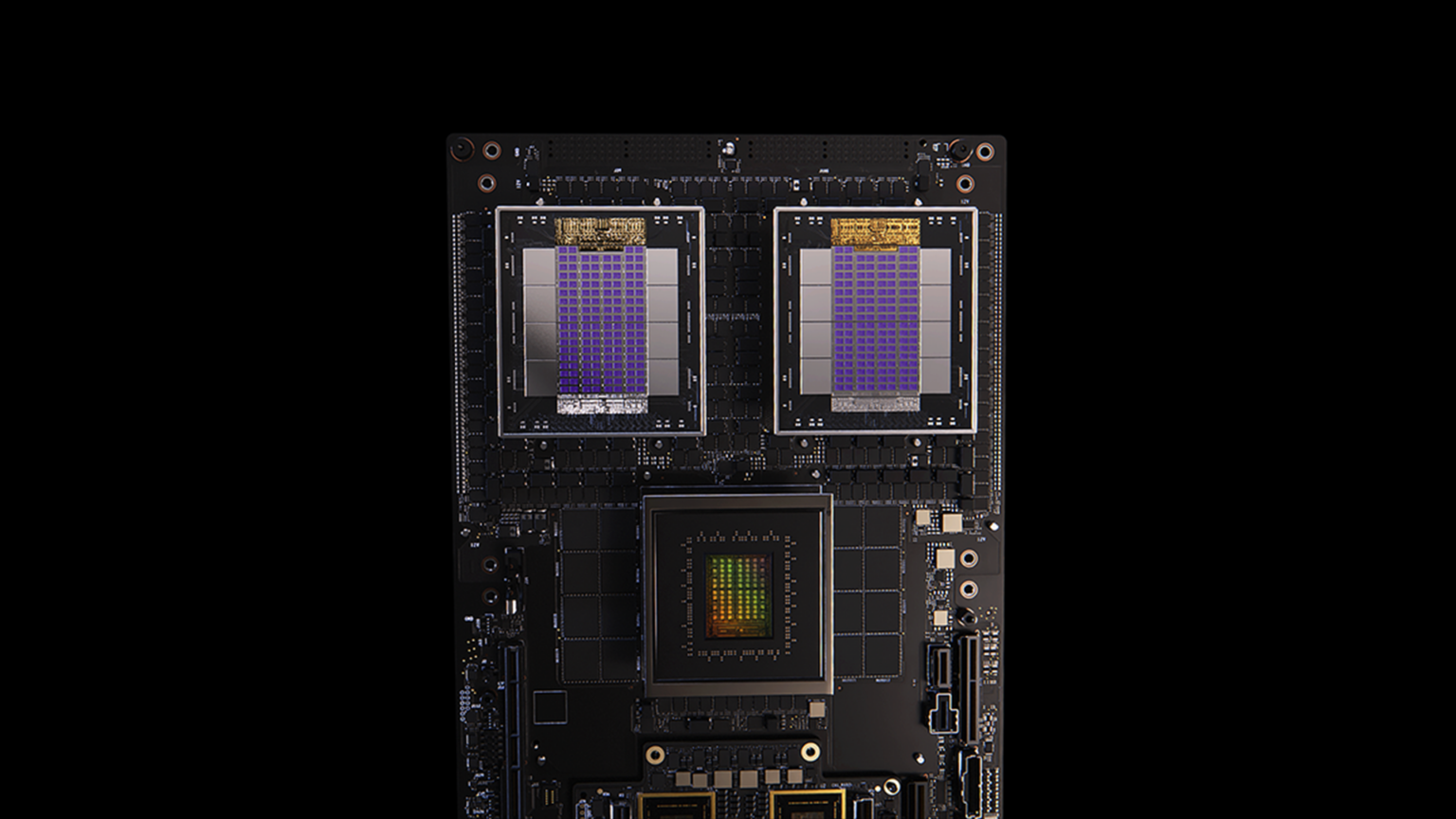

A key point of the announcement is AWS’s support for NVIDIA NVLink Fusion. AWS will license NVLink Fusion to build custom AI infrastructure and plans to add the technology to its range of custom-designed silicon, including next-generation Trainium4 accelerators aimed at inference and agentic AI model training. With NVLink Fusion, AWS will combine the NVIDIA NVLink scale-up interconnect and the NVIDIA MGX rack architecture with its own custom silicon. The aim is to boost performance, simplify system design, and shorten time to market for next-generation, cloud-scale AI platforms.

AWS also gains from the NVLink Fusion supplier ecosystem, which provides a complete rack-scale solution, including rack, chassis, power delivery, and cooling subsystems. This ecosystem makes procurement and integration easier for AWS as it builds high-density, AI-optimized infrastructure. Trainium4 is being designed to integrate with NVLink and NVIDIA MGX, which marks the first phase of a multigenerational NVLink Fusion collaboration between NVIDIA and AWS. AWS has already deployed NVIDIA MGX racks at scale with NVIDIA GPUs; integrating NVLink Fusion aims to standardize and streamline deployment and systems management across platforms that mix AWS silicon and NVIDIA hardware.

Additionally, AWS uses various Graviton CPUs that handle a range of general-purpose and cloud-native workloads. The AWS Nitro System plays an essential role by supporting virtualization, security, and data-plane offload, thereby improving overall performance and protection for AWS services.

NVIDIA founder and CEO Jensen Huang framed the collaboration as a response to the growing demand for GPU computing driven by more capable AI models and greater enterprise adoption. He described the NVLink Fusion integration with AWS Trainium4 as a way to merge NVIDIA’s scale-up architecture with AWS’s custom silicon to create next-generation accelerated platforms that fuel what he called an AI industrial revolution. Huang stated the goal is to make advanced AI more accessible and move global infrastructure toward widespread intelligence.

AWS CEO Matt Garman highlighted the depth of the 15-year relationship between the two companies, noting that the collaboration advances large-scale AI infrastructure. He mentioned customer outcomes, including higher performance, better efficiency, and increased scalability. Garman emphasized support for NVIDIA NVLink Fusion across Trainium, Graviton, and Nitro to unlock new technical capabilities and encourage faster innovation for AWS customers.

NVIDIA Vera Rubin Architecture on AWS

In networking, the NVIDIA Vera Rubin architecture on AWS will support AWS Elastic Fabric Adapter and the Nitro System. This design offers customers flexible, robust networking options while ensuring full compatibility with AWS’s existing cloud infrastructure and accelerating the delivery of new AI services.

Convergence of Scale and Sovereignty With Blackwell and AWS AI Factories

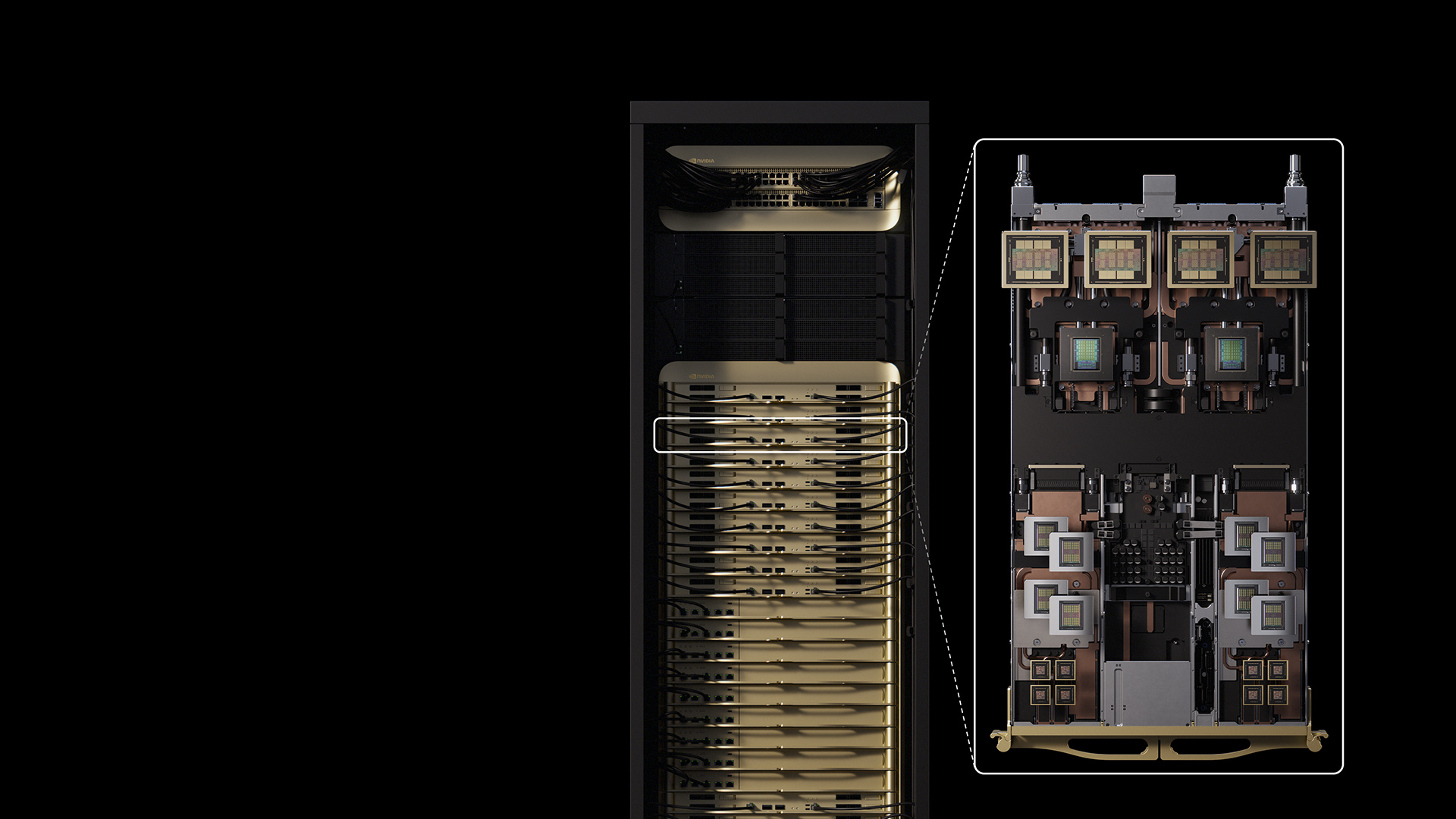

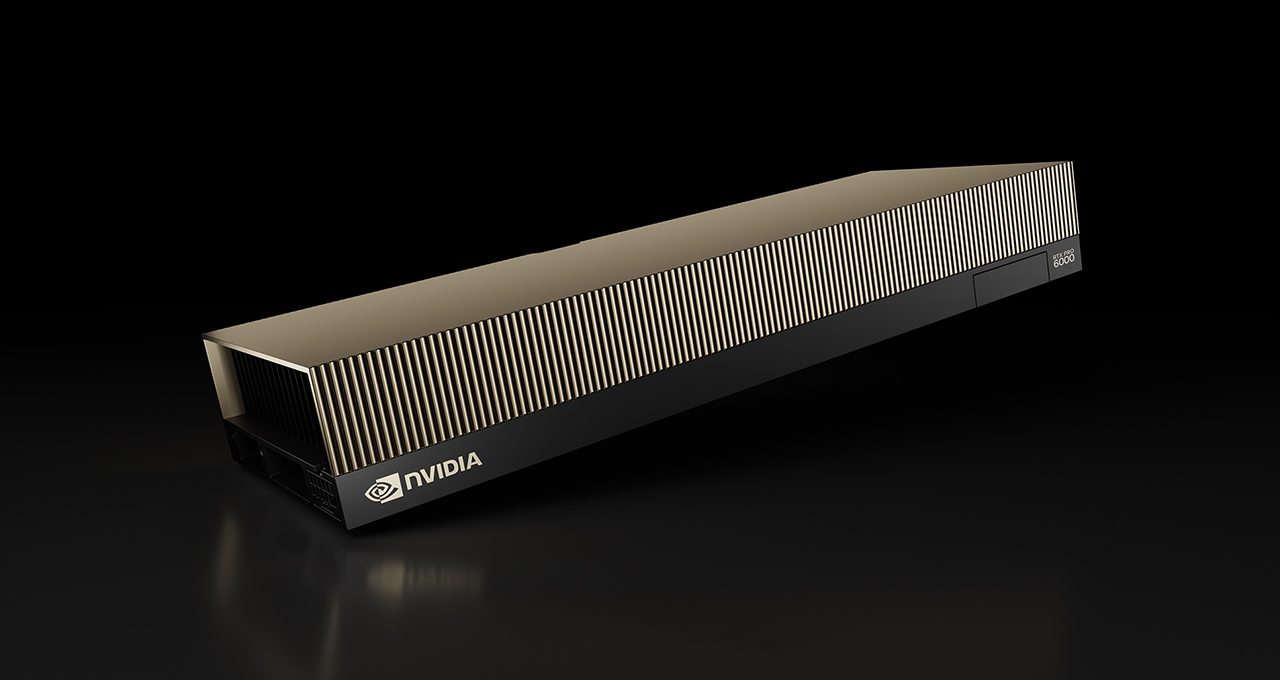

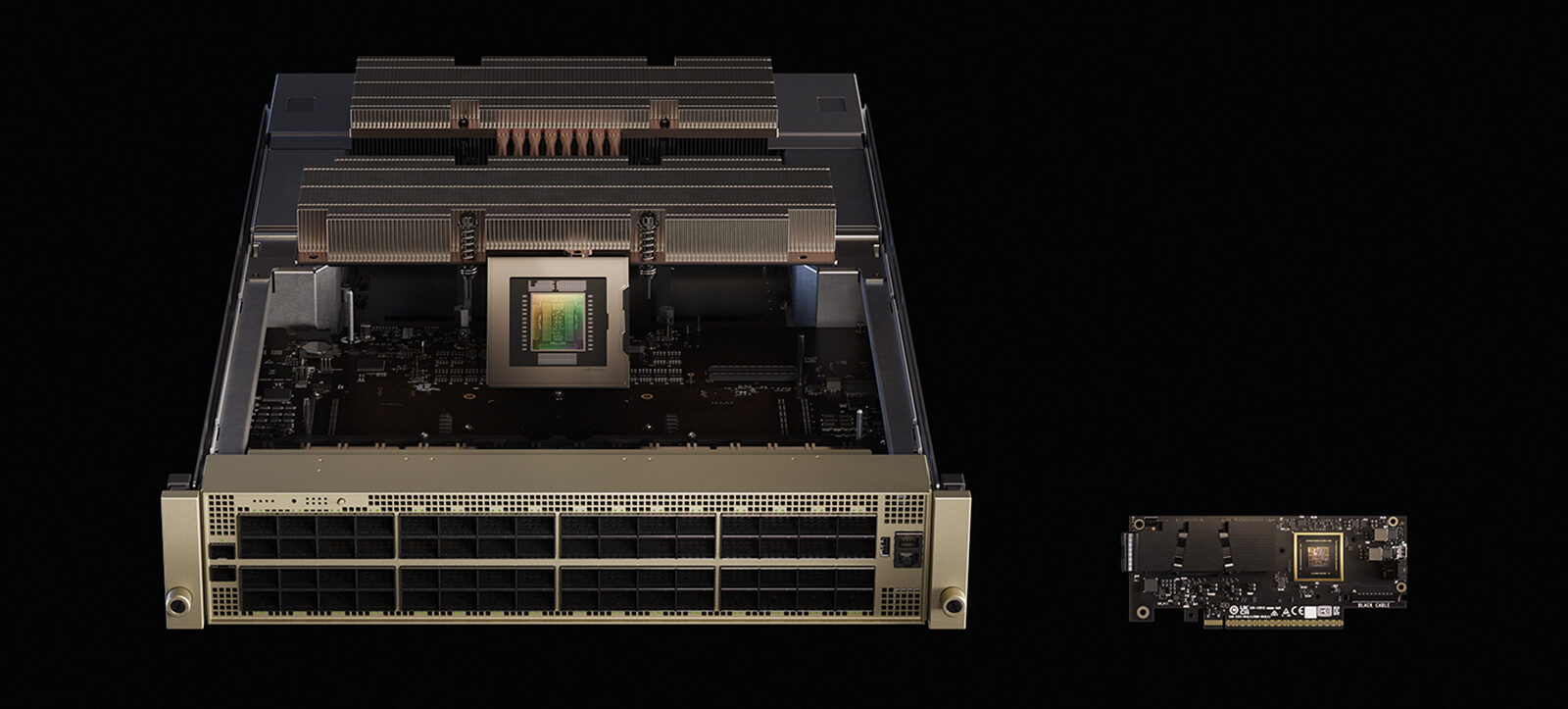

AWS is expanding its accelerated computing portfolio by adding the NVIDIA Blackwell architecture, including NVIDIA HGX B300 and NVIDIA GB300 NVL72 systems for large-scale training and inference clusters. In addition, NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, designed for visual and graphics-intensive applications, are expected to be available on AWS soon.

These GPUs are part of the infrastructure backbone for AWS AI Factories. This new AI cloud offering is for customers who need dedicated AI infrastructure in their own data centers, managed by AWS. The model delivers cloud-grade AI infrastructure within customer-controlled facilities. This ensures organizations maintain oversight and security over their AI resources within their own environments.

It also focuses on data control by meeting local data residency requirements. This way, sensitive information stays within specified geographic limits, fulfilling regulatory standards while allowing smooth operations. The model offers access to advanced training and inference platforms at scale, enabling users to efficiently develop, deploy, and manage complex AI solutions across large datasets.

Global Commitment

NVIDIA and AWS commit to deploying sovereign AI clouds worldwide and to bringing advanced AI capabilities to regions needing to comply with strict sovereign AI policies. With AWS AI Factories, the two companies plan to provide secure, sovereign AI infrastructure that delivers unmatched compute capacity while meeting rising regulatory and data-sovereignty requirements.

For public sector organizations, AWS views AI Factories as a significant shift in federal supercomputing and AI strategy. Customers can combine AWS’s reliable, secure, and scalable cloud infrastructure with NVIDIA Blackwell GPUs and the complete NVIDIA accelerated computing stack, including NVIDIA Spectrum-X Ethernet switches.

The goal is to create a unified system that gives customers access to advanced AI services and lets them train and deploy very large models at scale, while they keep strict control over proprietary data and comply with local regulations.

NVIDIA Software on AWS: Simplifying the Developer and Data Experience

NVIDIA and AWS are also working together on software and data layers to accelerate processing of unstructured data and manage agent lifecycles.

Amazon OpenSearch Service now features serverless GPU acceleration for vector index building, powered by NVIDIA cuVS, an open-source library for GPU-accelerated vector search and clustering. The integration signals a shift toward GPUs as the default engine for vector-heavy, unstructured data workloads.

Early adopters report that vector indexing is up to ten times faster and costs about a quarter as much as traditional methods.
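As a back-of-the-envelope illustration of those figures, the sketch below applies the reported best-case numbers (up to 10x faster, about a quarter of the cost) to a baseline indexing job. The baseline of 20 hours and 400 dollars is invented for illustration, and because the speedup is an "up to" figure, the result is a best case rather than a forecast.

```python
def projected_indexing(baseline_hours, baseline_cost, speedup=10.0, cost_ratio=0.25):
    """Best-case projection from the reported 'up to' figures.

    speedup and cost_ratio default to the numbers quoted by early adopters;
    real workloads may see smaller gains.
    """
    if speedup <= 0 or cost_ratio < 0:
        raise ValueError("speedup must be positive and cost_ratio non-negative")
    return baseline_hours / speedup, baseline_cost * cost_ratio


# Hypothetical baseline: a 20-hour index build that costs 400 dollars.
hours, cost = projected_indexing(20.0, 400.0)
print(f"best case: {hours:.1f} h, ${cost:.2f}")
```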

These performance and cost enhancements help reduce search latency, accelerate write paths, and improve throughput for dynamic AI patterns such as retrieval-augmented generation, where quick vector indexing is crucial. AWS is the first major cloud provider to offer serverless vector indexing backed by NVIDIA GPUs.
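To make the role of vector indexing in retrieval-augmented generation concrete, here is a minimal sketch of the two steps involved: building an index from document embeddings, then finding the nearest documents to a query. It is a pure-Python, exact (brute-force) cosine-similarity search written for illustration; it is not the cuVS or OpenSearch API, and the names `build_index` and `search` are hypothetical. GPU-accelerated libraries speed up the same two steps, typically with approximate indexes.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def build_index(docs):
    """Index step: docs maps a document id to its embedding."""
    return [(doc_id, normalize(vec)) for doc_id, vec in docs.items()]


def search(index, query, k=2):
    """Query step: return the ids of the k most similar documents."""
    q = normalize(query)
    scored = [(sum(a * b for a, b in zip(q, vec)), doc_id) for doc_id, vec in index]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy 2-dimensional embeddings; real embeddings have hundreds of dimensions.
index = build_index({"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]})
top = search(index, [1.0, 0.1], k=2)
```

In a RAG application the returned ids would select the passages passed to the model as context. The brute-force scan here costs time proportional to the number of documents per query, which is the cost that dedicated vector indexes are built to avoid.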

To move AI agents from proof of concept to production, customers need performance visibility, optimization tools, and scalable management.

NVIDIA and AWS are teaming up to create an end-to-end solution by integrating several key components. The effort aims to simplify agent development, management, and optimization across their platforms.

At the heart of this initiative is Strands Agents, which handles agent creation and management. Complementing it is the NVIDIA NeMo Agent Toolkit, which provides tools for deep profiling, performance tuning, and optimization. Amazon Bedrock AgentCore, in turn, provides secure, scalable infrastructure for running agents within the AWS environment. Together, these elements are intended to give customers a smooth progression from prototype builds to production-ready, observable, and scalable AI agents.

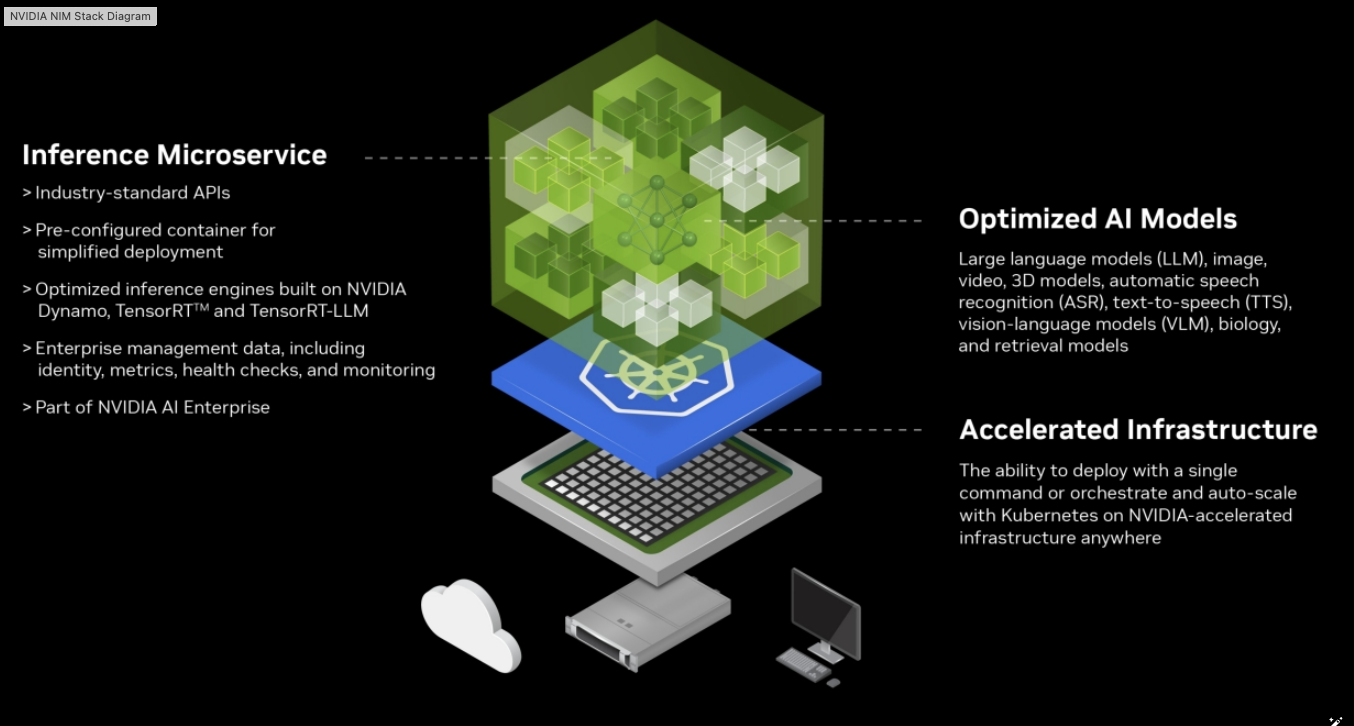

This expanded software partnership builds on existing NVIDIA integrations on AWS, including NVIDIA NIM microservices and frameworks such as NVIDIA Riva for speech and NVIDIA BioNeMo for scientific workloads. These capabilities, combined with model development and deployment on Amazon SageMaker and Amazon Bedrock, are designed to accelerate the deployment of agentic AI, speech AI, and scientific applications while using familiar AWS services.

Accelerating Physical AI and Robotics on AWS

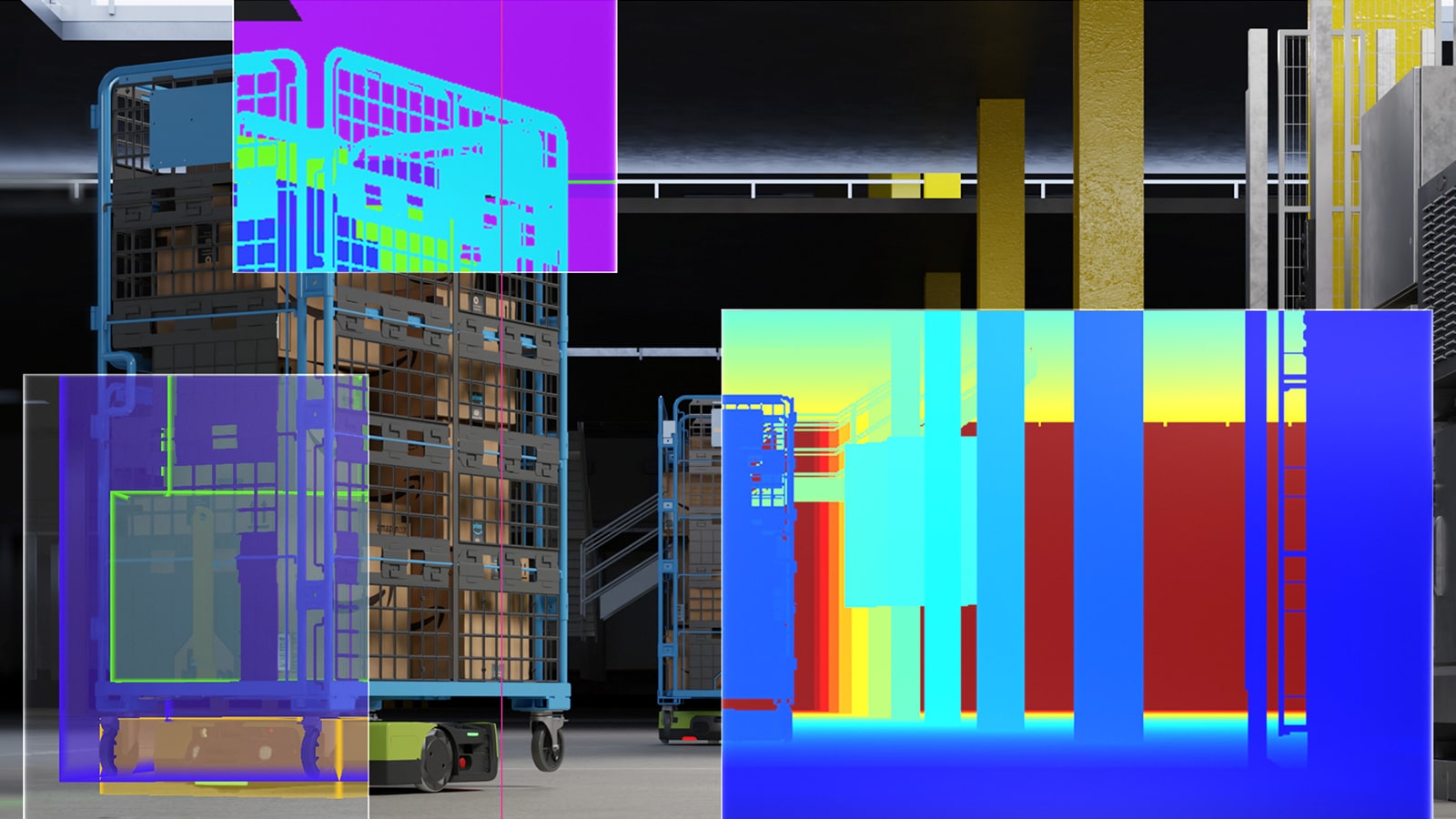

The collaboration also extends into physical AI and robotics, where training and deploying robot models depend on high-quality datasets and strong simulation frameworks.

NVIDIA Cosmos world foundation models (WFMs) are now available as NVIDIA NIM microservices on Amazon EKS. This gives customers a way to run real-time robotics control and simulation workloads in a cloud-native, Kubernetes-based environment that ensures reliability and flexibility.

For batch-oriented and offline tasks, such as large-scale synthetic data generation, Cosmos WFMs are also offered as containers on AWS Batch. This supports high-throughput workflows for generating diverse world states and scenarios at scale.
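As a rough sketch of how such a batch workload might be expressed, the snippet below builds the request for an AWS Batch array job, where each child job generates one shard of synthetic scenes. The parameter keys (`jobName`, `jobQueue`, `jobDefinition`, `arrayProperties`, `containerOverrides`) follow the boto3 `submit_job` call, but everything else is an assumption for illustration: the queue name, job definition name, and environment variables are hypothetical, and the article does not describe how the Cosmos containers are actually configured.

```python
def build_sdg_array_job(run_name, num_shards, scenes_per_shard,
                        job_queue="cosmos-sdg-queue",       # hypothetical queue name
                        job_definition="cosmos-wfm-sdg"):   # hypothetical job definition
    """Build keyword arguments for boto3's batch.submit_job (no AWS call is made)."""
    # AWS Batch array jobs accept between 2 and 10,000 child jobs.
    if not 2 <= num_shards <= 10_000:
        raise ValueError("num_shards must be between 2 and 10,000")
    return {
        "jobName": f"{run_name}-sdg",
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "arrayProperties": {"size": num_shards},
        "containerOverrides": {
            # Hypothetical variables the container would read; each child can
            # also read its shard number from AWS_BATCH_JOB_ARRAY_INDEX.
            "environment": [
                {"name": "RUN_NAME", "value": run_name},
                {"name": "SCENES_PER_SHARD", "value": str(scenes_per_shard)},
            ]
        },
    }


request = build_sdg_array_job("warehouse-v1", num_shards=100, scenes_per_shard=50)
# With credentials and real resources in place, this would be submitted as:
#   boto3.client("batch").submit_job(**request)
```

Keeping the request construction separate from the submission call makes the job shape easy to review and test without touching an AWS account.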

Cosmos-generated world states can be used to train and validate robot behaviors in open-source simulation and learning frameworks like NVIDIA Isaac Sim and Isaac Lab. This process creates a complete pipeline that aids various development and testing stages. Initially, Cosmos WFMs generate structured, diverse environments, providing a wide range of evaluation scenarios.

Next, Isaac Sim and Isaac Lab simulate robotic systems and policies within these environments. This simulation phase enables thorough testing and refinement of robotic behaviors in a controlled setting. Finally, models are validated through simulation before deployment on real robots. This approach minimizes risk and reduces costs related to iterative testing, ensuring a more reliable and efficient development cycle.
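The generate, simulate, validate flow described above can be sketched as a small gating loop. This is a toy stand-in under stated assumptions: it calls no Cosmos, Isaac Sim, or Isaac Lab API, the `Scenario` fields and the 90 percent success threshold are invented for illustration, and the "simulation" is simply a function call on the policy.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Hypothetical world state; a real one would come from a world model."""
    scenario_id: int
    friction: float
    obstacle_count: int


def generate_scenarios(n, seed=0):
    """Stage 1: produce diverse scenarios (stand-in for world-state generation)."""
    rng = random.Random(seed)
    return [Scenario(i, rng.uniform(0.2, 1.0), rng.randint(0, 10)) for i in range(n)]


def simulate(policy, scenario):
    """Stage 2: run the policy in one scenario; returns True on success."""
    return bool(policy(scenario))


def validate(policy, scenarios, required_success_rate=0.9):
    """Stage 3: gate deployment on the success rate across all scenarios."""
    if not scenarios:
        raise ValueError("need at least one scenario")
    successes = sum(simulate(policy, s) for s in scenarios)
    rate = successes / len(scenarios)
    return rate, rate >= required_success_rate


def cautious_policy(scenario):
    """Toy policy that fails only on slippery, cluttered scenes."""
    return scenario.friction >= 0.3 or scenario.obstacle_count <= 5


scenarios = generate_scenarios(200, seed=42)
rate, ready = validate(cautious_policy, scenarios)
print(f"success rate {rate:.2%}, ready for hardware trials: {ready}")
```

The point of the gate is that a policy only reaches a physical robot after it clears the threshold in simulation, which is where the risk and cost savings described above come from.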

Various robotics companies are already using the NVIDIA Isaac platform on AWS throughout the robotics development lifecycle. This group includes Agility Robotics, Agile Robots, ANYbotics, Diligent Robotics, Dyna Robotics, Field AI, Haply Robotics, Lightwheel, RIVR, and Skild AI. Their use cases range from collecting, storing, and processing robot-generated data to training and simulation tasks necessary for scaling real-world applications.

Long-Term Collaboration and Industry Positioning

These expanded announcements build on AWS and NVIDIA’s long-standing relationship. Recently, NVIDIA received the AWS Global GenAI Infrastructure and Data Partner of the Year award. This award recognizes technology partners with the AWS Generative AI Competency, highlighting those that support vector embeddings, data storage and management, and synthetic data generation across multiple data forms and formats.

This expanded collaboration positions AWS and NVIDIA to deliver tightly integrated, cloud-scale AI platforms. These platforms aim to address both performance and sovereignty needs while offering enterprises a more straightforward path from experimental AI projects to large-scale production deployments.
