During the AWS re:Invent keynote, AWS and NVIDIA announced an expansion of their strategic collaboration on artificial intelligence (AI). AWS CEO Adam Selipsky invited NVIDIA CEO Jensen Huang on stage to describe the two companies’ plans. The partnership is set to deliver new infrastructure, software, and services for generative AI, combining NVIDIA’s latest hardware and software with AWS’s cloud infrastructure.

Revolutionizing Cloud AI with NVIDIA GH200 Grace Hopper Superchips

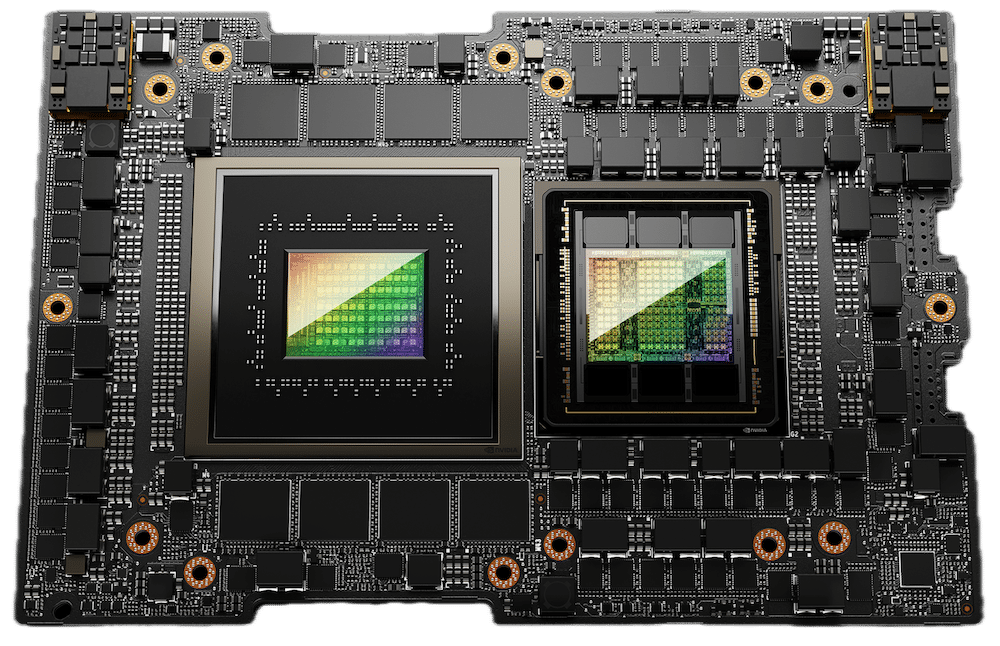

A cornerstone of this collaboration is AWS’s introduction of NVIDIA’s GH200 Grace Hopper Superchips into the cloud, a first among cloud providers. These superchips represent a significant leap in cloud-based AI computing. The GH200 NVL32 multi-node platform, which connects 32 Grace Hopper Superchips using NVIDIA NVLink and NVSwitch technologies, will be integrated into Amazon EC2 instances. These new Amazon EC2 instances cater to various AI, HPC, and graphics workloads.

Each GH200 Superchip combines an Arm-based Grace CPU with an NVIDIA Hopper architecture GPU on the same module, enabling a single EC2 instance to provide up to 20TB of shared memory to power terabyte-scale workloads. This setup allows joint customers to scale to thousands of GH200 Superchips, offering unprecedented computational power for AI research and applications.

Supercharging Generative AI, HPC, Design, and Simulation

AWS is introducing three new EC2 instance types. The P5e instances, powered by NVIDIA H200 Tensor Core GPUs, are designed for large-scale and cutting-edge generative AI and HPC workloads. The G6 and G6e instances, powered by NVIDIA L4 and L40S GPUs, respectively, are suited for AI fine-tuning, inference, graphics, and video workloads. The G6e instances are particularly suitable for developing 3D workflows, digital twins, and applications using NVIDIA Omniverse, a platform for building generative AI-enabled 3D applications.

NVIDIA GH200-powered EC2 instances will feature 4.5TB of HBM3e memory, a 7.2x increase compared to current H100-powered EC2 P5d instances. The CPU-to-GPU memory interconnect will provide up to 7x higher bandwidth than PCIe, enabling chip-to-chip communications that extend the total memory available for applications.
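The memory figures above can be sanity-checked with a little arithmetic. The sketch below is only a back-of-the-envelope check, not an official specification: it assumes binary units (1 TB = 1024 GB) and assumes that the 4.5TB is spread evenly across the 32 superchips of a GH200 NVL32 instance. The function names are hypothetical and chosen for illustration.

```python
# Figures quoted in the article.
GH200_INSTANCE_HBM_TB = 4.5   # HBM3e per GH200-powered instance
CLAIMED_INCREASE = 7.2        # stated increase over the H100-powered instance
SUPERCHIPS_PER_INSTANCE = 32  # GH200 NVL32 connects 32 superchips

# Assumption (not stated in the article): binary units, 1 TB = 1024 GB.
GB_PER_TB = 1024


def implied_baseline_gb(total_tb, ratio, gb_per_tb=GB_PER_TB):
    """GPU memory the comparison instance must have for the ratio to hold."""
    return total_tb * gb_per_tb / ratio


def hbm_per_superchip_gb(total_tb, superchips, gb_per_tb=GB_PER_TB):
    """HBM3e per superchip, assuming an even split across the instance."""
    return total_tb * gb_per_tb / superchips


if __name__ == "__main__":
    baseline = implied_baseline_gb(GH200_INSTANCE_HBM_TB, CLAIMED_INCREASE)
    per_chip = hbm_per_superchip_gb(GH200_INSTANCE_HBM_TB, SUPERCHIPS_PER_INSTANCE)
    print(f"Implied H100 instance GPU memory: {baseline:.0f} GB")
    print(f"Implied HBM3e per GH200 Superchip: {per_chip:.0f} GB")
```

Under these assumptions the implied baseline is about 640 GB, which would match an instance with eight 80 GB H100 GPUs. That configuration is an inference from the arithmetic, not something the announcement spells out.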

AWS instances with GH200 NVL32 will be the first AI infrastructure on AWS to feature liquid cooling, which will help densely packed server racks operate efficiently at maximum performance. EC2 instances with GH200 NVL32 will also benefit from the AWS Nitro System, the underlying platform for next-generation EC2 instances. Nitro offloads I/O functions from the host CPU/GPU to specialized hardware, delivering more consistent performance with enhanced security to protect customer code and data during processing.

NVIDIA Software on AWS Boosts Generative AI Development

NVIDIA also announced software on AWS aimed at speeding up generative AI development. The NVIDIA NeMo Retriever microservice will offer tools for building accurate chatbots and summarization tools using accelerated semantic retrieval.

Pharmaceutical companies can speed up drug discovery with NVIDIA BioNeMo, which is available on Amazon SageMaker and coming to DGX Cloud.

Leveraging the NVIDIA NeMo framework, AWS will train select next-generation Amazon Titan LLMs. Amazon Robotics is using NVIDIA Omniverse Isaac to build digital twins for automating, optimizing, and planning autonomous warehouses in virtual environments prior to deployment in the real world.

NVIDIA DGX Cloud Hosted on AWS: Democratizing AI Training

Another pivotal aspect of this partnership is hosting NVIDIA DGX Cloud on AWS. This AI-training-as-a-service offering will be the first to feature the GH200 NVL32, providing developers with the largest shared memory in a single instance. DGX Cloud on AWS will significantly accelerate the training of cutting-edge generative AI and large language models (LLMs), including models that reach beyond 1 trillion parameters. The service broadens access to high-end AI training resources that were previously available only to those with significant computational infrastructure.

Project Ceiba: Building the World’s Fastest GPU-Powered AI Supercomputer

In an ambitious move, AWS and NVIDIA are collaborating on Project Ceiba to build the world’s fastest GPU-powered AI supercomputer. This system, featuring 16,384 NVIDIA GH200 Superchips and capable of 65 exaflops of AI processing, will be hosted by AWS for NVIDIA’s research and development team. The supercomputer will propel NVIDIA’s generative AI innovation, impacting areas like digital biology, robotics, autonomous vehicles, and climate prediction.
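To put the Project Ceiba numbers in perspective, the headline figures can be broken down per superchip. This is a rough sketch under stated assumptions: the article does not say which numeric precision the 65 exaflops refers to, so the per-chip result is only an average implied by the two quoted totals. The grouping into 32-superchip units assumes the system would be composed of NVL32-sized groups, which the article does not confirm. Function names are hypothetical.

```python
# Figures quoted in the article.
TOTAL_SUPERCHIPS = 16_384
TOTAL_AI_EXAFLOPS = 65

# Assumption: groups of 32 superchips, mirroring the GH200 NVL32 platform.
SUPERCHIPS_PER_GROUP = 32

PETAFLOPS_PER_EXAFLOP = 1000


def per_superchip_petaflops(total_exaflops, superchips):
    """Average AI throughput per superchip implied by the totals."""
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / superchips


def group_count(superchips, per_group=SUPERCHIPS_PER_GROUP):
    """Number of full 32-superchip groups in the system."""
    return superchips // per_group


if __name__ == "__main__":
    avg = per_superchip_petaflops(TOTAL_AI_EXAFLOPS, TOTAL_SUPERCHIPS)
    print(f"Implied average per superchip: {avg:.2f} petaflops")
    print(f"32-superchip groups: {group_count(TOTAL_SUPERCHIPS)}")
```

The totals work out to roughly 4 petaflops of AI processing per superchip, spread over 512 groups of 32 if that grouping holds.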

A New Era of AI Development on AWS

This expanded collaboration between AWS and NVIDIA is more than just a technological advancement; it’s a paradigm shift in cloud-based AI computing. By combining NVIDIA’s advanced AI infrastructure and AWS’s cloud capabilities, this partnership is set to revolutionize how generative AI is developed and deployed across various industries. From pharmaceuticals to autonomous vehicles, the implications of this collaboration are vast and far-reaching, promising to unlock new possibilities in AI and beyond.
