NVIDIA GTC 2023 kicked off by introducing new products, partners, innovations, and software. To give you an idea of the breadth of announcements, the keynote lasted 78 minutes. Four new platforms were announced, each optimized for a specific generative AI inference workload and specialized software.

The platforms combine NVIDIA’s full stack of inference software with the latest NVIDIA Ada, Hopper, and Grace Hopper processors. Two new GPUs, the NVIDIA L4 Tensor Core GPU and H100 NVL GPU, were launched today.

NVIDIA L4 for AI Video delivers 120x more AI-powered video performance than CPUs, combined with 99 percent better energy efficiency. The L4 serves as a universal GPU for virtually any workload, offering enhanced video decoding and transcoding capabilities, video streaming, augmented reality, generative AI video, and more.

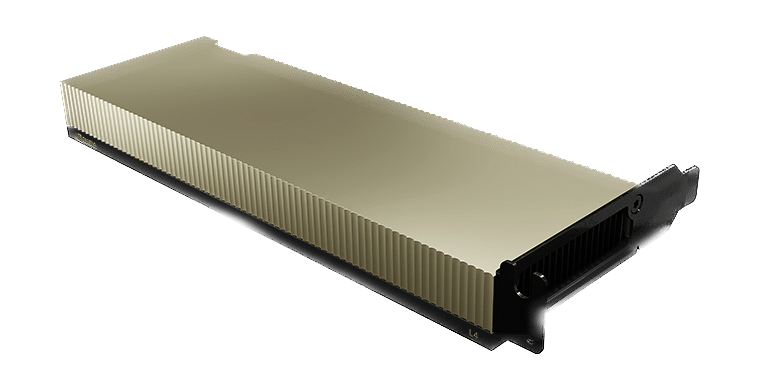

The NVIDIA Ada Lovelace L4 Tensor Core GPU delivers universal acceleration and energy efficiency for video, AI, virtualized desktop, and graphics applications in the enterprise, in the cloud, and at the edge. With NVIDIA’s AI platform and full-stack

approach, L4 is optimized for inference at scale for a broad range of AI applications, including recommendations, voice-based AI avatar assistants, generative AI, visual search, and contact center automation.

The L4 is the most efficient NVIDIA accelerator for mainstream use, and servers equipped with L4 power up to 120x higher AI video performance and 2.7x more generative AI performance over CPU solutions, as well as over 4x more graphics performance than the previous GPU generation. NVIDIA L4 is a versatile, energy-efficient, single-slot, low-profile form factor making it ideal for large deployments and edge locations.

The NVIDIA L40 for Image Generation is optimized for graphics and AI-enabled 2D, video, and 3D image generation. The L40 platform serves as the engine of NVIDIA Omniverse, a platform for building and operating metaverse applications in the data center, delivering 7x the inference performance for Stable Diffusion and 12x Omniverse performance over the previous generation.

The NVIDIA L40 GPU delivers high-performance visual computing for the data center, with next-generation graphics, compute, and AI capabilities. Built on the NVIDIA Ada Lovelace architecture, the L40 harnesses the power of the latest generation RT, Tensor, and CUDA cores to deliver visualization and compute performance for demanding data center workloads.

The L40 offers enhanced throughput and concurrent ray-tracing and shading capabilities that improve ray-tracing performance and accelerate renders for product design and architecture, engineering, and construction workflows. The L40 GPU delivers hardware support for structural sparsity and optimized TF32 format for out-of-the-box performance gains for faster AI and data science model training. The accelerated AI-enhanced graphics capabilities, including DLSS, deliver upscaled resolution with better performance in select applications.

The L40’s large GPU memory tackles memory-intensive applications and workloads such as data science, simulation, 3D modeling, and rendering with 48GB of ultra-fast GDDR6 memory. Memory is allocated to multiple users with vGPU software to distribute large workloads among creative, data science, and design teams.

Designed for 24×7 enterprise data center operations with power-efficient hardware and components, the NVIDIA L40 is optimized to deploy at scale and deliver maximum performance for diverse data center workloads. The L40 includes secure boot with the root of trust technology, providing an additional layer of security, and is NEBS Level 3 compliant to meet data center standards.

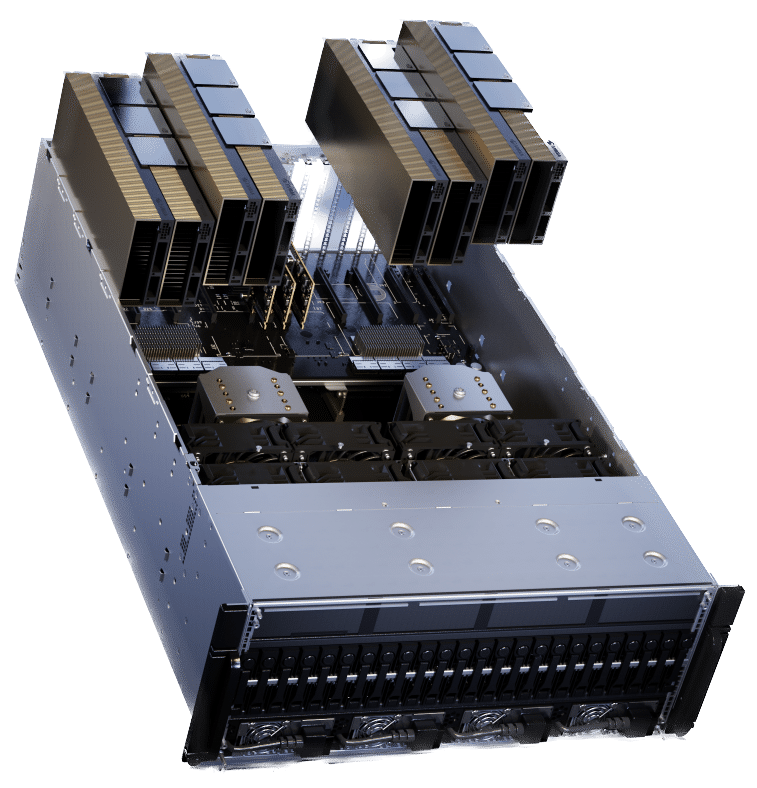

The NVIDIA H100 NVL for Large Language Model Deployment is ideal for deploying massive LLMs like ChatGPT at scale. The new H100 NVL comes with 94GB of memory with Transformer Engine acceleration and delivers up to 12x faster inference performance at GPT-3 compared to the prior generation A100 at data center scale.

The PCIe-based H100 NVL with NVLink bridge utilizes Transformer Engine, NVLink, and 188GB HBM3 memory to deliver optimum performance and scaling across data centers. The H100 NVL supports Large Language Models up to 175 billion parameters. Servers equipped with H100 NVL GPUs increase GPT-175B model performance up to 12x over NVIDIA DGX A100 systems while maintaining low latency in power-constrained data center environments.

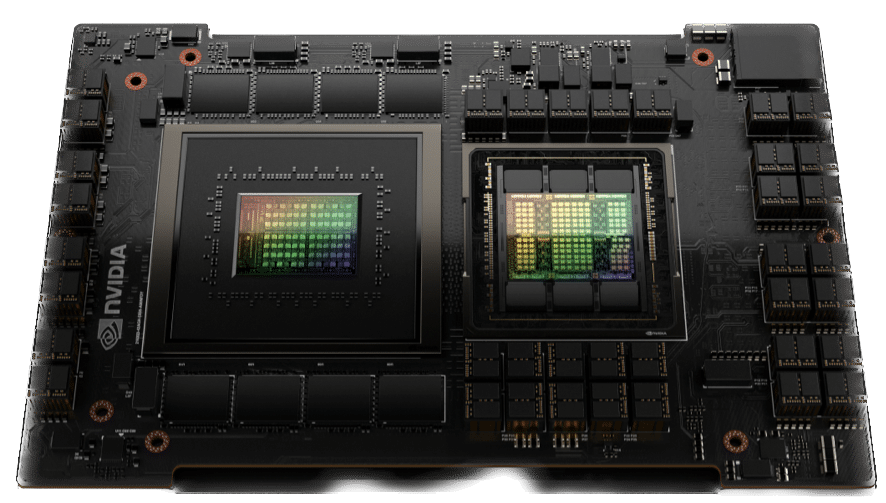

NVIDIA Grace Hopper for Recommendation Models is ideal for graph recommendation models, vector databases, and graph neural networks. With the 900 GB/s NVLink-C2C connection between CPU and GPU, Grace Hopper can deliver 7x faster data transfers and queries than PCIe Gen 5.

The NVIDIA Grace Hopper Superchip is a breakthrough accelerated CPU designed from the ground up for giant-scale AI and high-performance computing (HPC) applications. The superchip will deliver up to 10X higher performance for applications running terabytes of data, enabling scientists and researchers to reach unprecedented solutions for the world’s most complex problems.

The NVIDIA Grace Hopper Superchip combines the Grace and Hopper architectures using NVIDIA NVLink-C2C to deliver a CPU+GPU coherent memory model for accelerated AI and HPC applications. Grace Hopper includes 900 gigabytes per second (GB/s) coherent interface, is 7x faster than PCIe Gen5, and delivers 30x higher aggregate system memory bandwidth to GPU compared to NVIDIA DGX A100. On top of all that, it runs all NVIDIA software stacks and platforms, including the NVIDIA HPC SDK, NVIDIA AI, and NVIDIA Omniverse.

Modern recommender system models require substantial amounts of memory for storing embedding tables. Embedding tables contain semantic representations for items and users’ features, which help provide better recommendations to consumers.

Generally, these embeddings follow a power-law distribution for frequency of use since some embedding vectors are accessed more frequently than others. NVIDIA Grace Hopper enables high-throughput recommender system pipelines that

store the most frequently used embedding vectors in HBM3 memory and the remaining embedding vectors in the higher-capacity LPDDR5X memory. The NVLink C2C interconnect provides Hopper GPUs with high-bandwidth access to their local LPDDR5X memory. At the same time, the NVLink Switch System extends this to provide Hopper GPUs with high-bandwidth access to all LPDDR5X memory of all Grace Hopper Superchips in the NVLink network.

Amazon

Amazon