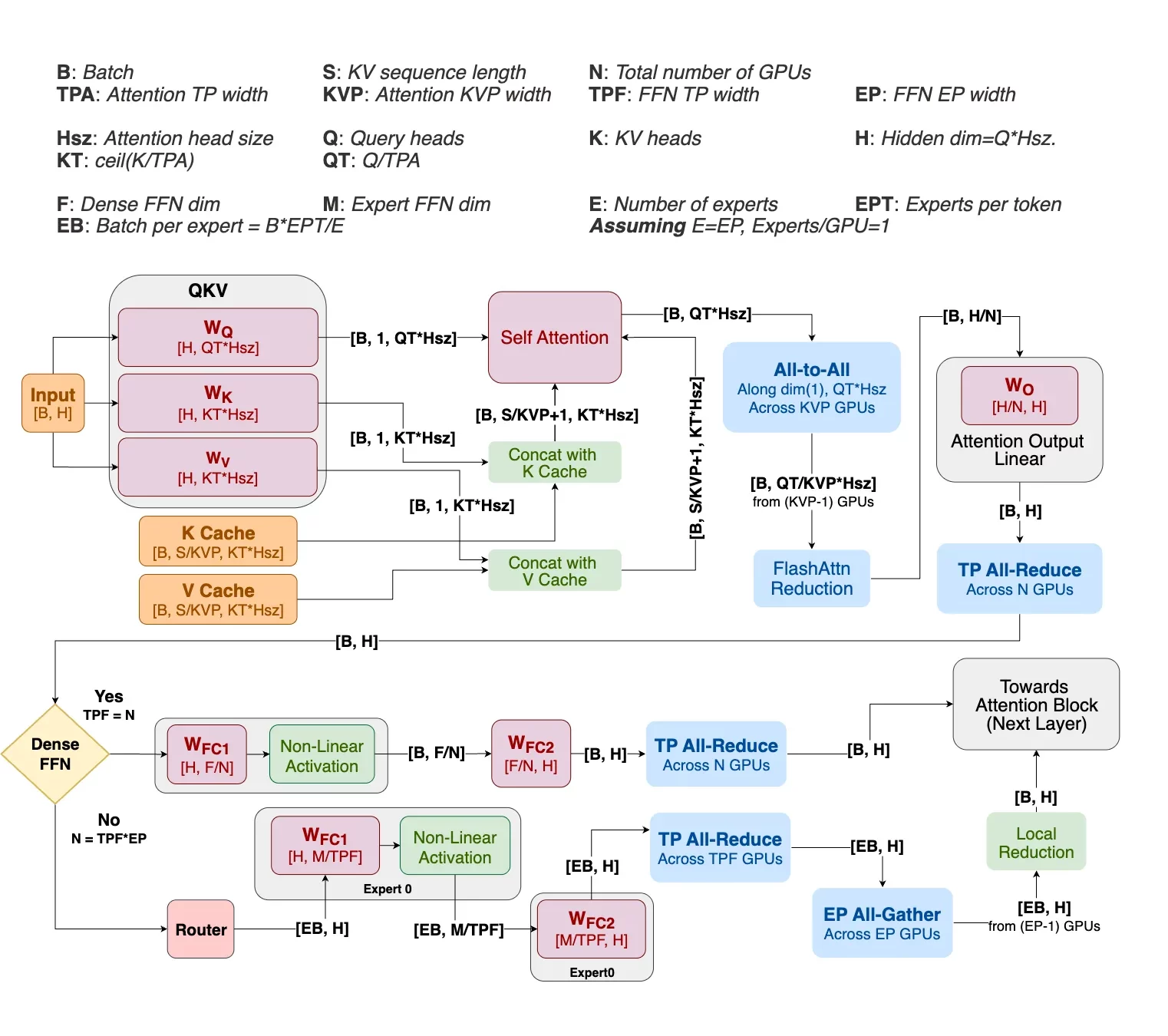

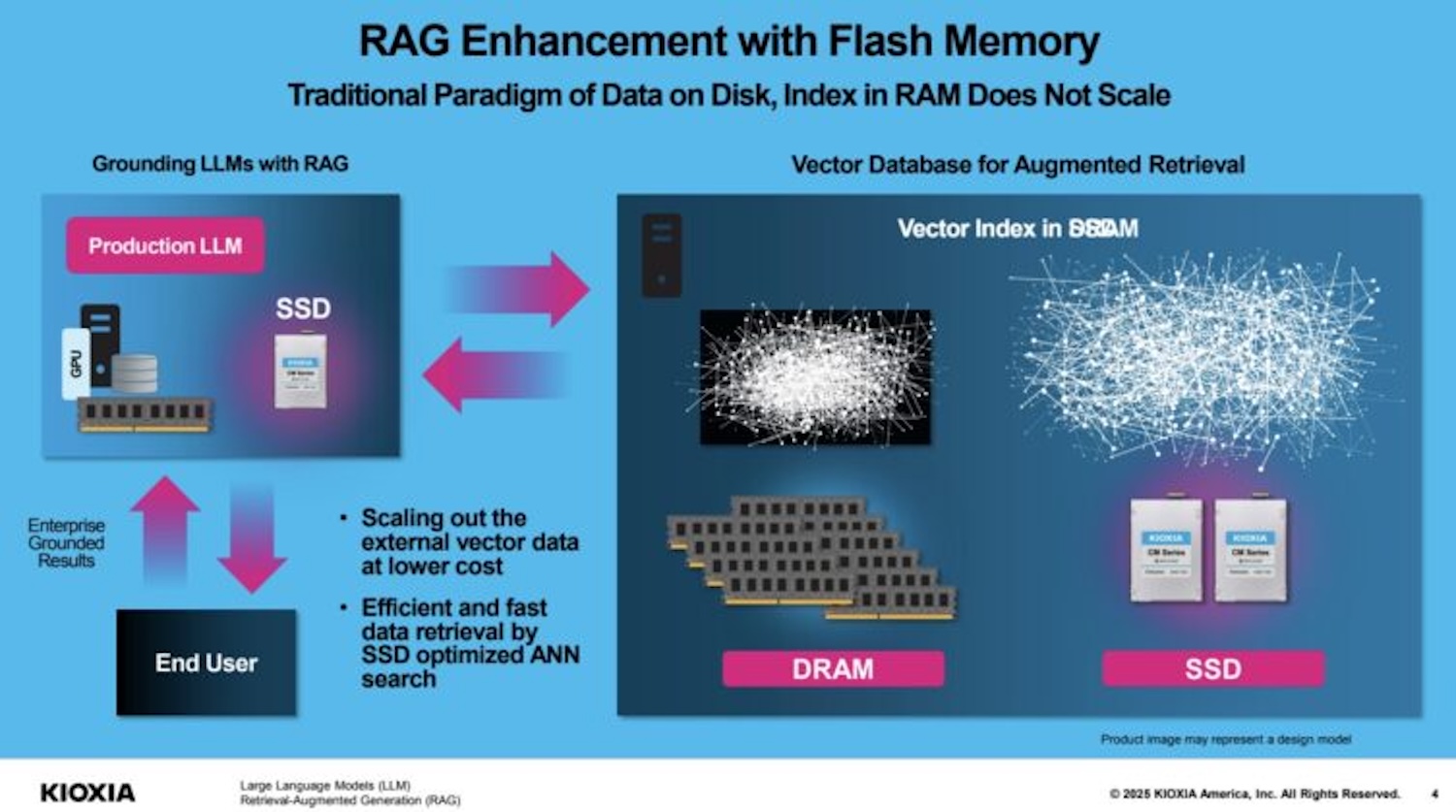

NVIDIA Helix Parallelism boosts real-time LLM performance on Blackwell GPUs, scaling multi-million-token AI with 32x efficiency gains.

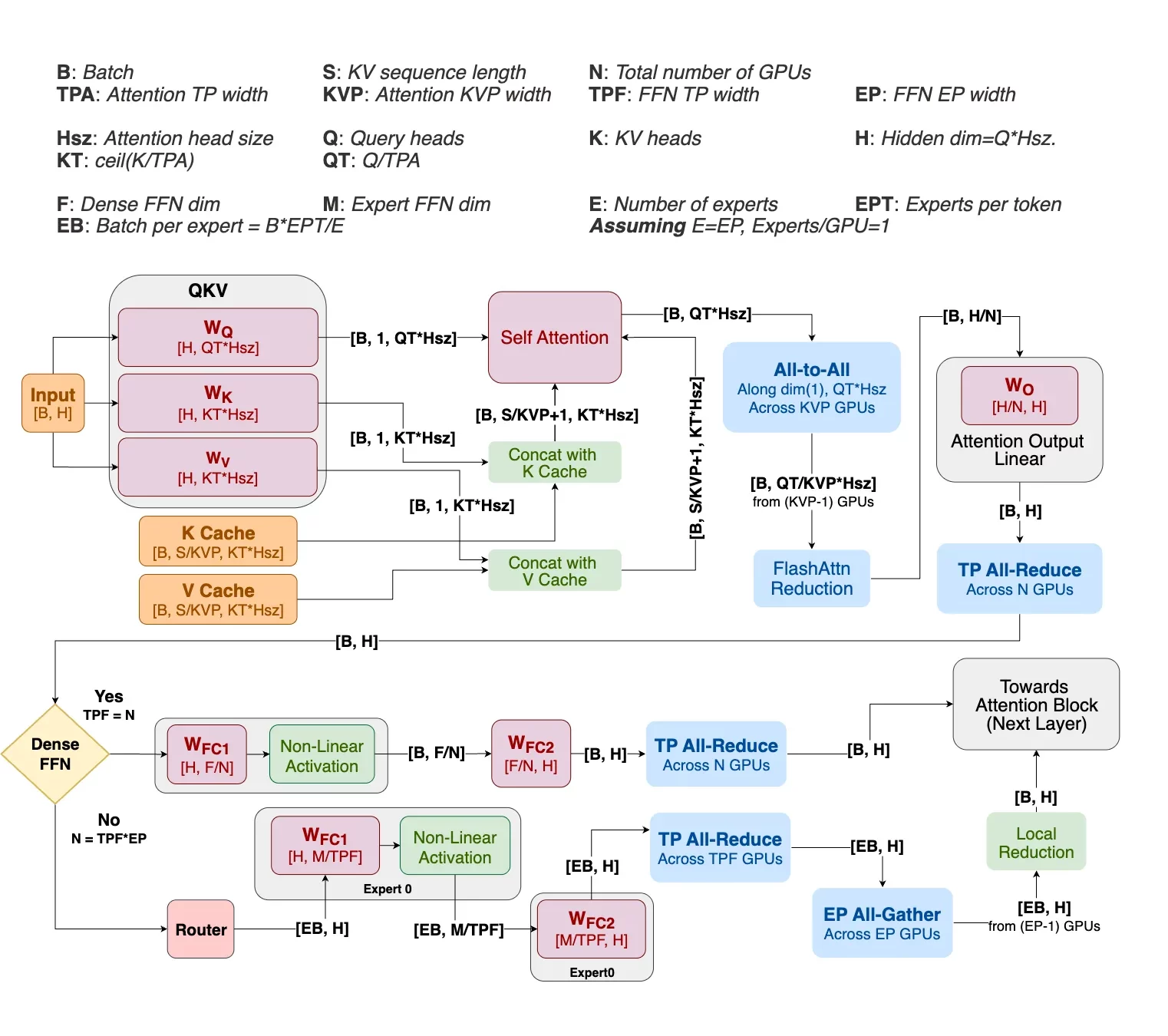

Hypertec TRIDENT iGW610R-G6, a 1U server, supports up to four full-height GPUs in a single-phase immersion environment. That’s up to 200 GPUs in a 50U tank.

IBM Power11 servers deliver unprecedented AI performance, hybrid-cloud flexibility, and robust resiliency, ensuring seamless, secure operations for enterprise workloads.

Dell and CoreWeave deliver the first NVIDIA GB300 NVL72 system, setting a new benchmark in AI performance and scalability for enterprise workloads.

Dell PowerScale earns NVIDIA Cloud Provider program certification.

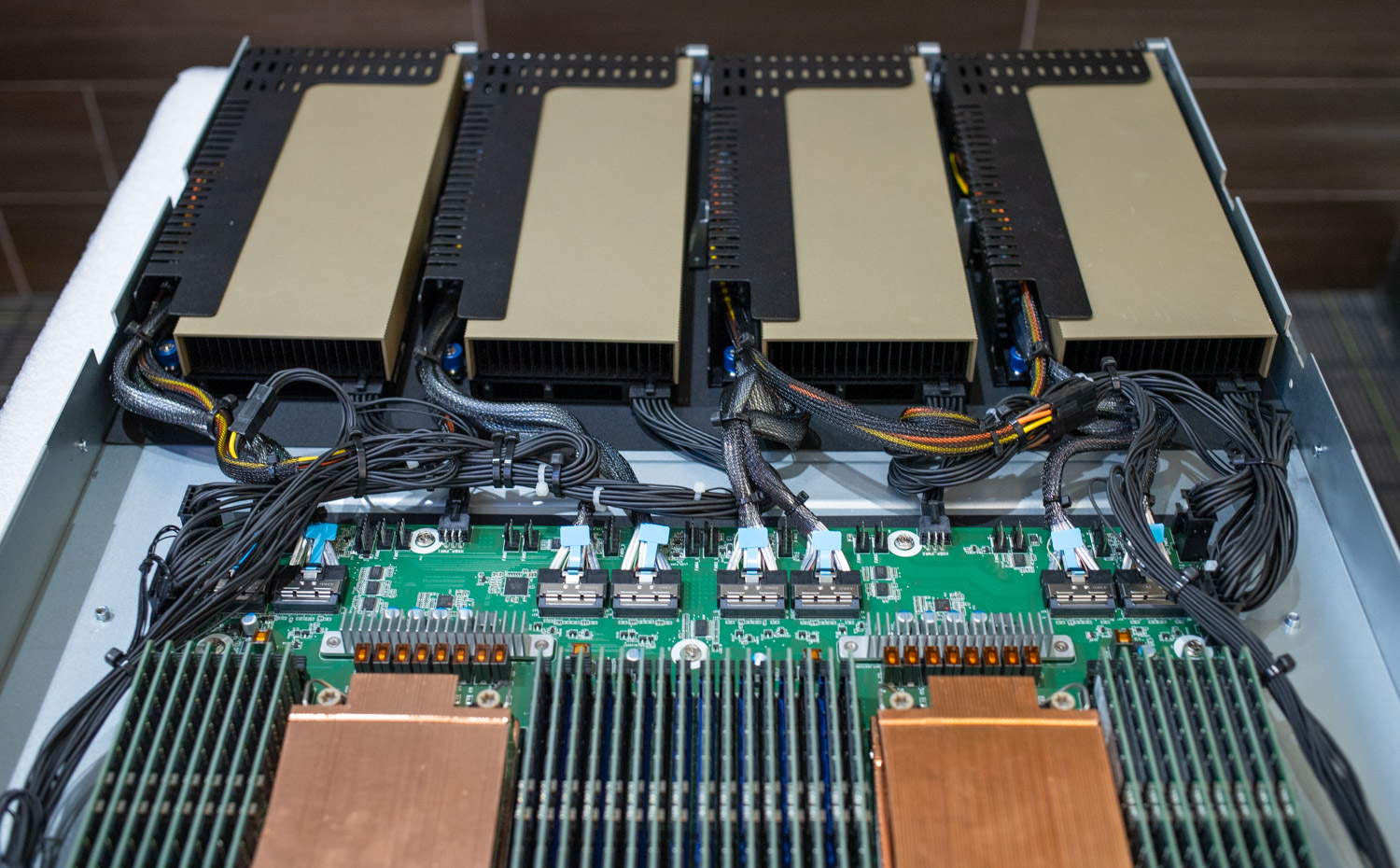

KIOXIA delivers a significant update to its open-source AiSAQ software, featuring advanced usability and flexibility in AI database searches within RAG systems.

HPE GreenLake Intelligence uses a new agentic AI framework that unifies and automates operations across the entire IT stack.

HPE simplifies AI adoption with integrated, end-to-end solutions and services.

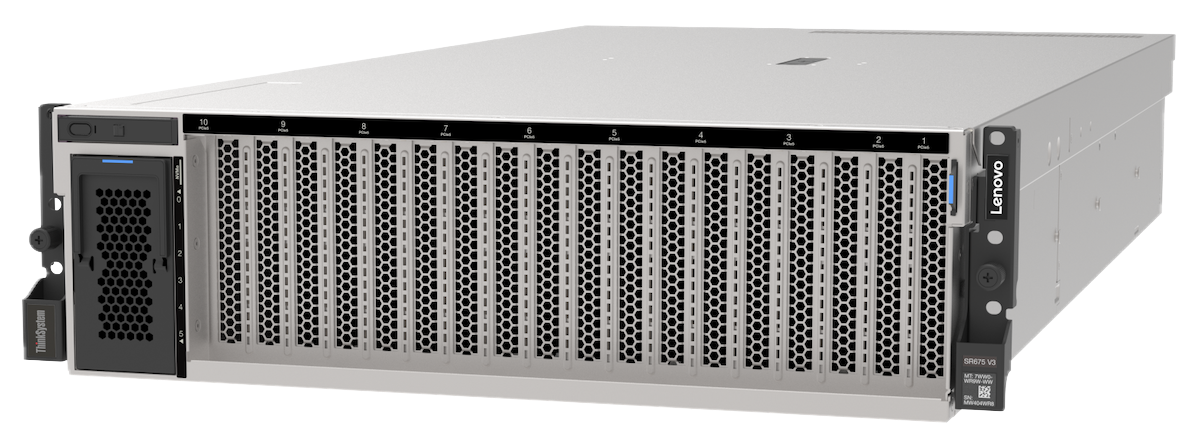

Lenovo expands Hybrid AI Advantage with full-stack solutions, platforms, and services to accelerate enterprise AI adoption and ROI.

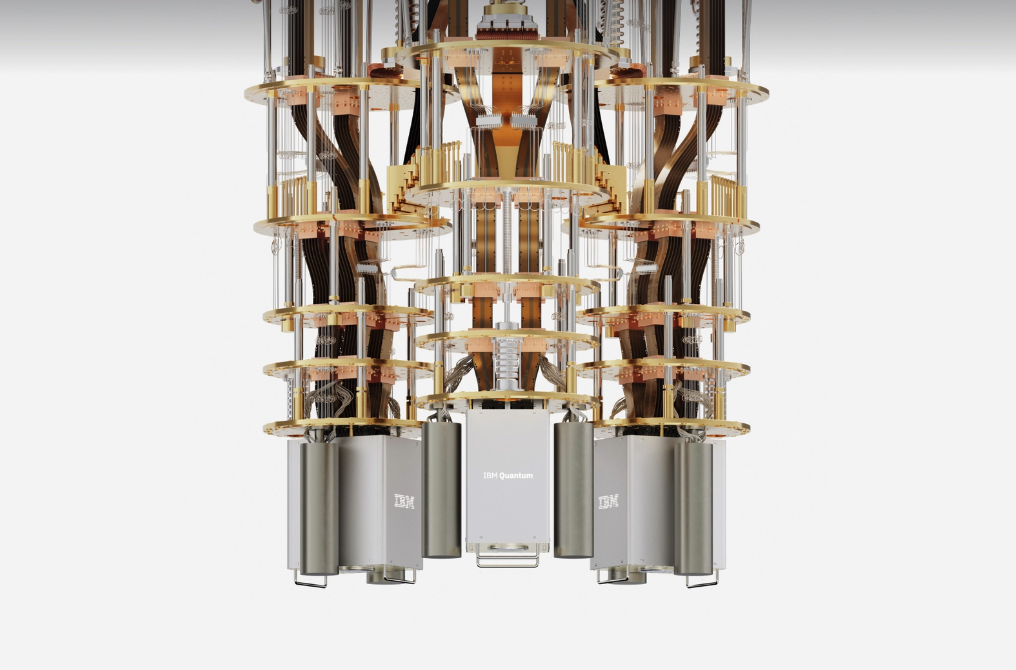

IBM unveils Starling, a fault-tolerant quantum computer, with a roadmap to 2,000 logical qubits and 1 billion operations by 2033.