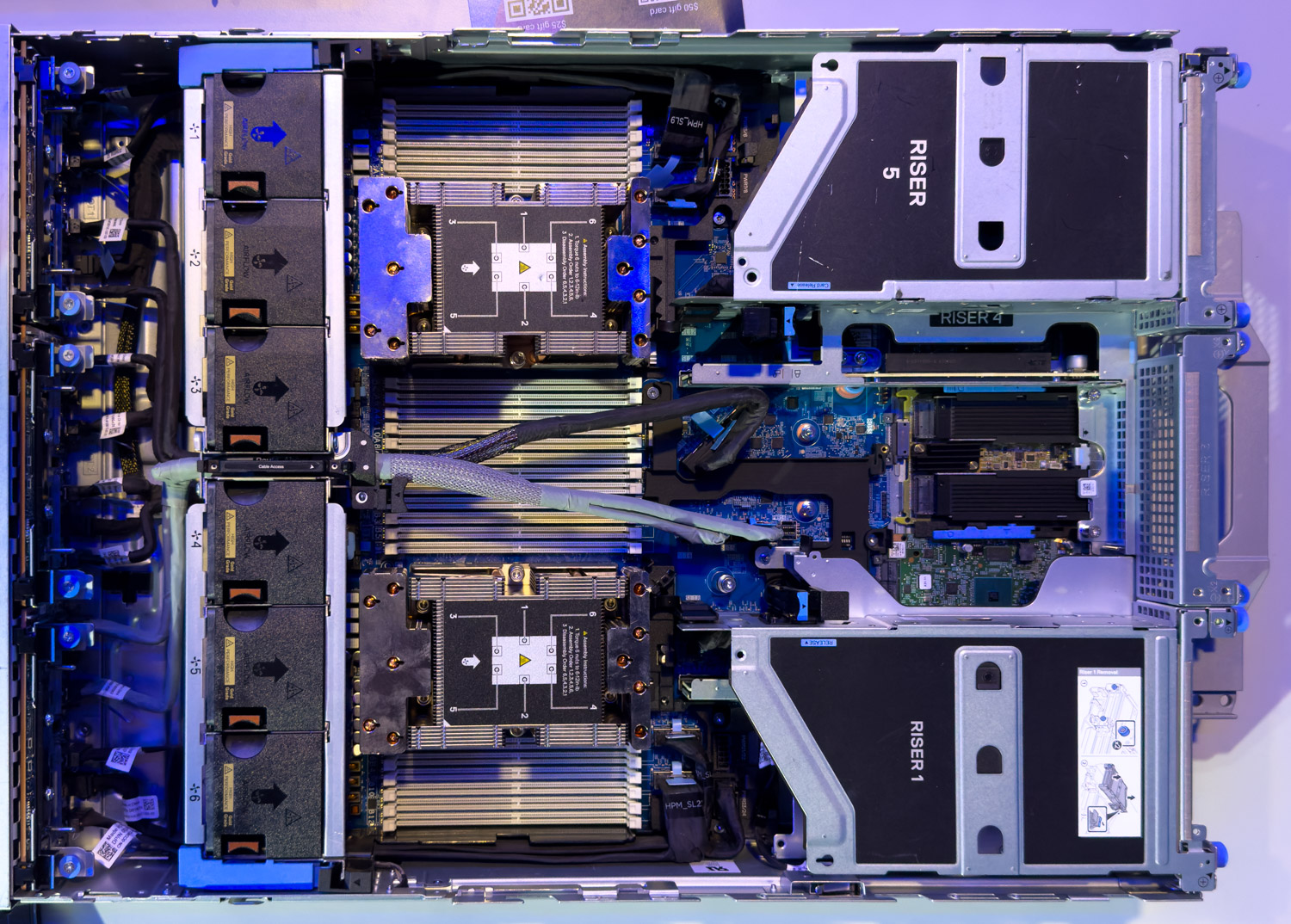

Dell PowerEdge R7725xd delivers uncompromised Gen5 NVMe performance with 24 PCIe x4 U.2 bays and up to 3PB of flash in a 2U form factor.

E2 SSDs are poised to redefine high-capacity storage with up to 1PB per drive, bridging the gap between HDDs and high-performance flash.

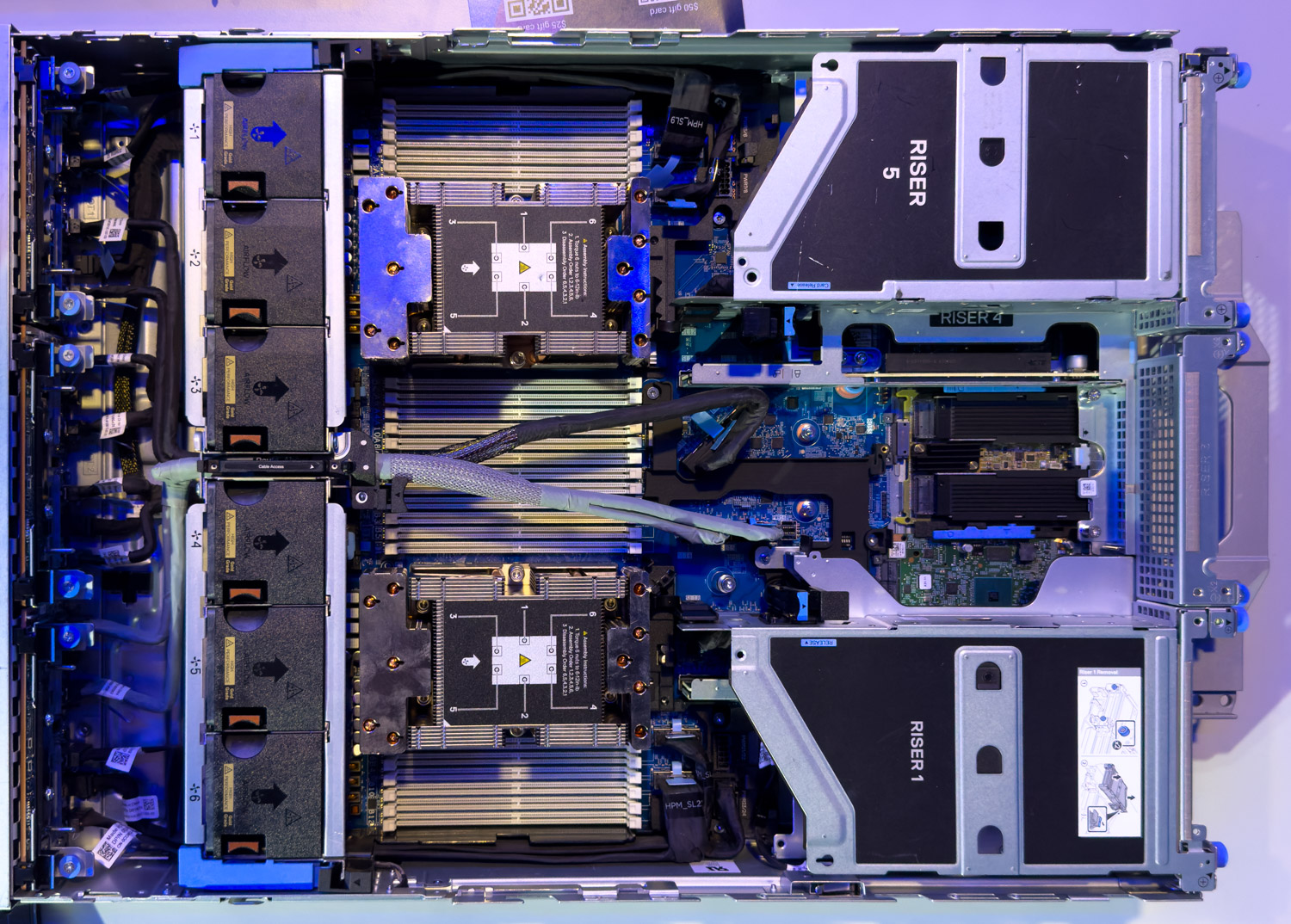

Broadcom’s 2025 report shows private cloud rising to parity with public cloud, driven by AI, security, and cost control priorities.

The Enosemi acquisition aims to scale advanced photonics and co-packaged optics solutions.

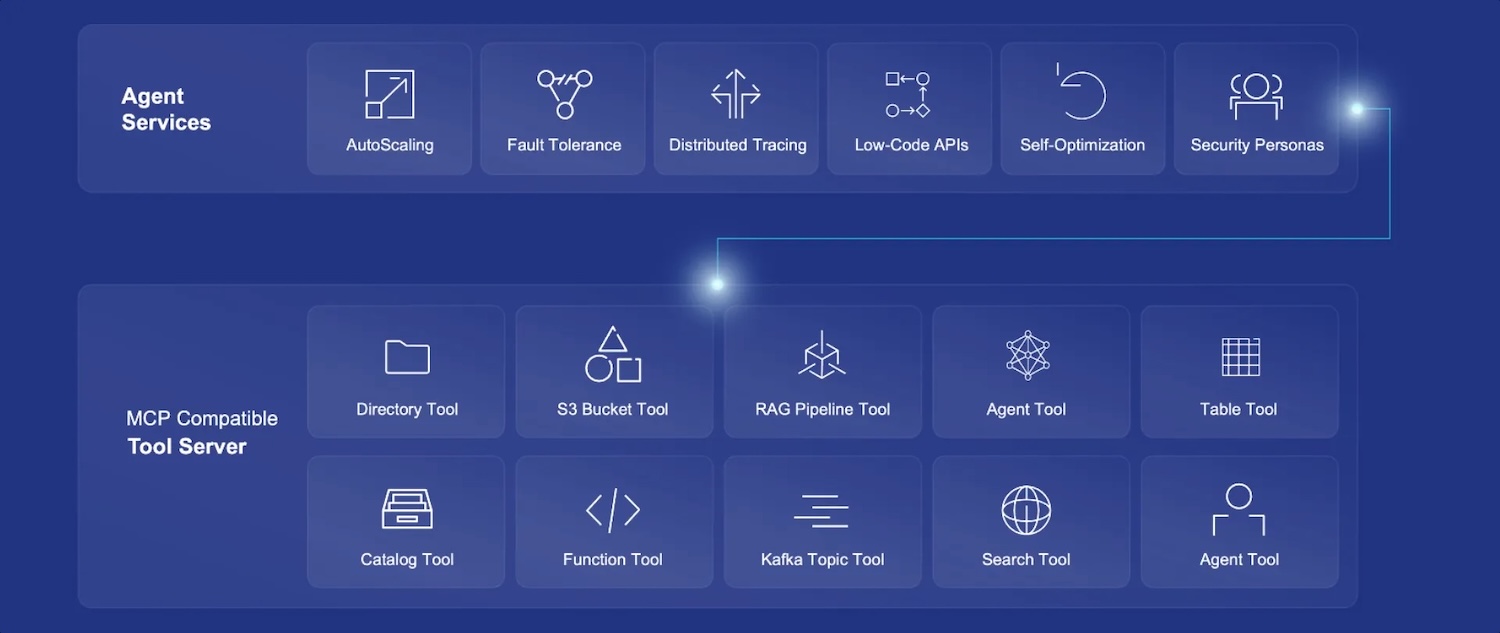

VAST Data has announced the launch of the VAST AI Operating System, a purpose-built platform designed to power the next era of AI innovation.

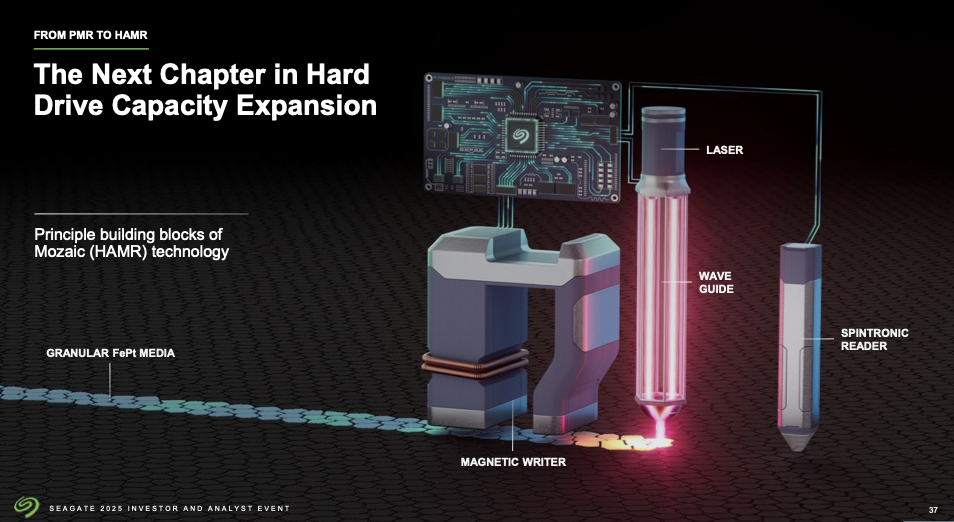

Seagate advances HAMR tech with its Mozaic platform, laying the groundwork for 100TB hard drives to meet growing AI and hyperscale storage demands.

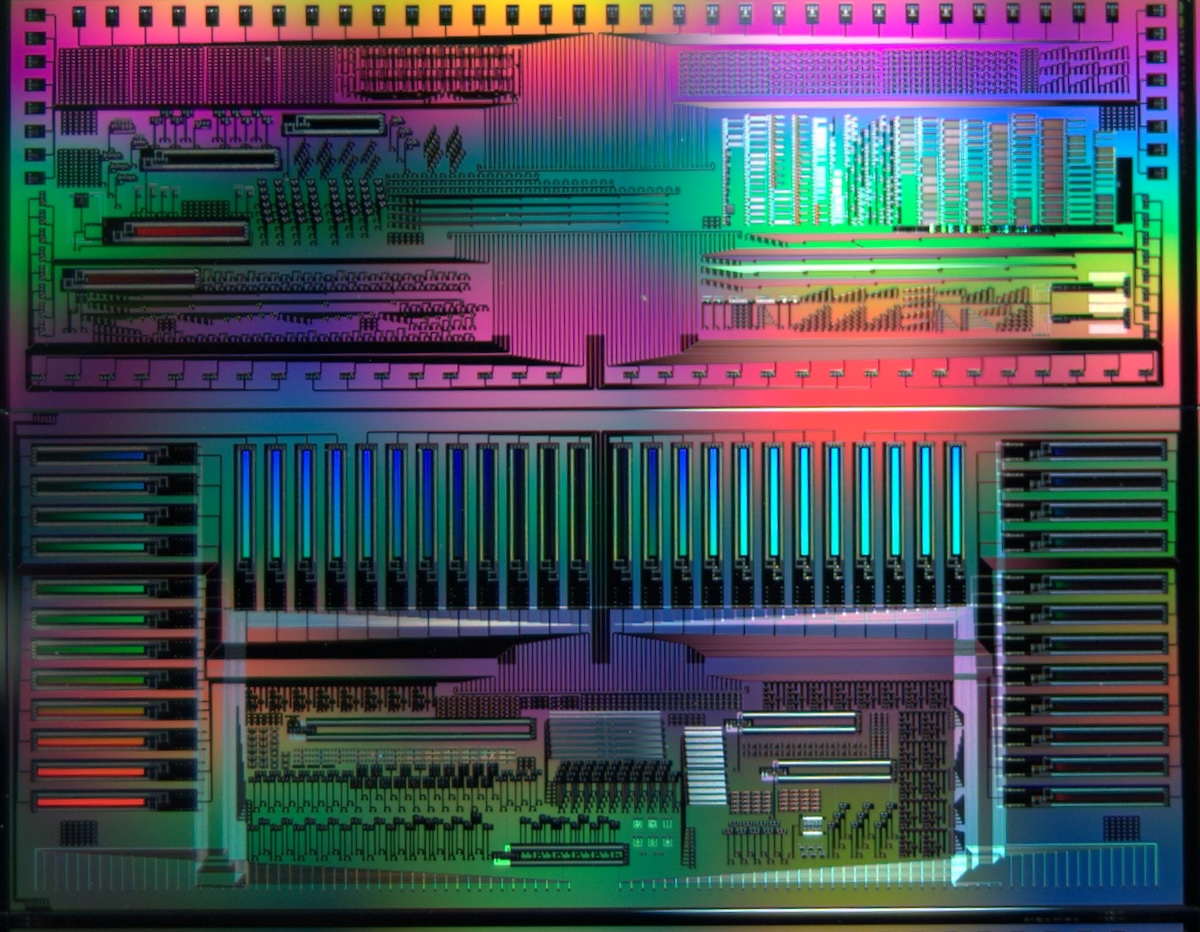

Intel Xeon 6 CPUs feature up to 128 P-cores, Priority Core Turbo, and SST-TF, delivering performance gains in AI, data centers, and telecom.

The Synology PAS7700 is a high-speed, all-NVMe storage platform offering 30GB/s throughput, 2M IOPS, and up to 1.65PB capacity.

At DTW 2025, Dell unveiled significant advances across storage, cyber resiliency, private cloud automation, and edge operations with NativeEdge.

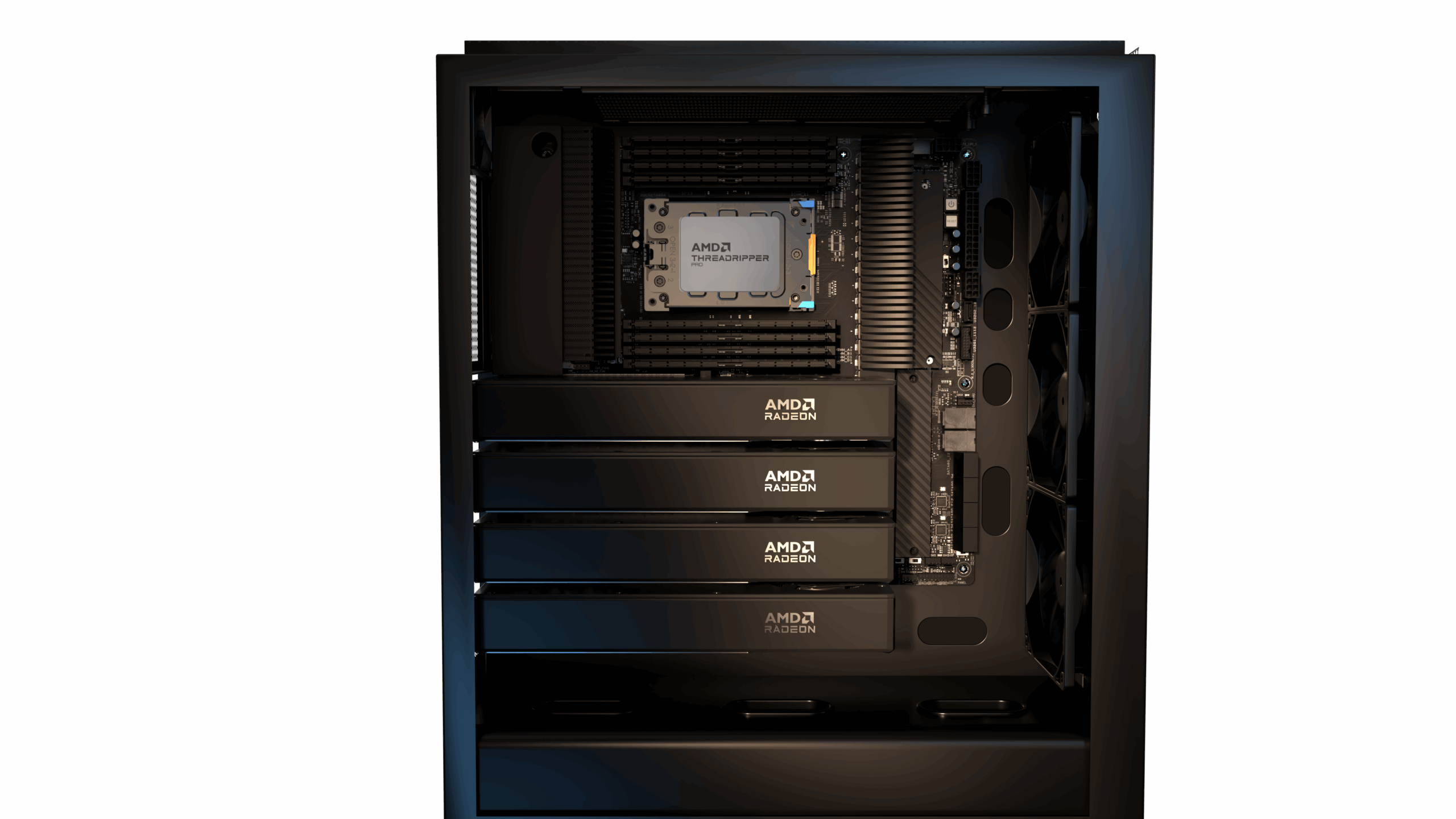

AMD unveils Ryzen Threadripper 9000 CPUs, Radeon AI PRO R9700 GPU, and RX 9060 XT GPU at COMPUTEX 2025, expanding pro and AI offerings.