Supermicro announced expanded rack-scale manufacturing capacity and upgraded direct liquid cooling capabilities to support the upcoming NVIDIA Vera Rubin NVL72 and NVIDIA HGX Rubin NVL8 platforms. The company is positioning the move as a way to shorten deployment timelines for high-density AI infrastructure. It does this by combining US-based in-house design and manufacturing with its Data Center Building Block Solutions (DCBBS) approach and an end-to-end liquid-cooling technology stack.

Supermicro CEO Charles Liang emphasized that the company’s collaboration with NVIDIA and its building-block design methodology are intended to accelerate the delivery of advanced AI platforms. He also highlighted Supermicro’s expanded manufacturing footprint and liquid-cooling expertise as key enablers of efficient, reliable, large-scale deployments of Vera Rubin and Rubin systems across hyperscale and enterprise environments.

Rack-Scale Flagship: NVIDIA Vera Rubin NVL72 SuperCluster

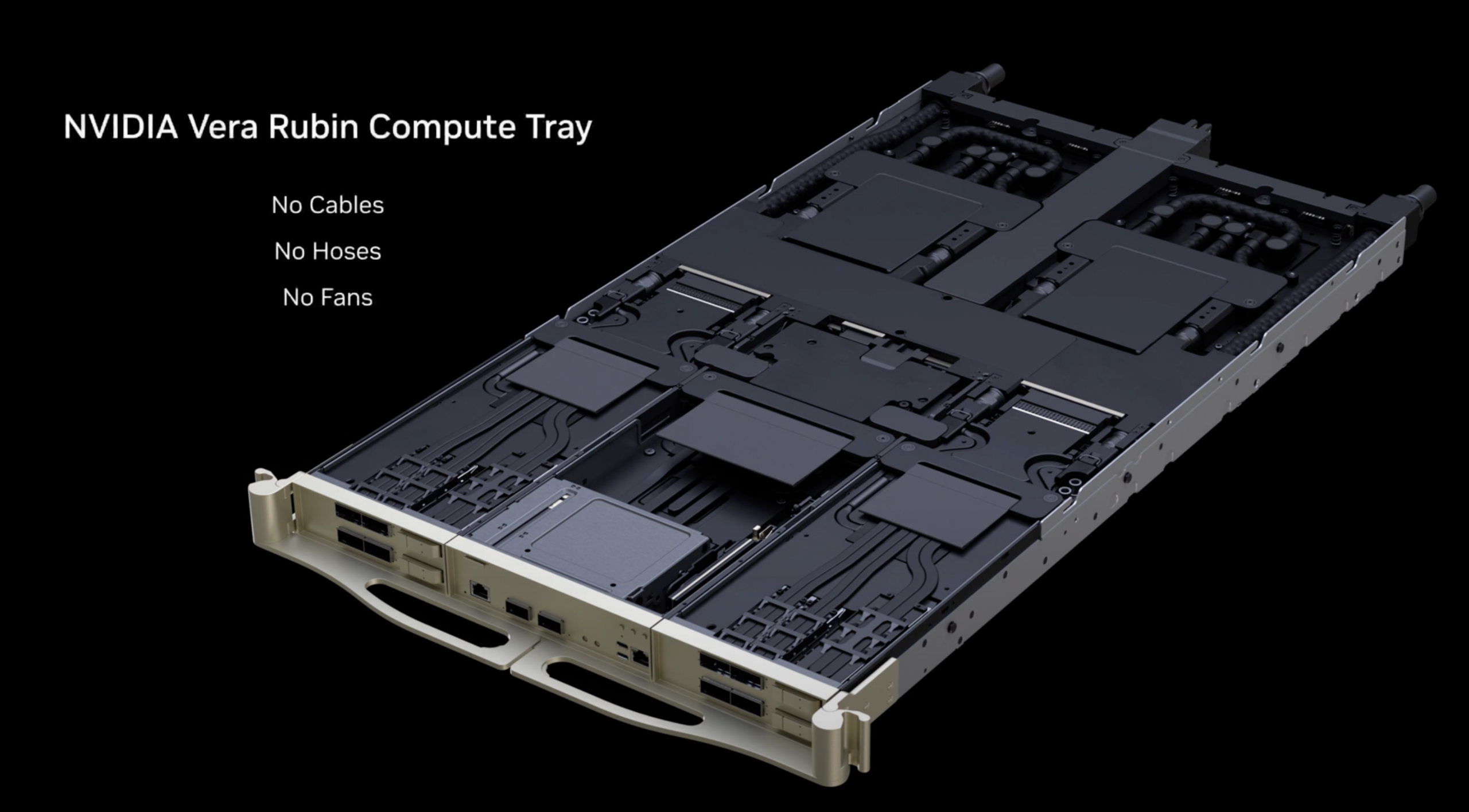

At the top end, Supermicro will support the NVIDIA Vera Rubin NVL72 SuperCluster. This rack-scale system integrates 72 NVIDIA Rubin GPUs and 36 NVIDIA Vera CPUs, along with NVIDIA ConnectX-9 SuperNICs and NVIDIA BlueField-4 DPUs. The platform uses NVIDIA NVLink 6 as its intra-rack scale-up fabric. It is designed to scale out over NVIDIA Quantum-X800 InfiniBand and NVIDIA Spectrum-X Ethernet. This aligns with the current industry push toward rack-as-a-system designs for training, inference, and agentic AI at scale.

NVIDIA rates the NVL72 configuration at 3.6 exaflops of NVFP4 performance, backed by 1.4 PB/s of HBM4 bandwidth and 75 TB of fast memory. Supermicro’s implementation is built on the 3rd-generation NVIDIA MGX rack architecture, with an emphasis on serviceability, reliability, and availability to support sustained cluster operations.
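
Dividing the rack-level rating by the component counts given above shows what each GPU contributes. The sketch below is simple arithmetic on the quoted figures. It assumes the 3.6-exaflop NVFP4 rating is shared evenly across all 72 GPUs, and the variable names are illustrative only.

```python
# Rack-level figures quoted for the Vera Rubin NVL72.
# Assumption: the NVFP4 rating is spread evenly across all GPUs.
NVL72_GPUS = 72
NVL72_CPUS = 36
NVL72_NVFP4_EXAFLOPS = 3.6

# 1 exaflop = 1,000 petaflops.
nvfp4_pf_per_gpu = NVL72_NVFP4_EXAFLOPS * 1_000 / NVL72_GPUS
gpus_per_cpu = NVL72_GPUS // NVL72_CPUS

print(f"NVFP4 per GPU: {nvfp4_pf_per_gpu:.1f} PF")  # prints "NVFP4 per GPU: 50.0 PF"
print(f"GPUs per Vera CPU: {gpus_per_cpu}")         # prints "GPUs per Vera CPU: 2"
```

In other words, the rack pairs one Vera CPU with every two Rubin GPUs.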

Cooling is central to the design. Supermicro is incorporating an enhanced data-center-scale liquid-cooling stack with in-row Coolant Distribution Units (CDUs). The goal is to enable scalable warm-water cooling that reduces energy and water use while maintaining density and predictable performance under heavy AI workloads.
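
The basic relation behind sizing a CDU loop is the heat balance Q = m_dot × c_p × ΔT. The required coolant mass flow rises with the heat load and falls as the allowed temperature rise across the loop widens. The sketch below applies this relation under stated assumptions:

- The coolant is plain water, with c_p ≈ 4.18 kJ/(kg·K) and density ≈ 1 kg/L.
- The rack heat load is a hypothetical 100 kW. Neither Supermicro nor NVIDIA gave this figure.

```python
# Heat balance for a liquid-cooling loop: Q = m_dot * c_p * delta_T.
# Assumption: plain water; glycol mixes have a lower c_p and need more flow.
WATER_CP_KJ_PER_KG_K = 4.18   # approximate specific heat of water
WATER_DENSITY_KG_PER_L = 1.0  # approximate; warm water is slightly less dense


def required_flow_lpm(heat_kw: float, delta_t_k: float) -> float:
    """Coolant flow in litres per minute needed to remove heat_kw
    with a supply-to-return temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_kw / (WATER_CP_KJ_PER_KG_K * delta_t_k)  # kW = kJ/s
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


# Hypothetical 100 kW rack heat load (illustrative, not a vendor figure).
for delta_t in (5.0, 10.0):
    print(f"dT = {delta_t:g} K -> {required_flow_lpm(100.0, delta_t):.0f} L/min")
```

With these assumptions the loop needs roughly 287 L/min at a 5 K rise and about 144 L/min at 10 K. Halving the temperature rise doubles the flow the CDU must deliver.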

Compact Dense Compute: 2U Liquid-Cooled NVIDIA HGX Rubin NVL8

For enterprises and service providers seeking a more modular building block, Supermicro also highlighted 2U liquid-cooled NVIDIA HGX Rubin NVL8 systems. This 8-GPU platform is aimed at AI and HPC workloads, with NVIDIA quoting 400 petaflops of NVFP4 performance. The platform is specified at 176 TB/s of HBM4 bandwidth and 28.8 TB/s of NVLink bandwidth, with 1,600 Gb/s of NVIDIA ConnectX-9 networking via SuperNICs.
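
Normalizing the HGX Rubin NVL8 figures per GPU makes them easier to compare with the rack-scale system. This is a minimal sketch that assumes each quoted aggregate divides evenly across the eight GPUs. The 1,600 Gb/s networking figure is left out because the text does not say whether it is per GPU or per system.

```python
# Aggregate figures quoted for the 8-GPU HGX Rubin NVL8 system.
NVL8_GPUS = 8
NVL8_TOTALS = {
    "NVFP4 (PF)": 400.0,
    "HBM4 bandwidth (TB/s)": 176.0,
    "NVLink bandwidth (TB/s)": 28.8,
}

# Assumption: each aggregate is split evenly across the GPUs.
per_gpu = {name: total / NVL8_GPUS for name, total in NVL8_TOTALS.items()}

for name, value in per_gpu.items():
    print(f"{name} per GPU: {value:g}")
```

This works out to 50 PF of NVFP4, 22 TB/s of HBM4 bandwidth, and 3.6 TB/s of NVLink bandwidth per GPU. The 50 PF figure matches the NVL72 rating divided by its 72 GPUs (3.6 EF / 72), which suggests both platforms are rated on the same per-GPU basis.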

Supermicro is offering a rack-scale design intended to preserve deployment flexibility, with configuration options featuring flagship x86 CPUs, including next-generation Intel Xeon and AMD EPYC processors. The company also highlighted a high-density 2U busbar design for rack integration, paired with Supermicro’s direct liquid cooling (DLC) technology to support higher sustained power envelopes without sacrificing serviceability.

Vera Rubin Platform Features

Supermicro’s platform support aligns with several key Vera Rubin-era capabilities that directly impact cluster design and operational planning, including:

- NVIDIA NVLink 6 is a high-speed interconnect fabric that enables higher bandwidth and lower latency for GPU-to-GPU and CPU-to-GPU communication. This is especially relevant for training and inference of very large mixture-of-experts models, where communication patterns can dominate performance at scale.

- The NVIDIA Vera CPU features NVIDIA-designed Arm cores and is claimed to deliver a 2x performance uplift over the prior generation. It has 88 cores and runs 176 threads through spatial multithreading. It also offers 1.2 TB/s of LPDDR5X memory bandwidth and greater memory capacity than its predecessor. Vera also tightens CPU-to-GPU coupling with 1.8 TB/s of NVLink-C2C bandwidth to the GPUs, cited as 2x the previous generation.

- NVIDIA’s 3rd Generation Transformer Engine targets long-context and narrow-precision acceleration. This has become critical as modern inference shifts toward persistent sessions, multi-agent pipelines, and higher concurrency. The platform also incorporates 3rd Generation Confidential Computing, described as rack-scale confidential computing with a unified GPU-level trusted execution environment that protects and isolates models, data, and prompts. For operators deploying AI into regulated workflows, confidential computing and isolation at the GPU execution boundary are increasingly important platform selection criteria.

- Reliability improvements are enabled by the 2nd Generation RAS Engine, which includes advanced health monitoring and real-time checks to reduce downtime. In dense liquid-cooled environments, fault detection and serviceability are essential because maintenance windows are costly and operational tolerance for instability is low.

Networking: Spectrum-X Ethernet Photonics and Rack-Scale Throughput

Supermicro also tied its Rubin platform support to NVIDIA’s Spectrum-X Ethernet Photonics, built on the Spectrum-6 Ethernet ASIC. NVIDIA specifies 102.4 Tb/s of switching on TSMC 3nm, with co-packaged optics using 200G SerDes and fully shared buffers.

The stated objective is to improve efficiency and operational stability compared with traditional pluggable optics. NVIDIA cites 5x higher power efficiency, 10x higher reliability, and 5x higher application uptime. NVIDIA listed multiple switch models, including:

- SN6800: liquid-cooled, 409.6 Tb/s with co-packaged optics (CPO) and 512x 800G ports.

- SN6810: 102.4 Tb/s CPO with 128x 800G ports.

- SN6600: pluggable optics and 128x 800G ports, available in air- or liquid-cooled configurations.

For data center operators, these designs point toward higher-density 800G Ethernet fabrics with improved power and thermal characteristics. This is increasingly relevant as networking power, particularly for optics, becomes a measurable portion of rack budgets.
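
The quoted switch capacities follow directly from port count multiplied by per-port speed. Here is a quick check that uses only the port figures listed for each model. The SN6600 aggregate is computed here rather than quoted.

```python
# Port counts and per-port speeds (Gb/s) listed for the Spectrum-X switch models.
SWITCHES = {
    "SN6800": (512, 800),
    "SN6810": (128, 800),
    "SN6600": (128, 800),
}


def aggregate_tbps(ports: int, port_gbps: int) -> float:
    """Aggregate switching capacity in Tb/s (1 Tb/s = 1,000 Gb/s)."""
    return ports * port_gbps / 1_000


capacities = {model: aggregate_tbps(*spec) for model, spec in SWITCHES.items()}
for model, tbps in capacities.items():
    print(f"{model}: {tbps:.1f} Tb/s")
```

The computed values match the 409.6 Tb/s and 102.4 Tb/s ratings quoted for the SN6800 and SN6810. The SN6600's 128 ports at 800G work out to 102.4 Tb/s.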

Storage Integration for AI Factories

On the storage side, Supermicro highlighted complementary solutions built on its petascale all-flash storage server and JBOF systems. These platforms support NVIDIA BlueField-4 DPUs and a range of data management solutions, aligning storage and networking with the same rack-scale, accelerated architecture used by the compute layer.

Although Supermicro did not provide performance figures for the storage platforms in this announcement, the emphasis on integration signals a continued move toward validated full-stack designs in which compute, network, and storage are qualified together for AI factory deployments.

Manufacturing Scale and Time-to-Online

The operational theme of the announcement is manufacturing throughput and deployment velocity. Supermicro’s expanded facilities and liquid-cooling stack are designed to streamline production and delivery of fully liquid-cooled Vera Rubin and Rubin platforms. At the same time, the modular DCBBS architecture enables rapid configuration, validation, and scaling. For enterprise technical buyers, the differentiator is less about a single chassis specification and more about predictable delivery, repeatable rack builds, and reduced time from equipment receipt to stable production service.

Supermicro is betting that as liquid cooling becomes the default for top-tier AI density, vendors that can industrialize rack-scale manufacturing and validation will be best positioned to support large-scale rollouts without extended integration cycles.
