Supermicro showcased a new range of U.S.-made AI systems that comply with the Trade Agreements Act (TAA) at the NVIDIA GTC DC25 event. The systems are designed to meet the demanding needs of federal agencies and high-assurance organizations. The company also plans to release next-generation NVIDIA AI platforms in 2026, including the NVIDIA Vera Rubin NVL144 and the NVIDIA Vera Rubin NVL144 CPX, and it announced new solutions for deskside AI development and for large-scale HPC and AI factories.

Supermicro designs, manufactures, and validates these systems in San Jose, California. This strategy ensures compliance with TAA requirements, eligibility under the Buy American Act, and a more secure supply chain for government customers.

Charles Liang, Supermicro’s president and CEO, emphasized the strong partnership with NVIDIA and the commitment to local manufacturing. He sees these as key advantages for federal AI deployments. Liang positions Supermicro as a leading American innovator in AI infrastructure. The long-standing collaboration with NVIDIA enables rapid adoption of new platforms while meeting strict U.S. government requirements.

Highlights for Federal Workloads

Supermicro is providing extensive support across the latest NVIDIA architectures to deliver high performance, efficiency, and scalability for critical workloads. This includes:

- NVIDIA HGX B300 and B200

- NVIDIA GB300 and GB200

- NVIDIA RTX PRO 6000 Blackwell Server Edition

The targeted use cases include:

- Cybersecurity and risk detection

- Engineering and design

- Healthcare and life sciences

- Data analytics and fusion platforms

- Modeling and simulation

- Secure virtualized infrastructure

2026 Roadmap: NVIDIA Vera Rubin Platforms

Supermicro intends to introduce the NVIDIA Vera Rubin NVL144 and NVL144 CPX platforms in 2026. These systems are designed to exceed previous training and inference capabilities, allowing agencies and integrators to manage complex, multi-tenant AI workloads more efficiently and reliably.

AI Factory for Government: Enhanced Reference Design

Building on NVIDIA’s AI Factory for Government reference design, Supermicro is expanding its government-focused offerings with systems tailored for secure, multi-workload AI operations, both on-premises and in hybrid cloud environments. The updated lineup includes:

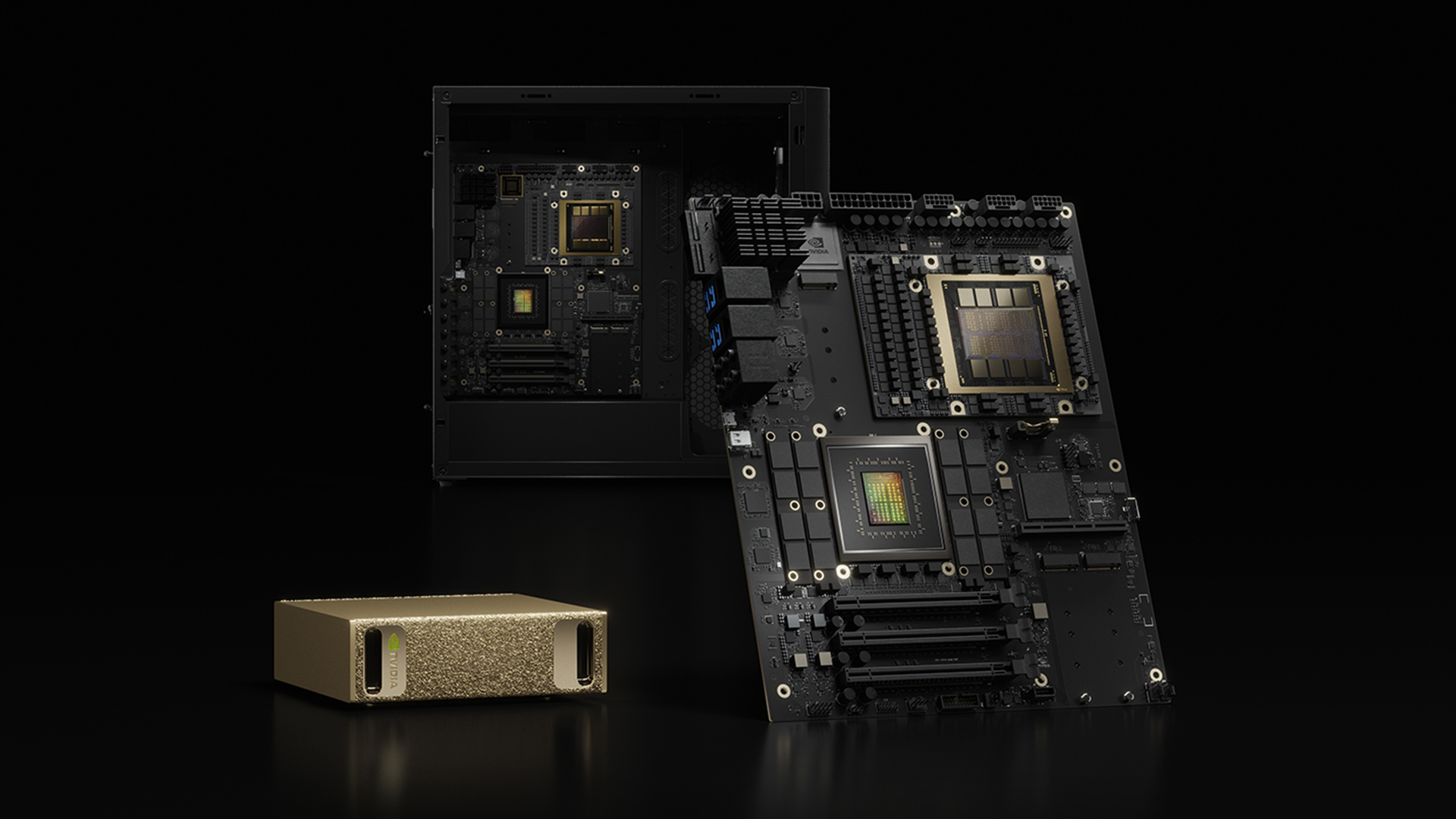

- Super AI Station based on NVIDIA GB300 for deskside development and prototyping

- Rack-scale NVIDIA GB200 NVL4 HPC solutions for extensive training, simulation, and scientific workloads

These products prioritize security, reliability, and scalability in line with federal standards and requirements.

Accelerated Networking: Early Support for BlueField-4 and ConnectX-9

Supermicro announced plans to support the new NVIDIA BlueField-4 DPU and NVIDIA ConnectX-9 SuperNIC in large-scale AI factories. When available, these technologies will help to speed up:

- Cluster-scale AI networking

- Secure, high-throughput storage access

- Data processing offload and infrastructure efficiency

Supermicro’s modular hardware design reduces the need for redesign, allowing for quick adoption of new DPUs and NICs. This approach helps shorten time-to-market and lower lifecycle costs.

New: Super AI Station (Deskside GB300)

Supermicro launched the liquid-cooled ARS-511GD-NB-LCC Super AI Station. This deskside system delivers server-grade AI capabilities and is powered by the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip. It is intended for organizations that require on-premises, low-latency AI without relying on cloud services. The platform is well suited to model training, fine-tuning, application development, and algorithm prototyping, and can support models with up to 1 trillion parameters.

Key platform features include:

- NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip

- Up to 784 GB of coherent memory

- Integrated NVIDIA ConnectX-8 SuperNIC

- Closed-loop, direct-to-chip liquid cooling for CPU, GPU, NIC, and memory

- Up to 20 PFLOPS AI performance

- Bundled NVIDIA AI software stack

- Optional additional PCIe GPU for rendering and graphics acceleration

- 5U desktop tower with optional rack-mounting

- 1600W PSU compatible with standard outlets

This deskside system delivers over five times the AI PFLOPS of typical PCIe GPU workstations, combining lab flexibility with production-class performance in secure settings.
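To give a feel for the memory figures above, the following sketch estimates how much memory the weights of a 1-trillion-parameter model would need at several numeric precisions and compares that with the 784 GB of coherent memory. It counts weights only and ignores activations, KV cache, and optimizer state. The precision list and helper names are illustrative assumptions, and the source does not say which precision the 1-trillion-parameter figure refers to.

```python
# Weights-only memory estimate for a large model vs. the station's memory.
# Assumptions (not from the source): 1 GB = 10**9 bytes, and only model
# weights are counted (no activations, KV cache, or optimizer state).

COHERENT_MEMORY_GB = 784            # "up to 784 GB of coherent memory"
PARAMS = 1_000_000_000_000          # 1 trillion parameters

# Bits per parameter for a few common formats (illustrative list).
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weights_gb(params: int, bits: int) -> float:
    """Return the size of the weights in gigabytes (10**9 bytes)."""
    return params * bits / 8 / 1e9


def fits(params: int, bits: int, memory_gb: float = COHERENT_MEMORY_GB) -> bool:
    """True if the weights alone fit within the given memory."""
    return weights_gb(params, bits) <= memory_gb


if __name__ == "__main__":
    for name, bits in BITS_PER_PARAM.items():
        size = weights_gb(PARAMS, bits)
        print(f"{name}: {size:.0f} GB, fits={fits(PARAMS, bits)}")
```

Under these assumptions only the 4-bit case (about 500 GB) fits within 784 GB, which suggests the 1-trillion-parameter figure presumes low-precision weights. Treat that as an inference from the arithmetic, not a statement from Supermicro or NVIDIA.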

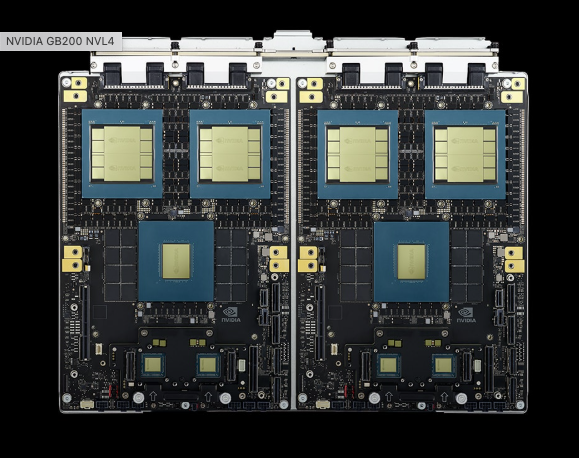

Now Available: Rack-Scale GB200 NVL4 for HPC and AI

Supermicro’s ARS-121GL-NB2B-LCC NVL4 rack-scale solution is now available for GPU-accelerated HPC and AI science workloads, such as molecular simulation, weather modeling, fluid dynamics, and genomics. Each node connects four NVIDIA Blackwell GPUs over NVLink with two NVIDIA Grace CPUs via NVLink-C2C. This setup provides high-bandwidth, low-latency compute fabrics.

Notable features:

- Four B200 GPUs and two Grace CPUs per node with direct-to-chip liquid cooling

- Four 800G NVIDIA Quantum InfiniBand ports per node, delivering 800G per B200 GPU (alternative NICs available)

- Up to 128 GPUs in a 48U NVIDIA MGX rack for high rack density

- Busbar power distribution for easy scaling

- Compatible with in-rack or in-row CDUs for liquid cooling
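The density and networking figures above imply some simple per-rack arithmetic. The sketch below works through it in Python, assuming that "800G" means 800 Gb/s per port and that the rack is fully populated with identical nodes. The function and variable names are illustrative and not part of any Supermicro or NVIDIA tool.

```python
# Back-of-the-envelope rack math for the GB200 NVL4 figures quoted above.
# Assumptions (not from the source): "800G" = 800 Gb/s per port, and the
# rack is fully populated with identical nodes.

GPUS_PER_NODE = 4          # four B200 GPUs per node
CPUS_PER_NODE = 2          # two Grace CPUs per node
PORTS_PER_NODE = 4         # four InfiniBand ports per node
PORT_SPEED_GBPS = 800      # assumed meaning of "800G"
MAX_GPUS_PER_RACK = 128    # up to 128 GPUs in a 48U rack


def rack_summary(max_gpus: int = MAX_GPUS_PER_RACK) -> dict:
    """Derive node count and aggregate bandwidth for a full rack."""
    nodes = max_gpus // GPUS_PER_NODE
    node_bw_gbps = PORTS_PER_NODE * PORT_SPEED_GBPS
    return {
        "nodes_per_rack": nodes,
        "grace_cpus_per_rack": nodes * CPUS_PER_NODE,
        "node_bandwidth_gbps": node_bw_gbps,
        "rack_bandwidth_tbps": nodes * node_bw_gbps / 1000,
    }


if __name__ == "__main__":
    for key, value in rack_summary().items():
        print(f"{key}: {value}")
```

Under these assumptions a full rack holds 32 nodes, each with 3.2 Tb/s of InfiniBand bandwidth, for roughly 102 Tb/s across the rack.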

These systems support full-cycle AI development and deployment using NVIDIA AI Enterprise software and NVIDIA Nemotron open AI models.

High-Density U.S.-Made HGX Systems

Alongside the rack-scale GB200 NVL4 offering, Supermicro introduced a TAA-compliant, high-density 2OU NVIDIA HGX B300 8-GPU system that allows up to 144 GPUs per rack. This design targets federal data centers that require top performance, efficient cooling, and easy serviceability.
