Vultr, AMD, and NetApp, all members of the Vultr Cloud Alliance, have developed a new reference architecture focused on data-intensive and AI workloads across hybrid and sovereign cloud environments. The design targets organizations that need to consolidate distributed data, accelerate AI, and enforce data locality and compliance controls without adding operational complexity.

The architecture combines Vultr’s global and sovereign cloud regions, AMD Instinct GPUs with the AMD ROCm open software platform, and NetApp ONTAP data management. The objective is a reproducible, cloud-centric pattern that enterprises can adopt to modernize AI infrastructure while preserving existing on-premises investments.

A Unified Hybrid Cloud Data Platform for AI and Enterprise

At the core of this architecture is a hybrid cloud pattern that aggregates data from multiple on-premises NetApp environments into a single cloud-based NetApp ONTAP instance hosted on Vultr.

On-premises NetApp systems at multiple sites replicate their data into a consolidated NetApp ONTAP environment on Vultr. Once replicated, the data is directly available to Vultr compute and GPU instances. The result is a unified and consistent view of enterprise datasets that simplifies:

- Running analytics and BI workloads on current data

- Training, tuning, and deploying AI and ML models

- Supporting business continuity and disaster recovery workflows
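The fan-in pattern described above (many on-premises sources, one consolidated cloud target) needs a naming plan so that volumes from different sites do not collide once they land in the same ONTAP environment. The sketch below models only that planning step and is not a NetApp API: the `Relationship` class, `build_fan_in_plan`, and the `svm:volume` path convention are hypothetical, and in a real deployment the relationships would be created with ONTAP's own replication tooling (such as SnapMirror).

```python
from dataclasses import dataclass


# Hypothetical model of one replication relationship: an on-premises
# source volume mirrored into the consolidated ONTAP environment on Vultr.
@dataclass(frozen=True)
class Relationship:
    site: str         # on-premises site name (illustrative)
    source_path: str  # "svm:volume" on the on-premises cluster
    dest_path: str    # "svm:volume" on the consolidated cloud cluster


def build_fan_in_plan(sites: dict[str, list[str]], dest_svm: str) -> list[Relationship]:
    """Map every on-premises volume to a unique destination on one cloud SVM.

    Destination names are prefixed with the site name so that identically
    named volumes from different sites stay distinct after consolidation.
    Any remaining collision (e.g. site "a_b" + volume "c" vs. site "a" +
    volume "b_c") is rejected instead of silently overwritten.
    """
    plan: list[Relationship] = []
    seen: set[str] = set()
    for site, volumes in sites.items():
        for vol_path in volumes:
            _, vol = vol_path.split(":", 1)  # drop the source SVM name
            dest = f"{dest_svm}:{site}_{vol}"
            if dest in seen:
                raise ValueError(f"destination collision: {dest}")
            seen.add(dest)
            plan.append(Relationship(site, vol_path, dest))
    return plan


if __name__ == "__main__":
    plan = build_fan_in_plan(
        {"paris": ["svm_par:sales", "svm_par:hr"], "berlin": ["svm_ber:sales"]},
        dest_svm="svm_vultr",
    )
    for r in plan:
        print(r.source_path, "->", r.dest_path)
```

Prefixing by site is one simple way to keep the consolidated view unambiguous; an organization could equally map sites to separate SVMs on the cloud cluster.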

This centralization is designed to be non-disruptive to existing operations. Organizations do not need to re-architect on-premises storage. Instead, they extend their footprint into Vultr using NetApp’s replication and data management capabilities.

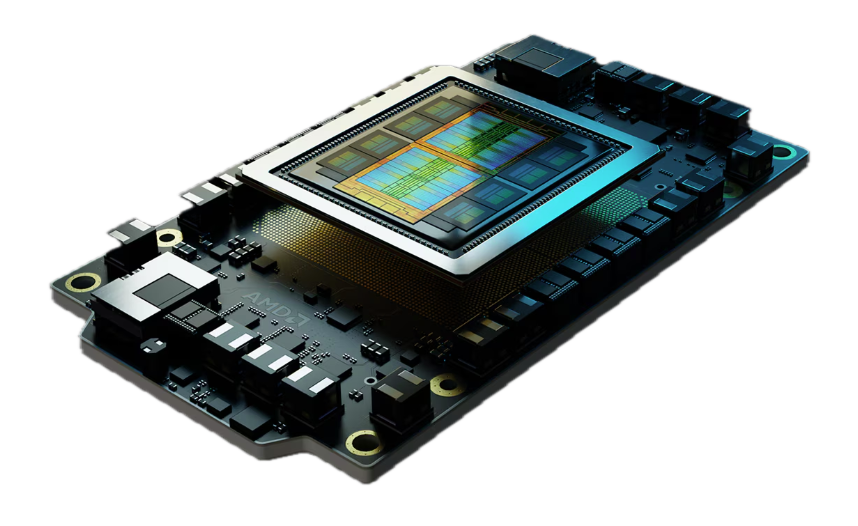

AI and Accelerated Computing with AMD Instinct and ROCm

AI and accelerated workloads in this reference design run on AMD Instinct GPUs, supported by the AMD Enterprise AI Suite and the AMD ROCm software platform.

Together, the AMD Enterprise AI Suite and the ROCm stack provide:

- Optimized libraries for AI and HPC workloads

- Framework integrations for popular model development environments

- Performance-tuned components that accelerate training, tuning, and inference

This software-and-hardware combination is designed to deliver predictable performance and a familiar, open software ecosystem to technical teams, while leveraging Vultr’s elastic compute model.
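A common first step on a new GPU instance is confirming that the framework actually sees the AMD Instinct GPUs through ROCm. The reference design does not name a specific framework; PyTorch is an assumption here. ROCm builds of PyTorch set `torch.version.hip` and expose AMD GPUs through the `torch.cuda` API, which the sketch below relies on. The function name `describe_accelerators` is hypothetical.

```python
import importlib.util


def describe_accelerators() -> dict:
    """Report which PyTorch build is installed and which GPUs it can see."""
    info = {"framework": None, "build": None, "hip_version": None, "devices": []}
    if importlib.util.find_spec("torch") is None:
        return info  # PyTorch is not installed in this environment

    import torch

    info["framework"] = f"torch {torch.__version__}"
    # ROCm builds set torch.version.hip; CUDA builds set torch.version.cuda.
    info["hip_version"] = getattr(torch.version, "hip", None)
    if info["hip_version"]:
        info["build"] = "rocm"
    elif torch.version.cuda:
        info["build"] = "cuda"
    else:
        info["build"] = "cpu"

    # On ROCm builds, AMD GPUs are enumerated through the torch.cuda namespace.
    if torch.cuda.is_available():
        info["devices"] = [
            torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())
        ]
    return info


if __name__ == "__main__":
    print(describe_accelerators())
```

On a correctly configured instance, `build` would read `"rocm"` and `devices` would list the attached Instinct GPUs; an empty device list usually points to a driver or container configuration issue rather than the application code.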

Extensible with AMD AI Blueprints

The architecture can be extended using AMD AI Blueprints. These are validated patterns that define reference approaches for:

- Building end-to-end AI pipelines

- Designing data workflows and feature pipelines

- Managing model lifecycle processes, from experimentation to production

On Vultr infrastructure integrated with NetApp ONTAP, AMD AI Blueprints provide a prescriptive path from proof of concept (POC) to scaled deployment. They help organizations standardize the path from on-premises data to training datasets, through model iteration, to deployed inference services, while maintaining control and visibility over where data resides.

Sovereign Cloud and Regulatory Alignment

Because the architecture can be deployed in Vultr sovereign cloud regions, it can help organizations meet the data residency, privacy, and compliance requirements common in regulated industries.

Enterprises can:

- Keep sensitive data within specific geographic or jurisdictional boundaries

- Leverage NetApp ONTAP policy and governance features to enforce data handling rules

- Use Vultr’s regional isolation to support regulatory or contractual constraints
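In the architecture, residency is enforced by ONTAP policies and Vultr's regional isolation. Teams often add a complementary check in their own deployment tooling so that a workload tagged with a jurisdiction is never scheduled into a region outside it. The sketch below shows that idea under stated assumptions: the region identifiers, the `REGION_JURISDICTION` table, and the `Dataset`, `allowed_regions`, and `check_placement` names are all hypothetical, and real region names and their sovereign status should come from Vultr's own documentation.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical region-to-jurisdiction table (illustrative names only).
REGION_JURISDICTION = {
    "region-eu-sovereign-1": "EU",
    "region-eu-2": "EU",
    "region-us-1": "US",
}


@dataclass(frozen=True)
class Dataset:
    name: str
    jurisdiction: Optional[str]  # None means no residency constraint


def allowed_regions(ds: Dataset, candidates: list[str]) -> list[str]:
    """Filter candidate regions to those that satisfy the dataset's residency tag.

    Unknown regions map to no jurisdiction and are excluded for constrained
    datasets, so the check fails closed rather than open.
    """
    if ds.jurisdiction is None:
        return list(candidates)
    return [r for r in candidates if REGION_JURISDICTION.get(r) == ds.jurisdiction]


def check_placement(ds: Dataset, region: str) -> None:
    """Raise if placing the dataset in the given region would violate residency."""
    if not allowed_regions(ds, [region]):
        raise PermissionError(
            f"{ds.name} ({ds.jurisdiction}) may not be placed in {region}"
        )


if __name__ == "__main__":
    records = Dataset("patient-records", "EU")
    print(allowed_regions(records, ["region-eu-sovereign-1", "region-us-1"]))
    check_placement(records, "region-eu-2")  # passes
```

Failing closed on unknown regions is the safer default here: a newly added region has to be classified explicitly before constrained data can be placed there.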

This combination of sovereign regions and ONTAP-based data controls allows organizations to use cloud-scale GPU and CPU resources while maintaining tight governance over where data resides and how it is accessed.

Partner Architecture Contributions

Each partner contributes a distinct capability within the architecture:

- NetApp ONTAP: Acts as the data fabric across on-premises and cloud. It provides replication, snapshotting, tiering, and governance capabilities that allow data to move predictably between multiple sites and the Vultr cloud, while preserving consistency and control.

- Vultr Cloud Platform: Provides flexible, cost-efficient cloud compute, including GPU instances, delivered across global and sovereign regions. Vultr hosts the centralized ONTAP environment and the AI and analytics workloads that consume that data.

- AMD Instinct GPUs, ROCm, and Enterprise AI Suite: Deliver high-performance acceleration for AI and data-intensive compute workloads. ROCm and the Enterprise AI Suite supply the software stack required to run modern models at scale, while AMD AI Blueprints give teams proven configurations and workflows.

Designed for Performance, Control, and Long-Term Flexibility

The overall design is intended to help enterprises modernize hybrid cloud and AI operations without a wholesale redesign of existing infrastructure. On-premises NetApp arrays remain in place and gain a clear path to cloud-based AI and analytics on Vultr with AMD acceleration.

The reference architecture supports:

- AI and ML workloads spanning training, fine-tuning, and inference

- Analytics pipelines that need a current, consistent view of distributed data

- Enterprise applications that require shared datasets across regions

- Disaster recovery and business continuity workflows that depend on reliable replication and rapid recovery
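For the disaster recovery use case, the practical question is whether each replicated dataset is fresh enough to meet its recovery point objective (RPO). The sketch below assumes the timestamp of the last successful transfer for each relationship has already been collected (for example, from ONTAP's replication status reporting); how those timestamps are gathered, and the `rpo_violations` function itself, are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def rpo_violations(
    last_transfer: dict[str, datetime],
    rpo: timedelta,
    now: Optional[datetime] = None,
) -> list[tuple[str, timedelta]]:
    """Return (relationship, lag) pairs whose lag exceeds the RPO, worst first.

    Lag is the time elapsed since the last successful transfer; if a site were
    lost right now, that is roughly how much data the recovery copy would lack.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    lagging = [(name, now - ts) for name, ts in last_transfer.items() if now - ts > rpo]
    return sorted(lagging, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    last = {
        "paris_sales": now - timedelta(minutes=10),
        "berlin_sales": now - timedelta(hours=2),
    }
    print(rpo_violations(last, rpo=timedelta(hours=1), now=now))
```

Passing `now` explicitly keeps the check deterministic and testable; in a monitoring job it would default to the current UTC time.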

By centralizing data on ONTAP, deploying compute and GPU resources on Vultr, and leveraging AMD’s AI stack for acceleration, the architecture provides a validated, practical model for scaling AI while keeping data mobility, security, and governance predictable and controlled.