The Open Compute Project (OCP) has announced the addition of the Wiwynn ES200 edge server and the NVIDIA HGX-H100 GPU baseboard to its platform.

Wiwynn’s short-depth edge server is designed to tackle the growing demand for computing power at edge sites, specifically for applications like instant AI inference, on-site data analytics, and multi-channel video processing.

The physical specification for NVIDIA’s HGX-H100 GPU baseboard is expected to help OCP’s Open Accelerator Infrastructure (OAI) sub-project leverage HGX and develop cost-effective, high-efficiency, scalable platform and system specifications for AI and HPC applications.

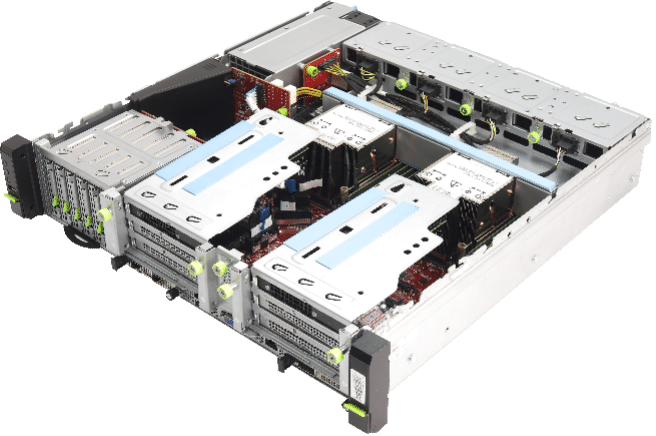

Wiwynn ES200 Edge Server

Wiwynn ES200 is a 2U dual-socket edge server powered by 3rd Gen Intel Xeon Scalable processors. With NUMA balancing, each installed processor can serve a different workload independently, without creating communication bottlenecks between the CPUs.

It also provides additional PCIe Gen4 x16 slots that can hold two double-width add-in cards. This makes the system flexible enough for AI inference acceleration, video transcoding, and data analytics, as well as for deployments that need additional network connectivity. Flexible storage modules (EDSFF E1.S or U.2 SSDs) and two OCP NIC 3.0 cards give the ES200 strong local data processing and high-bandwidth network connectivity.

The ES200 is also NEBS Level 3 compliant and has a compact, short-depth form factor. Wiwynn says the server runs reliably under harsh operating conditions and fits a wide range of edge sites.

Wiwynn ES200 Edge Server Node Specifications

| Node Specification | |
|---|---|
| Processor | 3rd Generation Intel Xeon Scalable processors, TDP 250 W/270 W |
| Processor Sockets | 2 |
| Chipset | Intel C621A series |
| Security | TPM v2.0 (optional) |
| System Bus | Intel UltraPath Interconnect (UPI), 11.2 GT/s |
| Memory | 16 DIMM slots; DDR4 up to 3200 MT/s, up to 64 GB per DIMM; 12 DIMM slots with up to 2 DCPMMs (Intel Barlow Pass) |
| Storage | • One onboard 2280/22110 M.2 SSD module slot<br>• Six U.2 (15/7 mm) or eight EDSFF E1.S (5.9/9.5 mm) |
| Expansion Slots | Up to six PCIe Gen4 slots, in one of these configurations:<br>• Two PCIe x16 FHFL dual-width + one PCIe x8 FHHL<br>• One PCIe x16 FHHL + two PCIe x8 FHHL<br>• Six PCIe x8 FHHL (optional, on demand)<br>Two onboard OCP NIC 3.0 x16 slots |
| Management LAN | • One dedicated GbE BMC port |
| I/O Ports | • Power button with LED<br>• UID button with LED<br>• Reset (RST) button<br>• Three USB ports<br>• BMC management port (front)<br>• VGA port (D-sub) |

| Chassis Specification | |
|---|---|
| Dimensions | 2U rack; 86 (H) x 440 (W) x 450 (D) mm |
| PSU | Hot-pluggable, redundant (1+1) 1600 W CRPS |
| Fan | Four 8038 dual-rotor fans, redundant (7+1) |
| Weight | 14.47 kg |
| Environment | • Operating ambient temperature: -5 to +55 °C<br>• Operating and storage relative humidity: 10% to 90% (non-condensing)<br>• Storage temperature: -40 to +70 °C |

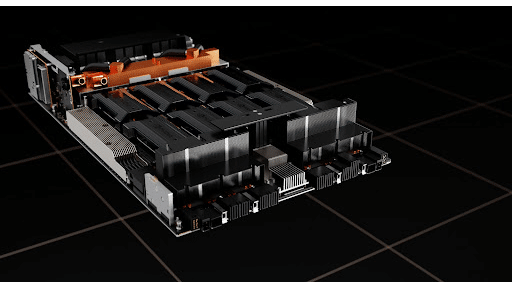

NVIDIA HGX-H100 Baseboard

The NVIDIA HGX-H100 is a baseboard that hosts eight of NVIDIA’s latest Hopper GPU modules. The HGX baseboard physical specification defines items such as the physical dimensions, host interfaces, and management and debug interfaces. NVIDIA’s contribution package includes the baseboard physical specification, the baseboard input/output pin list and pin map, and 2D/3D drawings.

The following list from OCP describes how NVIDIA’s HGX contribution aligns with OCP’s four tenets: efficiency, scalability, openness, and impact.

- Efficiency – This is the third-generation HGX baseboard form factor, and each generation has delivered roughly 3x to 5x higher AI training throughput at similar active power. As a result, the HGX baseboard is now the de facto standard, with most global hyperscalers designing around this form factor for AI training and inference.

- Scalability – Hundreds of thousands of HGX systems are in production worldwide, so the systems and enclosures for this form factor will be stable and built at scale. The HGX specification will include physical dimensions and management interfaces for monitoring, debugging, and other services needed at scale.

- Openness – HGX-based systems are the most common AI training platform globally, and many OEMs/ODMs have built HGX-based AI systems. With the contribution of this HGX physical specification, the OAI group will converge UBB 2.0 with the HGX spec as much as possible. OEMs/ODMs could then reuse the same system design with different accelerators on UBB/HGX-like baseboards that share the same baseboard dimensions, host interfaces, management interfaces, power delivery, and so on.

- Impact – NVIDIA HGX platforms have become the de facto global standard for AI training. Opening the HGX baseboard form factor allows other AI accelerator vendors to leverage the HGX ecosystem of systems and enclosures. This should help those vendors reuse existing infrastructure and bring their solutions to market sooner, further accelerating innovation in AI and HPC.
