Windows is great for a lot of things; there’s a reason it’s the number one operating system in the world. That said, it’s not perfect, especially when it comes to embracing new storage standards. That creates a tremendous opportunity for enterprising companies to develop solutions for Windows shops. As NVMe SSDs continue to dominate the enterprise and become the standard for server SSD storage, there’s increasing demand to share that storage. Sadly, in Windows that has been a problem, at least until recently. Earlier this year, StarWind commercialized an NVMe-oF Initiator for Windows.

Before commercializing it, StarWind offered its NVMe-oF Initiator for Windows as a free tool for development and PoC use cases. StarWind still offers the free version for those who want to experiment, but the GA shipping version is what we’re looking at today. In fact, if you’re evaluating any NVMe-oF initiator for Windows, you’re probably consuming StarWind IP; the company OEMs the solution to a variety of partners that need to build out their offerings.

NVMe-oF Initiator for Windows Configuration

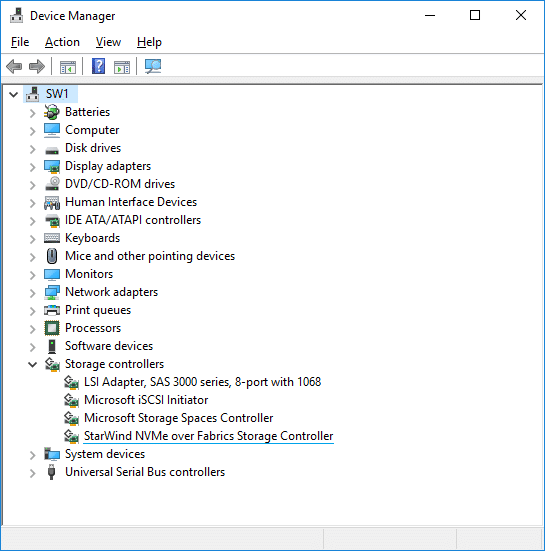

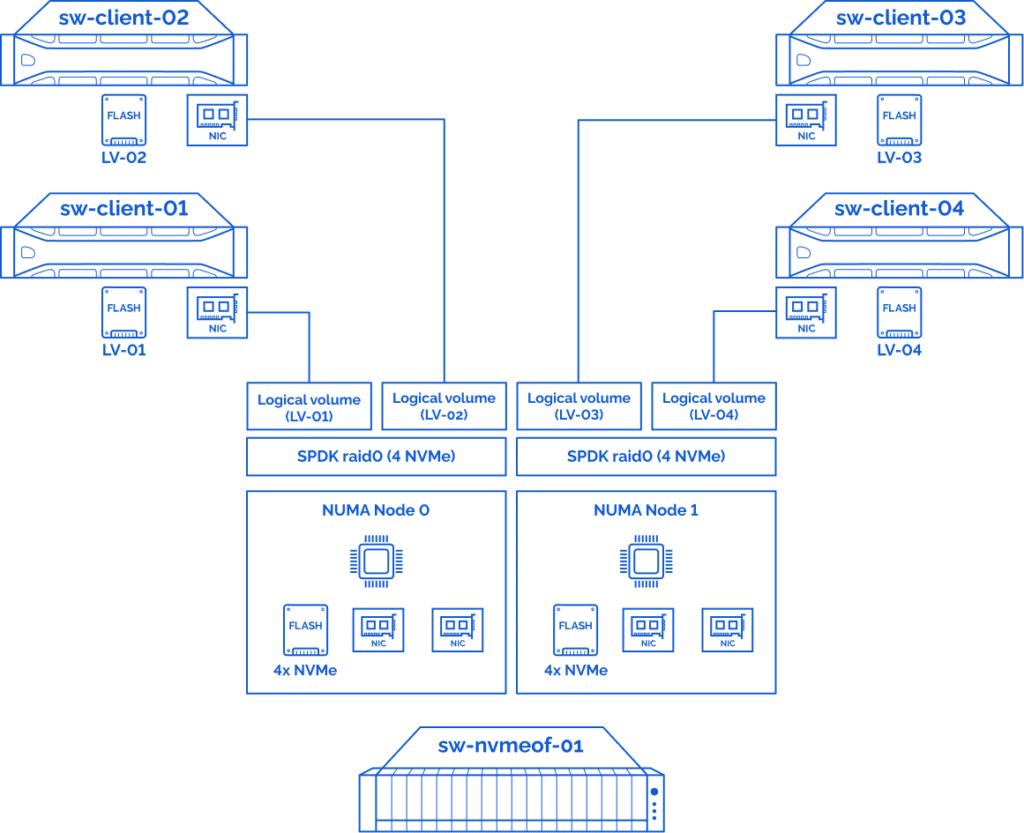

The StarWind NVMe-oF Initiator for Windows is a simple install on any Windows host. There’s no need for specialized hardware or additional Windows componentry. The software is Windows-certified (Server 2019 and Windows 10) and tested for compatibility with the major NVMe-oF storage vendors. In our scenario, we have a very simple configuration of one storage host and four clients.

Each of the four clients is a Dell PowerEdge R740 server running two Intel Xeon Gold 6130 CPUs at 2.1GHz with 256GB of DRAM. For connectivity, we’re using NVIDIA ConnectX-5 EN 100GbE NICs (MCX516A-CCAT). The servers run Windows Server 2019 Standard Edition with the StarWind NVMe-oF Initiator for Windows version 1.9.0.455. For the Linux testing, we used CentOS 8.4.2105 (kernel 4.18.0-305.10.2) with nvme-cli 1.12. The clients are direct-attached to the storage host.

The storage host is an Intel OEM server (M50CYP2SB2U) equipped with two Intel Xeon Platinum 8380 CPUs at 2.3GHz and 512GB of DRAM. We again used NVIDIA ConnectX-5 EN 100GbE NICs (MCX516A-CDAT), this time with four in the host. Here we’re running CentOS 8.4.2105 (kernel 5.13.7-1.el8.elrepo) and SPDK v21.07.

Within the host, we’re using eight Intel P5510 Gen4 NVMe SSDs. The SSDs are split into two batches of four for NUMA alignment with the CPUs. They’re configured in RAID0 for maximum performance.
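RAID0 stripes consecutive chunks of the logical volume across every member drive, which is why it maximizes throughput (at the cost of any redundancy). The minimal sketch below shows the address mapping for eight members; the 128 KiB strip size is an assumption for illustration, not a value from our configuration, and the sketch ignores the two-group NUMA split.

```python
# Minimal RAID0 (striping) address map, for illustration only.
STRIPE_SIZE = 128 * 1024  # bytes per strip on each drive (assumed, not from our setup)
NUM_DRIVES = 8            # eight P5510 SSDs, as in the storage host


def raid0_map(offset: int, stripe_size: int = STRIPE_SIZE,
              drives: int = NUM_DRIVES) -> tuple[int, int]:
    """Map a logical byte offset to (drive index, byte offset on that drive)."""
    strip_index, within = divmod(offset, stripe_size)
    drive = strip_index % drives   # strips rotate round-robin across drives
    row = strip_index // drives    # full passes across all drives so far
    return drive, row * stripe_size + within


if __name__ == "__main__":
    # A 1 MiB region touches every drive once with 128 KiB strips.
    touched = {raid0_map(o)[0] for o in range(0, 1024 * 1024, STRIPE_SIZE)}
    print(sorted(touched))
```

Because each large request fans out across all members, a single RAID0 volume can draw on the combined bandwidth of all eight drives.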

NVMe-oF Initiator for Windows Performance

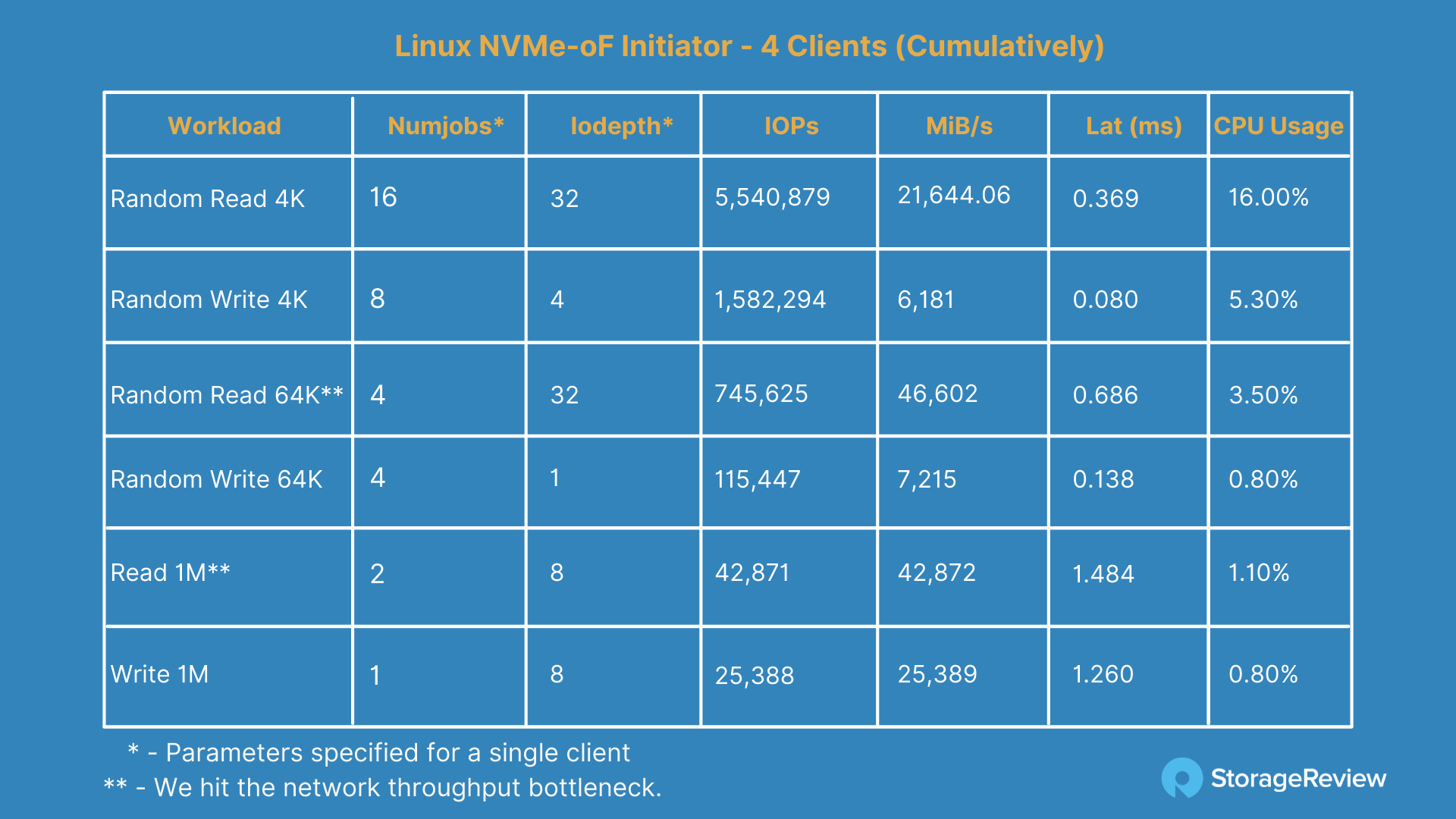

For this testing, we ran the following benchmarks via FIO leveraging both Linux and Windows initiators.

- Random Read 4K – 16 Threads, Queue Depth 32

- Random Write 4K – 8 Threads, Queue Depth 4

- Random Read 64K – 4 Threads, Queue Depth 32

- Random Write 64K – 4 Threads, Queue Depth 1

- Sequential Read 1M – 2 Threads, Queue Depth 8

- Sequential Write 1M – 1 Thread, Queue Depth 8
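These six profiles map directly onto fio options. The sketch below is a hypothetical helper, not our actual harness: it builds one fio command line per profile. The device paths, the `libaio`/`windowsaio` engines, and `--direct=1` are assumptions; the thread counts, queue depths, and one-hour runtime come from the test description.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    rw: str       # fio --rw value
    bs: str       # fio --bs value
    numjobs: int  # thread count
    iodepth: int  # queue depth per thread


WORKLOADS = [
    Workload("4k-randread", "randread", "4k", 16, 32),
    Workload("4k-randwrite", "randwrite", "4k", 8, 4),
    Workload("64k-randread", "randread", "64k", 4, 32),
    Workload("64k-randwrite", "randwrite", "64k", 4, 1),
    Workload("1m-seqread", "read", "1m", 2, 8),
    Workload("1m-seqwrite", "write", "1m", 1, 8),
]


def fio_args(w: Workload, filename: str, windows: bool,
             runtime_s: int = 3600) -> list[str]:
    """Build an fio argument list for one workload (device path is hypothetical)."""
    return [
        "fio",
        f"--name={w.name}",
        f"--filename={filename}",
        f"--ioengine={'windowsaio' if windows else 'libaio'}",  # assumed engines
        "--direct=1",            # bypass the OS page cache
        "--thread",              # run jobs as threads rather than processes
        f"--rw={w.rw}",
        f"--bs={w.bs}",
        f"--numjobs={w.numjobs}",
        f"--iodepth={w.iodepth}",
        "--time_based",          # keep running for the full runtime
        f"--runtime={runtime_s}",
        "--group_reporting",     # aggregate results across all jobs
    ]


if __name__ == "__main__":
    for w in WORKLOADS:
        print(" ".join(fio_args(w, "/dev/nvme0n1", windows=False)))
```

On a Windows client the same helper would be called with `windows=True` and a physical-drive path such as `\\.\PhysicalDrive1` (again, a placeholder).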

Each test ran for 3600 seconds (1 hour). Before benchmarking write operations, we first warmed up the storage for 3600 seconds (1 hour). Every test was performed three times, and the average was used as the final result.
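The procedure above (warm up before write tests, run each test three times, report the mean) can be sketched as a small driver. `run_test` is a hypothetical hook that would launch one fio pass and return the metric of interest; it is not part of any real tool.

```python
from statistics import mean
from typing import Callable


def run_protocol(
    run_test: Callable[[str, int], float],
    workload: str,
    is_write: bool,
    duration_s: int = 3600,
    passes: int = 3,
) -> float:
    """Warm up before write tests, run `passes` timed runs, return their mean.

    run_test(workload, duration_s) is a hypothetical hook that runs one pass
    and returns a single metric such as IOPS.
    """
    if is_write:
        run_test("warmup", duration_s)  # preconditioning pass; result discarded
    results = [run_test(workload, duration_s) for _ in range(passes)]
    return mean(results)
```

Passing the runner in as a function keeps the protocol logic testable without touching real hardware.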

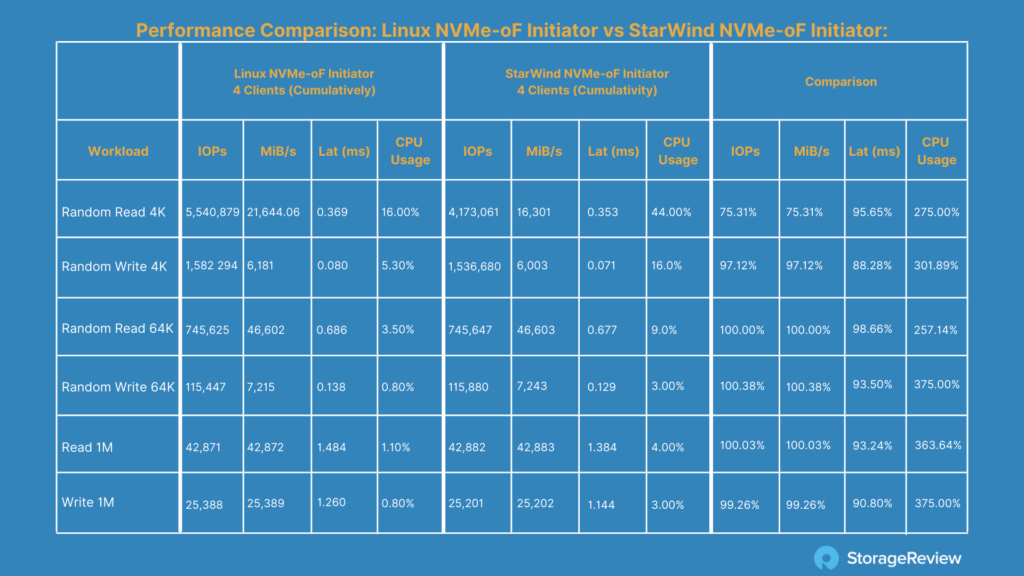

In our first group, looking at the Linux NVMe-oF initiator across four clients, we measured 5.54M IOPS (21.6GB/s) at 0.369ms latency in 4K random read. 4K random write measured 1.58M IOPS (6.2GB/s) at 0.08ms latency.
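Little’s Law offers a quick sanity check on these figures: average latency equals the number of I/Os in flight divided by throughput. The sketch below assumes each of the four clients keeps all of its queues full (threads x queue depth outstanding per client). Under that assumption, 4K random read implies about 0.370ms and 4K random write about 0.081ms, in line with the measured Linux results.

```python
def littles_law_latency_ms(clients: int, threads: int, qd: int, iops: float) -> float:
    """Average latency implied by Little's Law: in-flight I/Os / throughput."""
    outstanding = clients * threads * qd  # assumes every queue stays full
    return outstanding / iops * 1000.0    # seconds -> milliseconds


if __name__ == "__main__":
    print(round(littles_law_latency_ms(4, 16, 32, 5.54e6), 3))  # 4K random read
    print(round(littles_law_latency_ms(4, 8, 4, 1.58e6), 3))    # 4K random write
```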

Moving to large-block transfers, we measured 64K random performance and, finally, a 1M sequential test focused on bandwidth across the fabric. In 64K random read we measured 46.6GB/s at 0.69ms of latency, and 7.2GB/s at 0.14ms of latency in write. 1M sequential came in at 42.9GB/s read at 1.48ms of latency and 25.4GB/s write at 1.26ms of latency.

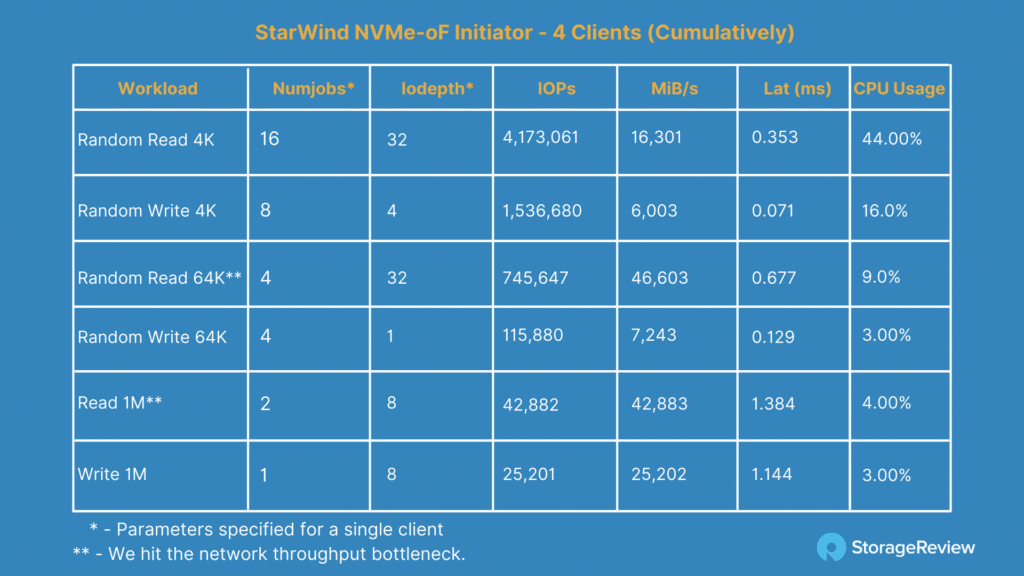

Next, we switched to Windows, where we used the StarWind NVMe-oF initiator on the same four clients. Here we measured 4.17M IOPS (16.3GB/s) in 4K random read at 0.35ms of latency. 4K random write came in at 1.54M IOPS (6GB/s) at 0.07ms of latency.
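Bandwidth is simply IOPS multiplied by transfer size. The sketch below does that conversion in binary units (KiB, MiB); it appears that the GB/s figures in these results correspond to thousands of MiB/s, since 5.54M IOPS x 4 KiB is about 21,641 MiB/s and 4.17M IOPS x 4 KiB is about 16,289 MiB/s, matching the reported 21.6GB/s and 16.3GB/s to within rounding. That unit interpretation is our assumption.

```python
KIB_PER_MIB = 1024


def iops_to_mib_per_s(iops: float, block_kib: int) -> float:
    """Bandwidth = IOPS x transfer size, expressed in MiB/s."""
    return iops * block_kib / KIB_PER_MIB


if __name__ == "__main__":
    for label, iops in [("Linux 4K read", 5.54e6), ("Windows 4K read", 4.17e6),
                        ("Linux 4K write", 1.58e6), ("Windows 4K write", 1.54e6)]:
        print(f"{label}: {iops_to_mib_per_s(iops, 4):,.0f} MiB/s")
```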

We then moved to a larger 64K transfer size with the same random access profile. Here we measured 46.6GB/s read at 0.68ms of latency and 7.2GB/s write at 0.13ms of latency. Switching to our last workload profile of a 1M transfer size with a sequential access pattern, we measured 42.9GB/s read at 1.38ms latency and 25.2GB/s write at 1.14ms latency.

Comparing the figures head to head, Windows and Linux performance came in very close to one another, with the exception of 4K random read, where Windows trailed by roughly 25% (4.17M vs. 5.54M IOPS). In all other tests, the performance gap was less than 3%. The main difference comes down to the CPU overhead added by the Windows storage stack: CPU utilization was 2.7 to 3.7x higher on Windows, with higher I/O rates driving the largest increases.
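The per-test gaps can be computed directly from the Linux and Windows results reported above. This short sketch expresses how far Windows trails Linux as a percentage of the Linux figure.

```python
# (Linux, Windows) results from the runs above: IOPS for 4K, GB/s otherwise.
RESULTS = {
    "4K random read (IOPS)": (5.54e6, 4.17e6),
    "4K random write (IOPS)": (1.58e6, 1.54e6),
    "64K random read (GB/s)": (46.6, 46.6),
    "64K random write (GB/s)": (7.2, 7.2),
    "1M sequential read (GB/s)": (42.9, 42.9),
    "1M sequential write (GB/s)": (25.4, 25.2),
}


def gap_pct(linux: float, windows: float) -> float:
    """How far Windows trails Linux, as a percentage of the Linux result."""
    return (linux - windows) / linux * 100.0


if __name__ == "__main__":
    for test, (lin, win) in RESULTS.items():
        print(f"{test}: {gap_pct(lin, win):.1f}%")
```

Only 4K random read (about 24.7%) exceeds the 3% threshold; the next-largest gap is 4K random write at about 2.5%.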

Moving from 16% CPU utilization in Linux to 44% in Windows is a pretty large jump, but going from 3.5% to 9% wouldn’t be felt to the same degree. For applications that must run on Windows, or for IT shops that are more Windows-focused in general, StarWind’s main goal was to bring NVMe-oF capabilities and performance to the platform, and it clearly achieved that.
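The two CPU examples above show why relative and absolute overhead feel different. Both cases are a similar multiple, but the first consumes 28 more percentage points of CPU while the second costs only 5.5. A minimal sketch of that arithmetic:

```python
def cpu_overhead(linux_pct: float, windows_pct: float) -> tuple[float, float]:
    """Return (Windows/Linux ratio, extra percentage points used on Windows)."""
    return windows_pct / linux_pct, windows_pct - linux_pct


if __name__ == "__main__":
    for lin, win in [(16.0, 44.0), (3.5, 9.0)]:
        ratio, extra = cpu_overhead(lin, win)
        print(f"{lin}% -> {win}%: {ratio:.2f}x, +{extra:.1f} points")
```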

Conclusion

The goal of this analysis is not to determine the best or fastest way to roll your own NVMe-oF solution. In most deployments, the storage follows the application rather than the other way around. That said, there are a number of reasons why an organization may want to use Windows: specific applications, existing infrastructure, cost, or any number of other factors that make Windows the preferred platform. At least now, with StarWind’s NVMe-oF Initiator for Windows, there’s an option for sharing NVMe SSDs and getting them as close to application systems as possible.

Setting the client OS aside for a moment, the main limitation in our testing comes down to the network links between the systems. Using 100GbE NICs, we saturated the network and topped out at 46.6GB/s in both the Linux and Windows environments. Even the peak 4K random read test in Windows pushed 16.3GB/s, which is more than five 25GbE links’ worth of bandwidth for random I/O. Networking plays an especially important role with NVMe-oF, since NVMe performance, any way you slice it, can absorb a lot of traffic.
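Converting a storage throughput figure into a link count is a quick piece of arithmetic. The sketch below assumes GB/s means 10^9 bytes per second and ignores Ethernet and transport overhead, so real deployments would need some extra headroom. By this arithmetic, 16.3GB/s is about 130Gb/s, which needs six 25GbE links, and 46.6GB/s needs four 100GbE links.

```python
import math


def links_needed(gb_per_s: float, link_gbit: float) -> int:
    """Whole links needed to carry a payload rate, ignoring protocol overhead."""
    gbit_per_s = gb_per_s * 8  # decimal GB/s -> Gb/s (assumed units)
    return math.ceil(gbit_per_s / link_gbit)


if __name__ == "__main__":
    print(links_needed(16.3, 25))   # Windows 4K random read peak over 25GbE
    print(links_needed(46.6, 100))  # large-block peak over 100GbE
```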

But at the end of the day, our goal was to evaluate how well the StarWind initiator works. It works really darn well. Considering the alternative is “No NVMe-oF for you!” in Windows, we’re happy to have any options. Yeah, there’s a CPU hit for the privilege, but even though the percentage deltas from Linux to Windows look scary, outside of 4K random reads the perceived impact is minimal. If you’re not sure whether this is the right fit, StarWind lets you try it for free. It’s simple enough to install that there’s every reason to give it a whirl and see just how well NVMe-oF can work for your applications in Windows.
