The new Ampere-based GPUs from NVIDIA are here, and if you’re lucky, you might actually get to buy one. As of this writing in February of 2021, stock of new GPUs is still extremely scarce and isn’t expected to return to normal until at least Q3 of this year. But are you really missing out on that much if you can’t get your hands on a new NVIDIA GeForce RTX 3090 for your workstation? There are, of course, a lot of factors that determine whether you need to upgrade.

Today we’ll take a look at a range of use-cases to shed some light on whether the wait will be worth it. We’ll be looking at creative professional use-cases, things like Blender, DaVinci Resolve, and LuxMark, but we’ll also look at a machine learning benchmark based on the open-source TensorFlow library in Python, and a bit of gaming for good measure. We’ll be comparing an NVIDIA GeForce RTX 3090 Founders Edition to the NVIDIA Quadro RTX 8000, the (former) king of NVIDIA’s workstation line. A more apt comparison would be to the Quadro RTX 6000, as both cards have 24GB of VRAM, but the results would be nearly identical: the actual compute capabilities of the 8000 and 6000 are the same, and none of these benchmarks used more than 24GB of VRAM. We also have a couple of OEM workstations for comparison, including a Lenovo P920 we previously reviewed with dual RTX 8000s and a new Lenovo P620 packed with AMD’s latest Threadripper PRO.

Why are we comparing a workstation Quadro card with a consumer GeForce card? Because never before has NVIDIA put out a consumer card this powerful, and the value proposition of spending a third of the money versus the Quadro line has become very enticing. That is particularly true because, as of this writing, there is no official word on a true successor to the TITAN RTX, the card that has traditionally filled the niche for work-and-play GPUs. It’s also impossible to ignore the state of the world right now: work-from-home solutions are a top priority for a lot of people, and having one extremely powerful GPU for CAD by day and gaming by night sounds better than ever. We know, we can hear you through the screen about the differences for a “true” workstation card! We’ll get there, we promise; keep reading.

The main test system and GPU specifications are below, including driver and BIOS versions.

| Component | Specification |
| --- | --- |
| OS | Windows 10 Professional (ver 20H2, October 2020) |
| CPU | AMD Ryzen 9 3900X |
| Memory | 4x8GB (32GB) G.Skill TridentZ Neo 3600MHz CL16 |
| Test Drive | 2TB Samsung 970 Pro |
| Motherboard | ASRock X570 Taichi (BIOS v4.00, PCIe Gen4) |
| GPU 1 | NVIDIA RTX 3090 Founders Edition (Studio Driver 461.40) |
| GPU 2 | NVIDIA Quadro RTX 8000 (Production Branch Driver R460 U3, 461.40) |

| Specification | Quadro RTX 8000 | RTX 3090 FE |
| --- | --- | --- |
| Architecture | Turing (12nm) | Ampere (8nm) |
| CUDA Cores | 4,608 | 10,496 |
| Tensor Cores | 576 (2nd Gen) | 328 (3rd Gen) |
| RT Cores | 72 (1st Gen) | 82 (2nd Gen) |
| GPU Memory | 48GB GDDR6 with ECC | 24GB GDDR6X |
| FP32 Performance | 16.3 TFLOPS | 35.6 TFLOPS |
| Power Consumption | 295W | 350W |
| PCIe Interface | PCIe 3.0 x16 | PCIe 4.0 x16 |
| Cooler | Blower-style | Flow-through |
| NVLink Multi-GPU Support | Yes | Yes |
| SR-IOV Support | Yes | No |

GeForce RTX 3090 vs Quadro RTX 8000 Benchmarks

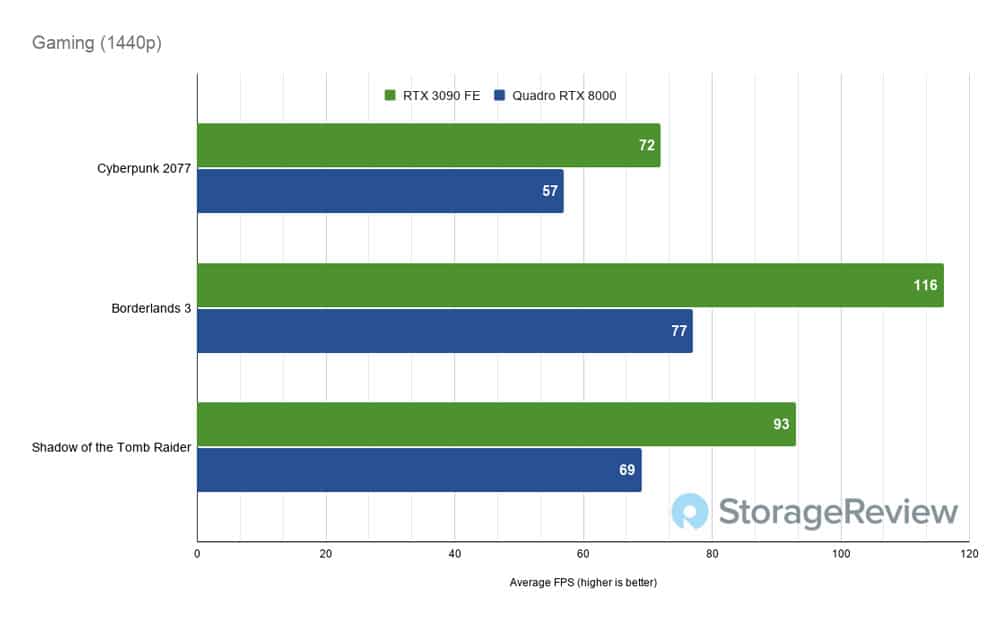

Let’s start with gaming. This may seem like a weird thing to include in an article about workstation graphics, but with so many people working from home these days, it’s not unreasonable to expect a lot of professionals to finish their work and get to gaming on the same machine. We tested Cyberpunk 2077, Shadow of the Tomb Raider, and Borderlands 3. Borderlands and Tomb Raider have built-in benchmarking tools, and while Cyberpunk doesn’t, we felt we were able to record a good amount of data between the two cards to give an average. All tests were done at 1440p; Borderlands was set to all max settings except motion blur; Tomb Raider was set to all max, RTX Ultra and no DLSS; and Cyberpunk 2077 was set to the RTX Medium Preset, with DLSS set to Quality. Esports titles like League of Legends and CS:GO will easily maintain over 250 FPS with either of these cards so they weren’t tested thoroughly. Anecdotally, we saw well over 150 FPS consistently with both cards in Call of Duty: Warzone but didn’t test it thoroughly enough to consider it a true benchmark to include on this chart.

To absolutely no one’s surprise, the RTX 3090 is the best gaming GPU on the market right now, and while the AMD Radeon RX 6900 XT will go blow for blow with it in certain titles, when it comes to ray-tracing, the 3090 has no competition. Cyberpunk 2077, love it or hate it, is the most demanding title available right now, and in all of our testing the RTX 3090 maintained at least 65 FPS, even in very crowded scenes. Most of the time the FPS was in the high 70s and low 80s with consistently low frame times. It’s safe to say that anything less demanding can easily be run at maximum settings and maintain high framerates. Also keep in mind that these tests were done in February of 2021, before NVIDIA released Resizable BAR support for GeForce cards. This PCIe feature, marketed as Smart Access Memory on AMD’s new cards, will allow CPUs direct access to the entire VRAM at once and improve performance in gaming. Early results show anywhere from a 2% to 5% gain in FPS, depending on the game. Resizable BAR will likely not increase performance in compute tasks and, as such, will likely not come to the Quadro line any time soon.

The more interesting result here is how competent a gaming card the Quadro RTX 8000 is. While it doesn’t seem like it should be a surprise given its raw power, its design and drivers haven’t been optimized for gaming at all, yet in all but Cyberpunk 2077, it maintains well over 60 FPS. Cyberpunk was choppy at best, but dialing back the ray-tracing and setting DLSS to Performance improved the results greatly, maintaining over 60 FPS in all but the most demanding scenes. It’s worth noting that we did run into a lot of ray-tracing related bugs when running Cyberpunk on the Quadro, particularly an issue where reflections would disappear entirely and the scenes would turn dark. This didn’t happen on the RTX 3090, so we’ll chalk it up to an issue related to the Quadro driver and Cyberpunk’s notorious bugginess.
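For a game without a built-in benchmark, averages like the Cyberpunk 2077 figures have to be derived from recorded frame data. The following is a minimal sketch of one way to do that, assuming a capture tool has logged per-frame render times in milliseconds. It is not necessarily how the numbers in this article were produced. The function name, the sample data, and the "1% low" definition (average FPS over the slowest 1% of frames) are assumptions chosen for illustration.

```python
from statistics import mean


def summarize_frame_times(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of per-frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS is total frames over total time, not the mean of per-frame FPS,
    # so long frames are weighted by how much time they actually took.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # "1% low" (one common convention): FPS over the slowest 1% of frames,
    # always using at least one frame.
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    low_fps = 1000.0 / mean(slowest[:count])
    return avg_fps, low_fps


# Hypothetical sample: 99 frames at 12.5 ms (80 FPS) and one 25 ms stutter.
sample = [12.5] * 99 + [25.0]
avg_fps, low_fps = summarize_frame_times(sample)
print(f"{avg_fps:.1f} FPS average, {low_fps:.1f} FPS 1% low")
```

Reporting a low-percentile figure alongside the average is useful because a single stutter barely moves the average but is very noticeable in play.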

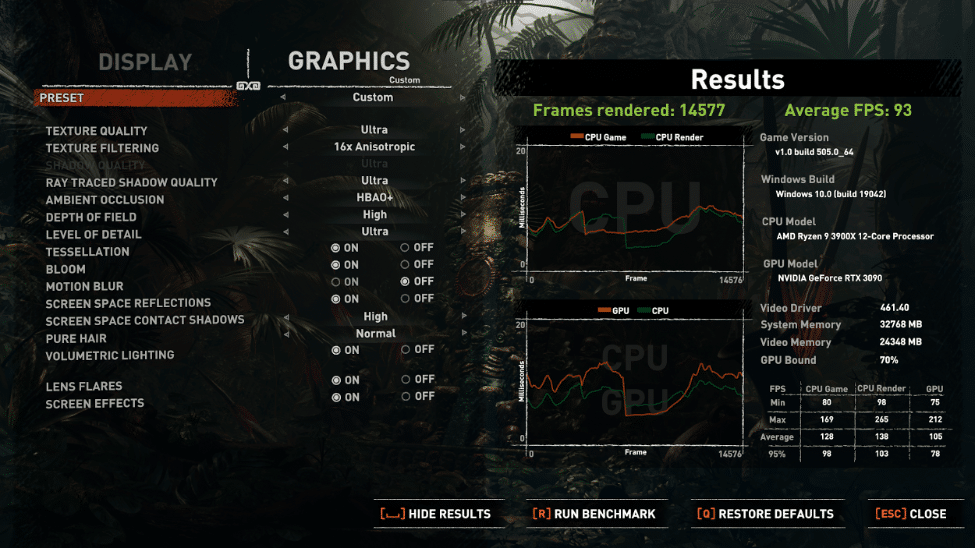

Shadow of the Tomb Raider on the GeForce RTX 3090, 1440p with RTX on and DLSS off.

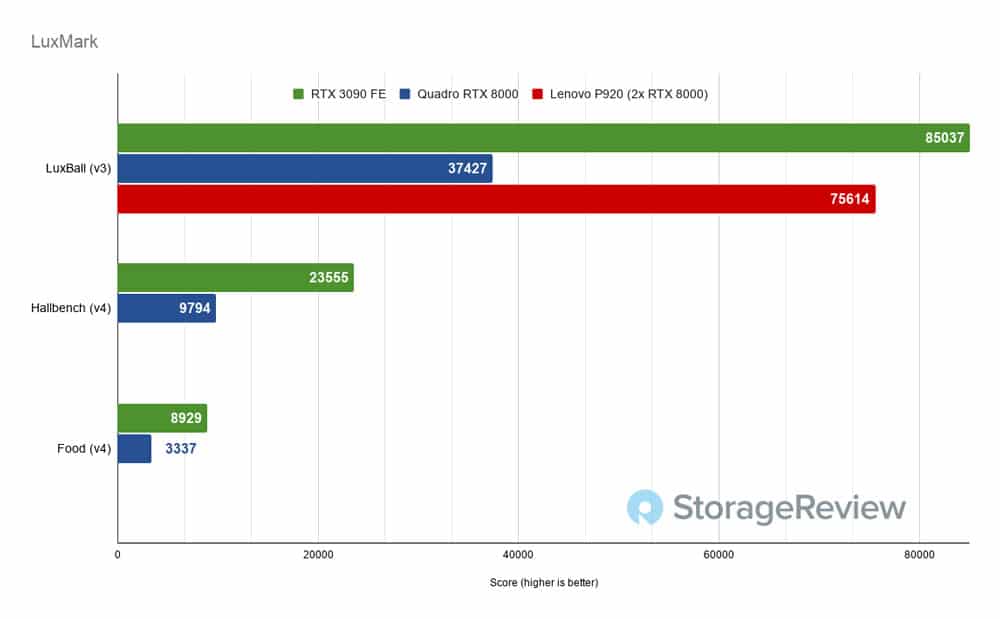

Next up is the good stuff: the workstation results you’re expecting to see. This batch of benchmarks will be looking at performance in 3D modeling, lighting, and video work. We’ll begin with LuxMark, an OpenCL GPU benchmarking utility. We’ve used the newest version, v4alpha0, as well as the older v3 with the classic LuxBall.

To say the RTX 3090 has impressive performance would be an understatement. In applications that are heavily GPU-bound, the new Ampere architecture really flexes its muscles, beating out even the Lenovo P920 and its dual RTX 8000s. In LuxMark v3, the performance was over double, and the v4 benchmarks were approaching 150% performance. Expect to see a lot more charts that look like this, but (spoiler alert) not everything is as clear-cut as it seems.

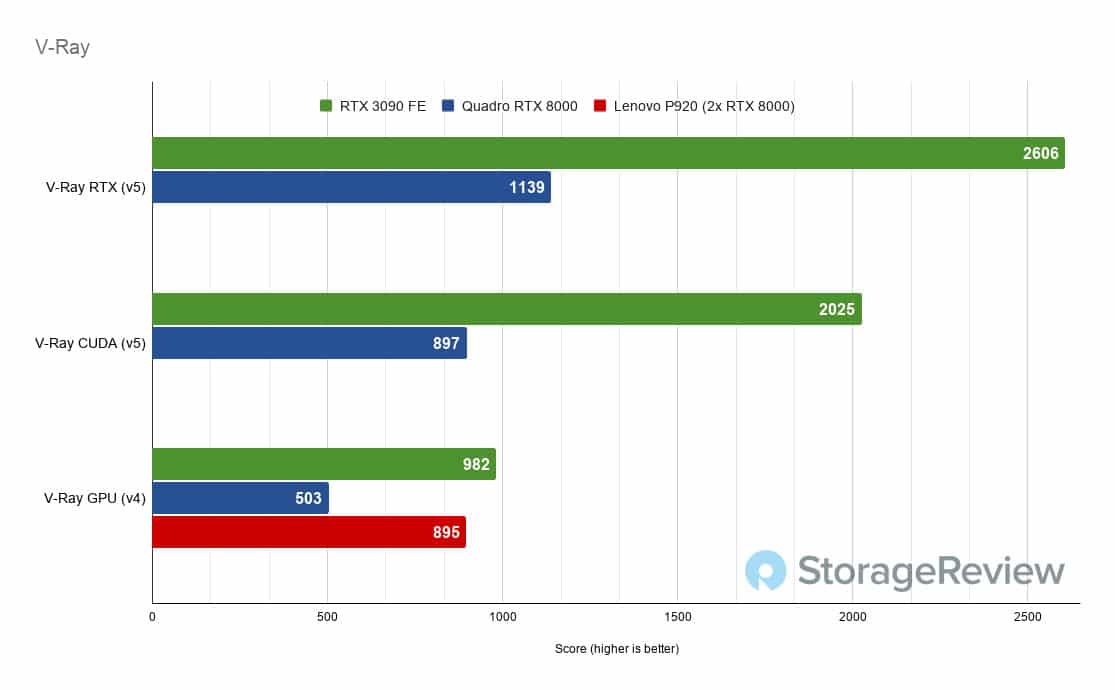

Next up, let’s take a look at V-Ray from Chaos Group. This benchmark is for the V-Ray 3D rendering and simulation toolset, which is available as a plugin for a wide range of 3D modeling applications, such as Cinema 4D, Maya, Rhino, and Unreal, among others. This benchmark focuses on CUDA and RTX performance specifically. We used the newest version of the V-Ray benchmark (v5) as well as an older one (v4), just for comparison.

The story here is very similar to LuxMark, with the RTX 3090 vastly outperforming the RTX 8000 and even eking out a lead over the Lenovo P920 and its dual GPUs. This benchmark specifically lets us see the massive improvements NVIDIA has made to its second-generation ray-tracing cores; the CUDA performance is about doubled, while the RTX performance is nearly 150%.

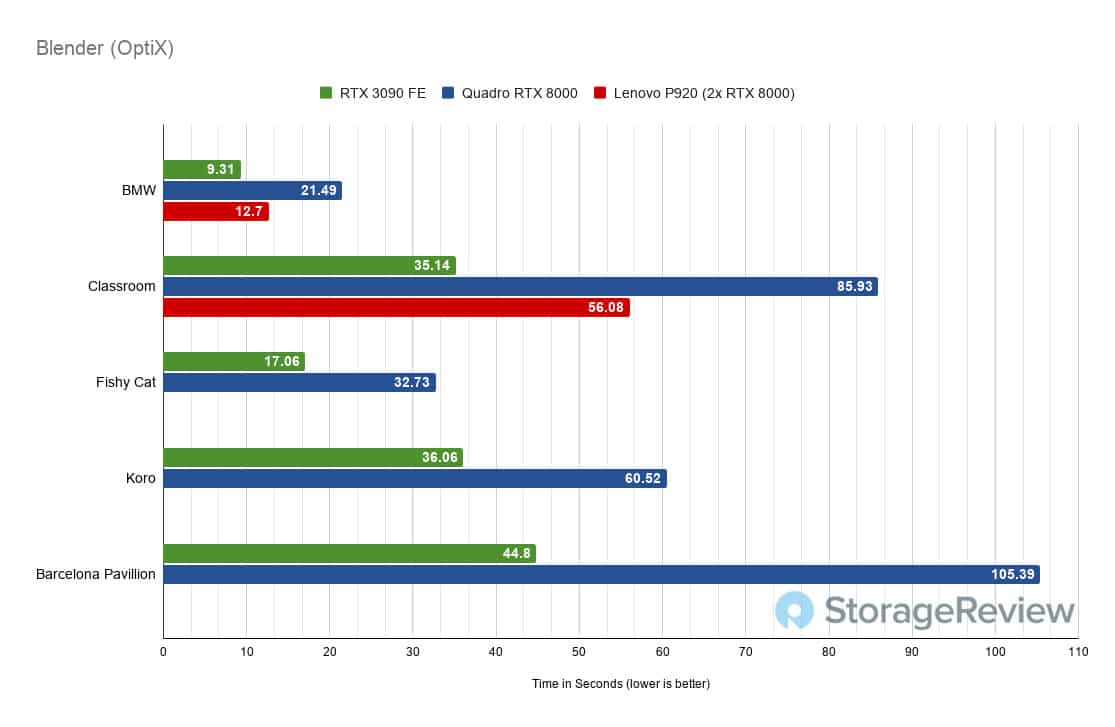

Next up is the ubiquitous Blender, an open-source 3D modeling application. This benchmark was run using the Blender Benchmark utility. NVIDIA OptiX was the chosen render method, as opposed to CUDA, as all these systems can utilize RTX.

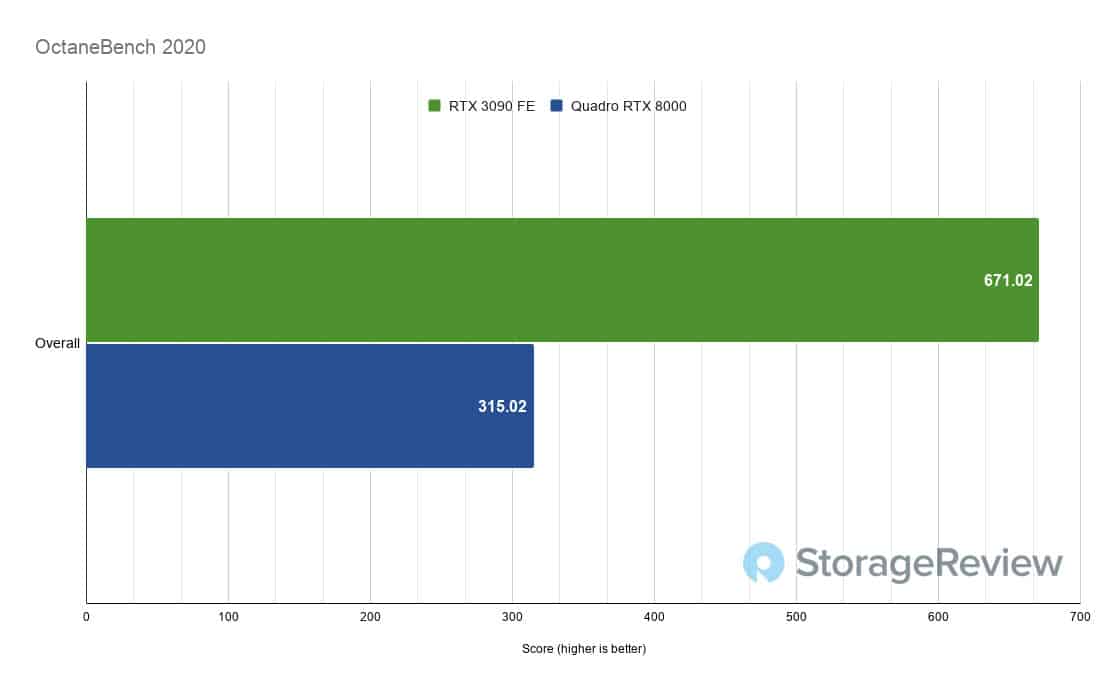

The story is much the same once again, with the next-gen RTX cores making quick work of the Blender renders, even burning through the BMW render in under 10 seconds. This story continues, but don’t worry, it’s going to get very interesting soon. Here we look at OctaneBench, a benchmarking utility for OctaneRender, another 3D renderer with RTX support, similar to V-Ray.

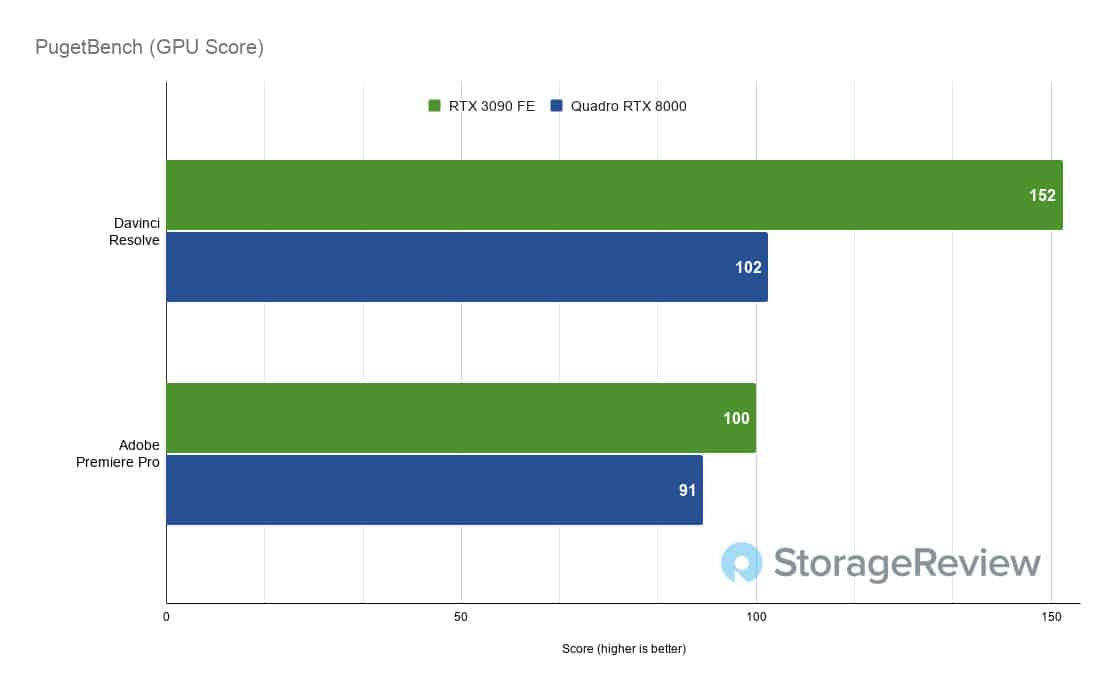

Yeah. Next, we’ll take a look at video editing applications, specifically DaVinci Resolve Studio 16.2.8 and Adobe Premiere Pro 2020. We’ll be using PugetBench for Adobe CC and PugetBench for Resolve, both developed by Puget Systems, a PC manufacturer that makes professional workstations for specific applications. This chart specifically looks at the GPU score of the overall benchmark, which measures performance in GPU-accelerated effects.

Finally, we have some use cases where the value proposition drops a bit. Applications like Premiere Pro and DaVinci Resolve vary wildly in their performance needs depending on the project and effects, and they’re often CPU bottlenecked. There is an improvement, especially in Resolve, which has much better GPU optimization, but users of the Adobe Creative Cloud suite will find more value in lower-end GeForce cards like the RTX 3080, where you’ll get roughly 90% of the performance for about 47% of the price (based on MSRP of the Founders Edition cards). While not reported here, performance in After Effects and Photoshop was similar, with most tests being CPU limited. It’s also worth noting that the Quadro and GeForce cards both use the 7th generation version of NVENC (the NVIDIA Encoder), so H.264 and HEVC render times will be similar. Do keep in mind that the GeForce cards are limited to 3 simultaneous encoding tasks (for things like streaming and recording), whereas the Quadros have no such limit. We’re aware of bypasses for this limit, but we’ll address that later. The RTX 3090 does use a new generation of NVDEC (the NVIDIA Decoder), so there will be marginal improvements in timeline scrubbing when working with HEVC and H.264 footage.
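The price comparison above is simple arithmetic, and it can be made explicit. The sketch below assumes the Founders Edition MSRPs of $1,499 for the RTX 3090 (quoted in this article) and $699 for the RTX 3080 (an assumption added here, not stated in the article), and it takes the "90% of the performance" figure at face value. The function name is made up for illustration.

```python
# Founders Edition MSRPs in US dollars. The RTX 3090 price is quoted in the
# article; the RTX 3080 price is an assumption added for this example.
MSRP_USD = {"RTX 3090": 1499, "RTX 3080": 699}


def relative_value(perf_fraction, price, reference_price):
    """Performance per dollar relative to the reference card (1.0 = same value)."""
    price_fraction = price / reference_price
    return perf_fraction / price_fraction


price_fraction = MSRP_USD["RTX 3080"] / MSRP_USD["RTX 3090"]
value = relative_value(0.90, MSRP_USD["RTX 3080"], MSRP_USD["RTX 3090"])
print(f"price: {price_fraction:.1%} of the RTX 3090")
print(f"performance per dollar: {value:.2f}x the RTX 3090")
```

Under these assumptions the RTX 3080 costs about 46.6% as much and delivers roughly 1.93 times the performance per dollar in these CPU-limited workloads. Street prices during the shortage would change the result considerably.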

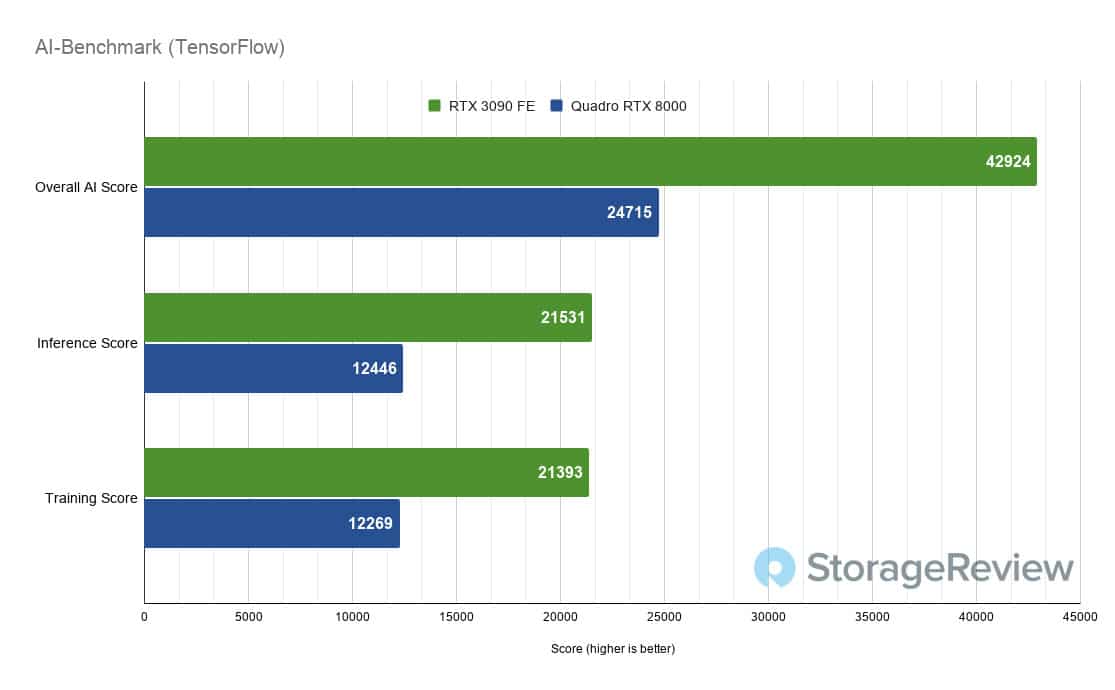

Let’s move on from creative applications and into something new. We’ll be taking a look at the machine learning performance of these cards using a benchmark aptly named AI-Benchmark. It’s an open-source Python library that runs a series of deep learning tests using the TensorFlow machine learning library. You can find out more about it, including which specific testing methodologies it uses, on the project’s website.

Your collective gasps have been heard. It’s the same story here as in most of the creative benchmarks, with the GeForce card roughly doubling the performance of the Quadro. This test was done in Windows 10, but you can expect similar results in your Linux distro of choice. At the time of testing, TensorFlow hadn’t been updated to support the new Ampere cards, but through a bit of hackery, we were able to make it run by mixing and matching components of different CUDA developer kits. We expect that a properly updated version in the future can only improve results.
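When a TensorFlow install is pieced together from mismatched CUDA components like this, a quick sanity check is to confirm that TensorFlow can see the GPU before running any benchmark. The snippet below is a minimal sketch, assuming a TensorFlow 2.x install. The helper name `visible_gpus` is made up for illustration, and this is not the procedure used for the results above.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see, or [] if TensorFlow is missing."""
    try:
        import tensorflow as tf  # imported lazily so the check degrades gracefully
    except ImportError:
        return []
    # list_physical_devices reports devices the runtime detected at startup;
    # an empty list usually means the CUDA libraries failed to load.
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = visible_gpus()
print(gpus if gpus else "No GPU visible to TensorFlow")
```

An empty result on a machine with a working driver usually points to a CUDA or cuDNN library that TensorFlow could not load, and TensorFlow's startup log typically names the missing file.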

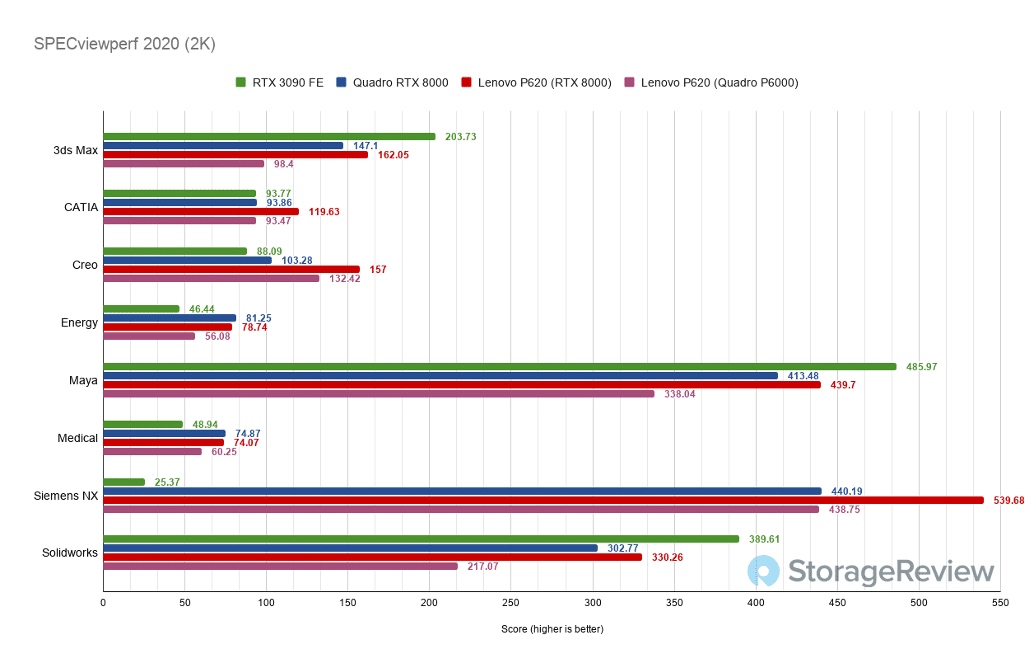

Last, but definitely not least, we’ll look at SPECviewperf 2020, the newest revision of the industry-standard benchmarking utility from the Standard Performance Evaluation Corporation. This benchmark looks at graphics performance in a variety of professional applications. We’ve also included the Lenovo P620 equipped with an older Quadro P6000 for comparison.

This is where things get interesting. The 3D modeling results are about what you’d expect, with the RTX 3090 outperforming the RTX 8000 by a healthy margin, but in applications like CATIA and Creo, and in the Energy and Medical tests, the 3090 actually underperforms by a small but significant margin. It even underperformed against the significantly older Quadro P6000 in our Lenovo P620. But what about Siemens NX? This is the crux of the workstation versus consumer, Quadro versus GeForce, predicament. You’re reading that chart correctly; the 3090 scored a 25.37 in Siemens NX. We ran this test seven times and referenced other benchmarking results on the internet, and this result is absolutely correct. The RTX 3090 had less than 5% of the performance of the Lenovo P620 with the RTX 8000 in this test. Let’s explore this more in the next section.

Workstation versus Consumer GPUs; The Drivers

The GeForce RTX 3090 is a very powerful GPU, there’s no denying that, and the Quadro RTX 8000 is also a powerful GPU, but where they differ is important. The RTX 3090 is first and foremost a gaming video card. It was designed, and is marketed, as the absolute best GPU for gaming and the “world’s first 8K capable” gaming card. The fact that it can outperform the RTX 8000 in a multitude of tasks, with extreme margins in most cases, is a testament to its raw power, essentially brute-forcing its way through these tests. The Quadro RTX 8000 is first and foremost a workstation card, designed to be placed in workstations, crammed into rack-mount servers, linked together over NVLink, virtualized, ridden hard and put away wet (metaphorically, of course), and to survive it all.

So that raises the question: why did the GeForce RTX 3090 underperform the 3-year-old Quadro RTX 8000 in certain tasks, and even underperform the nearly 5-year-old Quadro P6000? Specifically, why does the RTX 8000 see over an order of magnitude more performance in Siemens NX? We’re sure you, savvy reader, already know; it comes down to driver optimizations. NVIDIA is judge, jury, and executioner when it comes to which GPUs get workstation optimizations, and it’s clear that it did not want the RTX 3090 to be able to absolutely wipe the floor with its previous Quadro line in every application. A lot of potential buyers will be very disappointed to find that top-tier workstation performance by day and high-end gaming by night isn’t possible, at least not at the RTX 3090’s $1,499 MSRP. “Well, that’s what the TITAN line is for,” we hear you saying, but there is no TITAN RTX replacement available right now, and the pricing of the 3090 certainly positions it as a TITAN replacement. NVIDIA even boasts of the 3090 as having “TITAN class performance” in the first line of its marketing materials, but that Siemens NX result is nowhere near “TITAN class.” Is the 3090 just an overbuilt, overpriced 3080? Well, not quite. It’s still the best GPU available for 3D modeling and video work, especially with its 24GB of VRAM, which allows creatives to work with large models and 8K footage with ease, but that puts it in a pretty small niche, one previously served by the TITAN-class cards. If you don’t work with CAD applications like Siemens NX, Creo, or CATIA, the RTX 3090 is still appealing, but it’s an absolute non-starter if those applications are critical to your workflow.

There are other considerations, too. Both the Quadro and the GeForce card support multi-GPU setups over NVLink (the 3090 is the only 3000-series card with this capability), but only the Quadro can be virtualized with SR-IOV. If your workload involves distributing one GPU, or two NVLinked GPUs, to multiple VMs, the Quadro is still your only choice. While there are ways around this, the point still stands that this feature is artificially limited by NVIDIA’s GeForce driver. That same driver, by the way, does offer certifications for some creative applications like the Adobe suite and Autodesk, as well as WHQL (Windows Hardware Quality Labs) certification in its Studio variant, which muddies the point further. While we anticipate a true Ampere-based TITAN replacement to come in the future from NVIDIA, the artificial limitations placed on the GeForce RTX 3090, including the limit on concurrent encodes with NVENC, feel out of touch with what professional users are looking for right now.

While we were writing this article, NVIDIA also announced its new line of GPUs built specifically for cryptocurrency mining and its intention to halve the mining performance of the upcoming RTX 3060. This decrease in crypto mining performance is completely artificial and done entirely through drivers, further driving home the point that we are simply at the mercy of whatever NVIDIA deems our cards worthy of doing. Will this “unhackable” limit on the 3060 be exploited? Without a doubt, much the way the NVENC limit has been beaten. But for now, NVIDIA holds all the cards, and for a lot of professional workflows that are highly optimized for CUDA, RTX, TensorFlow, and other NVIDIA-specific computing platforms, there’s simply no other choice but NVIDIA.

Conclusion

Let’s break it down a bit. Should you keep your stock alerts on for the RTX 3090? Brave the lines outside your local Micro Center for the chance to get one? As with most things, the answer is, “it depends.”

- Do you work exclusively with large 3D models in applications like Blender and Cinema 4D or regularly edit large 6K and 8K video files? Then yes; the RTX 3090 is the best creative professional GPU on the market for CUDA and RTX accelerated workflows.

- Do you have enough disposable income for an absolutely no-compromises gaming rig? Also yes; the RTX 3090 is the best gaming GPU money can buy, particularly in games with ray-tracing and DLSS 2.0 support, a list that is growing every day.

- Do you work primarily in CAD, particularly in scientific fields with applications like Creo and CATIA? Then no; the RTX 3090 doesn’t provide the necessary driver optimizations that come with the Quadro line of cards, and no amount of brute-forced raw power can overcome that. We’re working to get our hands on the new RTX A6000, the RTX 8000’s actual replacement, to help inform folks like you.

- Do you work in the machine learning and AI field? This one’s a mixed bag. It seems like a “yes,” as the performance in TensorFlow is excellent, but there are unfortunately not enough standardized tests or data points to draw a definitive conclusion versus a newer Quadro card. Also, as of this writing, TensorFlow hasn’t been updated to properly support Ampere cards. Our current answer is a soft yes. We may look at MLCommons and MLPerf in the future as a follow-up, specifically for AI and machine learning use cases.

- Do you currently own a TITAN RTX and are looking to upgrade? We recommend you wait for an official announcement from NVIDIA on the Ampere-based TITAN, which is rumored to be coming eventually. If your use cases make good use of the TITAN, the RTX 3090 may end up a downgrade, depending on your workflow.

- Do you do a lot of GPU virtualization? This is a solid no; the RTX 3090 does not natively support SR-IOV.

With all that considered, we hope this can inform your decision to upgrade or not, and whether you go GeForce or Quadro. If the $1,499 MSRP of the RTX 3090 is too rich for your blood and you don’t need more than 10GB of VRAM, the RTX 3080 is a great option as well. While we didn’t explicitly test it here, our RTX 3090 results were generally 20% to 30% better than RTX 3080 results published around the web, so you can expect the 3080 to outperform (and underperform) the Quadro RTX 8000 in the same applications. It may also be worth waiting for the rumored RTX 3080 Ti, whenever that may be coming, for a middle ground. For now, we will keep our eyes peeled for the Ampere-based TITAN to truly fill the gap between a full workstation card and a full gaming card. We will also be keeping an eye on NVIDIA’s driver pipeline to see if it addresses any of the card’s work-related shortcomings in future releases, but we have our doubts. We would love to hear your thoughts on this, and also whether there were any benchmarks or use-cases we missed that you’d like to see, especially as they pertain to AI and machine learning. Get in touch with us on our social channels and stay tuned for more creative professional and workstation reviews.

Read More – NVIDIA RTX A6000 Review
