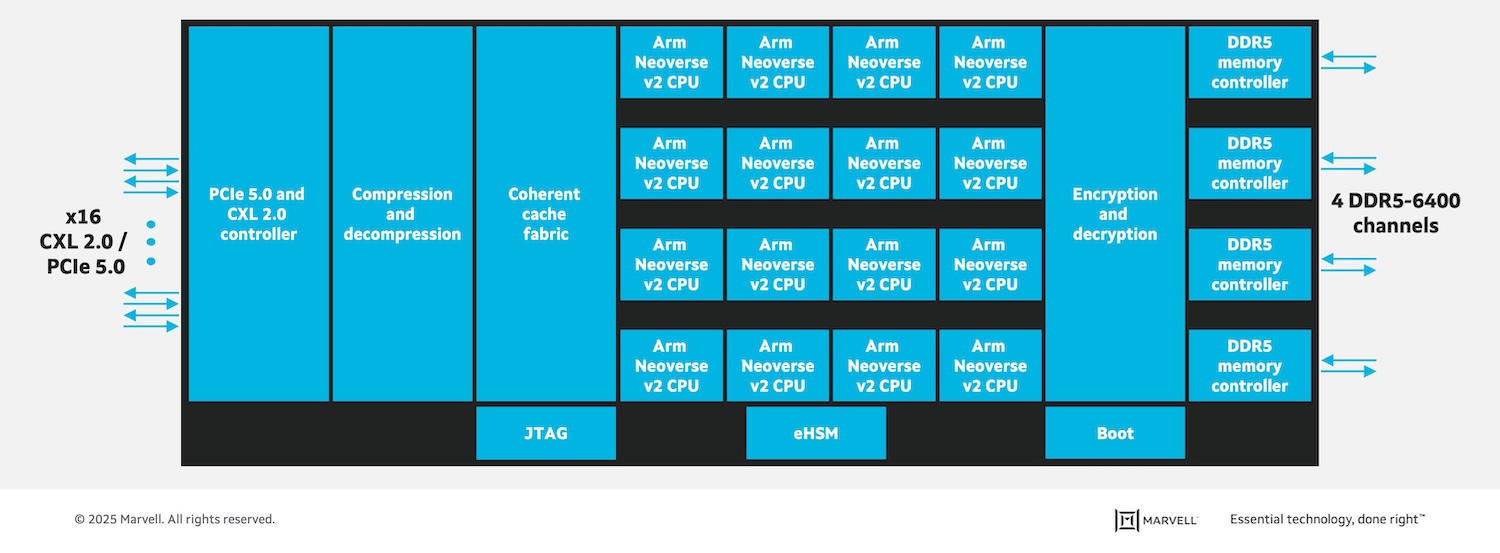

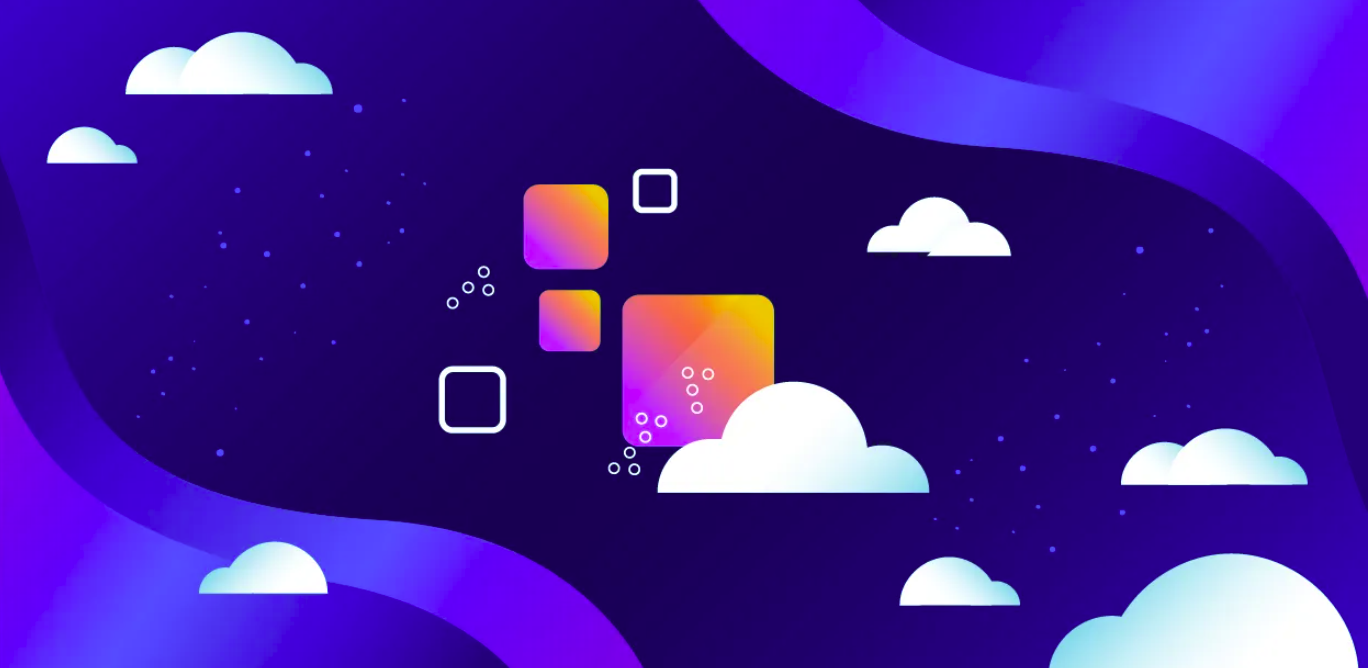

Marvell Structera completes interoperability testing with DDR4 and DDR5 solutions from Micron, Samsung, and SK hynix.

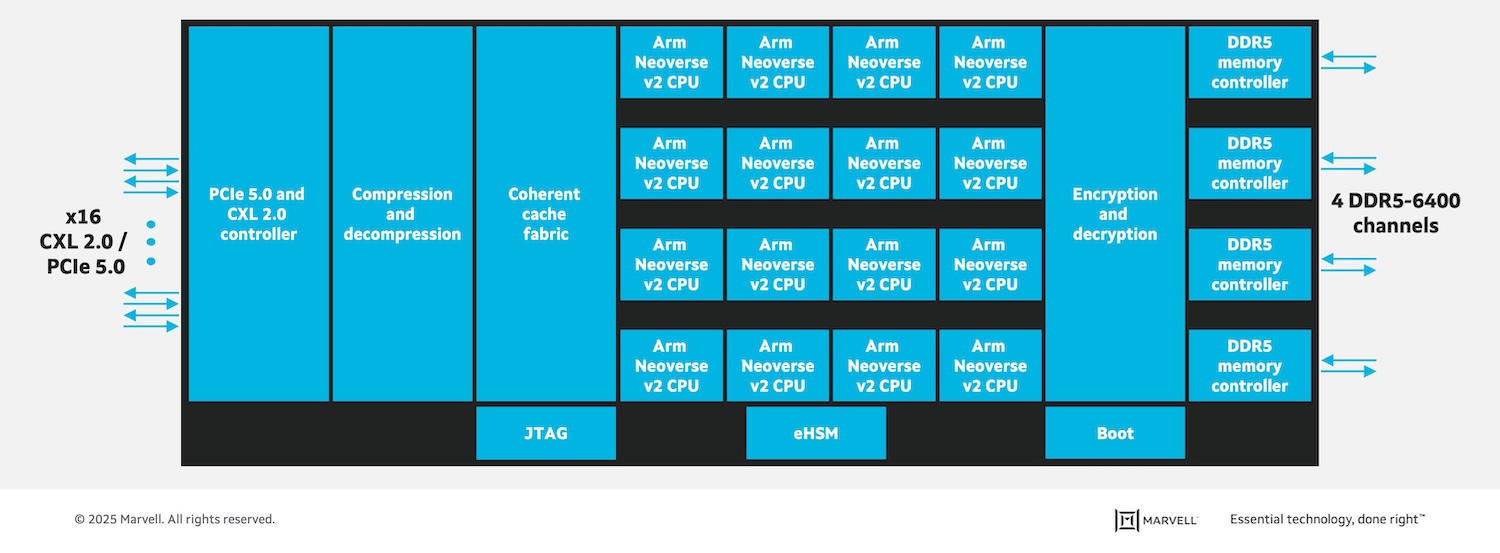

IBM and AMD partner on quantum-centric supercomputing, merging quantum with HPC and AI to enable hybrid workflows and accelerate discovery.

Broadcom introduces VMware Tanzu Data Intelligence at VMware Explore 2025.

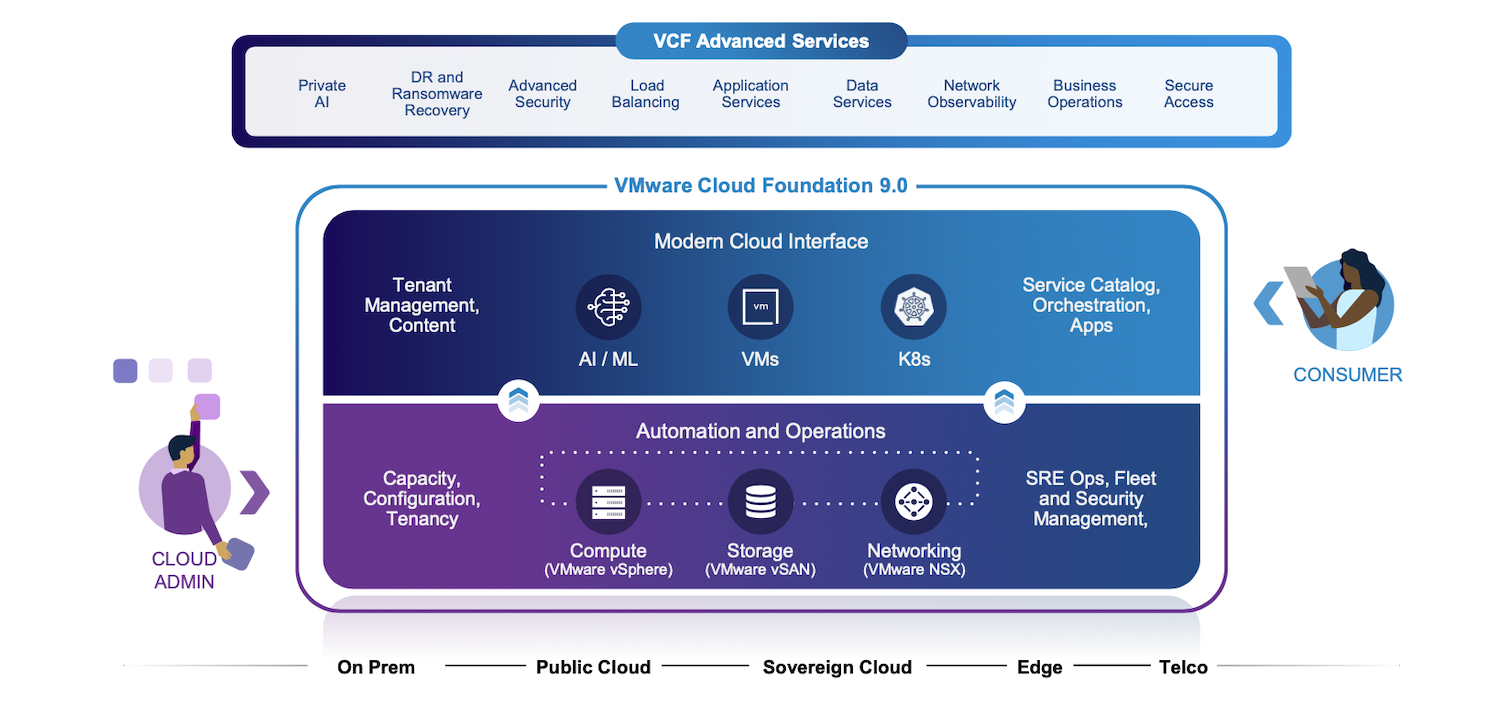

VMware Private AI Services is now included as a standard feature in VCF 9.0.

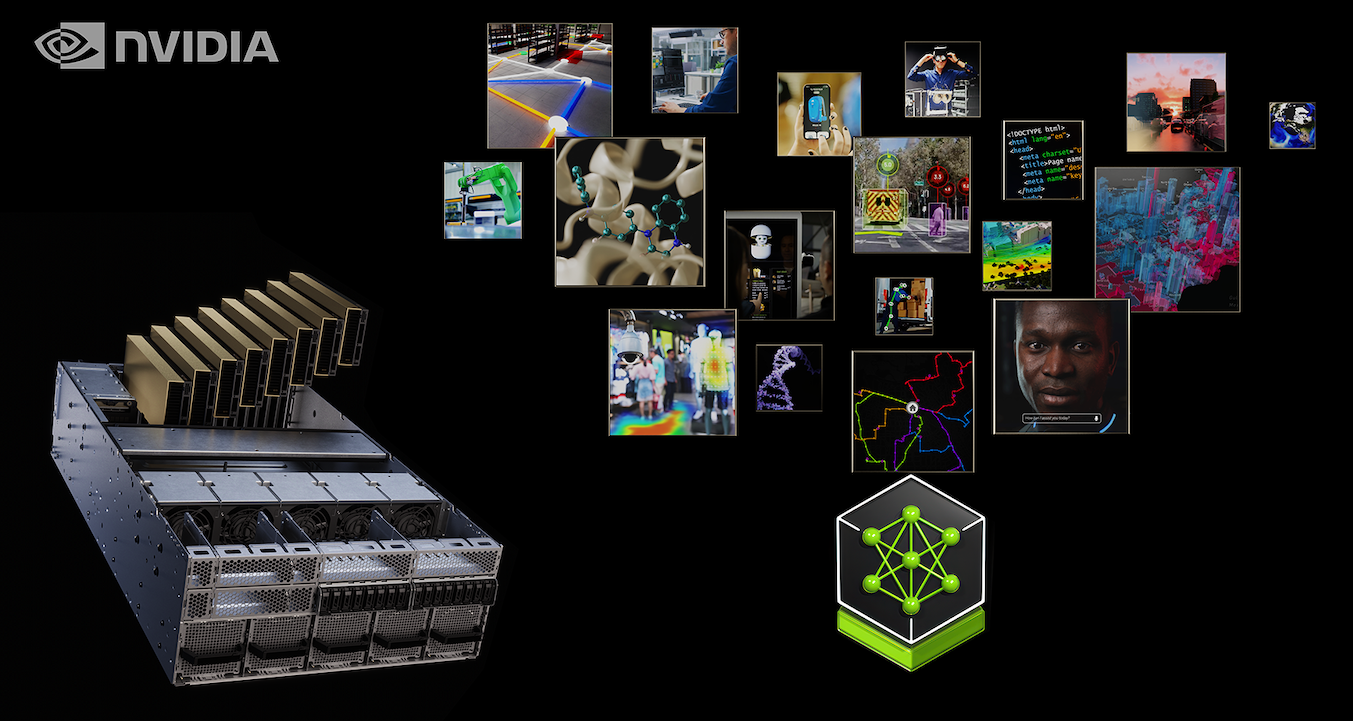

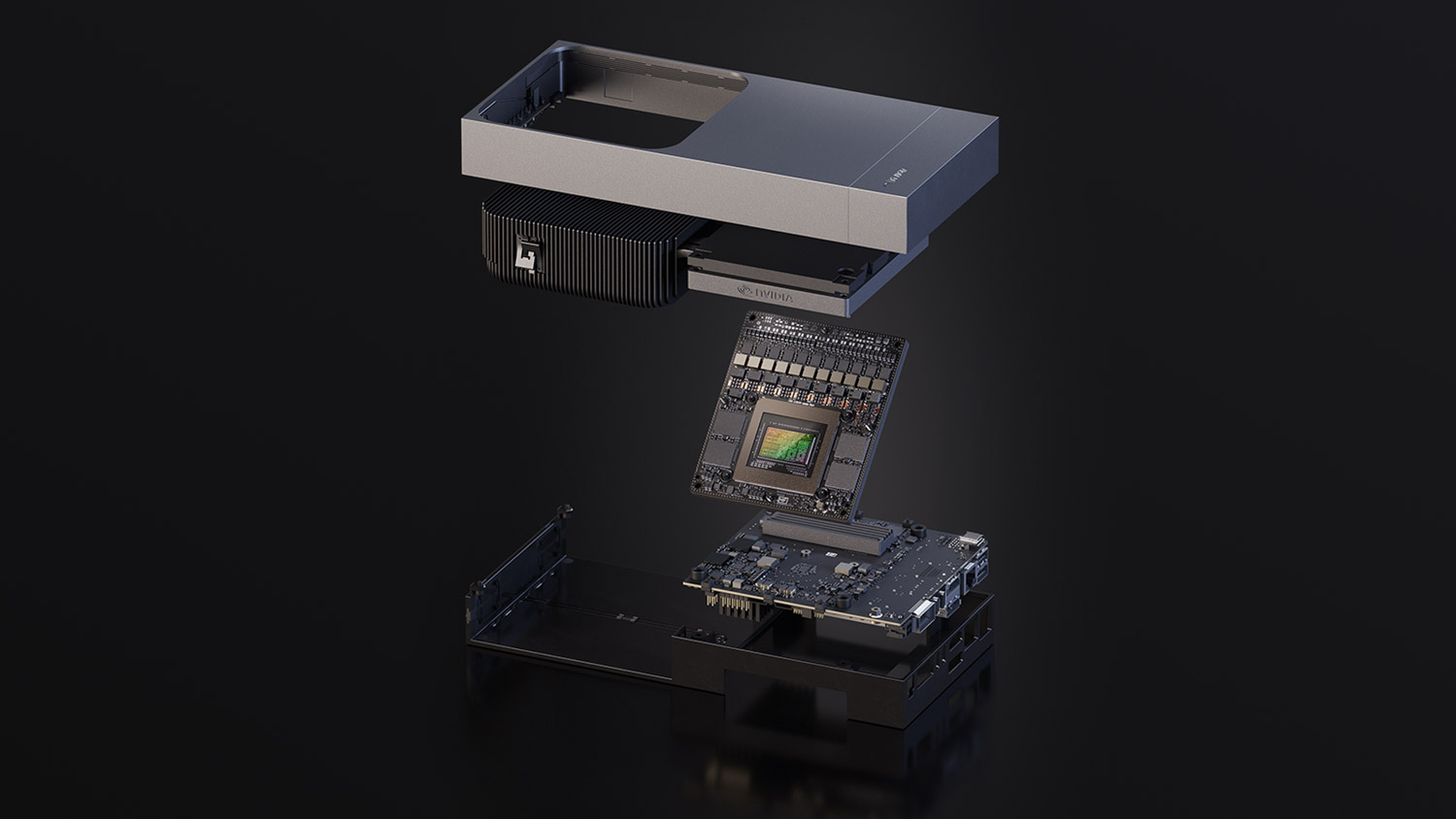

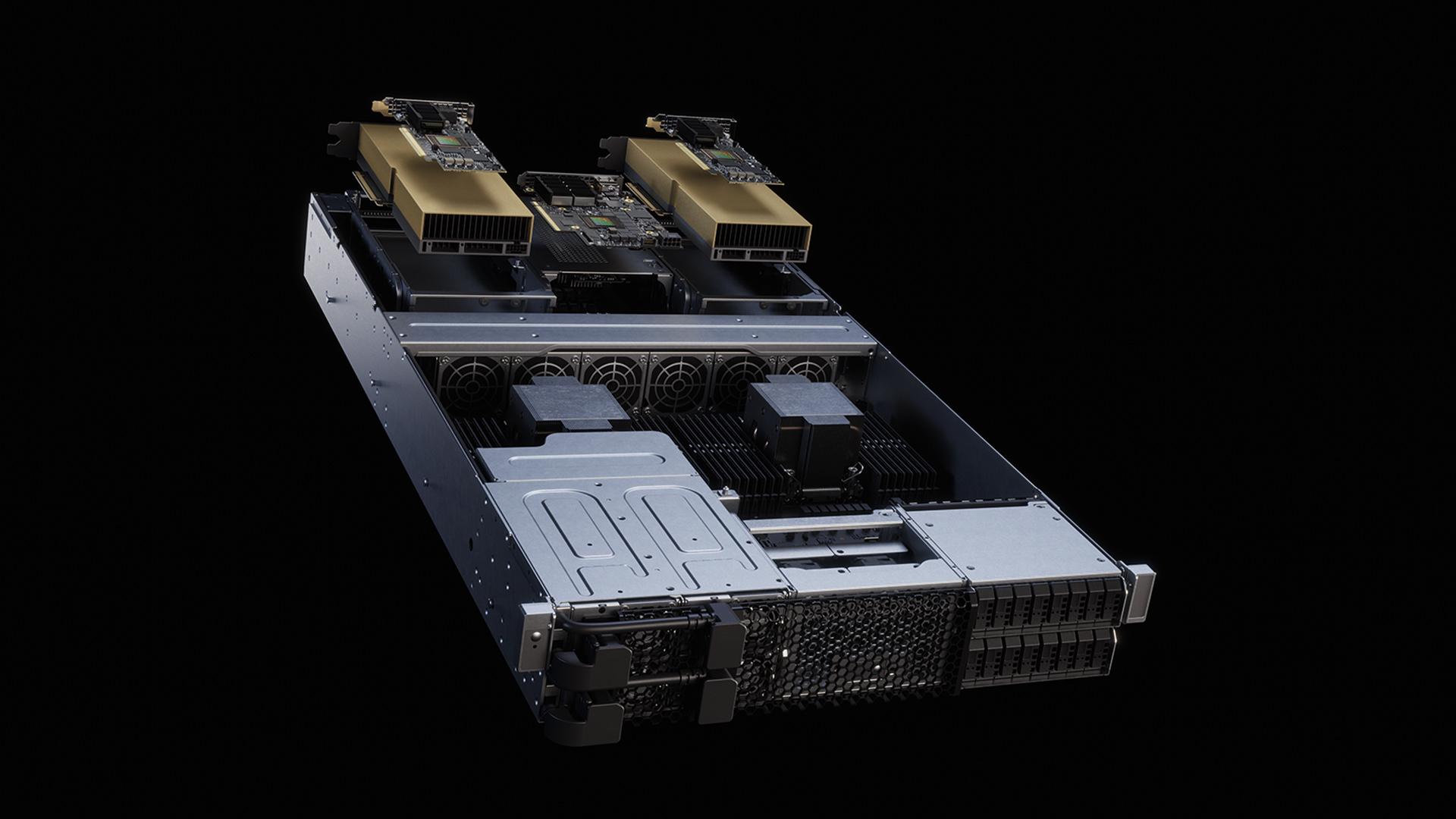

NVIDIA RTX PRO Servers are rapidly making their way into enterprise data centers.

NVIDIA declares that the data center is now the computer, delivering that message at the Hot Chips conference at Stanford.

The EUC World Amplify conference wraps up with a focus on the future and an expansion to Melbourne, Australia!

NVIDIA launches the RTX PRO 6000 Blackwell for servers, along with new workstation GPUs, boosting AI, simulation, and design performance.

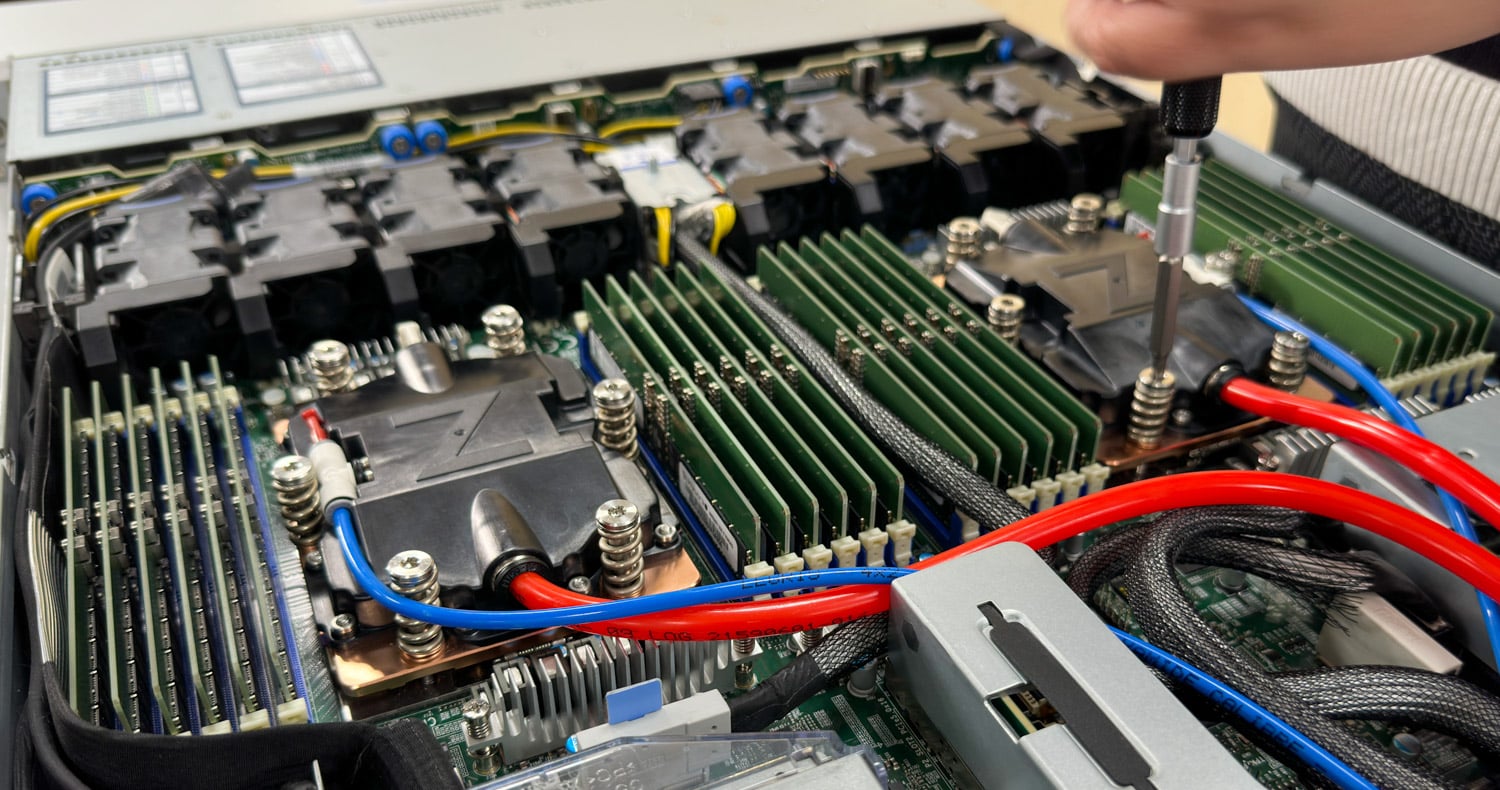

ZutaCore debuts a modular, waterless two-phase cooling sidecar for AI-ready data centers without facility water loops.