Lexar is known for its memory cards, but it also makes desktop memory kits, like the Lexar ARES RGB DDR5-6000 kit we’re reviewing here. This highly clocked RAM has Intel XMP 3.0 and AMD EXPO compatibility and RGB heatsinks.

New block and file services were the highlight of HPE GreenLake Storage Day. While HPE emphasizes the operational and management benefits of making these services available in GreenLake, we’re interested in the new universal hardware platform that sits behind the scenes – the HPE Alletra MP storage server.

In recent months, large language models have been the subject of extensive research and development, with state-of-the-art models like GPT-4, Meta LLaMA, and Alpaca pushing the boundaries of natural language processing and the hardware required to run them. Running inference on these models can be computationally challenging, requiring powerful hardware to deliver real-time results.

Pi represents the ratio of a circle’s circumference to its diameter, and its decimal expansion never ends or settles into a repeating pattern. Calculating ever more digits of Pi isn’t just a thrilling quest for mathematicians; it’s also a way to put computing power and storage capacity through the ultimate endurance test. Up to now, Google’s

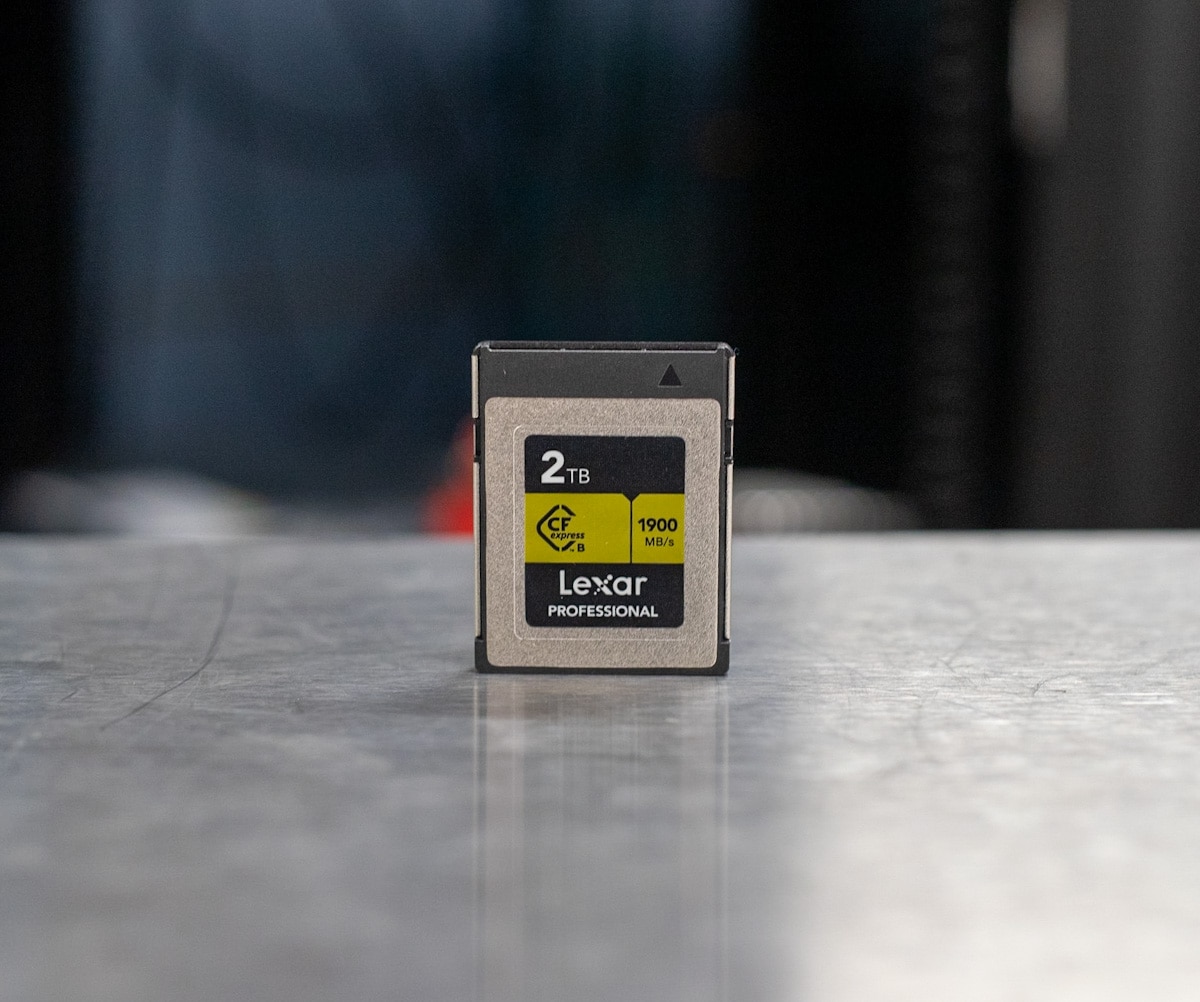

Rising demand for faster data transfer and greater storage capacity is being driven by advancements in image resolutions and color depth in the latest professional cameras. To keep pace with these evolving needs, Lexar created the Professional CFexpress Type B Gold card a few years ago, a high-performance flash media solution tailored for seamless integration

Portable power stations have become increasingly popular in recent years, as they offer a reliable and convenient source of energy for outdoor activities, power outages, and emergencies. In this review, we look at the BougeRV FORT 1000 Portable Power Station, which features a 1120Wh LiFePO4 battery and a 1200W AC power inverter.

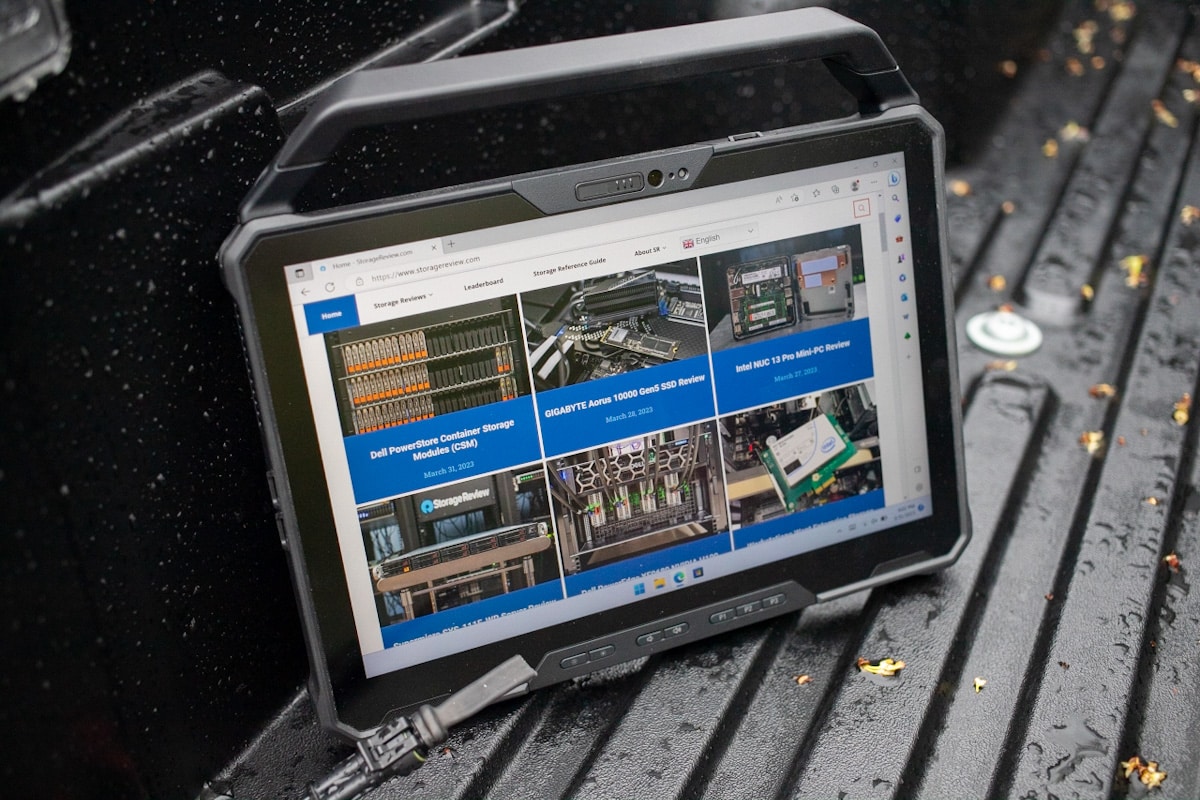

The Dell Latitude 7230 Rugged Extreme Tablet brings high-performance computing to just about anywhere, thanks to its durable yet lightweight design.

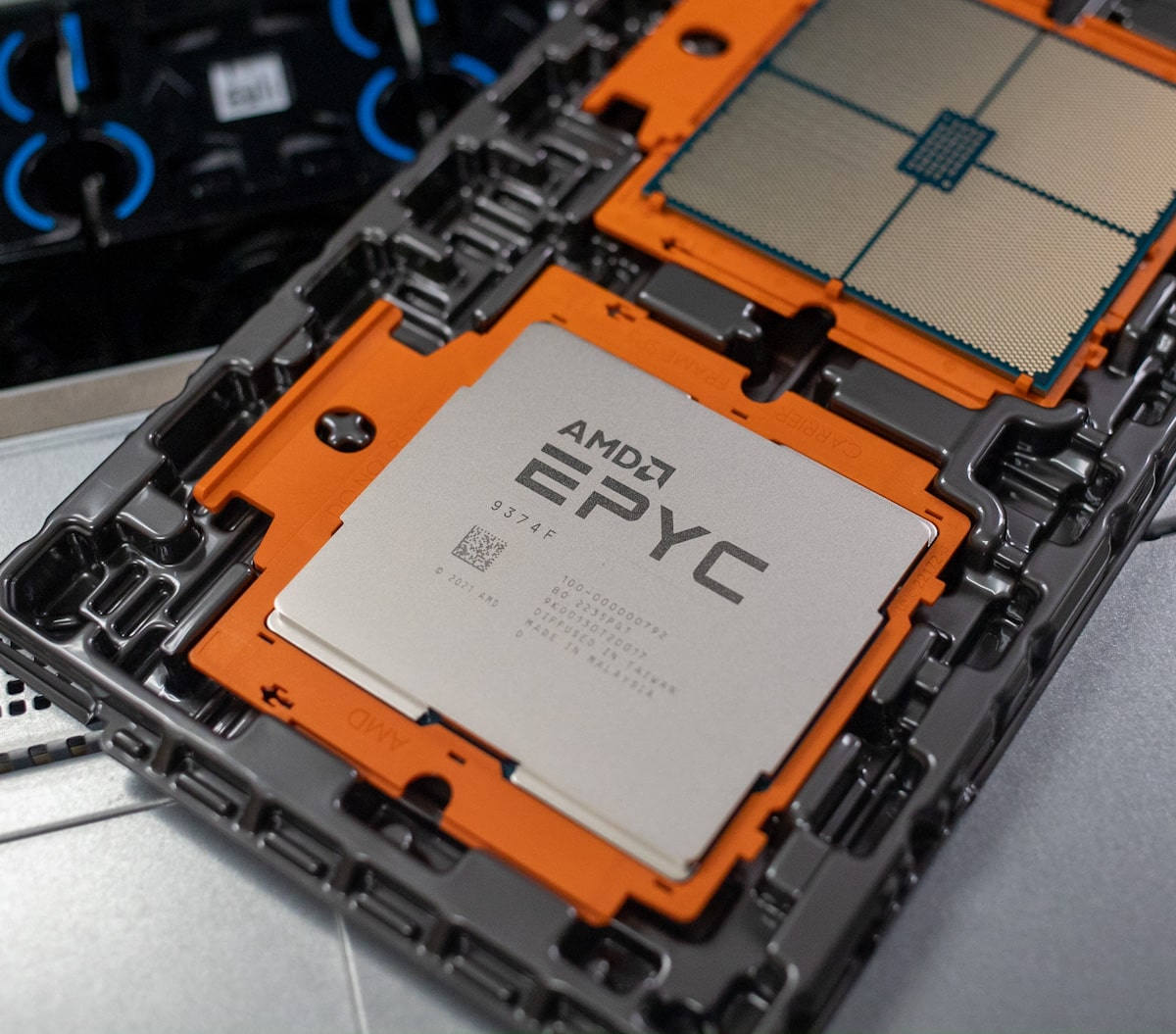

The Dell PowerEdge R660 is a 1U rackmount server designed to support the latest and greatest in server technology, including E3.S Gen5 storage, DDR5 memory, and configurations with liquid CPU cooling. It features support for two processors (dual sockets), 32 RDIMMs, and up to three graphics cards.

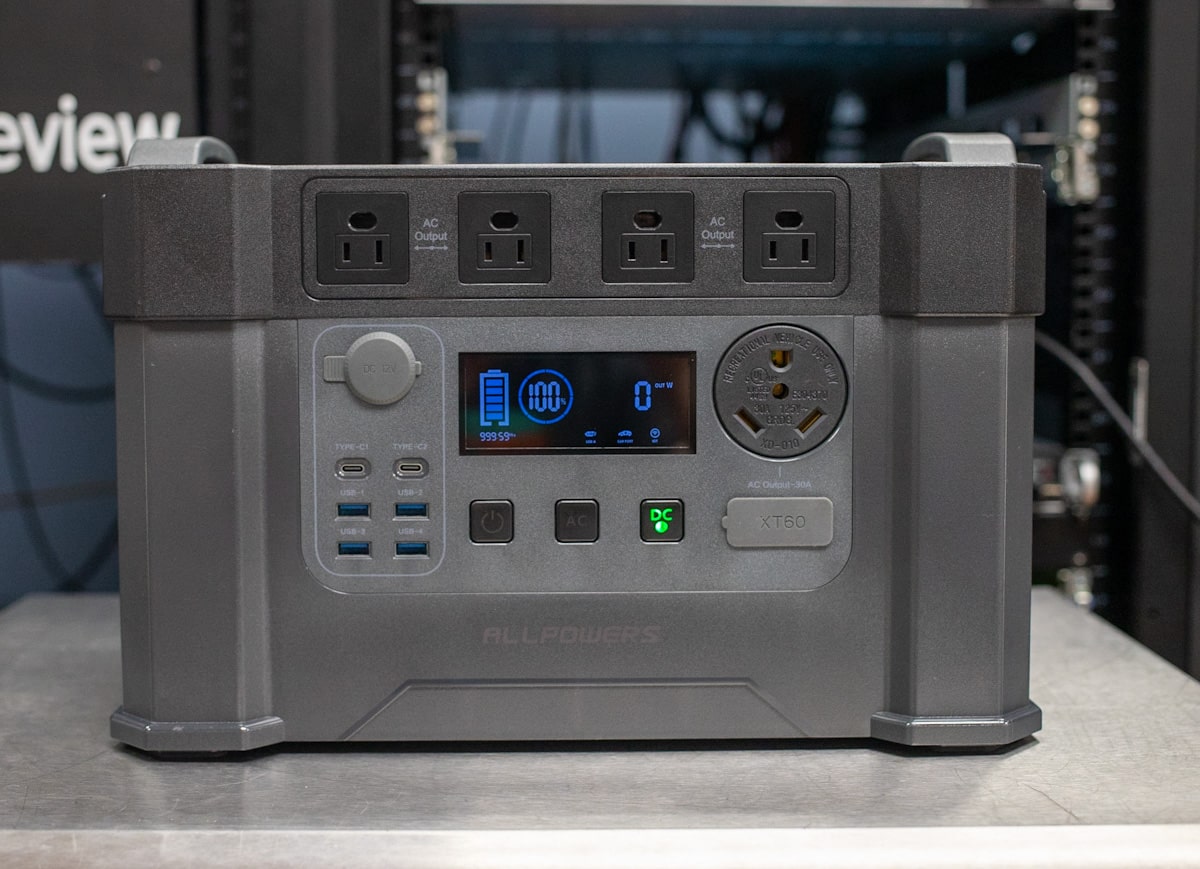

The ALLPOWERS S2000 Pro Portable Power Station has a 2400W inverter matched with a 1500Wh battery. Aimed at larger deployment use cases, the Pro model specifically includes a 30A power plug for high-power-draw devices.

As the digital landscape has continued to evolve, storage solutions that can accommodate a wide range of enterprise workloads and emerging application development platforms have become increasingly important. Notably, Dell Technologies PowerStore is a primary storage array designed to meet the needs of both traditional enterprise workloads and modern, containerized applications running on Kubernetes (K8s).