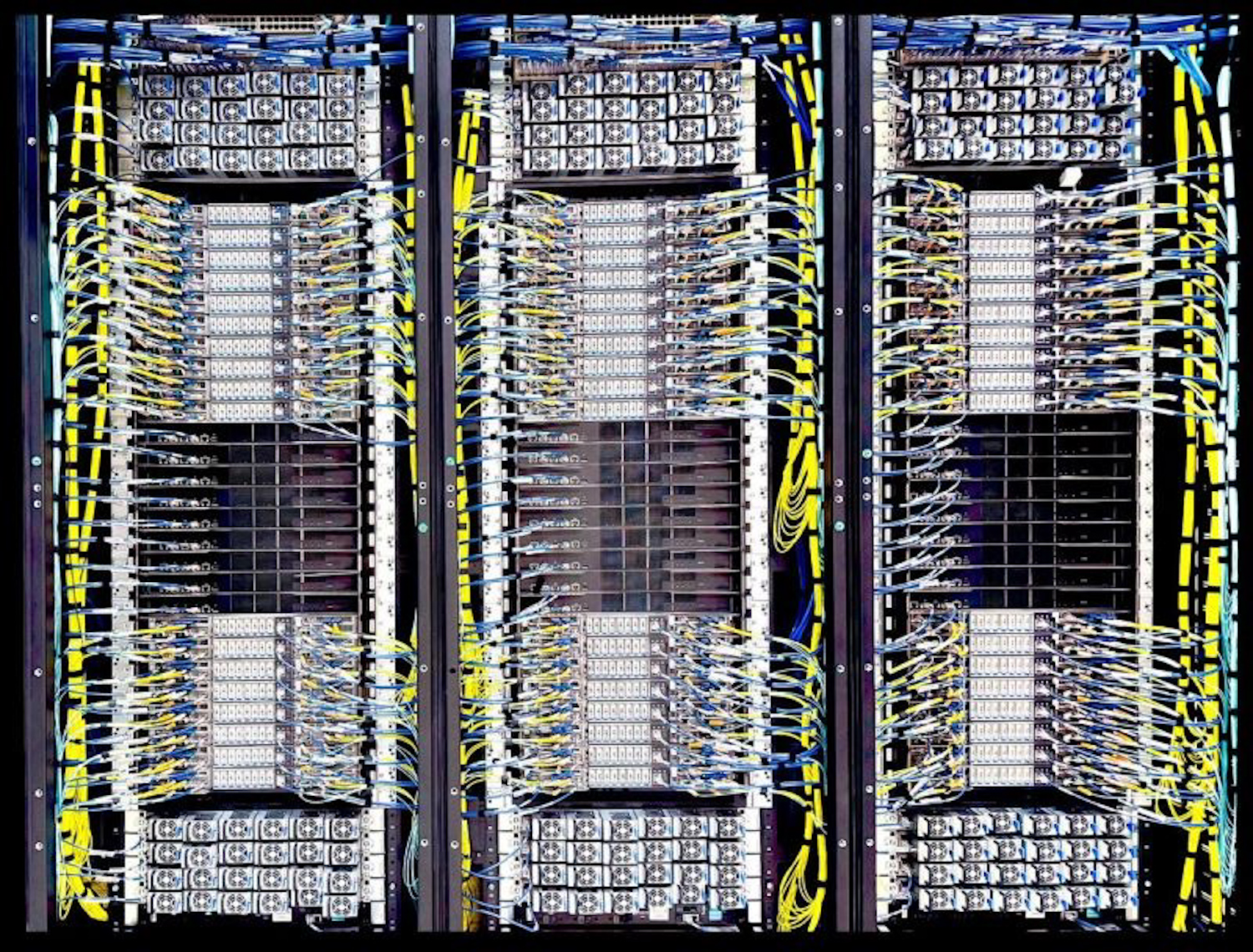

NVIDIA declares the data center is now the computer, delivering that message during Stanford’s Hot Chips conference.

The L40S is especially valuable for synthetic data generation, multimodal AI development, and Omniverse applications that require both computing and graphics performance.

NVIDIA launches RTX PRO 6000 Blackwell for servers and new workstation GPUs, boosting AI, simulation, and design performance.

Following a tour of the facilities, Valvoline’s VP of R&D sat down with Brian to go deep on immersion cooling.

NVIDIA Run:ai boosts GPU efficiency for AI workloads, with support from partners like Dell, HPE, and Cisco for enterprise-scale deployments.

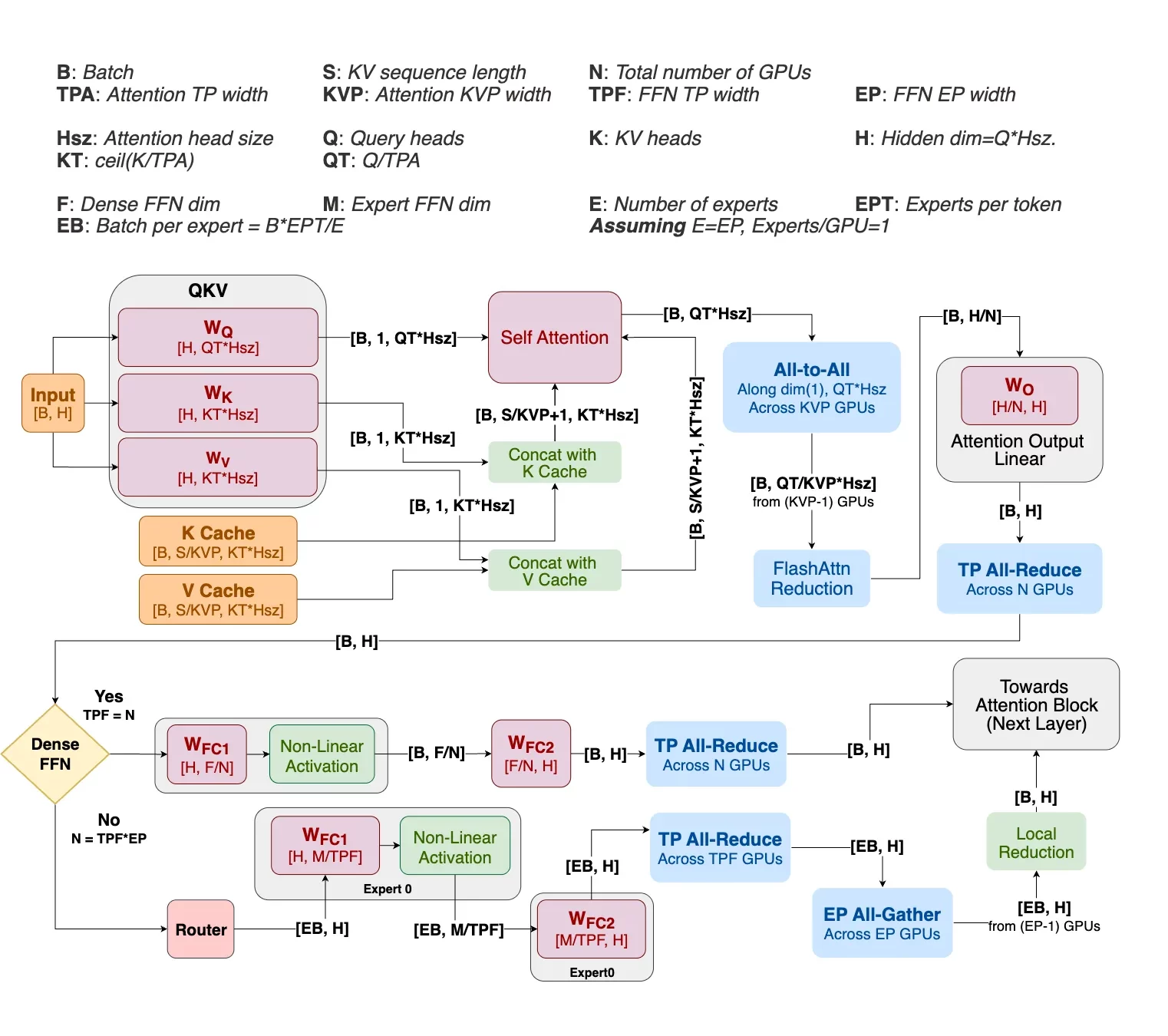

NVIDIA Helix Parallelism boosts real-time LLM performance on Blackwell GPUs, scaling multi-million-token AI with 32x efficiency gains.

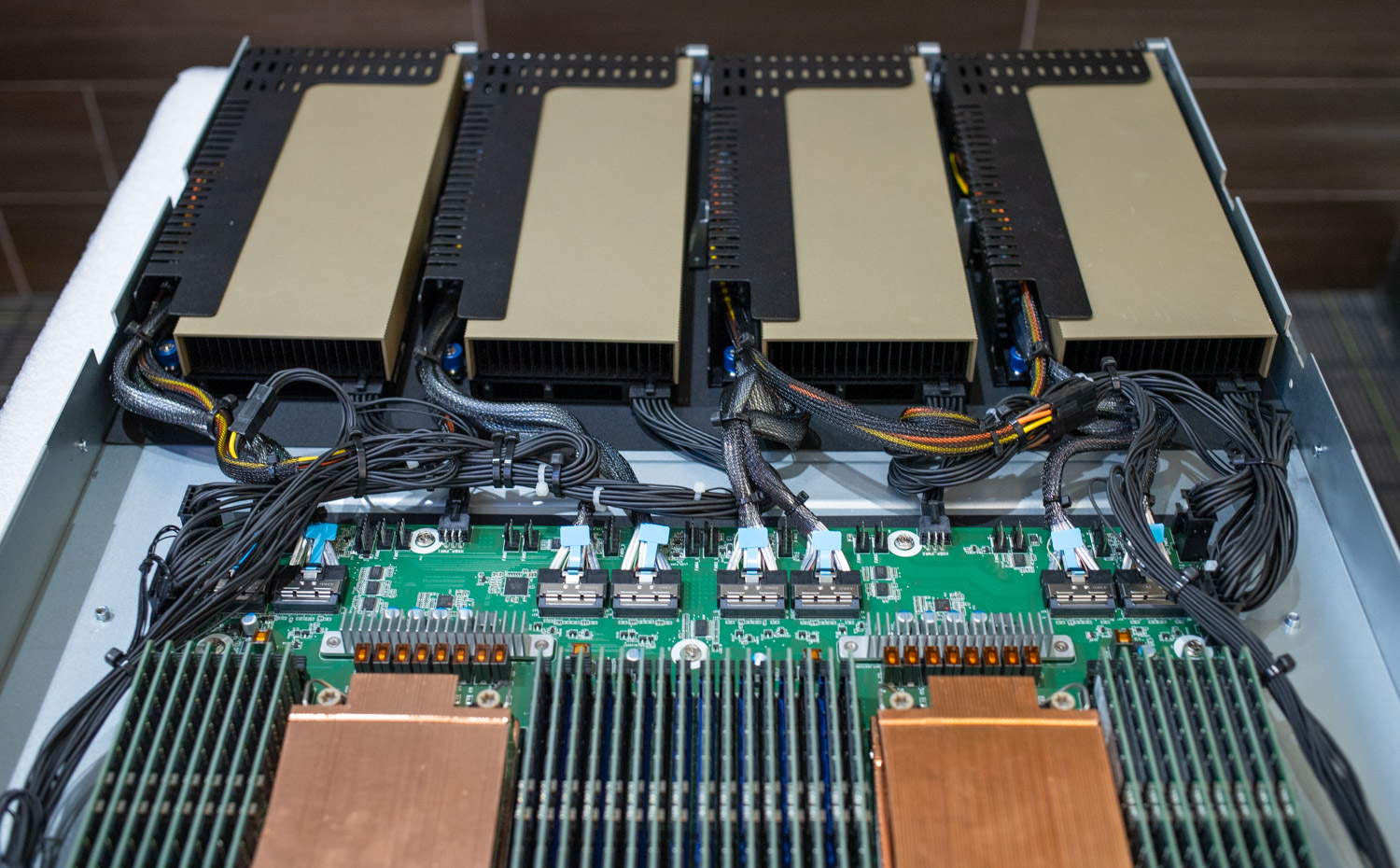

Hypertec TRIDENT iGW610R-G6, a 1U server, supports up to four full-height GPUs in a single-phase immersion environment. That’s up to 200 GPUs in a 50U tank.

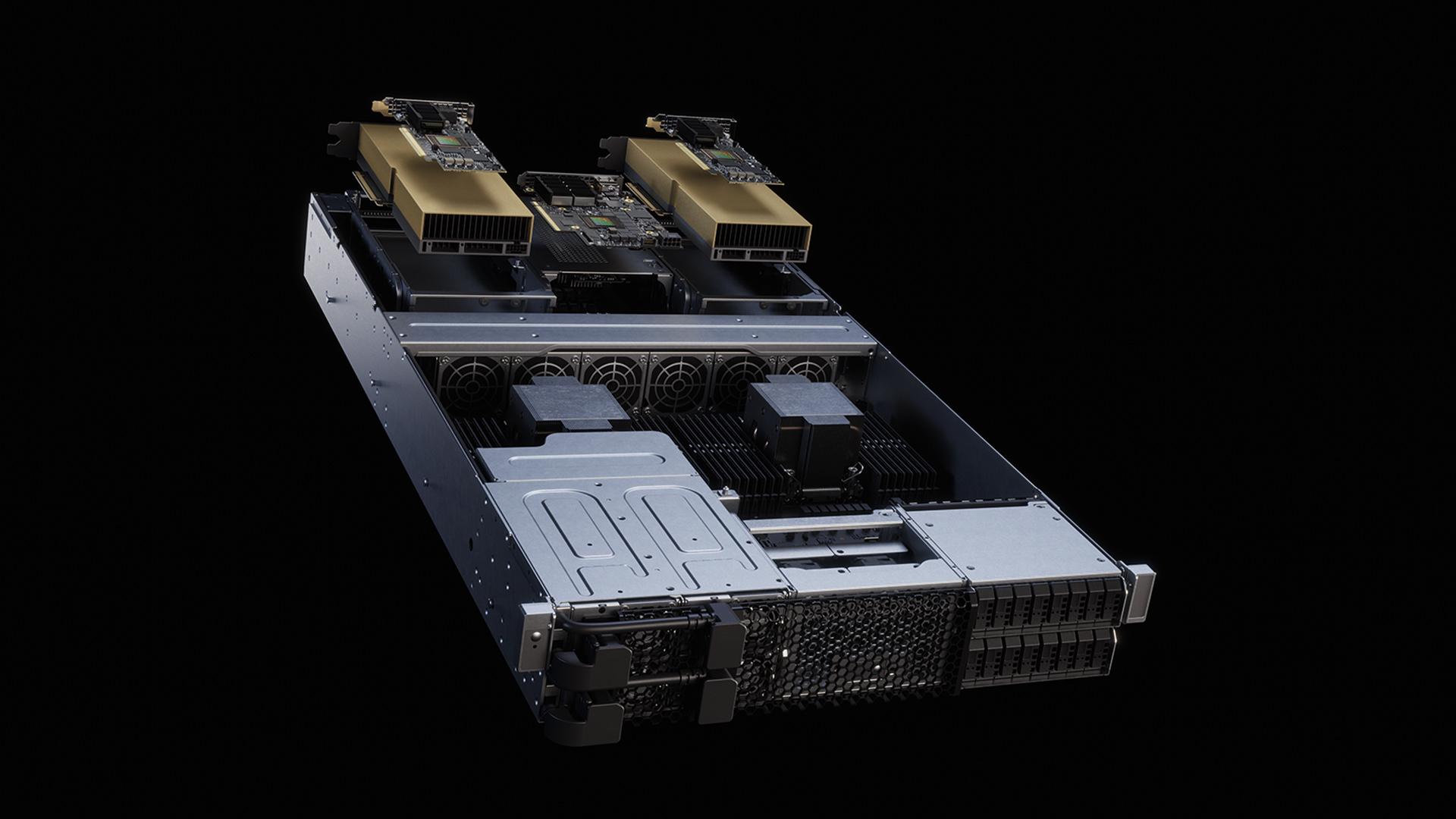

Dell and CoreWeave deliver the first NVIDIA GB300 NVL72 system, setting a new benchmark in AI performance and scalability for enterprise workloads.

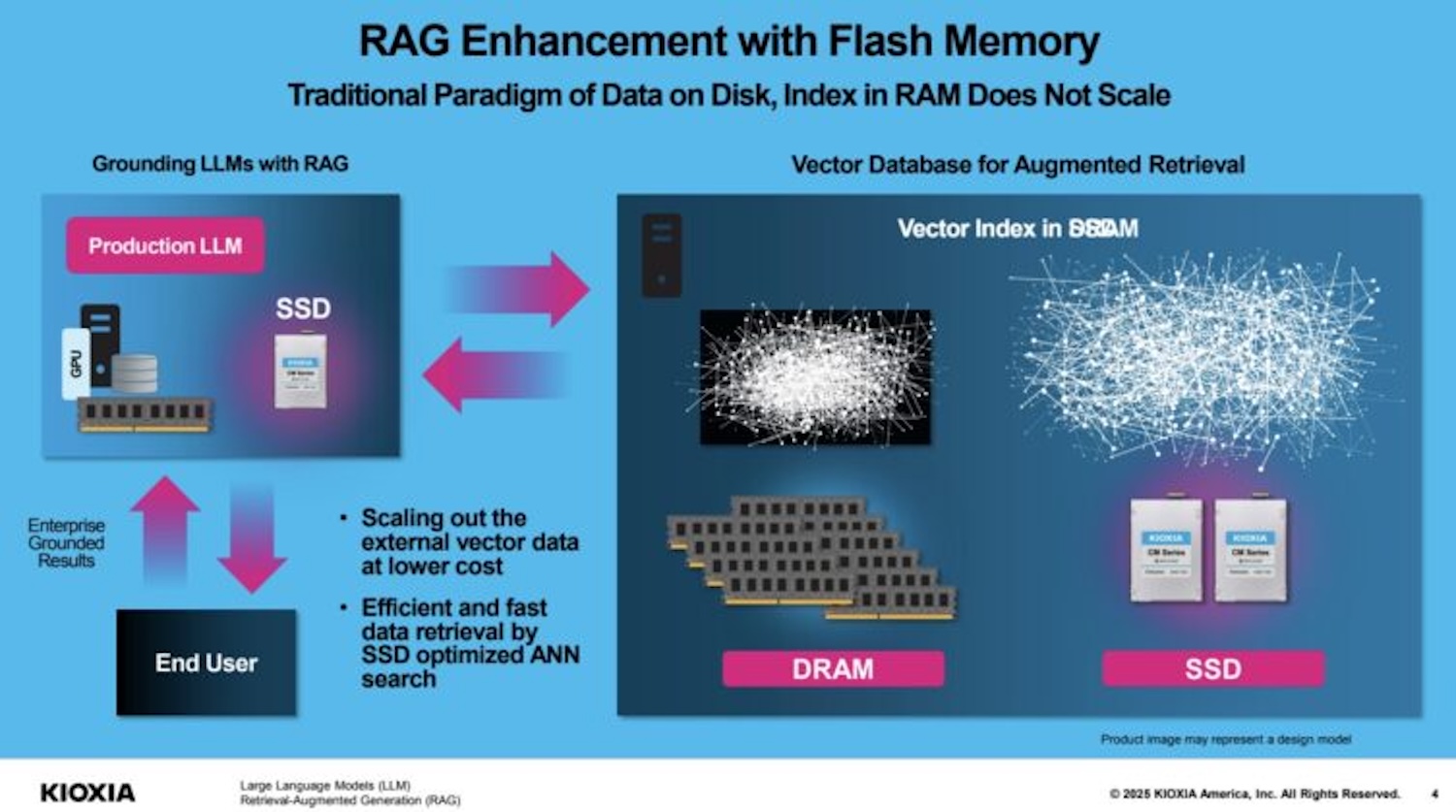

KIOXIA delivers a significant update to its open-source AiSAQ software, featuring advanced usability and flexibility in AI database searches within RAG systems.

HPE GreenLake Intelligence uses a new agentic AI framework that unifies and automates operations across the entire IT stack.