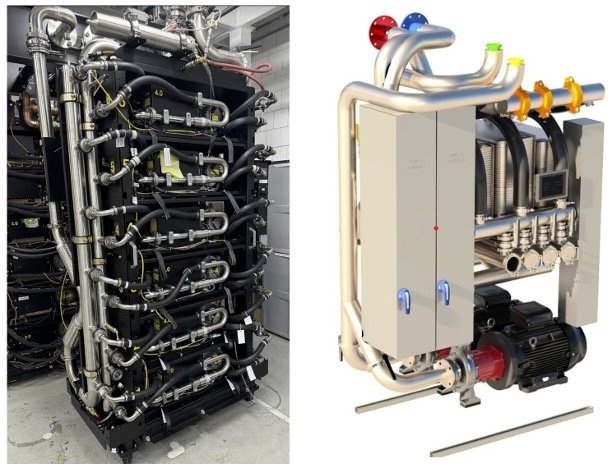

Supermicro introduces DCBBS and DLC-2, a modular solution for building scalable, liquid-cooled AI data centers with faster time-to-deployment.

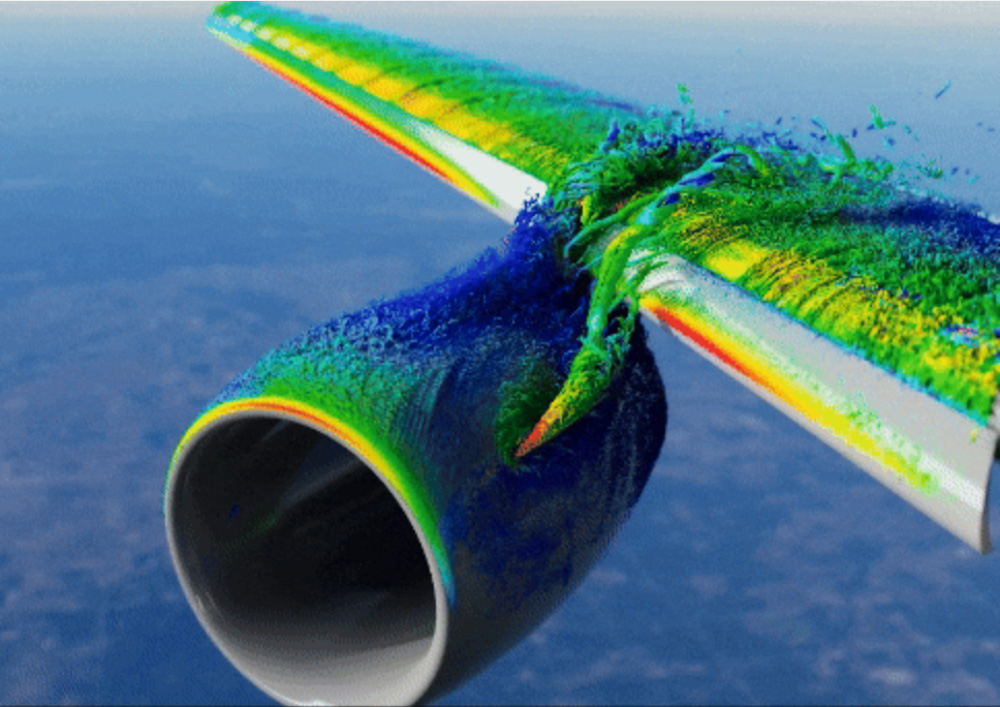

Cadence and NVIDIA introduce Millennium M2000, an AI-optimized supercomputer for advanced engineering and life sciences simulations.

Google outlines new AI data center infrastructure with ±400 VDC power and liquid cooling to handle 1 MW racks and rising thermal loads.

Google unveils the Ironwood TPU, its most powerful AI accelerator yet, delivering massive improvements in inference performance and efficiency.

UALink Consortium ratifies Ultra Accelerator Link 200G 1.0, an open standard to meet the needs of growing AI workloads.

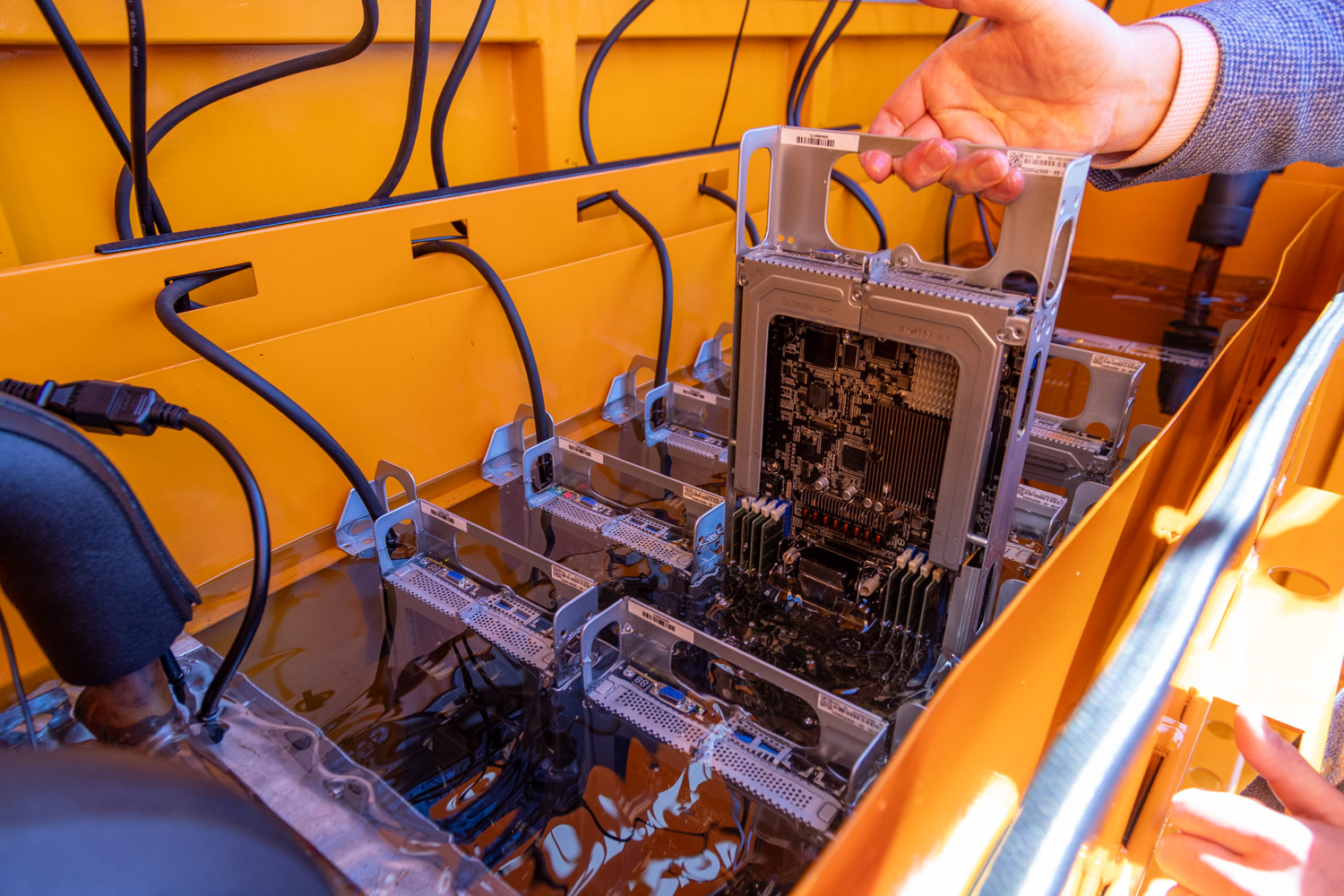

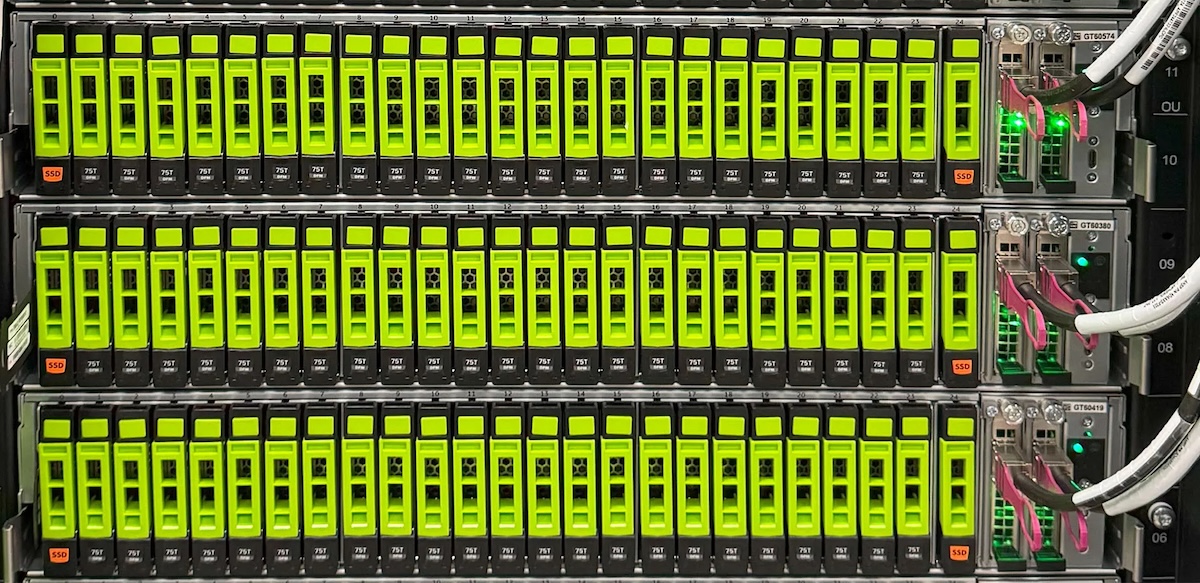

DUG Nomad mobile data centers deliver immersion-cooled AI and HPC capabilities at the edge with Hypertec servers and Solidigm SSDs.

NVIDIA and Google Cloud collaborate to bring agentic AI to enterprises using Google Gemini AI models on Blackwell HGX and DGX platforms.

IBM integrates two of Meta’s latest Llama 4 models, Scout and Maverick, into its watsonx.ai platform.

IBM’s z17 mainframe has the processing power to deliver 50% more AI inference operations daily.

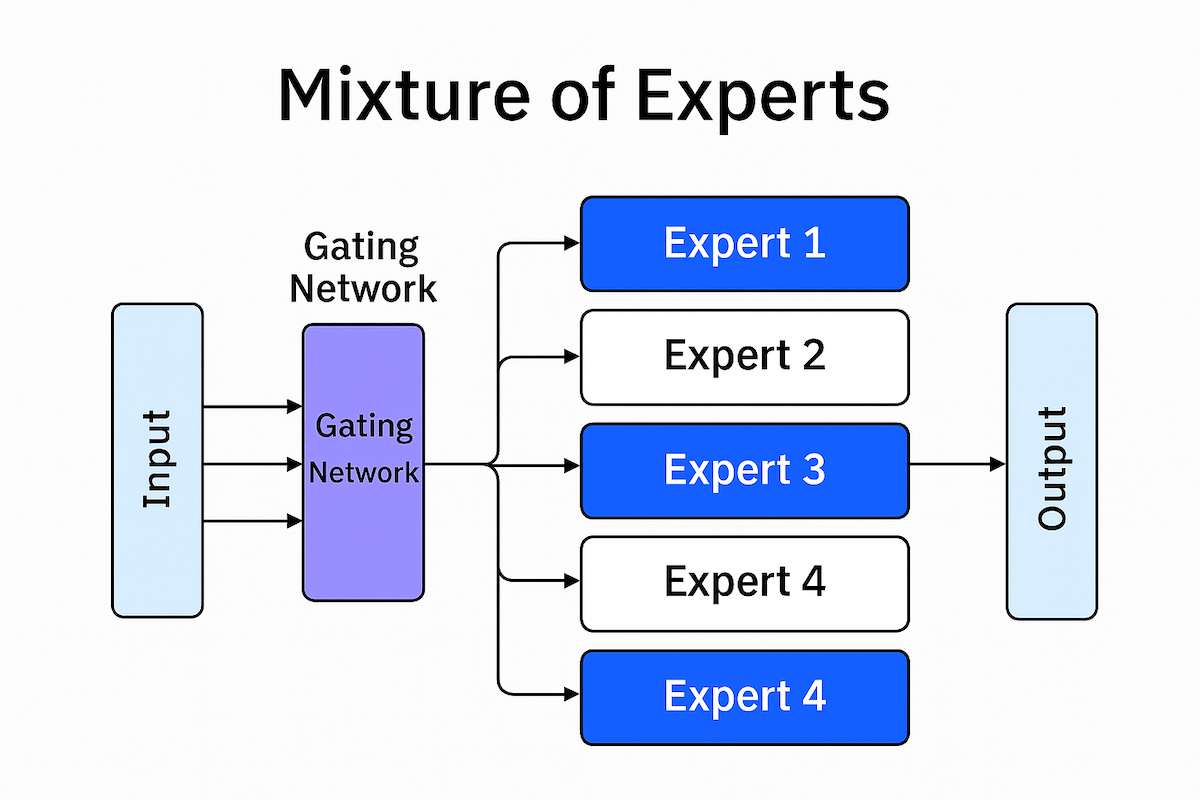

Meta unveils Llama 4, a powerful MoE-based AI model family offering improved efficiency, scalability, and multimodal performance.